/

+ - In this demo we would look in https://chtc.github.io/web-preview/preview-helloworld/

+

+**You can continue to push commits to this branch and have them populate on the preview at this point!**

+

+- When you are satisfied with these changes you can create a PR to merge into master

+- Delete the preview branch and Github will take care of the garbage collection!

+

+## Testing Changes Locally

+

+### Quickstart (Unix Only)

+

+1. Install Docker if you don't already have it on your computer.

+2. Open a terminal and `cd` to your local copy of the `chtc-website-source` repository

+3. Run the `./edit.sh` script.

+4. The website should appear at [http://localhost:8080](http://localhost:8080). Note that this system is missing the secret sauce of our setup that converts

+the pages to an `.shtml` file ending, so links won't work but just typing in the name of a page into the address bar (with no

+extension) will.

+

+### Run via Ruby

+

+```shell

+bundle install

+bundle exec jekyll serve --watch -p

+```

+

+### Run Docker Manually

+

+At the website root:

+

+```

+docker run -it -p 8001:8000 -v $PWD:/app -w /app ruby:2.7 /bin/bash

+```

+

+This will utilize the latest Jekyll version and map port `8000` to your host. Within the container, a small HTTP server can be started with the following command:

+

+```

+bundle install

+bundle exec jekyll serve --watch --config _config.yml -H 0.0.0.0 -P 8000

+```

+

+## Formatting

+

+### Markdown Reference and Style

+

+This is a useful reference for most common markdown features: https://daringfireball.net/projects/markdown/

+

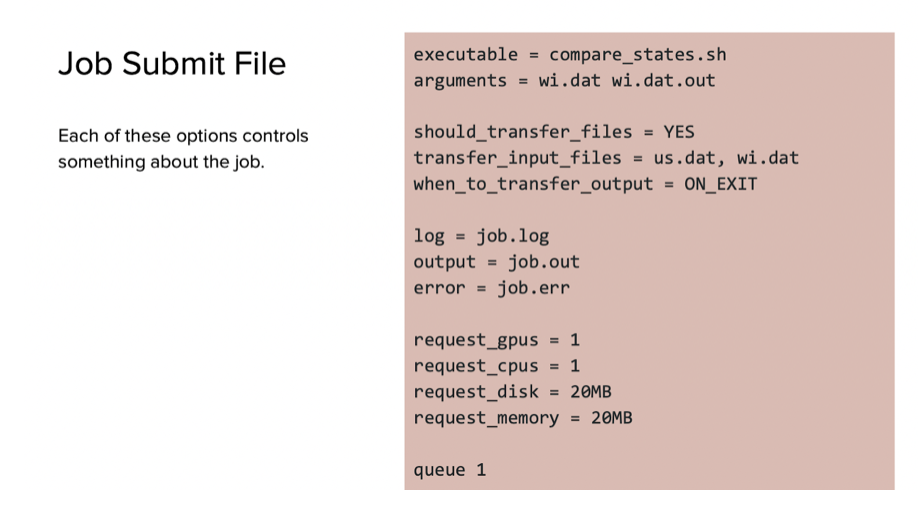

+To format code blocks, we have the following special formatting tags:

+

+ ```

+ Pre-formatted text / code goes here

+ ```

+ {:.sub}

+

+`.sub` will generate a "submit file" styled block; `.term` will create a terminal style, and `.file` can

+be used for any generic text file.

+

+We will be using the pound sign for headers, not the `==` or `--` notation.

+

+For internal links (to a header inside the document), use this syntax:

+* header is written as

+ ```

+ ## A. Sample Header

+ ```

+* the internal link will look like this:

+ ```

+ [link to header A](#a-sample-header)

+ ```

+

+### Converting HTML to Markdown

+

+Right now, most of our pages are written in html and have a `.shtml` extension. We are

+gradually converting them to be formatted with markdown. To easily convert a page, you

+can install and use the `pandoc` converter:

+

+ pandoc hello.shtml --from html --to markdown > hello.md

+

+You'll still want to go through and double check / clean up the text, but that's a good starting point. Once the

+document is converted from markdown to html, the file extension should be `.md` instead. If you use the

+command above, this means you can just delete the `.shtml` version of the file and commit the new `.md` one.

+

+

+### Adding "Copy Code" Button to code blocks in guides

+

+Add .copy to the class and you will have a small button in the top right corner of your code blocks that

+when clicked, will copy all of the code inside of the block.

+

+### Adding Software Overview Guide

+

+When creating a new Software Guide format the frontmatter like this:

+

+software_icon: /uw-research-computing/guide-icons/miniconda-icon.png

+software: Miniconda

+excerpt_separator: <!--more-->

+

+Software Icon and software are how the guides are connected to the Software Overview page. The

+excerpt_seperator must be <!--more--> and can be placed anywhere in a document and all text

+above it will be put in the excerpt.

\ No newline at end of file

diff --git a/preview-fall2024-info/Record.html b/preview-fall2024-info/Record.html

new file mode 100644

index 000000000..6843c9727

--- /dev/null

+++ b/preview-fall2024-info/Record.html

@@ -0,0 +1,357 @@

+

+

+

+

+

+

+OSPool Hits Record Number of Jobs

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+ OSPool Hits Record Number of Jobs

+

+

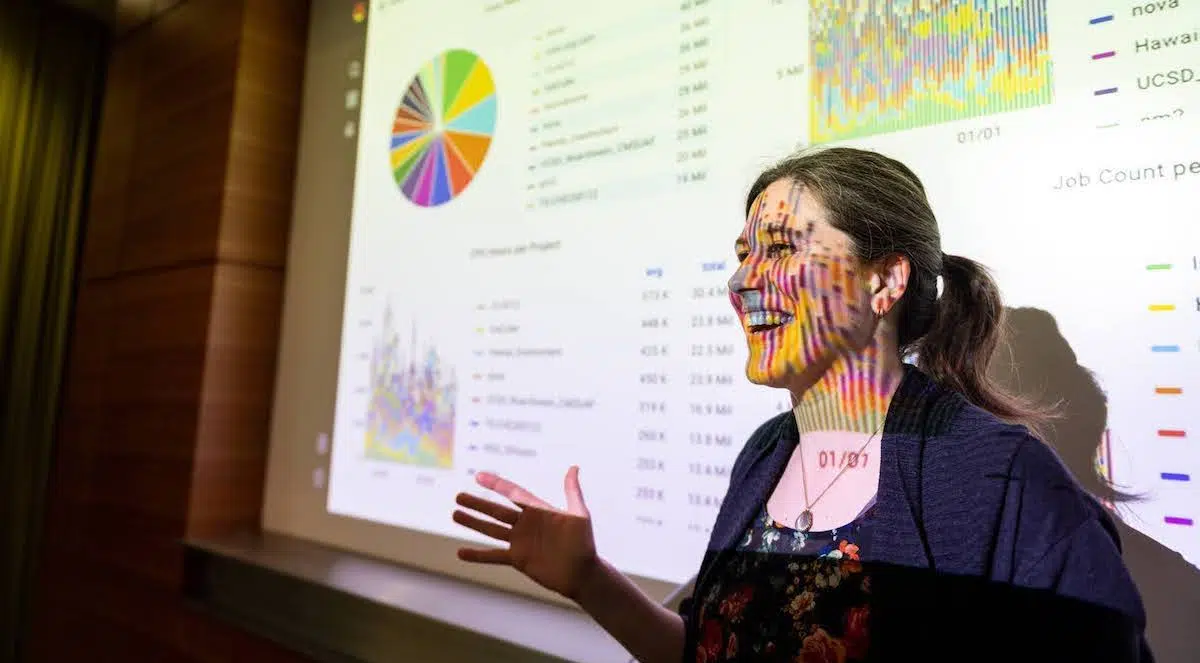

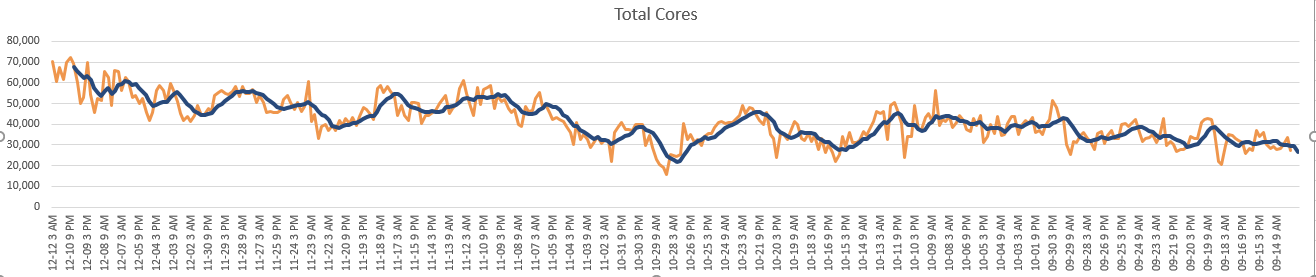

The OSPool processed over 2.6 million jobs during the week of April 14th - 17th this year and ran over half a million jobs on two separate days that week.

+

+

OSPool users and collaborators are smashing records. In April, researchers submitted a record-breaking number of jobs during the week of April 14th – 2.6 million, to be exact. The OSPool also processed over 500k jobs on two separate days during that same week, another record!

+

+

Nearly 60 projects from different fields contributed to the number of jobs processed during this record-breaking week, including these with substantial usage:

+

+ - BioMedInfo: University of Pittsburgh PI Erik Wright of the Wright Lab, develops and applies software tools to perform large-scale biomedical informatics on microbial genome sequence data.

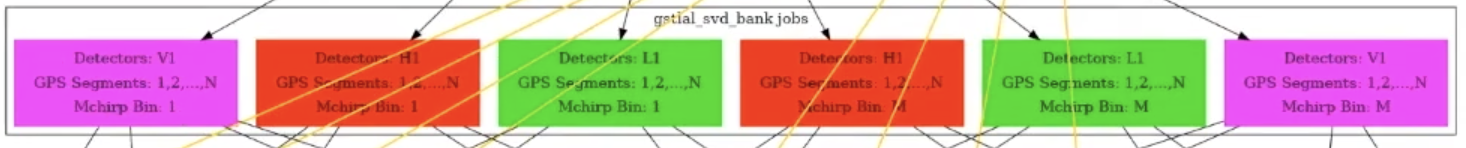

+ - Michigan_Riles: University of Michigan PI Keith Riles leads the Michigan Gravitational Wave Group, researching continuous gravitational waves.

+ - chemml: PI Olexandr Isayev from Carnegie-Mellon University, whose group develops machine learning (ML) models for molecular simulations.

+ - CompBinFormMod: Researcher PI Geoffrey Hutchison from the University of Pittsburgh, looking at data-driven ML as surrogates for quantum chemical methods to improve existing processes and next-generation atomistic force fields.

+

+

+

Any researcher tackling a problem that can run as many self-contained jobs can harness the capacity of the OSPool. If you have any questions about the Open Science Pool or how to create an account, please visit the FAQ page on the OSG Help Desk website. Descriptions of active OSG projects can be found here.

+

+

…

+

+

Learn more about the Open Science Pool.

+

+

+

+

+

+

+

+ Resilience: How COVID-19 challenged the scientific world

+

+

In the face of the pandemic, scientists needed to adapt.

+The article below by the Morgridge Institute for Research provides a thoughtful look into how researchers have pivoted in these challenging times to come together and contribute meaningfully in the global fight against COVID-19.

+One of these pivots occurred in the spring of 2020, when Morgridge and the CHTC issued a call for projects investigating COVID-19, resulting in five major collaborations that leveraged the power of HTC.

+

+

For a closer look into how the CHTC and researchers have learned, grown, and adapted during the pandemic, read the full Morgridge article:

+

+

Resilience: How COVID-19 challenged the scientific world

+

+

+

+

+

+

+

+

+ OSG fuels a student-developed computing platform to advance RNA nanomachines

+

+

How undergraduates at the University of Nebraska-Lincoln developed a science gateway that enables researchers to build RNA nanomachines for therapeutic, engineering, and basic science applications.

+

+

+

+

The UNL students involved in the capstone project, on graduation day. Order from left to right: Evan, Josh, Dan, Daniel, and Conner.

+

+

When a science gateway built by a group of undergraduate students is deployed this fall, it will open the door for researchers to leverage the capabilities of advanced software and the capacity of the Open Science Pool (OSPool). Working under the guidance of researcher Joe Yesselman and longtime OSG contributor Derek Weitzel, the students united advanced simulation technology and a national, open source of high throughput computing capacity –– all within an intuitive, web-accessible science gateway.

+

+

Joe, a biochemist, has been fascinated by computers and mathematical languages for as long as he can remember. Reminiscing to when he first adopted computer programming and coding as a hobby back in high school, he reflects: “English was difficult for me to learn, but for some reason mathematical languages make a lot of sense to me.”

+

+

Today, he is an Assistant Professor of Chemistry at the University of Nebraska-Lincoln (UNL), and his affinity for computer science hasn’t waned. Leading the Yesselman Lab, he relies on the interplay between computation and experimentation to study the unique structural properties of RNA.

+

+

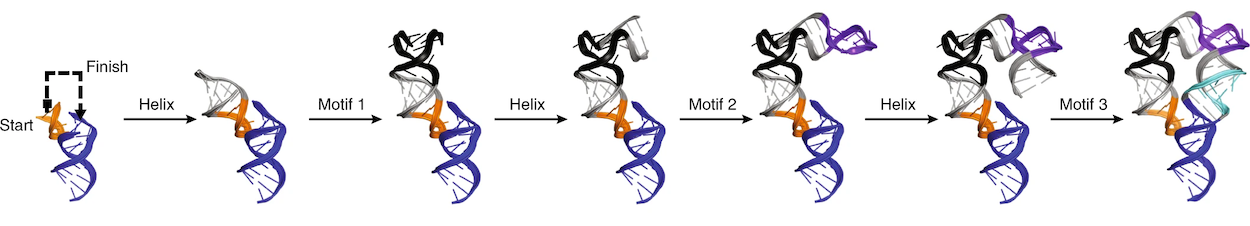

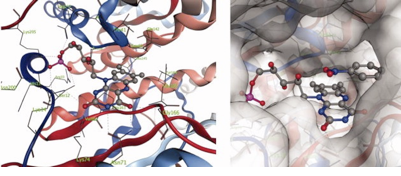

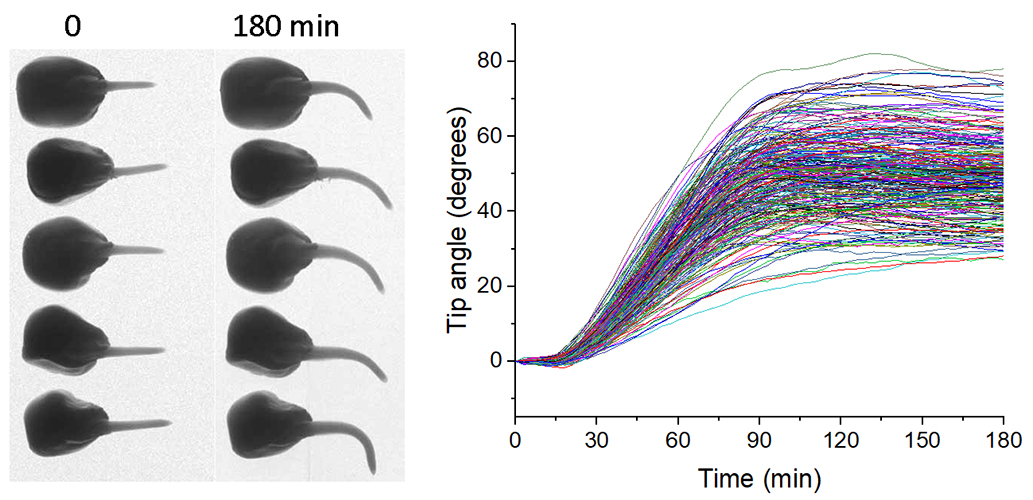

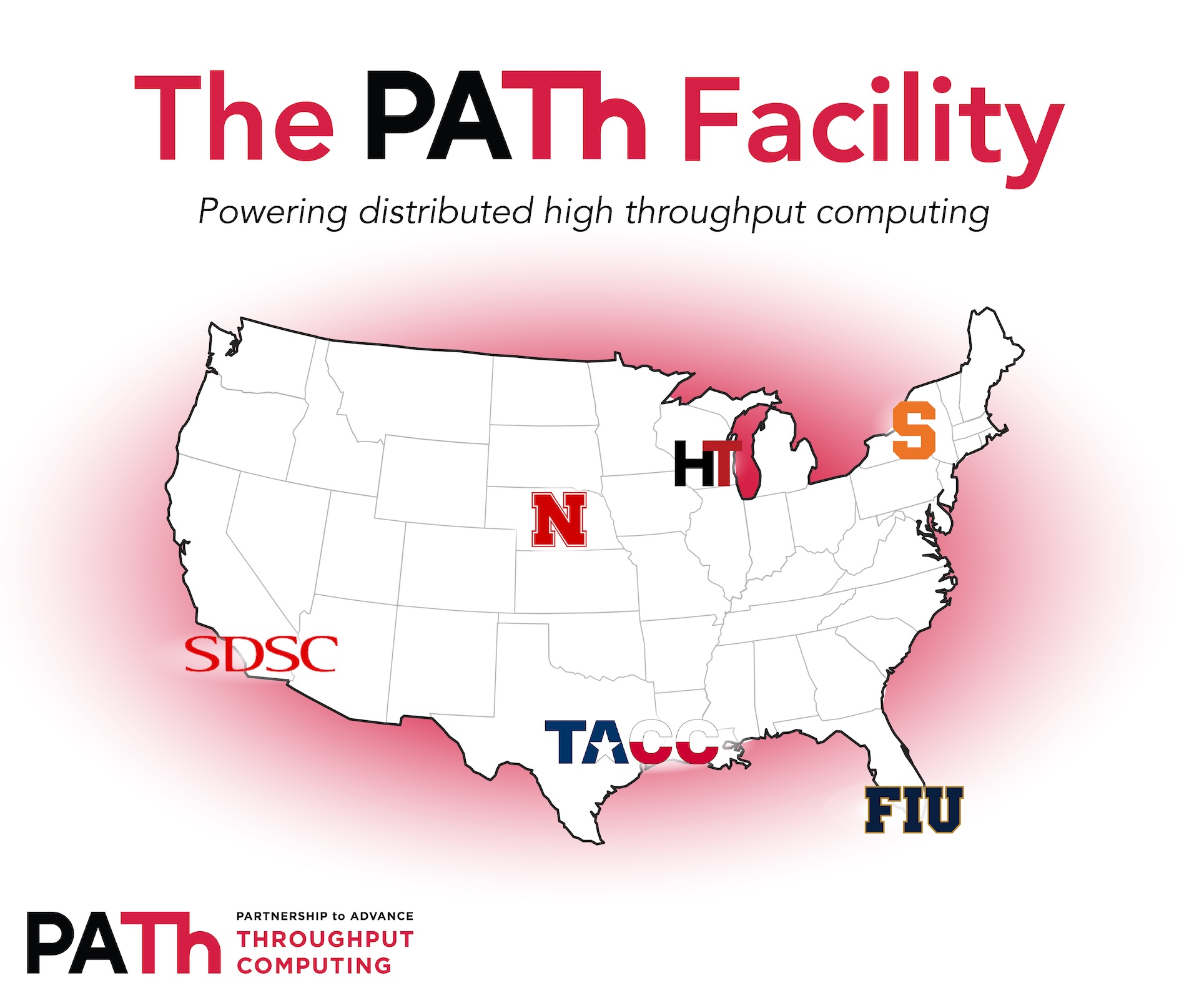

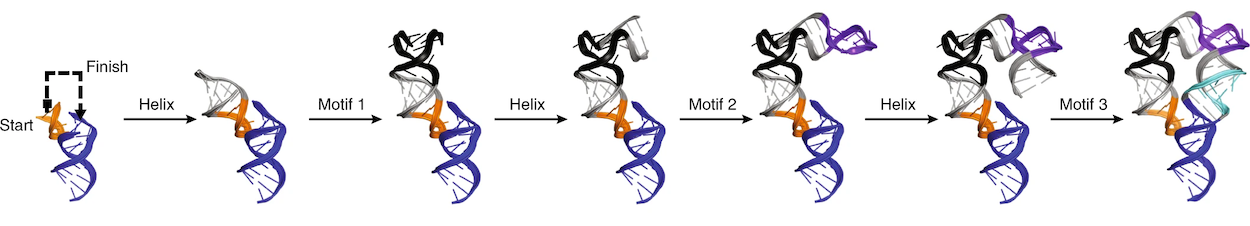

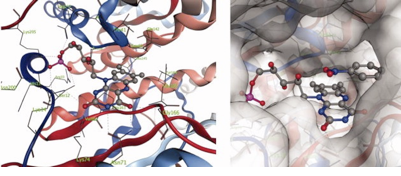

In September of 2020, Joe began collaborating with UNL’s Holland Computing Center (HCC) and the OSG to accelerate RNA nanostructure research everywhere by making his lab’s RNAMake software suite accessible to other scientists through a web portal. RNAMake enables researchers to build nanomachines for therapeutic, engineering, and basic science applications by simulating the 3D design of RNA structures.

+

+

Five UNL undergraduate students undertook this project as part of a year-long computer science capstone experience. By the end of the academic year, the students developed a science gateway –– an intuitive web-accessible interface that makes RNAMake easier and faster to use. Once it’s deployed this fall, the science gateway will put the Yesselman Lab’s advanced software and the shared computing resources of the OSPool into the hands of researchers, all through a mouse and keyboard.

+

+

The gateway’s workflow is efficient and simple. Researchers upload their input files, set a few parameters, and click the submit button –– no command lines necessary. Short simulations will take merely a few seconds, while complex simulations can last up to an hour. Once the job is completed, an email appears in their inbox, prompting them to analyze and download the resulting RNA nanostructures through the gateway.

+

+

This was no small feat. Collaboration among several organizations brought this seemingly simple final product to fruition.

+

+

To begin the process, the students received a number of startup allocations from the Extreme Science and Engineering Discovery Environment (XSEDE). When it was time to build the application, they used Apache Airavata to power the science gateway and they extended this underlying software in some notable ways. In order to provide researchers with more intuitive results, they implemented a table viewer and a 3D molecule visualization tool. Additionally, they added the ability for Airavata to submit directly to HTCondor, making it possible for simulations to be distributed across the resources offered by the OSPool.

+

+

The simulations themselves are small, short, and can be run independently. Furthermore, many of these simulations are needed in order to discover the right RNA nanostructures for each researcher’s purpose. Combined, these qualities make the jobs a perfect candidate for the OSPool’s distributed high throughput computing capabilities, enabled by computing capacity from campuses across the country.

+

+

Commenting on the incorporation of OSG resources, project sponsor Derek Weitzel explains how the gateway “not only makes it easier to use RNAMake, but it also distributes the work on the OSPool so that researchers can run more RNAMake simulations at the same time.” If the scientific process is like a long road trip, using high throughput computing isn’t even like taking the highway –– it’s like skipping the road entirely and taking to the skies in a high-speed jet.

+

+

The science gateway has immense potential to transform the way in which RNA nanostructure research is conducted, and the collaboration required to build it has already made lasting impacts on those involved. The group of undergraduate students are, in fact, no longer undergraduates. The team’s student development manager, Daniel Shchur, is now a software design engineer at Communication System Solutions in Lincoln, Nebraska. Reflecting on the capstone project, he remarks, “I think the most useful thing that my teammates and I learned was just being able to collaborate with outside people. It was definitely something that wasn’t taught in any of our classes and I think that was the most invaluable thing we learned.”

+

+

But learning isn’t just exclusive to students. Joe notes that he gained some unexpected knowledge from the students and Derek. “I learned a ton about software development, which I’m actually using in my lab,” he explains. “It’s very interesting how people can be so siloed. Something that’s so obvious, almost trivial for Derek is something that I don’t even know about because I don’t have that expertise. I loved that collaboration and I loved hearing his advice.”

+

+

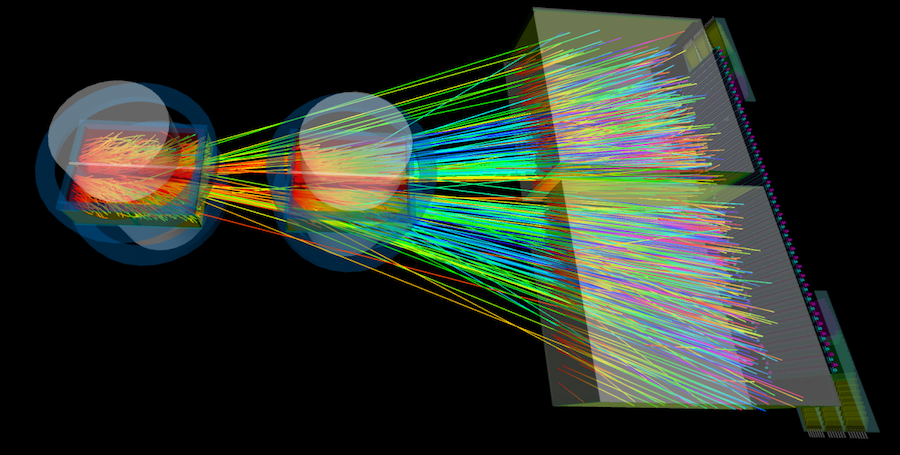

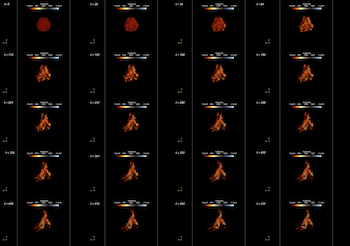

In the end, this collaboration vastly improved the accessibility of RNAMake, Joe’s software suite and the focus of the science gateway. Perhaps he explains it best with an analogy: ”RNAMake is basically a set of 500 different LEGO® pieces. Using enthusiastic gestures, Joe continues by offering an example: “Suppose you want to build something from this palm to this palm, in three-dimensional space. It [RNAMake] will find a set of LEGO® pieces that will fit there.”

+

+

+

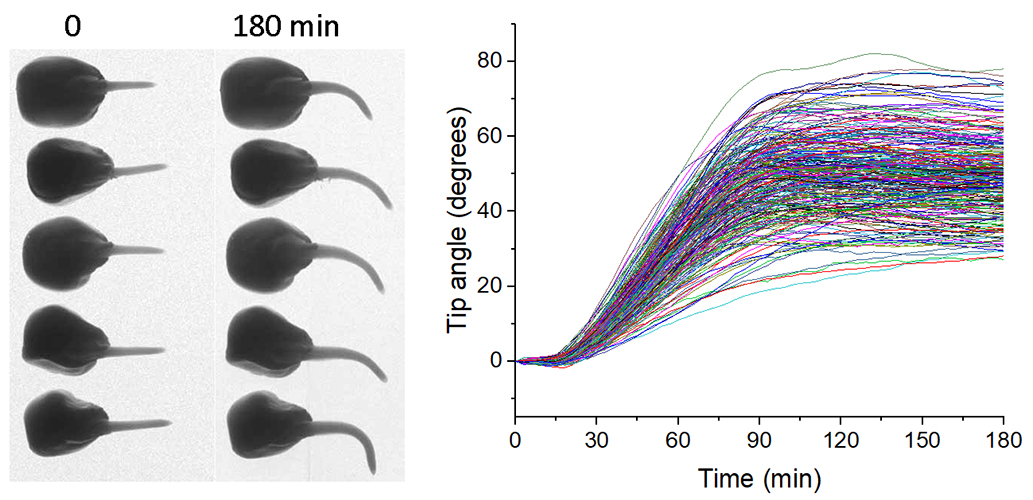

+

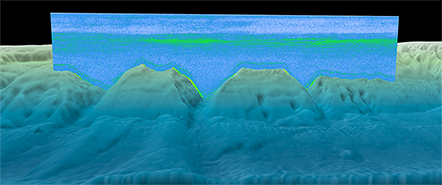

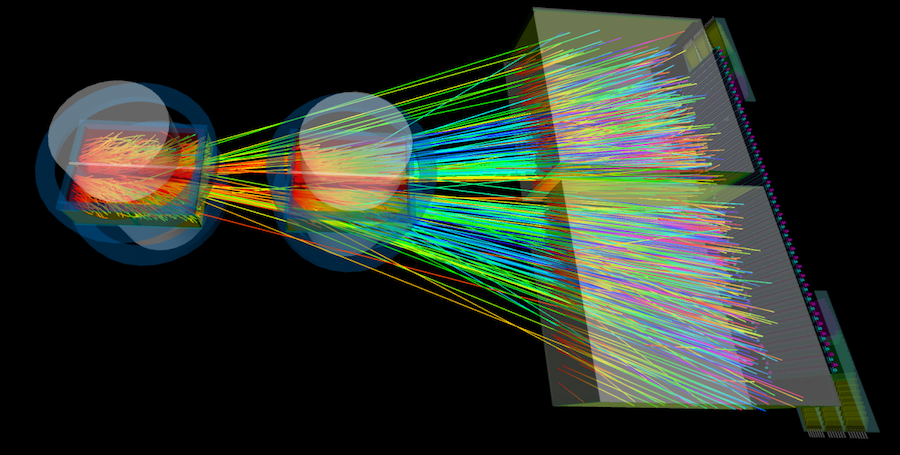

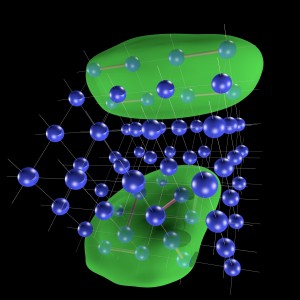

A demonstration of how RNAMake’s design algorithm works. Credit: Yesselman, J.D., Eiler, D., Carlson, E.D. et al. Computational design of three-dimensional RNA structure and function. Nat. Nanotechnol. 14, 866–873 (2019). https://doi.org/10.1038/s41565-019-0517-8

+

+

Since the possible combinations of these LEGO® pieces of RNA are endless, this tool saves users the painstaking work of predicting the structures manually. However, the installation and use of RNAMake requires researchers to have a large amount of command line knowledge –– something that the average biochemist might not have.

+

+

Ultimately, the science gateway makes this previously complicated software suddenly more accessible, allowing researchers to easily, quickly, and accurately design RNA nanostructures.

+

+

These structures are the basis for RNA nanomachines, which have a vast range of applications in society. Whether it be silencing RNAs that are used in clinical trials to cut cancer genes, or RNA biosensors that effectively bind to small molecules in order to detect contaminants even at low concentrations –– the RNAMake science gateway can help researchers design and build these structures.

+

+

Perhaps the most relevant and pressing applications are RNA-based vaccines like Moderna and Pfizer. These vaccines continue to be shipped across cities, countries, and continents to reach people in need, and it’s crucial that they remain in a stable form throughout their journey. Insight from RNA nanostructures can help ensure that these long strands of mRNA maintain stability so that they can eventually make their way into our cells.

+

+

Looking to the future, a second science gateway capstone project is already being planned for next year at UNL. Although it’s currently unclear what field of research it will serve, there’s no doubt that this project will foster collaboration, empower students and researchers, and impact society –– all through a few strokes on a keyboard.

+

+

+

+

+

+

+

+ Transforming research with high throughput computing

+

+

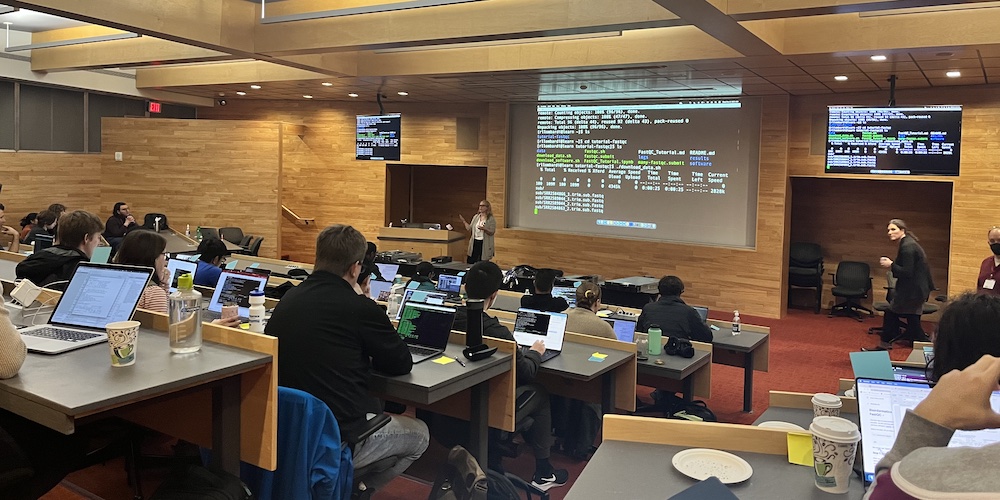

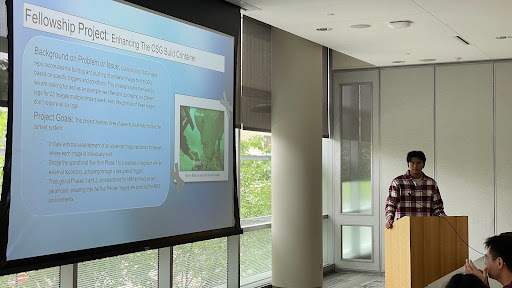

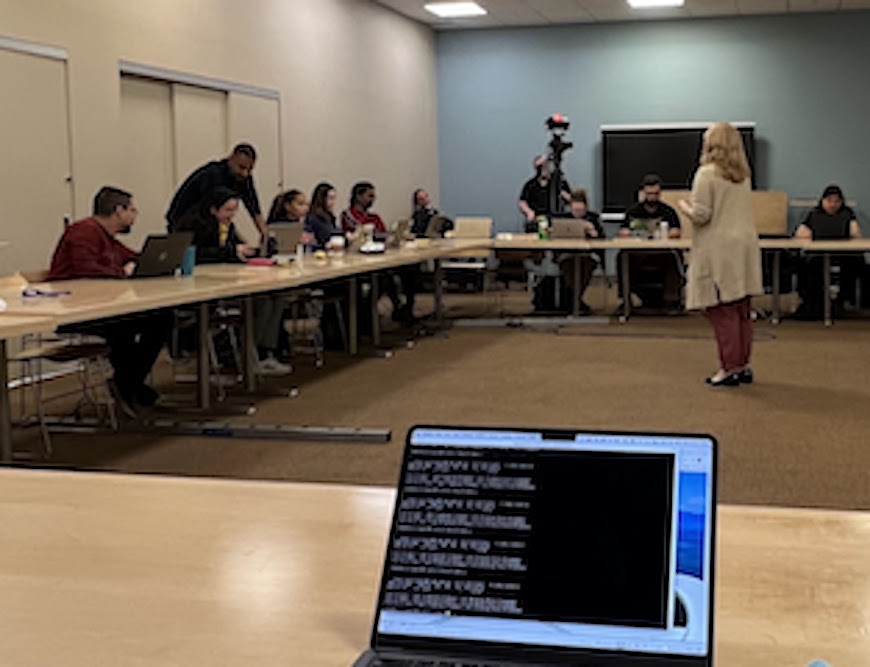

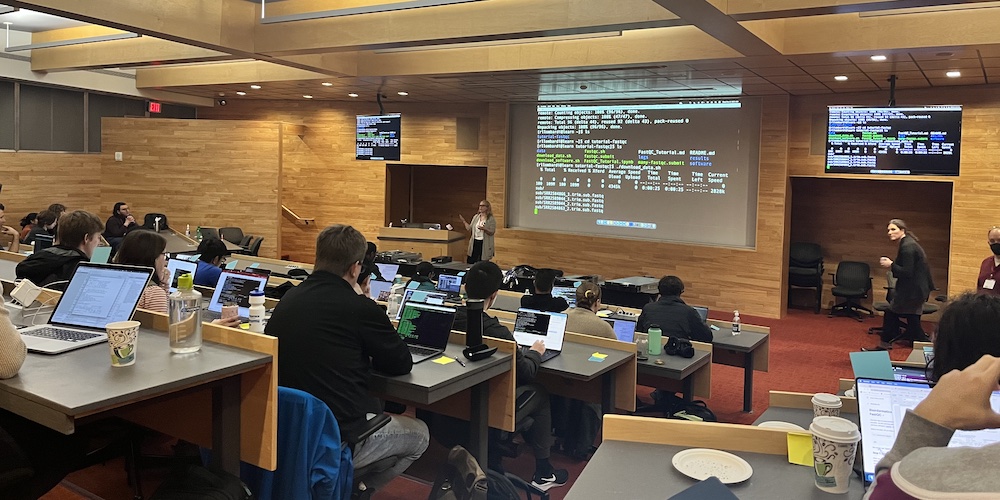

During the OSG Virtual School Showcase, three different researchers shared how high throughput computing has made lasting impacts on their work.

+

+

+

+

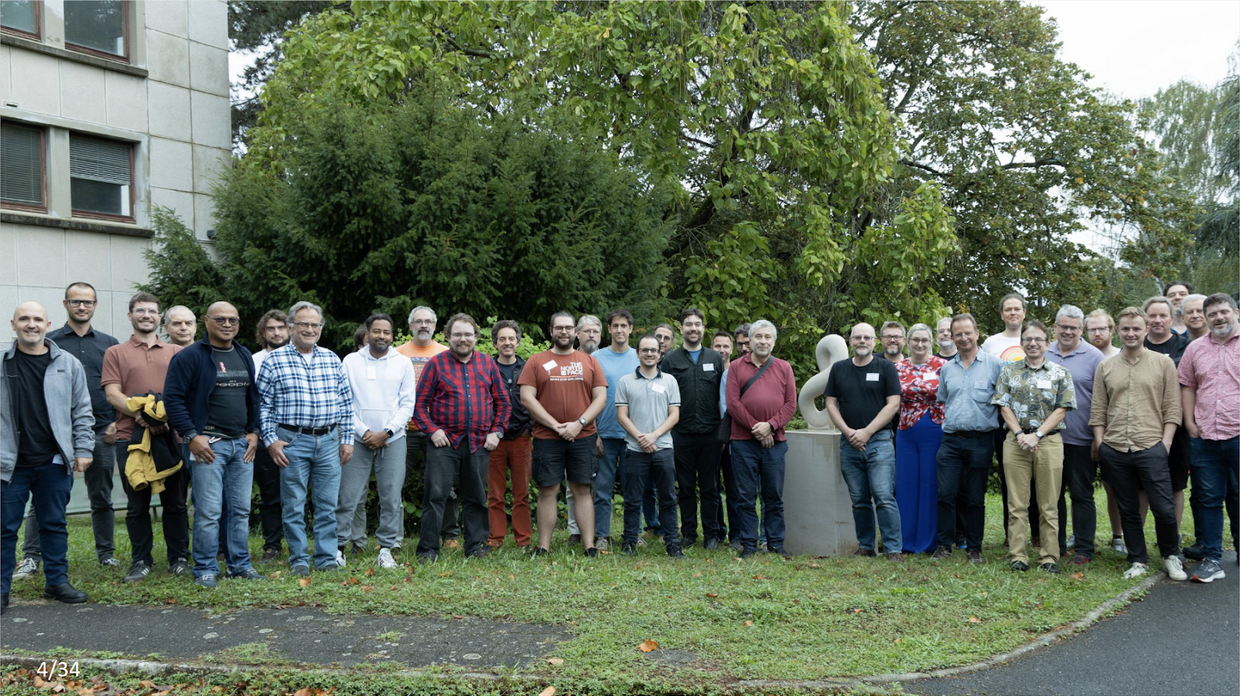

Over 40 researchers and campus research computing staff were selected to attend this year’s OSG Virtual School, all united by a shared desire to learn how high throughput computing can advance their work. During the first two weeks of August, school participants were busy attending lectures, watching demonstrations, and completing hands-on exercises; but on Wednesday, August 11, participants had the chance to hear from researchers who have successfully used high throughput computing (HTC) to transform their work. Year after year, this event –– the HTC Showcase –– is one highlight of the experience for many User School participants. This year, three different researchers in the fields of structural biology, psychology, and particle physics shared how HTC impacted their work. Read the articles below to learn about their stories.

+

+

Scaling virtual screening to ultra-large virtual chemical libraries – Spencer Ericksen, Carbone Cancer Center, University of Wisconsin-Madison

+

+

Using HTC for a simulation study on cross-validation for model evaluation in psychological science – Hannah Moshontz, Department of Psychology, University of Wisconsin-Madison

+

+

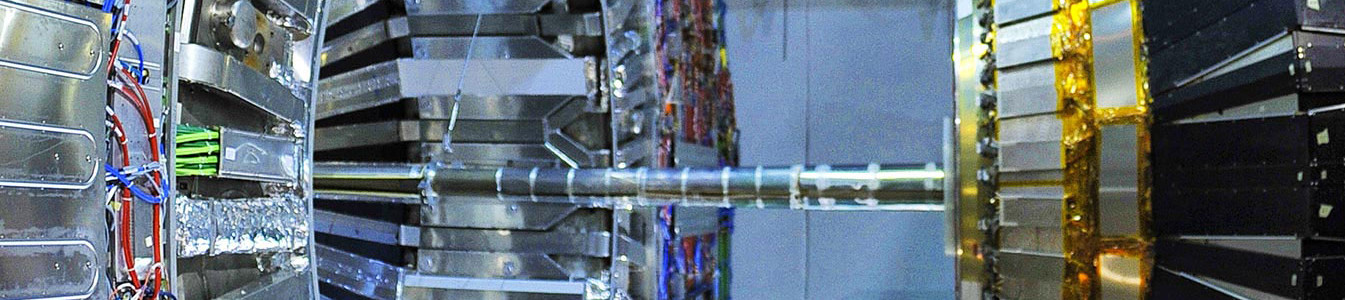

Antimatter: Using HTC to study very rare processes – Anirvan Shukla, Department of Physics, University of Hawai’i Mānoa

+

+

Collectively, these testimonies demonstrate how high throughput computing can transform research. In a few years, the students of this year’s User School might be the next Spencer, Hannah, and Anirvan, representing the new generation of researchers empowered by high throughput computing.

+

+

…

+

+

Visit the materials page to browse slide decks, exercises, and recordings of public lectures from OSG Virtual School 2021.

+

+

Established in 2010, OSG School, typically held each summer at the University of Wisconsin–Madison, is an annual education event for researchers who want to learn how to use distributed high throughput computing methods and tools. We hope to return to an in-person User School in 2022.

+

+

+

+

+

+

+

+

+ Scaling virtual screening to ultra-large virtual chemical libraries

+

+

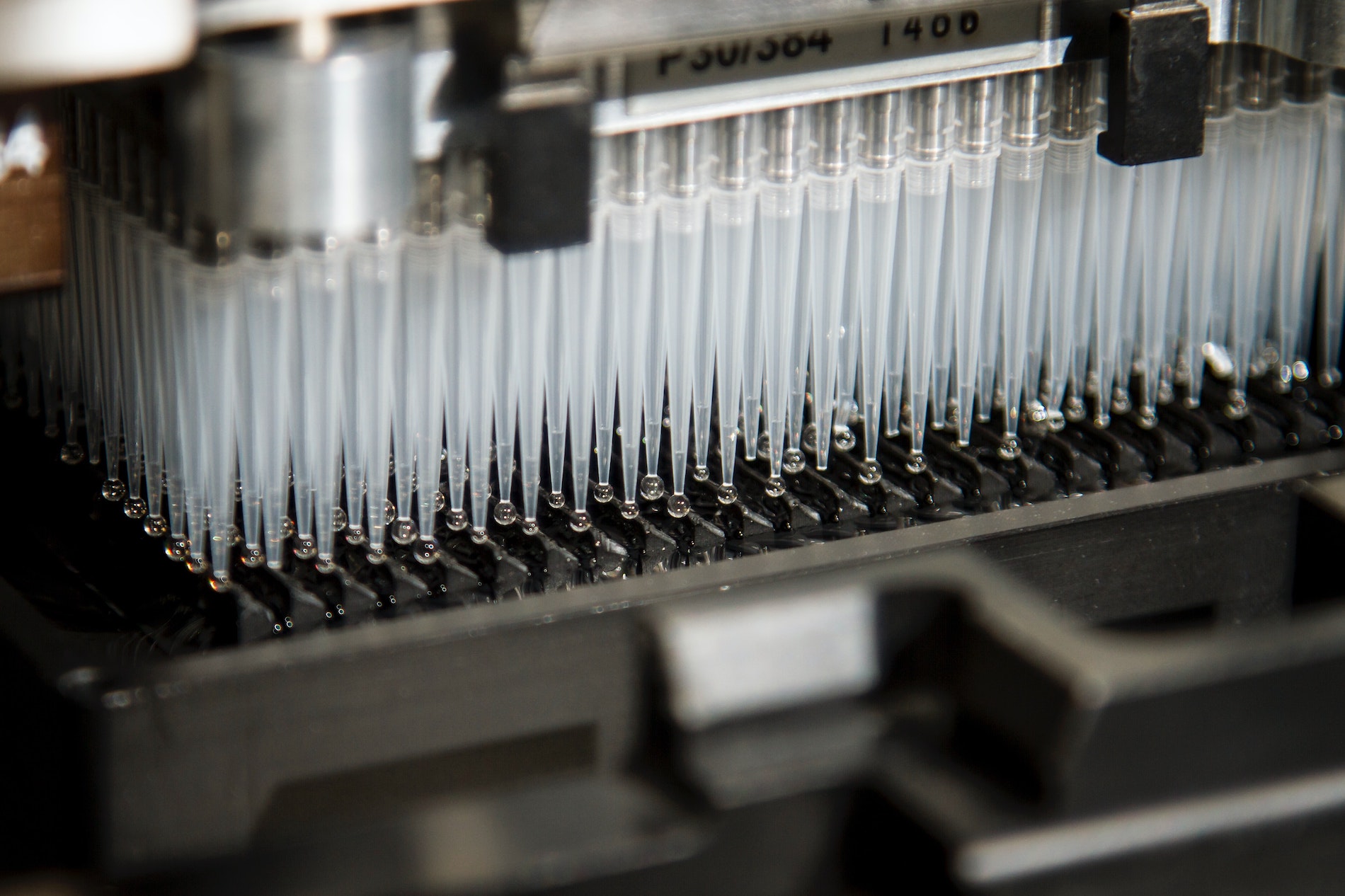

+  + Image by the National Cancer Institute on Unsplash

+ Image by the National Cancer Institute on Unsplash

+

+

+

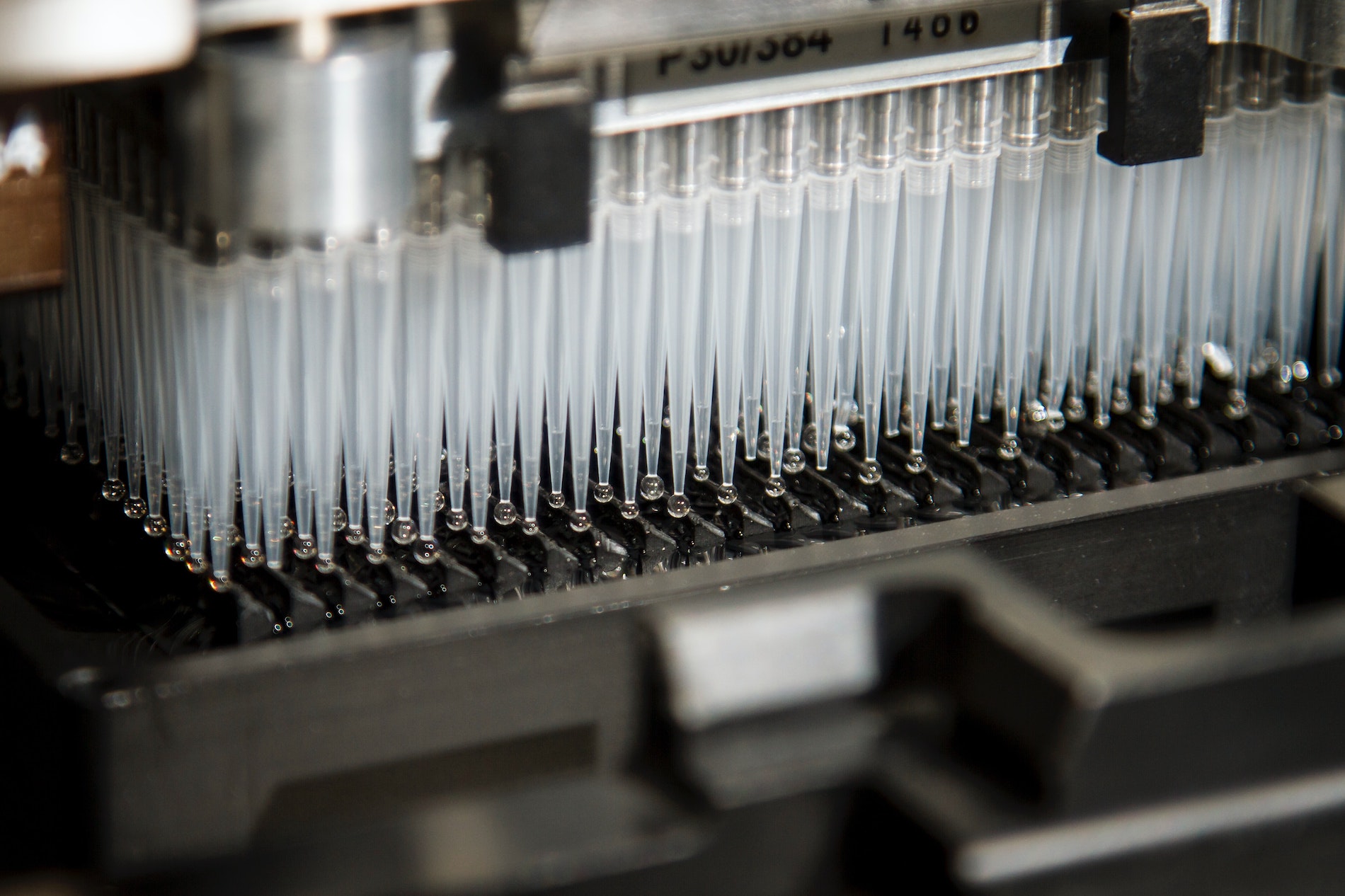

Kicking off the OSG User School Showcase, Spencer Ericksen, a researcher at the University of Wisconsin-Madison’s Carbone Cancer Center, described how high throughput computing (HTC) has made his work in early-stage drug discovery infinitely more scalable. Spencer works within the Small Molecule Screening Facility, where he partners with researchers across campus to search for small molecules that might bind to and affect the behavior of proteins they study. By using a computational approach, Spencer can help a researcher inexpensively screen many more candidates than possible through traditional laboratory approaches. With as many as 1033 possible molecules, the best binders from computational ‘docking’ might even be investigated as potential drug candidates.

+

+

With traditional laboratory approaches, researchers might test just 100,000 individual compounds using liquid handlers like the one pictured above. However, this approach is expensive, imposing limits both on the number of molecules tested and the number of researchers able to pursue potential binders of the proteins they study.

+

+

Spencer’s use of HTC allows him to take a different approach with virtual screening. By using computational models and machine learning techniques, he can inexpensively filter the masses of molecules and predict which ones will have the highest potential to interfere with a certain biological process. This reduces the time and money spent in the lab by selecting a subset of binding candidates that would be best to study experimentally.

+

+

“HTC is a fabulous resource for virtual screening,” Spencer attests. “We can now effectively validate, develop, and test virtual screening models, and scale to ever-increasing ultra-large virtual chemical libraries.” Today, Spencer is able to screen approximately 3.5 million molecules each day thanks to HTC.

+

+

There are a variety of virtual screening programs, but none of them are all that reliable individually. Instead of opting for a single program, Spencer runs several programs on the Open Science Pool (OSPool) and calculates a consensus score for each potential binder. “It’s a pretty old idea, basically like garnering wisdom from a council of fools,” Spencer explains. “Each program is a weak discriminator, but they do it in different ways. When we combine them, we get a positive effect that’s much better than the individual programs. Since we have the throughput, why not run them all?”

+

+

And there’s nothing stopping the Small Molecule Screening Facility from doing just that. Spencer’s jobs are independent from each other, making them “pleasantly parallelizable” on the OSPool’s distributed resources. To maximize throughput, Spencer splits the compound libraries that he’s analyzing into small increments that will run in approximately 2 hours, reducing the chances of a job being evicted and using the OSPool more efficiently.

+

+

…

+

+

This article is part of a series of articles from the 2021 OSG Virtual School Showcase. OSG School is an annual education event for researchers who want to learn how to use distributed high throughput computing methods and tools. The Showcase, which features researchers sharing how HTC has impacted their work, is a highlight of the school each year.

+

+

+

+

+

+

+

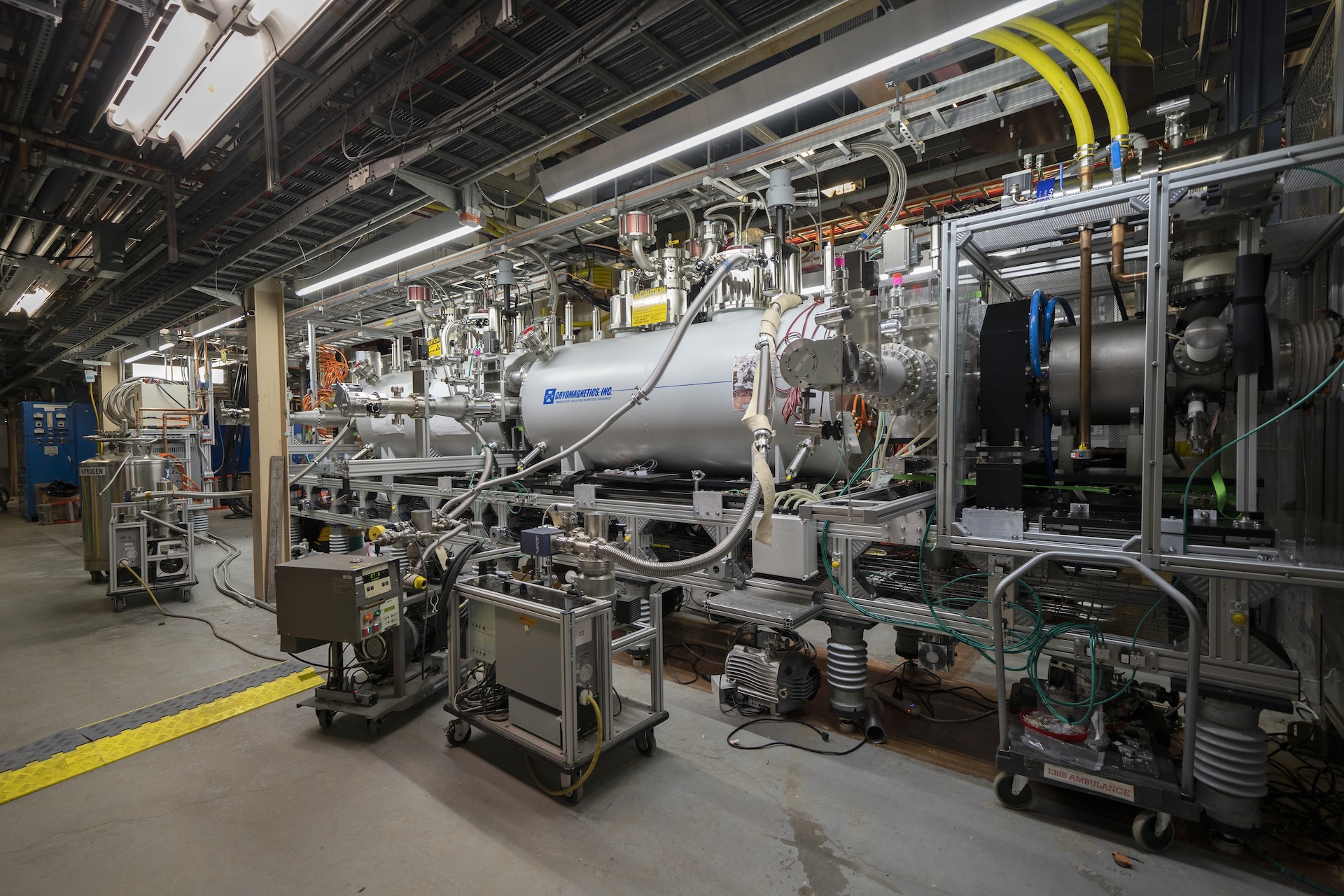

+ Technology Refresh

+

+

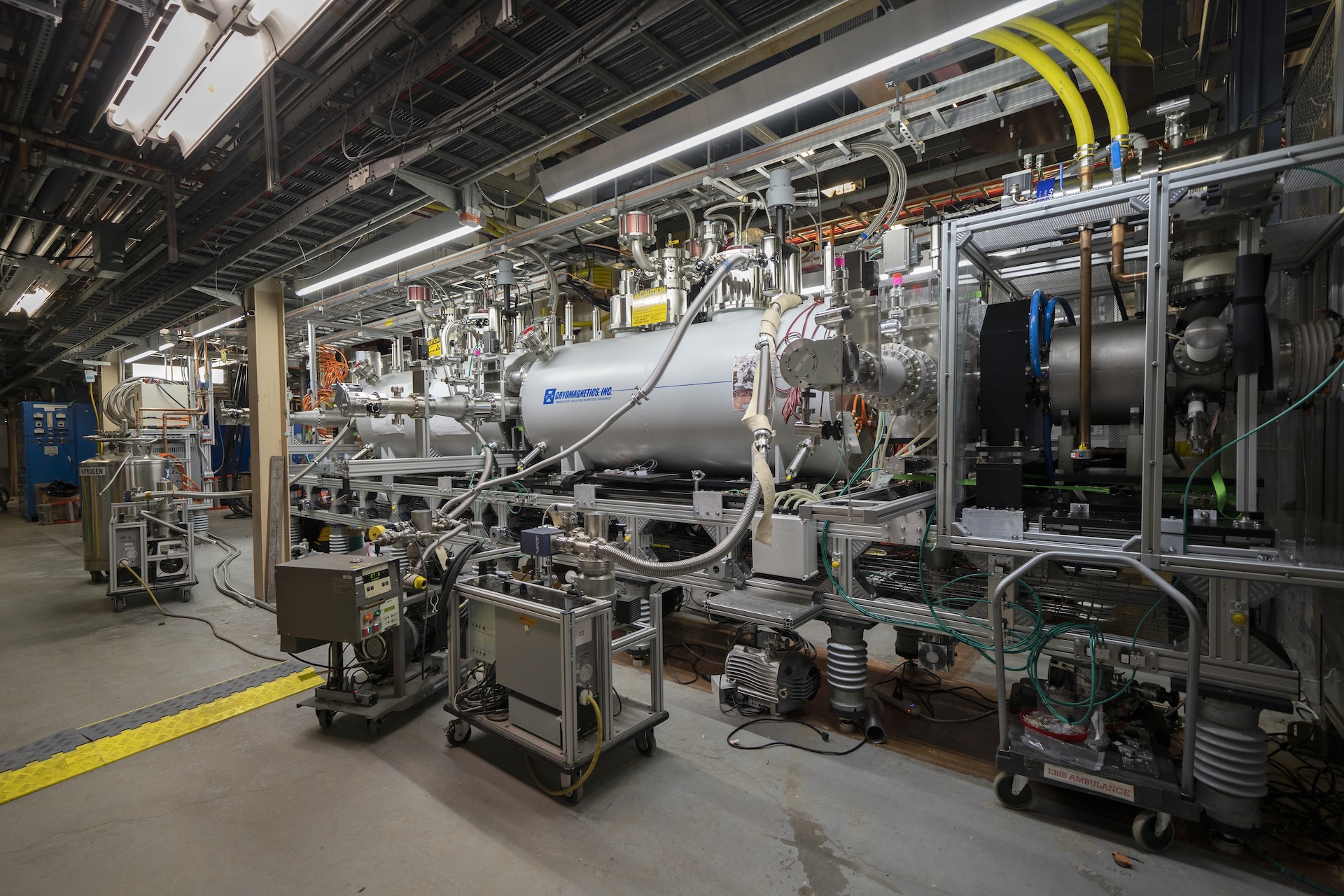

Thanks to the generous support of the Office of the Vice Chancellor for Research and Graduate Education with funding from the Wisconsin Alumni Research Foundation, CHTC has been able to execute a major refresh of hardware. This provided 207 new servers for our systems, representing over 40,000 batch slots of computing capacity. Most of this hardware arrived over the summer and we have started adding them to CHTC systems.

+

+

Continue reading to learn more about the types of servers we are adding and how to access them.

+

+

HTC System

+

+

On the HTC system, we are adding 167 servers of new capacity, representing 36,352 job slots and 40 high-end GPU cards.

+

+

The new servers will be running CentOS Linux 8 – CHTC users should see our website page about how to test your jobs and

+take advantage of servers running CentOS Stream 8. Details on user actions needed for this change can be found on the

+OS transition page.

+

+

New Server specs

+

+

PowerEdge R6525

+

+

+ - 157 servers with 128 cores / 256 job slots using the AMD Epyc 7763 processor

+ - 512 GB RAM per server

+

+

+

PowerEdge XE8545

+

+

+ - 10 servers, each with four A100 SXM4 80GB GPU cards

+ - 128 cores per server

+ - 512GB RAM per server

+

+

+

HPC Cluster

+

+

For the HPC cluster, we are adding 40 servers representing 5,120 cores. These servers have arrived but have not yet been added to the HPC cluster. In most cases, when we add them, they will form a new partition and displace some of our oldest servers, currently in the “univ2” partition.

+

+

New server specs:

+

+

Dell Poweredge R6525

+

+

+ - 128 cores using the AMD Epyc 7763 processor

+ - 512GB of memory

+

+

+

Users interested in early access to AMD processors before all 40 servers are installed should contact CHTC at chtc@cs.wisc.edu.

+

+

We have also obtained hardware and network infrastructure to completely replace the HPC cluster’s underlying file system and infiniband network fabric. We will be sending more updates to the chtc-users mailing list as we schedule specific transition dates for these major cluster components.

+

+

+

+

+

+

+

+ Save the dates for Throughput Computing 2023 - a joint HTCondor/OSG event

+

+

Don't miss these in-person learning opportunities in beautiful Madison, Wisconsin!

+

+

Save the dates for Throughput Computing 2023! For the first time, HTCondor Week and the OSG All-Hands Meeting will join together as a single, integrated event from July 10–14 to be held at the University of Wisconsin–Madison’s Fluno Center. Throughput Computing 2023 is sponsored by the OSG Consortium, the HTCondor team, and the UW-Madison Center for High Throughput Computing.

+

+

This will primarily be an in-person event, but remote participation (via Zoom) for the many plenary events will also be offered. Required registration for both components will open in March 2023.

+

+

If you register for the in-person event at the University of Wisconsin–Madison, you can attend plenary and non-plenary sessions, mingle with colleagues, and have planned or ad hoc meetings. Evening events are also planned throughout the week.

+

+

All the topics typically covered by HTCondor Week and the OSG All-Hands Meeting will be included:

+

+

+ - Science Enabled by the OSPool and the HTCondor Software Suite (HTCSS)

+ - OSG Technology

+ - HTCSS Technology

+ - HTCSS and OSG Tutorials

+ - State of the OSG

+ - Campus Services and Perspectives

+

+

+

The U.S. ATLAS and U.S. CMS high-energy physics projects are also planning parallel OSG-related topics during the event on Wednesday, July 12. (For other attendees, Wedneday’s schedule will also include parallel HTCondor and OSG tutorials and OSG Collaborations sessions.)

+

+

For questions, please contact us at events@osg-htc.org or htcondor-week@cs.wisc.edu.

+

+

View last year’s schedules for

+

+

+

+

+

+

+

+

+

+

+ How to Transfer 460 Terabytes? A File Transfer Case Study

+

+

When Greg Daues at the National Center for Supercomputing Applications (NCSA) needed to transfer 460 Terabytes of NCSA files from the National Institute of Nuclear and Particle Physics (IN2P3) in Lyon, France to Urbana, Illinois, for a project they were working with FNAL, CC-IN2P3 and the Rubin Data Production team, he turned to the HTCondor High Throughput system, not to run computationally intensive jobs, as many do, but to manage the hundreds of thousands of I/O bound transfers.

+

+

The Data

+

+

IN2P3 made the data available via https, but the number of files and their total size made the management of the transfer an engineering challenge. There were two kinds of files to be transferred, with 3.5 million files with a median size of roughly 100 Mb, and another 3.5 million smaller files, with a median size of about 10 megabytes. Total transfer size is roughly 460 Terabytes.

+

+

The Requirements

+

+

The requirement for this transfer was to reliably transfer all the files in a reasonably performant way, minimizing the human time to set up, run, and manage the transfer. Note the noni-goal of optimizing for the fastest possible transfer time – reliability and minimizing the human effort take priority here. Reliability, in this context implies:

+

+

Failed transfers are identified and re-run (with millions of files, a failed transfer is almost inevitable)

+Every file will get transferred

+The operation will not overload the sender, the receiver, or any network in between

+

+

The Inspiration

+

+

Daues presented unrelated work at the 2017 HTCondor Week workshop. At this workshop, he heard about the work of Phillip Papodopolous at UCSD, and his international Data Placement Lab (iDPL). iDPL used HTCondor jobs solely for transferring data between international sites. Daues re-used and adapted some of these ideas for NCSA’s needs.

+

+

The Solution

+

First, Daues installed a “mini-condor”, an HTCondor pool entirely on one machine, with an access point and eight execution slots on that same machine. Then, given a single large file containing the names of all the files to transfer, he ran the Unix split command to create separate files with either 50 of the larger files, or 200 of the smaller files. Finally, using the HTCondor submit file command

+

+

Queue filename matching files *.txt

+

+

the condor_submit command creates one job per split file, which runs the wget2 command and passes the list of filenames to wget2. The HTCondor access point can handle tens of thousands of idle jobs, and will schedule these jobs on the eight execution slots. While more slots would yield more overlapped i/o, eight slots were chosen to throttle the total network bandwidth used. Over the course of days, this machine with eight slots maintained roughly 600 MB/seconds.

+

+

(Note that the machine running HTCondor did not crash during this run, but if it had, all the jobs, after submission, were stored reliably on the local disk, and at such time as the crashed machine restarted, and the init program restarted the HTCondor system, all interrupted jobs would be restarted, and the process would continue without human intervention.)

+

+

+

+

+

+

+

+ The Future of Radio Astronomy Using High Throughput Computing

+

+

Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+

+

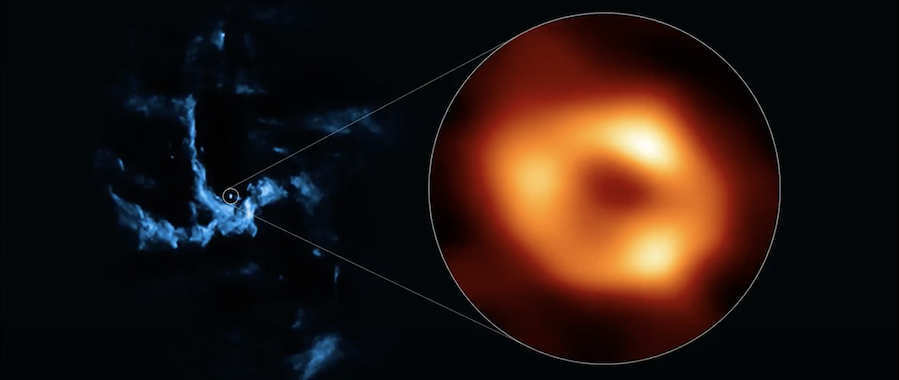

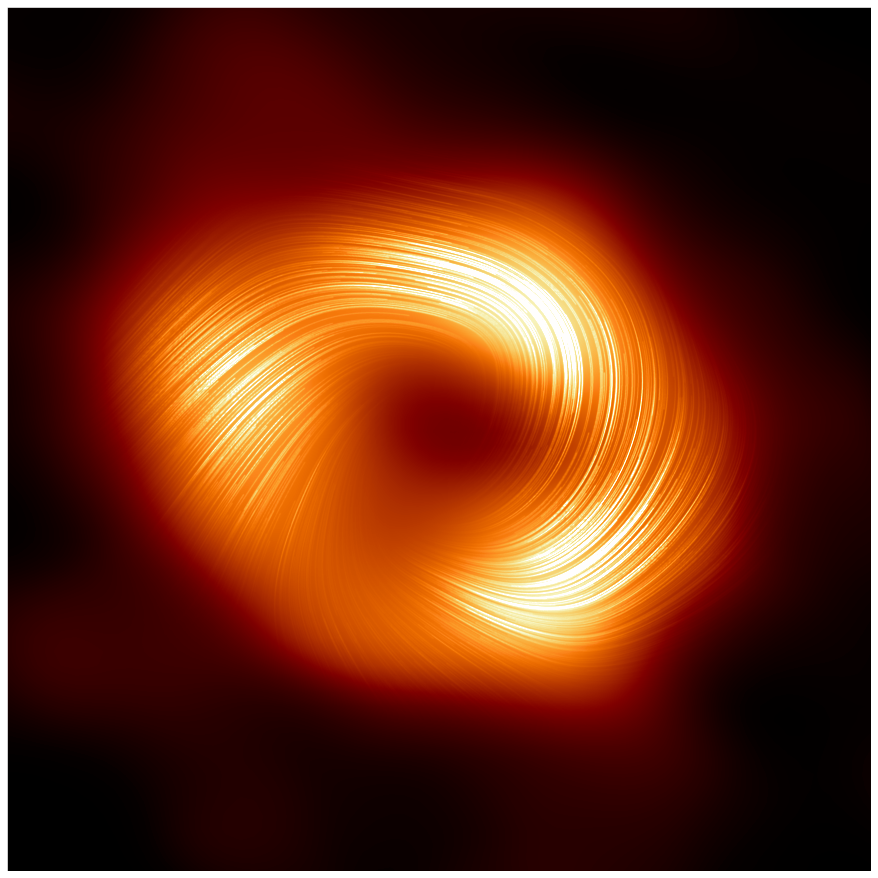

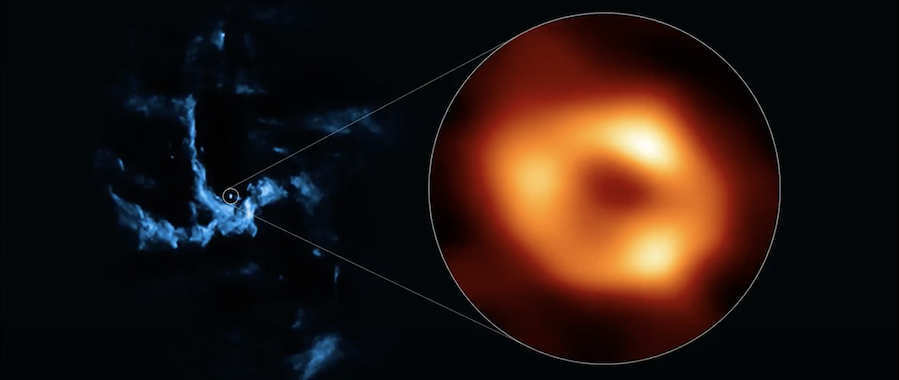

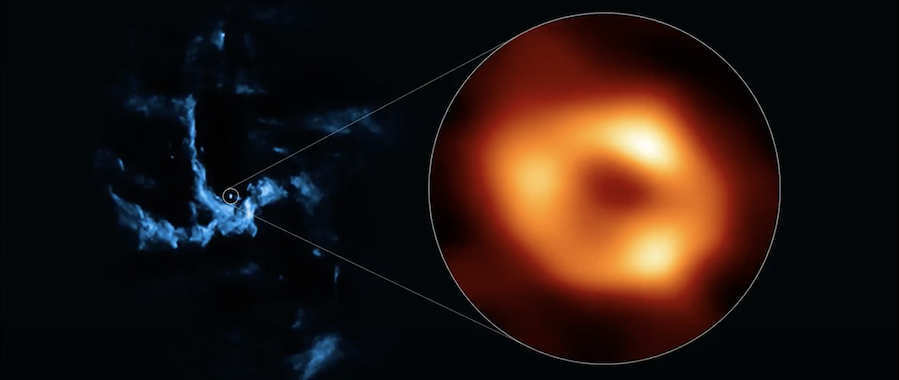

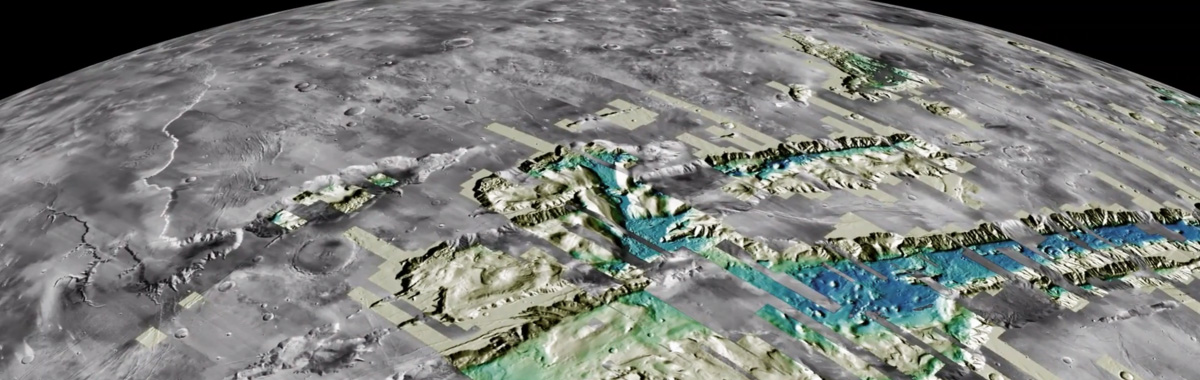

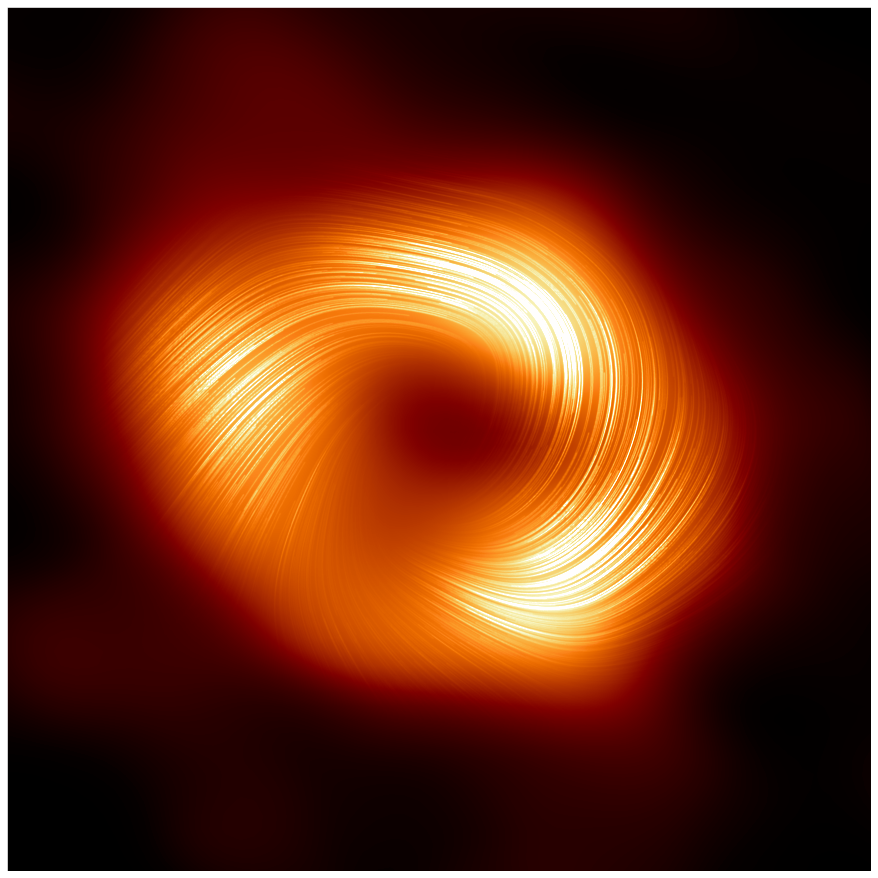

+  + Image of the black hole in the center of our Milky Way galaxy.

+ Image of the black hole in the center of our Milky Way galaxy.

+

+

+

+  + Eric Wilcots

+ Eric Wilcots

+

+

+

“My job here is to…inspire you all with a sense of the discoveries to come that will need to be enabled by” high throughput computing (HTC), Eric Wilcots opened his keynote for HTCondor Week 2022. Wilcots is the UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy.

+

+

Wilcots points out that the black hole image (shown above) is a remarkable feat in the world of astronomy. “Only the third such black hole imaged in this way by the Event Horizon Telescope,” and it was made possible with the help of the HTCondor Software Suite (HTCSS).

+

+

Beginning to build the future

+

+

Wilcots described how in the 1940s, a group of universities recognized that no single university could build a radio telescope necessary to advance science. To access these kinds of telescopes, the universities would need to have the national government involved, as it was the only one with this capability at that time. In 1946, these universities created Associated Universities Incorporated (AUI), which eventually became the management agency for the National Radio Astronomy Observatory (NRAO).

+

+

Advances in radio astronomy rely on current technology available to experts in this field. Wilcots explained that “the science demands more sensitivity, more resolution, and the ability to map large chunks of the sky simultaneously.” New and emerging technologies must continue pushing forward to discover the next big thing in radio astronomy.

+

+

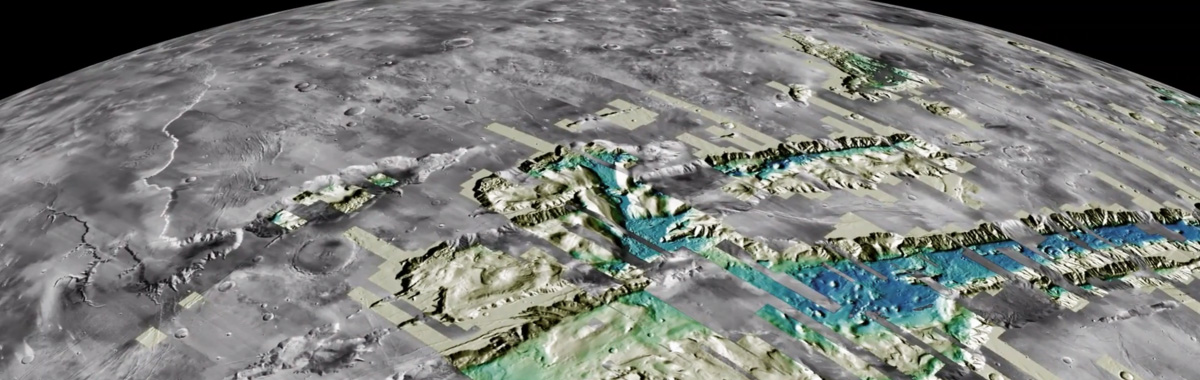

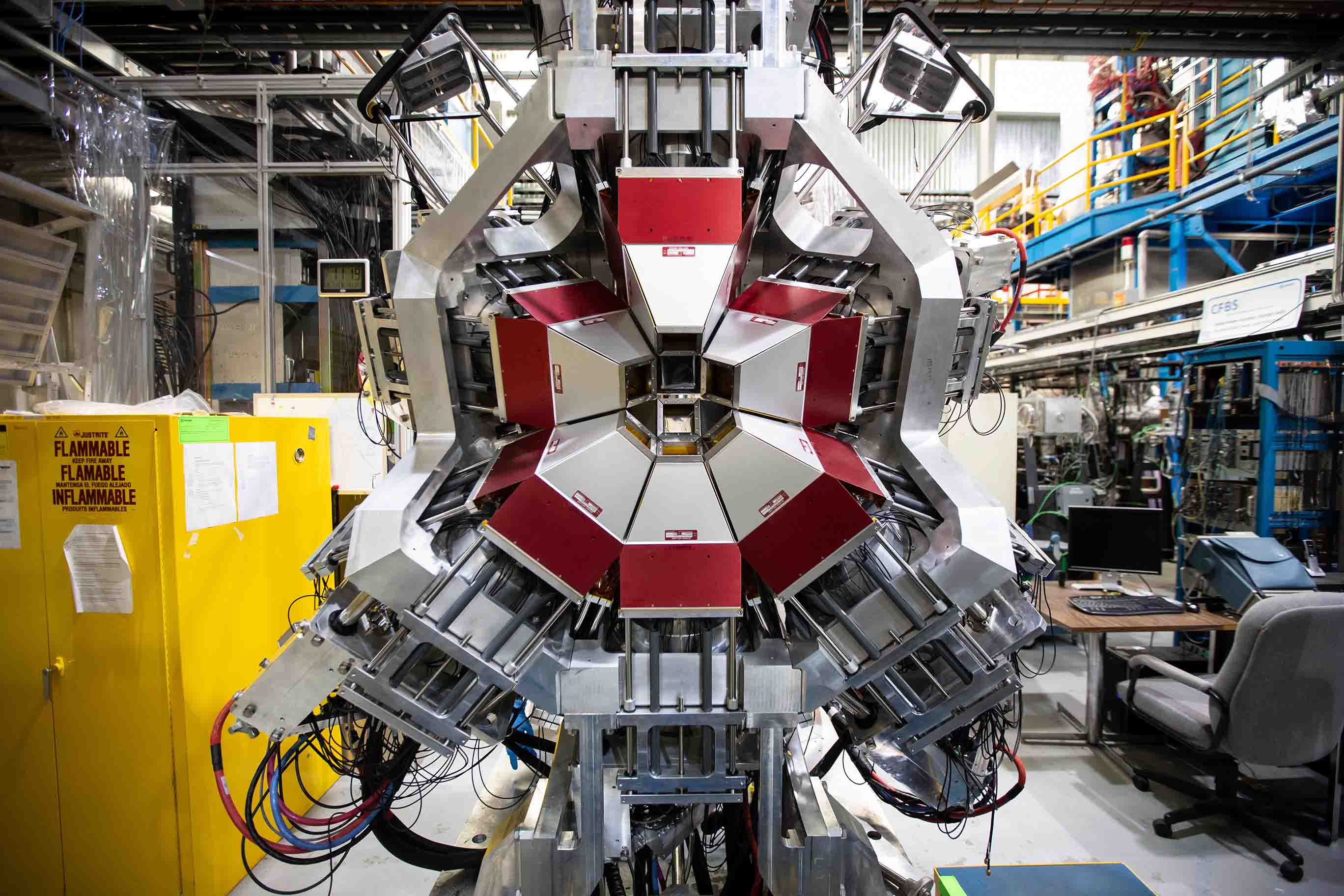

This next generation of science requires more sensitive technology with higher spectra resolution than the Karl G. Jansky Very Large Array (JVLA) can provide. It also requires sensitivity in a particular chunk of the spectrum that neither the JVLA nor Atacama Large Millimeter/submillimeter Array (ALMA) can achieve. Wilcots described just what piece of technology astronomers and engineers need to create to reach this level of sensitivity. “We’re looking to build the Next Generation Very Large Array (ngVLA)…an instrument that will cover a huge chunk of spectrum from 1 GHz to 116 GHz.”

+

+

The fundamentals of the ngVLA

+

+

“The unique and wonderful thing about interferometry, or the basis of radio astronomy,” Wilcots discussed, “is the ability to have many individual detectors or dishes to form a telescope.” Each dish collects signals, creating an image or spectrum of the sky when combined. Because of this capability, engineers working on these detectors can begin to collect signals right away, and as more dishes get added, the telescope grows larger and larger.

+

+

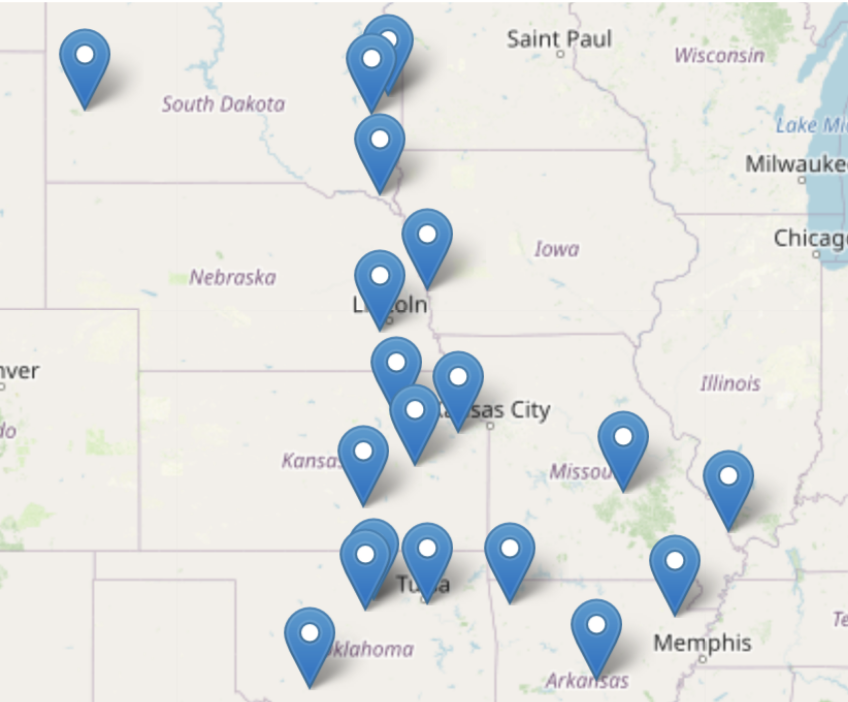

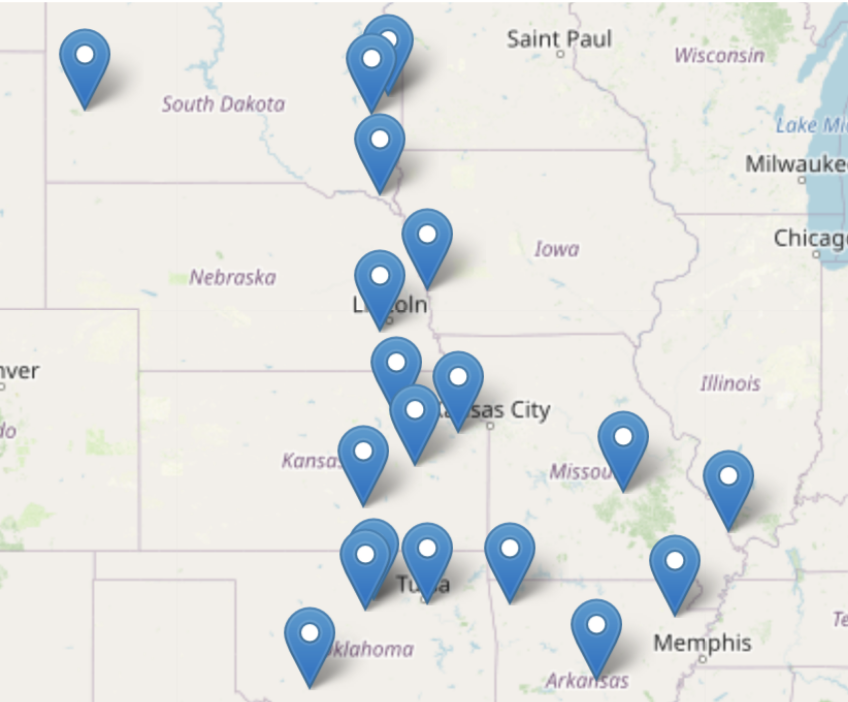

Many individual detectors also mean lots of flexibility in the telescope arrays built, Wilcots explained. Here, the idea is to do several different arrays to make up one telescope. A particular scientific case drives each of these arrays:

+

+ - Main Array: a dish that you can control and point accurately but is also robust; it’ll be the workhorse of the ngVLA, simultaneously capable of high sensitivity and high-resolution observations.

+ - Short Baseline Array: dishes that are very close together, which allows you to have a large field of view of the sky.

+ - Long Baseline Array: spread out across the continental United States. The idea here is the longer the baseline, the higher the resolution. Dishes that are well separated allow the user to get spectacular spatial resolution of the sky. For example, the Event Horizon Telescope that took the image of the black hole is a telescope that spans the globe, which is the longest baseline we can get without putting it into orbit.

+

+

+

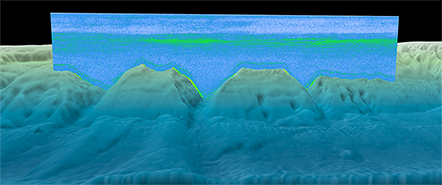

+  + The ngVLA will be spread out over the southwest United States and Mexico.

+ The ngVLA will be spread out over the southwest United States and Mexico.

+

+

+

A consensus study report called Pathways to Discovery in Astronomy and Astrophysics for the 2020s (Astro2020) identified the ngVLA as a high priority. The construction of this telescope should begin this decade and be completed by the middle of the 2020s.

+

+

Future of radio astronomy: planet formation

+

+

An area of research that radio astronomers are interested in examining in the future is imaging the formation of planets, Wilcot notes. Right now, astronomers can detect a planet’s presence and deduce specific characteristics, but being able to detect a planet directly is the next huge priority.

+

+

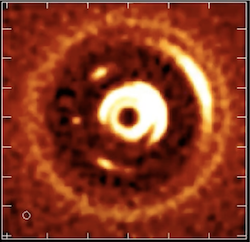

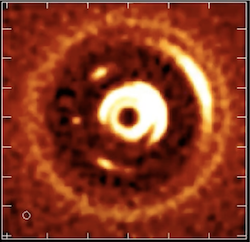

+  + A planetary system forming

+ A planetary system forming

+

+

+

One place astronomers might be able to do this with something like the ngVLA is in the early phases of planet formation within a planetary system. The thermal emissions from this process are bright enough to be detected by a telescope like the ngVLA. So the idea is to use this telescope to map an image of nearby planetary systems and begin to image the early stages of planet formation directly. A catalog of these planets forming will allow astronomers to understand what happens when planetary systems, like our own, form.

+

+

Future of radio astronomy: molecular systems

+

+

Wilcots explains that radio astronomers have discovered the spectral signature of innumerable molecules within the past fifty years. The ngVLA is being designed to probe, detect, catalog, and understand the origin of complex molecules and what they might tell us about star and planet formation. Wilcots comments in his talk that “this type of work is spawning a new type of science…a remarkable new discipline of astrobiology is emerging from our ability to identify and trace complex organic molecules.”

+

+

Future of radio astronomy: galaxy completion

+

+

Next, Wilcots discusses that radio astronomers want to understand how stars form in the first place and the processes that drive the collapse of clouds of gas into regions of star formations.

+

+

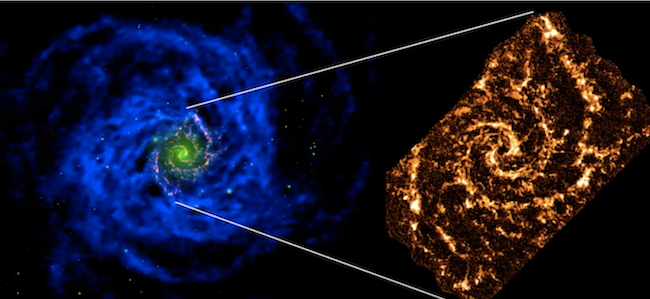

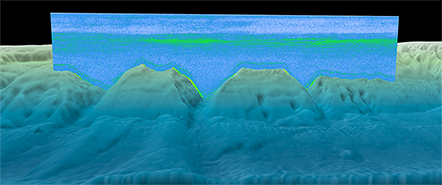

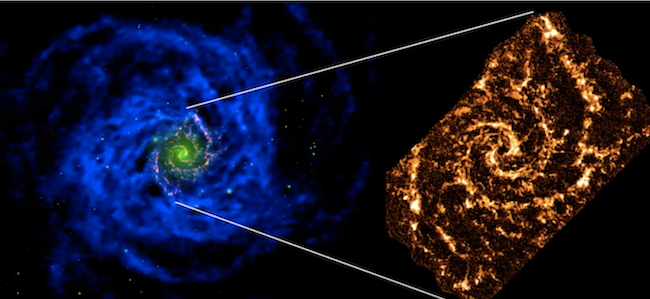

+  + An image of a blue spiral from the VLA of a nearby spiral galaxy is on the left. On the right an optical extent of the galaxy.

+ An image of a blue spiral from the VLA of a nearby spiral galaxy is on the left. On the right an optical extent of the galaxy.

+

+

+

The gas in a galaxy tends to extend well beyond the visible part of the galaxy, and this enormous gas reservoir is how the galaxy can make stars.

+

+

Astronomers like Wilcots want to know where the gas is, what drives that process of converting the gas into stars, what role the environment might play, and finally, what makes a galaxy stop creating stars.

+

+

ngVLA will be able to answer these questions as it combines the sensitivity and spatial resolution needed to take images of gas clouds in nearby galaxies while also capturing the full extent of that gas.

+

+

Future of radio astronomy: black holes

+

+

Wilcots’ look into the future of radio astronomy finishes with the idea and understanding of black holes.

+

+

Multi-messenger astrophysics helps experts recognize that information about the universe is not simply electromagnetic, as it is known best; there is more than one way astronomers can look at the universe.

+

+

More recently, astronomers have been looking at gravitational waves. In particular, they’ve been looking at how they can find a way to detect the gravitational waves produced by two black holes orbiting around one another to determine each black hole’s mass and learn something about them. As the recent EHT images show, we need radio telescopes’ high resolution and sensitivity to understand the nature of black holes fully.

+

+

A look toward the future

+

+

The next step is for the NRAO to create a prototype of the dishes they want to install for the telescope. Then, it’s just a question of whether or not they can build and install enough dishes to deliver this instrument to its full capacity. Wilcots elaborates, “we hope to transition to full scientific operations by the middle of next decade (the 2030s).”

+

+

The distinguished administrator expressed that “something that’s haunted radio astronomy for a while is that to do the imaging, you have to ‘be in the club,’ ” meaning that not just anyone can access the science coming out of these telescopes. The goal of the NRAO moving forward is to create science-ready data products so that this information can be more widely available to anyone, not just those with intimate knowledge of the subject.

+

+

This effort to make this science more accessible has been part of a budding collaboration between UW-Madison, the NRAO, and a consortium of Historically Black Colleges and Universities and other Minority Serving Institutions in what is called Project RADIAL.

+

+

“The idea behind RADIAL is to broaden the community; not just of individuals engaged in radio astronomy, but also of individuals engaged in the computing that goes into doing the great kind of science we have,” Wilcots explains.

+

+

On the UW-Madison campus in the Summer of 2022, half a dozen undergraduate students from the RADIAL consortium will be on campus doing summer research. The goal is to broaden awareness and increase the participation of communities not typically involved in these discussions in the kind of research in the radial astronomy field.

+

+

“We laid the groundwork for a partnership with a number of these institutions, and that partnership is alive and well,” Wilcots remarks, “so stay tuned for more of that, and we will be advancing that in the upcoming years.”

+

+

…

+

+

Watch a video recording of Eric Wilcots’ talk at HTCondor Week 2022.

+

+

+

+

+

+

+

+

+

+ European HTCondor Workshop: Abstract Submission Open

+

+

Share your experiences with HTCSS at the European HTCondor Workshop in Amsterdam!

+

+

See this recent post in htcondor-users for details.

+

+

+

+

+

+

+

+ Using HTC expanded scale of research using noninvasive measurements of tendons and ligaments

+

+

With this technique and the computing power of high throughput computing (HTC) combined, researchers can obtain thousands of simulations to study the pathology of tendons

+and ligaments.

+

+

A recent paper published in the Journal of the Mechanical Behavior of Biomedical Materials by former Ph.D.

+student in the Department of Mechanical Engineering (and current post-doctoral researcher at the University of Pennsylvania)

+Jonathon Blank and John Bollinger Chair of Mechanical Engineering

+Darryl Thelen used the Center for High Throughput Computing (CHTC) to obtain their results.

+Results that, Blank says, would not have been obtained at the same scale without HTC. “[This project], and a number of other projects, would have had a very small snapshot of the

+problem at hand, which would not have allowed me to obtain the understanding of shear waves that I did. Throughout my time at UW, I ran tens of thousands of simulations — probably

+even hundreds of thousands.”

+

+

+  + Post-doctoral researcher at the University of Pennsylvania Jonathon Blank.

+ Post-doctoral researcher at the University of Pennsylvania Jonathon Blank.

+

+

+

Using noninvasive sensors called shear wave tensiometers, researchers on this project applied HTC to study tendon structure and function. Currently, research in this field is hard

+to translate because most assessments of tendon and ligament structure-function relationships are performed on the benchtop in a lab, Blank explains. To translate the benchtop

+experiments into studying tendons in humans, the researchers use tensiometers as a measurement tool, and this study developed from trying to better understand these measurements

+and how they can be applied to humans. “Tendons are very complex materials from an engineering perspective. When stretched, they can bear loads far exceeding your body weight, and

+interestingly, even though they serve their roles in transmitting force from muscle to bone really well, the mechanisms that give rise to injury and pathology in these tissues aren’t

+well understood.”

+

+

+  + John Bollinger Chair of Mechanical Engineering Darryl Thelen.

+ John Bollinger Chair of Mechanical Engineering Darryl Thelen.

+

+

+

In living organisms, researchers have used tensiometers to study the loading of muscles and tendons, including the triceps surae, which connects to the Achilles tendon, Blank notes.

+Since humans are variable regarding the size, stiffness, composition, and length of their tendons or ligaments, it’s “challenging to use a model to accurately represent a parameter

+space of human biomechanics in the real world. High throughput computing is particularly useful for our field just because we can readily express that variability at a large scale”

+through HTC. With Thelen and Orthopedics and Rehabilitation assistant professor Josh Roth, Blank developed a pipeline for

+simulating shear wave propagation in tendons and ligaments with HTC, which Blank and Thelen used in the paper.

+

+

With HTC, the researchers of this paper were able to further explore the mechanistic causes of changes in wave speed. “The advantage of this technique is being able to fully explore

+an input space of different stiffnesses, geometries, microstructures, and applied forces. The advantage of the capabilities offered by the CHTC is that we can fill the entire input

+space, not just between two data points, and thereby study changes in shear wave speed due to physiological factors and the mechanical underpinning driving those changes,” Blank

+elaborates.

+

+

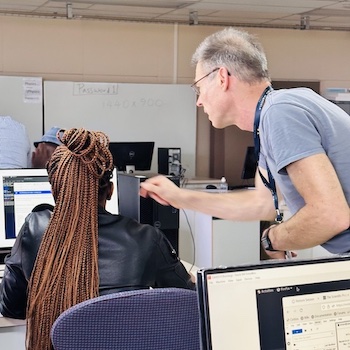

It wasn’t challenging to implement, Blank states, since facilitators were readily available to help and meet with him. When he first started using HTC, Blank attended the CHTC

+office hours to get answers to his questions, even during COVID-19; during this time, there were also numerous one-on-one meetings. Having this backbone of support from the CHTC

+research facilitators propelled Blank’s research and made it much easier. “For a lot of modeling studies, you’ll have this sparse input space where you change a couple of parameters

+and investigate the sensitivity of your model that way. But it’s hard to interpret what goes on in between, so the CHTC quite literally saved me a lot of time. There were some

+1,000 simulations in the paper, and HTC by scaling out the workload turned a couple thousand hours of simulation time into two or three hours of wall clock time. It’s a unique tool

+for this kind of research.”

+

+

The next step from this paper’s findings, Blank describes, is providing subject-specific measurements of wave speeds. This involves “understanding if when we use a tensiometer on

+someone’s Achilles tendon, for example, can we account for the tendon’s shape, size, injury status, etcetera — all of these variables matter when measuring shear wave speeds.”

+Researchers from the lab can then use wearable tensiometers to measure tension in the Achilles and other tendons to study human movement in the real world.

+

+

From his CHTC-supported studies, Blank learned how to design computational research, diagnose different parameter spaces, and manage data. “For my field, it [HTC] is very important

+because people are extremely variable — so our models should be too. The automation and capacity enabled by HTC makes it easy to understand whether our models are useful, and if

+they are, how best to tune them to inform human biomechanics,” Blank says.

+

+

+

+

+

+

+

+ About Our Approach

+

+

CHTC’s specialty is High

+Throughput Computing (HTC), which involves breaking up a single large

+computational task into many smaller tasks for the fastest overall

+turnaround. Most of our users find HTC to be invaluable

+in accelerating their computational work and thus their research.

+We support thousands of multi-core computers and use the task

+scheduling software called HTCondor, developed right here in Madison, to

+run thousands of independent jobs on as many total processors as

+possible. These computers, or “machines”, are distributed across several

+collections that we call pools (similar to “clusters”). Because machines are

+assigned to individual jobs, many users can be running jobs on a pool at any

+given time, all managed by HTCondor.

+

+

The diagram below shows some of the largest pools on campus and also

+shows our connection to the US-wide OS Pool where UW computing

+work can “backfill” available computers all over the country. The number

+under each resource name shows an approximate number of computing hours

+available to campus researchers for a typical week in Fall 2013. As

+demonstrated in the diagram, we help users to submit their work not only

+to our CHTC-owned machines, but to improve their throughput even further

+by seamlessly accessing as many available computers as possible, all

+over campus AND all over the country.

+

+

The vast majority of the computational work that campus researcher have

+is HTC, though we are happy to support researchers with a variety of

+beyond-the-desktop needs, including tightly-coupled computations (e.g.

+MPI), high-memory work (e.g. metagenomics), and specialized

+hardware like GPUs.

+

+

+

+

What kinds of applications run best in the CHTC?

+

+

“Pleasantly parallel” tasks, where many jobs can run independently,

+is what works best in the CHTC, and is what we can offer the greatest

+computational capacity for.

+Analyzing thousands of images, inferring statistical significance of hundreds of

+thousands of samples, optimizing an electric motor design with millions

+of constraints, aligning genomes, and performing deep linguistic search

+on a 30 TB sample of the internet are a few of the applications that

+campus researchers run every day in the CHTC. If you are not sure if

+your application is a good fit for CHTC resources, get in

+touch and we will be happy to help you figure it out.

+

+

Within a single compute system, we also support GPUs, high-memory

+servers, and specialized hardware owned by individual research groups.

+For tightly-coupled computations (e.g. MPI and similar programmed

+parallelization), our resources include an HPC Cluster, with faster

+inter-node networking.

+

+

How to Get Access

+

+

While you may be excited at the prospect of harnessing 100,000 compute

+hours a day for your research, the most valuable thing we offer is,

+well, us. We have a small, yet dedicated team of professionals who eat,

+breathe and sleep distributed computing. If you are a UW-Madison Researcher, you can request an

+account, and one of our dedicated Research Computing

+Facilitators will follow up to provide specific recommendations to

+accelerate YOUR science.

+

+

+

+

+

+

`-`` all receive top and bottom margins. We nuke the top\n// margin for easier control within type scales as it avoids margin collapsing.\n\n%heading {\n margin-top: 0; // 1\n margin-bottom: $headings-margin-bottom;\n font-family: $headings-font-family;\n font-style: $headings-font-style;\n font-weight: $headings-font-weight;\n line-height: $headings-line-height;\n color: $headings-color;\n}\n\nh1 {\n @extend %heading;\n @include font-size($h1-font-size);\n}\n\nh2 {\n @extend %heading;\n @include font-size($h2-font-size);\n}\n\nh3 {\n @extend %heading;\n @include font-size($h3-font-size);\n}\n\nh4 {\n @extend %heading;\n @include font-size($h4-font-size);\n}\n\nh5 {\n @extend %heading;\n @include font-size($h5-font-size);\n}\n\nh6 {\n @extend %heading;\n @include font-size($h6-font-size);\n}\n\n\n// Reset margins on paragraphs\n//\n// Similarly, the top margin on `

`s get reset. However, we also reset the\n// bottom margin to use `rem` units instead of `em`.\n\np {\n margin-top: 0;\n margin-bottom: $paragraph-margin-bottom;\n}\n\n\n// Abbreviations\n//\n// 1. Duplicate behavior to the data-bs-* attribute for our tooltip plugin\n// 2. Add the correct text decoration in Chrome, Edge, Opera, and Safari.\n// 3. Add explicit cursor to indicate changed behavior.\n// 4. Prevent the text-decoration to be skipped.\n\nabbr[title],\nabbr[data-bs-original-title] { // 1\n text-decoration: underline dotted; // 2\n cursor: help; // 3\n text-decoration-skip-ink: none; // 4\n}\n\n\n// Address\n\naddress {\n margin-bottom: 1rem;\n font-style: normal;\n line-height: inherit;\n}\n\n\n// Lists\n\nol,\nul {\n padding-left: 2rem;\n}\n\nol,\nul,\ndl {\n margin-top: 0;\n margin-bottom: 1rem;\n}\n\nol ol,\nul ul,\nol ul,\nul ol {\n margin-bottom: 0;\n}\n\ndt {\n font-weight: $dt-font-weight;\n}\n\n// 1. Undo browser default\n\ndd {\n margin-bottom: .5rem;\n margin-left: 0; // 1\n}\n\n\n// Blockquote\n\nblockquote {\n margin: 0 0 1rem;\n}\n\n\n// Strong\n//\n// Add the correct font weight in Chrome, Edge, and Safari\n\nb,\nstrong {\n font-weight: $font-weight-bolder;\n}\n\n\n// Small\n//\n// Add the correct font size in all browsers\n\nsmall {\n @include font-size($small-font-size);\n}\n\n\n// Mark\n\nmark {\n padding: $mark-padding;\n background-color: $mark-bg;\n}\n\n\n// Sub and Sup\n//\n// Prevent `sub` and `sup` elements from affecting the line height in\n// all browsers.\n\nsub,\nsup {\n position: relative;\n @include font-size($sub-sup-font-size);\n line-height: 0;\n vertical-align: baseline;\n}\n\nsub { bottom: -.25em; }\nsup { top: -.5em; }\n\n\n// Links\n\na {\n color: $link-color;\n text-decoration: $link-decoration;\n\n &:hover {\n color: $link-hover-color;\n text-decoration: $link-hover-decoration;\n }\n}\n\n// And undo these styles for placeholder links/named anchors (without href).\n// It would be more straightforward to just use a[href] in previous block, but that\n// causes specificity issues in many other styles that are too complex to fix.\n// See https://github.com/twbs/bootstrap/issues/19402\n\na:not([href]):not([class]) {\n &,\n &:hover {\n color: inherit;\n text-decoration: none;\n }\n}\n\n\n// Code\n\npre,\ncode,\nkbd,\nsamp {\n font-family: $font-family-code;\n @include font-size(1em); // Correct the odd `em` font sizing in all browsers.\n direction: ltr #{\"/* rtl:ignore */\"};\n unicode-bidi: bidi-override;\n}\n\n// 1. Remove browser default top margin\n// 2. Reset browser default of `1em` to use `rem`s\n// 3. Don't allow content to break outside\n\npre {\n display: block;\n margin-top: 0; // 1\n margin-bottom: 1rem; // 2\n overflow: auto; // 3\n @include font-size($code-font-size);\n color: $pre-color;\n\n // Account for some code outputs that place code tags in pre tags\n code {\n @include font-size(inherit);\n color: inherit;\n word-break: normal;\n }\n}\n\ncode {\n @include font-size($code-font-size);\n color: $code-color;\n word-wrap: break-word;\n\n // Streamline the style when inside anchors to avoid broken underline and more\n a > & {\n color: inherit;\n }\n}\n\nkbd {\n padding: $kbd-padding-y $kbd-padding-x;\n @include font-size($kbd-font-size);\n color: $kbd-color;\n background-color: $kbd-bg;\n @include border-radius($border-radius-sm);\n\n kbd {\n padding: 0;\n @include font-size(1em);\n font-weight: $nested-kbd-font-weight;\n }\n}\n\n\n// Figures\n//\n// Apply a consistent margin strategy (matches our type styles).\n\nfigure {\n margin: 0 0 1rem;\n}\n\n\n// Images and content\n\nimg,\nsvg {\n vertical-align: middle;\n}\n\n\n// Tables\n//\n// Prevent double borders\n\ntable {\n caption-side: bottom;\n border-collapse: collapse;\n}\n\ncaption {\n padding-top: $table-cell-padding-y;\n padding-bottom: $table-cell-padding-y;\n color: $table-caption-color;\n text-align: left;\n}\n\n// 1. Removes font-weight bold by inheriting\n// 2. Matches default `

` alignment by inheriting `text-align`.\n// 3. Fix alignment for Safari\n\nth {\n font-weight: $table-th-font-weight; // 1\n text-align: inherit; // 2\n text-align: -webkit-match-parent; // 3\n}\n\nthead,\ntbody,\ntfoot,\ntr,\ntd,\nth {\n border-color: inherit;\n border-style: solid;\n border-width: 0;\n}\n\n\n// Forms\n//\n// 1. Allow labels to use `margin` for spacing.\n\nlabel {\n display: inline-block; // 1\n}\n\n// Remove the default `border-radius` that macOS Chrome adds.\n// See https://github.com/twbs/bootstrap/issues/24093\n\nbutton {\n // stylelint-disable-next-line property-disallowed-list\n border-radius: 0;\n}\n\n// Explicitly remove focus outline in Chromium when it shouldn't be\n// visible (e.g. as result of mouse click or touch tap). It already\n// should be doing this automatically, but seems to currently be\n// confused and applies its very visible two-tone outline anyway.\n\nbutton:focus:not(:focus-visible) {\n outline: 0;\n}\n\n// 1. Remove the margin in Firefox and Safari\n\ninput,\nbutton,\nselect,\noptgroup,\ntextarea {\n margin: 0; // 1\n font-family: inherit;\n @include font-size(inherit);\n line-height: inherit;\n}\n\n// Remove the inheritance of text transform in Firefox\nbutton,\nselect {\n text-transform: none;\n}\n// Set the cursor for non-` |

+

+

+

+

+ |

+

+

+| |

+ |

+ |

+ |

+

+

+

+

+

+|

+

+

+ Feedback or content questions:

+ send email to "condor-admin" at the cs.wisc.edu server

+

+ Technical or accessibility issues:

+ chtc@cs.wisc.edu

+

+ |

+

+

+

+

+

+

diff --git a/preview-fall2024-info/includes/chtc_on_campus.png b/preview-fall2024-info/includes/chtc_on_campus.png

new file mode 100644

index 000000000..b77707bb1

Binary files /dev/null and b/preview-fall2024-info/includes/chtc_on_campus.png differ

diff --git a/preview-fall2024-info/includes/chtcusers.jpg b/preview-fall2024-info/includes/chtcusers.jpg

new file mode 100644

index 000000000..f647dbcaa

Binary files /dev/null and b/preview-fall2024-info/includes/chtcusers.jpg differ

diff --git a/preview-fall2024-info/includes/chtcusers_400.jpg b/preview-fall2024-info/includes/chtcusers_400.jpg

new file mode 100644

index 000000000..efa7e6675

Binary files /dev/null and b/preview-fall2024-info/includes/chtcusers_400.jpg differ

diff --git a/preview-fall2024-info/includes/chtcusers_L.jpg b/preview-fall2024-info/includes/chtcusers_L.jpg

new file mode 100644

index 000000000..fac6f5084

Binary files /dev/null and b/preview-fall2024-info/includes/chtcusers_L.jpg differ

diff --git a/preview-fall2024-info/includes/cron-generated/dynamic-resources-noedit.html b/preview-fall2024-info/includes/cron-generated/dynamic-resources-noedit.html

new file mode 100644

index 000000000..f4161e936

--- /dev/null

+++ b/preview-fall2024-info/includes/cron-generated/dynamic-resources-noedit.html

@@ -0,0 +1,39 @@

+| Pool/Mem | ≥1GB | ≥2GB | ≥4GB | ≥8GB | ≥16GB | ≥32GB | ≥64GB |

|---|

+| cm.chtc.wisc.edu |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+

+| condor.cs.wisc.edu |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+

+| condor.cae.wisc.edu |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+

+| Totals |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+0 |

+

|---|

+

+As of Mon Jun 12 07:30:02 CDT 2017

diff --git a/preview-fall2024-info/includes/jahns/Bundle Proximity Losses Paragraph.doc b/preview-fall2024-info/includes/jahns/Bundle Proximity Losses Paragraph.doc

new file mode 100644

index 000000000..2695061cf

Binary files /dev/null and b/preview-fall2024-info/includes/jahns/Bundle Proximity Losses Paragraph.doc differ

diff --git a/preview-fall2024-info/includes/jahns/Thumbs.db b/preview-fall2024-info/includes/jahns/Thumbs.db

new file mode 100644

index 000000000..dd3f84614

Binary files /dev/null and b/preview-fall2024-info/includes/jahns/Thumbs.db differ

diff --git a/preview-fall2024-info/includes/old.intro.html b/preview-fall2024-info/includes/old.intro.html

new file mode 100755

index 000000000..7b02b4a01

--- /dev/null

+++ b/preview-fall2024-info/includes/old.intro.html

@@ -0,0 +1,26 @@

+Research is a computationally expensive endeavor, demanding on any computing resources available. Quite often, a researcher will require resources for computations for short bursts of time, frequently leaving the computer idle. This often results in wasted potential computation time. This issue can be addressed by means of high-throughput computing.

+High-throughput computing allows for many computational tasks to be done over a long period of time. It is concerned largely with the number of compute resources that are available to people who wish to use the system. It is a very useful system for researchers, who are more concerned with the number of computations they can do over long spans of time than they are with short-burst computations. Because of its value to research computations, the Univeristy of Wisconsin set up the Center for High-Throughput Computing to bring researchers and compute resources together.

+The Center for High-Throughput Computing (CHTC), approved in August 2006, has numerous resources at its disposal to keep up with the computational needs of UW Madison. These resources are being funded by the National Institute of Health (NIH), the Department of Energy (DOE), the National Science Foundation (NSF), and various grants from the University itself. Email us to see what we can do to help automate your research project at chtc@cs.wisc.edu It aims to pull four different resources together into one operation:

+

+ HTC Technologies: The CHTC leans heavily on the HTCondor project to provide a framework where high-throughput computing can take place. The HTCondor project aims to make grid and high-throughput computing a reality in any number of environments.

Dedicated Resources: CHTC HTCondor pool

+ The CHTC cluster is now composed of 1900 cores for use by researches across our campus. These rack mounted blade systems run Linux. Each core is 2.8Ghz with 1.5GB RAM or better. CHTC has provided 10 million CPU hours of research computation between 05/17/2008 and 02/23/2010 prior to the additional 960 cores. With the recent server purchase, CHTC provides in excess of 37,000 CPU hours per day.

+

+

+ CHTC Policy Description here

+

+ Middleware: The GRIDS branch at UW Madison will be an essential part towards keeping the CHTC running efficiently. GRIDS is funded by the NSF Middleware Initiative (NMI). At the University of Wisconsin, the HTCondor project makes heavy use of this system with their NMI Build & Test facility. The NMI Build & Test facility provides a framework to build and test software on a wide variety of platform and hardware combinations.

Computing Laboratory: The University of Wisconsin has many compute clusters at its disposal. In 2004 the university won an award to build the Grid Laboratory of Wisconsin (GLOW). GLOW is an interdepartmental pool of HTCondor nodes, containing 3000 CPUs and about 1 PB of storage.

+

+The University of Wisconsin-Madison (UW-Madison) campus is an excellent match for meeting the computational needs of your project. Existing UW technology infrastructure that can be leveraged includes CPU capacity, network connectivity, storage availability, and middleware connectivity. But perhaps most important, the UW has significant staff experience and core competency in deploying, managing, and using computational technology.

+

+

+To reiterate: The UW launched and funded the Center for High Throughput Computing (CHTC), a campus-wide organization dedicated to supercharging research on campus by working side-by-side with you, the domain scientists on infusing high throughput computing and grid computing techniques into your routine. Between the CHTC and the aforementioned HTCondor Project, the UW is home to over 20 full-time staff with a proven track record of making compute middleware work for scientists. Far beyond just being familiar with deployment and use of such software, UW staff has been intimately involved in its design and implementation.

+

+

+Applications: Many researchers are already using these facilities. More information about a sampling of those using the CHTC can be found here.

+And less recent projects in CHTC Older projects.

+

+

diff --git a/preview-fall2024-info/includes/onerow b/preview-fall2024-info/includes/onerow

new file mode 100644

index 000000000..9d97cdfc9

--- /dev/null

+++ b/preview-fall2024-info/includes/onerow

@@ -0,0 +1,18 @@

+

+

+

+

+ |

+

+

+Professor

+

+

+1,401,361 CPU hours since 1/1/2009

+

+

+

+

+

+ |

+

diff --git a/preview-fall2024-info/includes/web.css b/preview-fall2024-info/includes/web.css

new file mode 100644

index 000000000..293031cab

--- /dev/null

+++ b/preview-fall2024-info/includes/web.css

@@ -0,0 +1,447 @@

+/* The following six styles set attibutes for heading tags H1-H6 */

+

+div.announcement {

+ border: 1px solid #787878;

+ background-color: #efefef;

+ color: #787878;

+ padding: .5em;

+ margin: 1em 2em 1em 2em;

+ }

+

+table.gtable {

+ background: #B70101;

+ padding: 5px 10px;

+ border-radius: 5px;

+ -moz-border-radius: 5px;

+ -webkit-border-radius: 5px;

+

+ color: #FFFFFF;

+ overflow: hidden;

+ margin-bottom: 20px;

+

+ background: #ddd;

+ color: #333;

+ border: 0;

+ border-bottom: 3px solid #bbb;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+}

+

+table.gtable img{

+ border-radius: 5px;

+ -moz-border-radius: 5px;

+ -webkit-border-radius: 5px;

+}

+

+table.gtable td {

+ padding: 0.6em 0.8em 0.6em 0.8em;

+ background-color: #ddd;

+ border-bottom: 1px solid #bbb;

+ border-top: 0px;

+ overflow: visible;

+}

+

+table.gtable th {

+ padding: 0.6em 0.8em 0.6em 0.8em;

+ background-color: #b70101;

+ color: #FFFFFF;

+ border: 0px;

+ border-bottom: 3px solid #920000;

+}

+

+H1 {

+ margin-bottom: -5px;

+ text-align: left;

+ color: #000000;

+ font-size: 180%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ line-height: 195%

+ }

+

+

+H2 {

+ margin-top: 20px;

+ margin-bottom: 0px;

+ text-align: left;

+ color: #000000;

+ font-size: 150%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ line-height: 180%

+ }

+

+

+

+H3 {

+ margin-top: 20px;

+ margin-bottom: -10px;

+ text-align: left;

+ color: #000000;

+ font-size: 120%;

+ line-height: 150%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ width: 100%

+ }

+

+

+H4 {

+ margin-top: 15px;

+ margin-bottom: -10px;

+ text-align: left;

+ color: #000000;

+ font-size: 100%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ width: 100%

+ }

+

+

+

+H5 {

+ margin-top: 10px;

+ margin-bottom: -10px;

+ text-align: left;

+ color: #000000;

+ font-size: 95%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ width: 100%

+ }

+

+

+H6 {

+ margin-top: 10px;

+ margin-bottom: -10px;

+ text-align: left;

+ color: #000000;

+ font-size: 95%;

+ font-weight: bold;

+ font-family: verdana, geneva, arial, sans-serif;

+ width: 100%

+ }

+

+body {

+ background-color: #eee;

+ font-family: Verdana, Arial, Helvetica,sans-serif;

+}

+

+#main {

+ background: #fff;

+ margin: 10px 5px;

+ padding: 20px;

+ min-height: 1300px;

+ border-bottom: 3px solid #bbb;

+ border-left: 1px solid #ddd;

+ border-right: 1px solid #ddd;

+ border-radius: 5px;

+ -moz-border-radius-right: 5px;

+ -webkit-border-radius-right: 5px;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+

+}

+

+.bgred {

+ background-color: #B70101;

+ -moz-border-top-right-radius: 10px;

+ -webkit-border-top-right-radius: 10px;

+ border-bottom-right-radius: 10px;

+ -moz-border-bottom-right-radius: 10px;

+ -webkit-border-bottom-right-radius: 10px;

+ margin: 10px 0px;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+}

+

+#copyright {

+font-family: Verdana, Arial, Helvetica, sans-serif;

+font-size: 75%;

+

+background: #ddd;

+color: #333;

+border: 1px solid #bbb;

+border-bottom: 3px solid #bbb;

+border-top: 0px;

+

+padding: 5px 10px;

+border-radius: 5px;

+-moz-border-radius: 5px;

+-webkit-border-radius: 5px;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+

+

+margin-top: 40px;

+}

+

+#copyright a {

+ color: #66a;

+}

+

+

+.navbodyblack {

+ font-family: Verdana,Arial, Helvetica, sans-serif;

+ color: #ffffff; background-color: #B70101;

+ text-decoration: none; padding-left: 8px;

+ padding-top: 5px; padding-bottom: 5px;

+ padding-right: 2px;

+ font-weight: normal;

+ margin: 0px;

+}

+

+.navbodyblack a:link {

+ color:#ffffff;

+ text-decoration: none;

+}

+

+.navbodyblack a:visited {

+ color:#ffffff;

+ text-decoration: none;

+}

+

+.navbodyblack a:hover {

+ color:#cc9900;

+ text-decoration: none;

+}

+

+code {

+ font-size: 120%;

+}

+

+pre {

+ border-radius: 5px;

+ -moz-border-radius: 5px;

+ -webkit-border-radius: 5px;

+ font-size: 120%;

+ margin: 1em 2em;

+

+ background: #ddd;

+ color: #333;

+ border: 1px solid #bbb;

+ border-bottom: 3px solid #bbb;

+ border-top: 0px;

+ border-left: 5px solid #b70101;

+ padding: 0.5em 1.2em;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+}

+ul.sidebar {

+ width = 20%;

+ font-size: 80%;

+ margin: 0.8em;

+}

+ul.sidebar,ul.sidebar ul {

+ padding: 0;

+ border: 0;

+ color: white;

+}

+ul.sidebar ul {

+ margin: 0 0.8em;

+}

+ul.sidebar a:link, ul.sidebar a:visited, ul.sidebar a:active {

+ color: white;

+ text-decoration: none;

+ display: block;

+}

+ul.sidebar a:hover, ul.sidebar a:active {

+ /*text-decoration: underline;

+ color:#cc9900; */

+ color: #b70101;

+ background-color: white;

+ transition: all linear 0.2s 0s;

+ position: relative;

+ left:5px;

+

+ border-left:5px solid #d41a1a;

+}

+ul.sidebar li {

+ font-size: 16px;

+ margin: 0;

+ margin-top: .5em;

+ padding: 2px 4px;

+ border: 0;

+ list-style-type: none;

+}

+ul.sidebar li a{

+ position: relative;

+ top: 0px;

+

+ transition: all linear 0.2s 0s;

+

+ padding: 5px 10px;

+ border-left:5px solid #d41a1a;

+ background: #d41a1a;

+

+ -moz-box-shadow: 0px 2px 4px 1px #9E0000;

+ -webkit-box-shadow: 0px 2px 4px 1px #9E0000;

+ box-shadow: 0px 2px 4px 1px #9E0000;

+

+ color: white;

+ border-radius: 5px;

+ -moz-border-radius: 5px;

+ -webkit-border-radius: 5px;

+}

+

+ul.sidebar ul li a{

+ font-size: 14px;

+

+}

+

+ul.sidebar li.spacer {

+}

+

+#tile-wrapper {

+ margin-left:auto;

+ margin-right:auto;

+ padding:20px;

+}

+

+a.tile {

+ display: inline-block;

+ position:relative;

+ width:800px;

+ text-decoration: none;

+

+ overflow:hidden;

+

+ margin-left:auto;

+ margin-right:auto;

+

+ font-size:75%;

+ margin: 10px;

+ background: #cdcdcd;

+ padding: 10px;

+ border-radius: 5px;

+ border-bottom: 5px solid #aaa;

+ border-top: 5px solid #ddd;

+ color: #555;

+

+ -moz-box-shadow: 0px 1px 8px 1px #bbb;

+ -webkit-box-shadow: 0px 1px 8px 1px #bbb;

+ box-shadow: 0px 1px 8px 1px #bbb;

+}

+

+a.tile:hover {

+ top:-5px;

+

+

+ -moz-box-shadow: 0px 6px 8px 1px #bbb;

+ -webkit-box-shadow: 0px 6px 8px 1px #bbb;

+ box-shadow: 0px 6px 8px 1px #bbb;

+

+}

+

+

+a.tile p{

+ display:inline-block;

+ width:84%;

+ height:65px;

+ float:right;

+

+ text-align: left;

+

+ background: #fff;

+ color: #000;

+ padding: 10px;

+

+ font-size: 10px;

+

+ border-color: #fff;

+ background-color: #fff;

+ padding: 10px;

+ margin:5px;

+ border-radius: 5px;

+}

+

+a.tile img{

+ width:75px;

+ float:left;

+

+ display:inline-block;

+

+ border-color: #fff;

+ background-color: #fff;

+ padding: 5px;

+ margin:5px;

+ border-radius: 5px;

+}

+

+a.tile h2{

+ text-decoration: none;

+ color:#555;

+ margin:0px 5px;

+ font-size: 140%;

+}

+

+#hours {

+ width: 120px;

+ height: 92px;

+ font-size:75%;

+ margin-left:10px;

+ float: right;

+ background: #B70101;

+ padding: 5px 10px;

+ border-radius: 5px;

+ border-bottom: 5px solid #920000;

+ border-top: 5px solid #d41a1a;

+ color: #fff;

+

+ -moz-box-shadow: 0px 1px 4px 1px #bbb;

+ -webkit-box-shadow: 0px 1px 4px 1px #bbb;

+ box-shadow: 0px 1px 4px 1px #bbb;

+}

+

+#osg_power {

+ height: 92px;

+ margin-left:10px;

+ float: right;

+ background: #F29B12;

+ padding: 5px 10px;

+ border-radius: 5px;

+ border-bottom: 5px solid #EF7821;

+ border-top: 5px solid #FDC10A;

+ color: #fff;

+

+ -moz-box-shadow: 0px 1px 4px 1px #bbb;

+ -webkit-box-shadow: 0px 1px 4px 1px #bbb;

+ box-shadow: 0px 1px 4px 1px #bbb;

+}

+

+#osg_power img {

+ border-radius: 5px;

+}

+

+p.underconstruction {

+ border: 1px solid #666;

+ background-color: #FFA;

+ padding: 0.1em 0.5em;

+ margin-left: 2em;

+ margin-right: 2em;

+ font-style: italic;

+

+ -moz-box-shadow: 0px 2px 7px 1px #bbb;

+ -webkit-box-shadow: 0px 2px 7px 1px #bbb;

+ box-shadow: 0px 2px 7px 1px #bbb;

+}

+.num {

+ text-align: right;

+}

+table {

+ border-collapse: collapse;

+}

+td,tr {

+ padding-left: 0.2em;

+ padding-right: 0.2em;

+}

diff --git a/preview-fall2024-info/index.html b/preview-fall2024-info/index.html

new file mode 100644

index 000000000..05f7609c5

--- /dev/null

+++ b/preview-fall2024-info/index.html

@@ -0,0 +1,746 @@

+

+

+

+

+

+

+Home

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

CHTC is hiring, view the new position on the jobs page and apply now!

+ View Job Posting

+

+

+

+

+  +

+

+

+

+

+

+

+

+ The Center for High Throughput Computing (CHTC), established in 2006, aims to bring the power

+ of High Throughput Computing to all fields of research, and to allow the future of HTC to be shaped

+ by insight from all fields.

+

+

+

+

+

+

+ Are you a UW-Madison researcher looking to expand your computing beyond your local resources? Request

+ an account now to take advantage of the open computing services offered by the CHTC!

+

+

+

+

+

+

+ High Throughput Computing is a collection of principles and techniques which maximize the effective throughput

+ of computing resources towards a given problem. When applied for scientific computing, HTC can result in

+ improved use of a computing resource, improved automation, and help drive the scientific problem forward.

+

+

+ The team at CHTC develops technologies and services for HTC. CHTC is the home of the HTCondor Software

+ Suite which has over 30 years of experience in tackling HTC problems;

+ it manages shared computing resources

+ for researchers on the UW-Madison campus; and it leads the OSG Consortium,

+ a national-scale environment for distributed HTC.

+

+

+

+

+

+

+ Last Year Serving UW-Madison

+

+

+

+

+

+

+

+

+

+ HTCSS

+

+

+

+

The HTCondor Software Suite (HTCSS) provides sites and users with the ability to manage and execute

+HTC workloads. Whether it’s managing a single laptop or 250,000 cores at CERN, HTCondor

+helps solve computational problems through the application of the HTC principles.

+

+

+

+

+

+

+

+

+

+

+

+

+ Services

+

+

+

+

+

CHTC manages over 20,000 cores and dozens of GPUs for the UW-Madison

+campus; this resource, which is free and shared, aims to advance the

+mission of the University of Wisconsin in support of the Wisconsin