+

+

+ +

+

+ -

-

-

- +🤖🔬 **PathML: Tools for computational pathology**

+[](https://pepy.tech/project/pathml)

[](https://pathml.readthedocs.io/en/latest/?badge=latest)

+[](https://codecov.io/gh/Dana-Farber-AIOS/pathml)

[](https://github.com/psf/black)

[](https://pypi.org/project/pathml/)

-[](https://pepy.tech/project/pathml)

-[](https://codecov.io/gh/Dana-Farber-AIOS/pathml)

+

+⭐ **PathML's objective is to lower the barrier to entry to digital pathology**

+

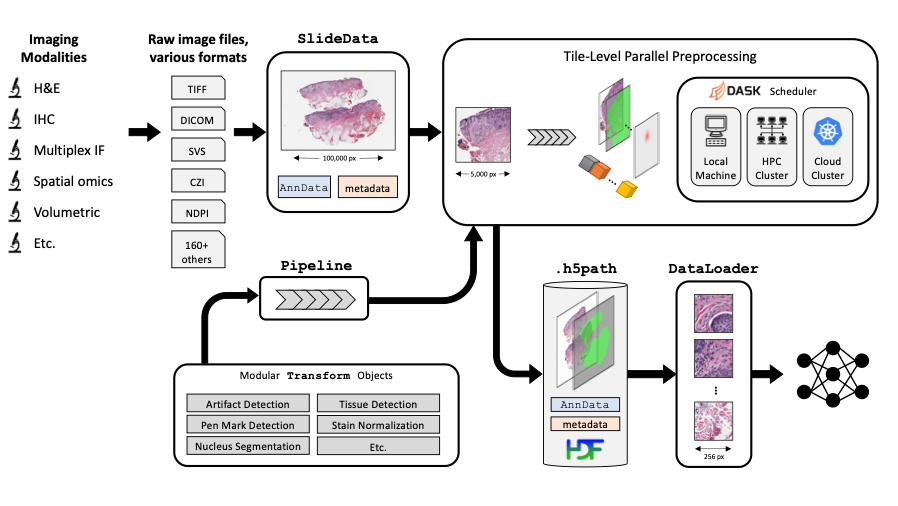

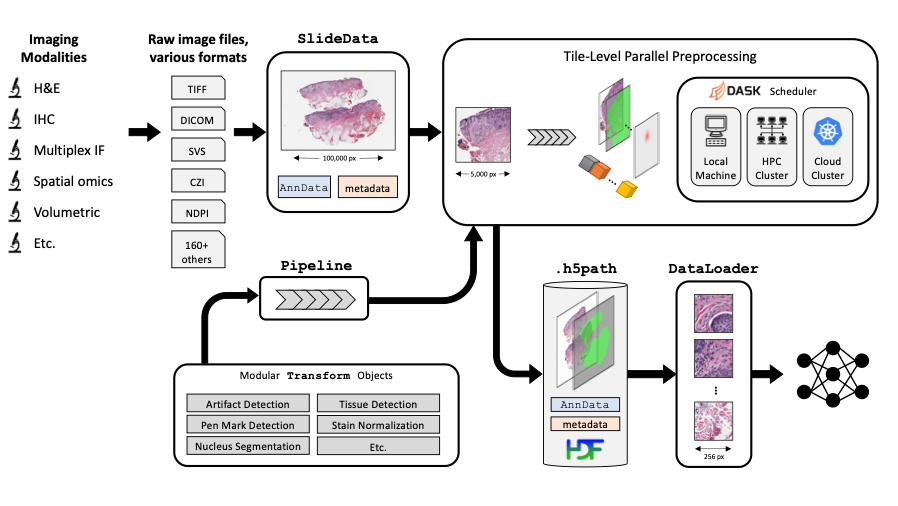

+Imaging datasets in cancer research are growing exponentially in both quantity and information density. These massive datasets may enable derivation of insights for cancer research and clinical care, but only if researchers are equipped with the tools to leverage advanced computational analysis approaches such as machine learning and artificial intelligence. In this work, we highlight three themes to guide development of such computational tools: scalability, standardization, and ease of use. We then apply these principles to develop PathML, a general-purpose research toolkit for computational pathology. We describe the design of the PathML framework and demonstrate applications in diverse use cases.

+

+🚀 **The fastest way to get started?**

+

+ docker pull pathml/pathml && docker run -it -p 8888:8888 pathml/pathml

| Branch | Test status |

| ------ | ------------- |

| master |  |

| dev |  |

-An open-source toolkit for computational pathology and machine learning.

+ +

+

+

+ **View [documentation](https://pathml.readthedocs.io/en/latest/)**

@@ -125,6 +133,24 @@ Note that these instructions assume that there are no other processes using port

Please refer to the `Docker run` [documentation](https://docs.docker.com/engine/reference/run/) for further instructions

on accessing the container, e.g. for mounting volumes to access files on a local machine from within the container.

+## Option 4: Google Colab

+

+To get PathML running in a Colab environment:

+

+````

+import os

+!pip install openslide-python

+!apt-get install -y openslide-tools

+!apt-get install openjdk-8-jdk-headless -qq > /dev/null

+os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"

+!update-alternatives --set java /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java

+!java -version

+!pip install pathml

+````

+

+*Thanks to all of our open-source collaborators for helping maintain these installation instructions!*

+*Please open an issue for any bugs or other problems during the installation process.*

+
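After running the Colab cell above, you can confirm from Python that the Java setup is visible. The Bio-Formats/javabridge dependencies listed in `environment.yml` need this setup. The helper below is a hypothetical, standard-library-only sketch and is not part of PathML. It only reports whether `JAVA_HOME` points to an existing directory and whether a `java` executable can be found on `PATH`, which is what the steps above configure.

```python
import os
import shutil


def java_setup_report(env=None):
    """Summarize the Java configuration visible to this Python process.

    Hypothetical helper (not part of PathML). `env` defaults to os.environ and can be
    any mapping, which makes the check easy to test.
    """
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME")
    return {
        "JAVA_HOME": java_home,
        # True only if JAVA_HOME is set and points to an existing directory
        "JAVA_HOME exists": bool(java_home) and os.path.isdir(java_home),
        # shutil.which returns None when no `java` executable is found on the search path
        "java on PATH": shutil.which("java", path=env.get("PATH")) is not None,
    }


for key, value in java_setup_report().items():
    print(f"{key}: {value}")
```

If either check prints `False`, re-run the `JAVA_HOME` and `update-alternatives` lines before installing PathML.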

## CUDA

To use GPU acceleration for model training or other tasks, you must install CUDA.
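Once CUDA is installed, you can confirm that PyTorch can actually see the GPU with `torch.cuda.is_available()`. This is the same check the HoVer-Net example notebook uses to choose between `cuda:0` and `cpu`. The helper name below is hypothetical. It imports PyTorch lazily, so it also runs, and says so, when PyTorch is missing.

```python
def describe_compute_device():
    """Return a short description of the device PyTorch would use."""
    try:
        import torch  # imported lazily so the check also runs where PyTorch is absent
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Name of the first visible GPU, i.e. the one addressed as "cuda:0"
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "CUDA not available; PyTorch will run on the CPU"


print(describe_compute_device())
```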

@@ -191,12 +217,36 @@ See [contributing](https://github.com/Dana-Farber-AIOS/pathml/blob/master/CONTRI

# Citing

-If you use `PathML` in your work, please cite our paper:

+If you use `PathML`, please cite:

+

+- [**J. Rosenthal et al., "Building tools for machine learning and artificial intelligence in cancer research: best practices and a case study with the PathML toolkit for computational pathology." Molecular Cancer Research, 2022.**](https://doi.org/10.1158/1541-7786.MCR-21-0665)

+

+So far, PathML has been used in the following manuscripts:

+

+- [J. Linares et al. **Molecular Cell** 2021](https://www.cell.com/molecular-cell/fulltext/S1097-2765(21)00729-2)

+- [A. Shmatko et al. **Nature Cancer** 2022](https://www.nature.com/articles/s43018-022-00436-4)

+- [J. Pocock et al. **Communications Medicine** 2022](https://www.nature.com/articles/s43856-022-00186-5)

+- [S. Orsulic et al. **Frontiers in Oncology** 2022](https://www.frontiersin.org/articles/10.3389/fonc.2022.924945/full)

+- [D. Brundage et al. **arXiv** 2022](https://arxiv.org/abs/2203.13888)

+- [A. Marcolini et al. **SoftwareX** 2022](https://www.sciencedirect.com/science/article/pii/S2352711022001558)

+- [M. Rahman et al. **Bioengineering** 2022](https://www.mdpi.com/2306-5354/9/8/335)

+- [C. Lama et al. **bioRxiv** 2022](https://www.biorxiv.org/content/10.1101/2022.09.28.509751v1.full)

+- the list continues [**here 🔗 for 2023 and onwards**](https://scholar.google.com/scholar?oi=bibs&hl=en&cites=1157052756975292108)

+

+# Users

+

+

+

+| This is where in the world our most enthusiastic supporters are located:

+ +  +

+ |

+and this is where they work:

+ +  +

+ |

+

diff --git a/docs/readthedocs-requirements.txt b/docs/readthedocs-requirements.txt

index 831e134d..0e8f22d3 100644

--- a/docs/readthedocs-requirements.txt

+++ b/docs/readthedocs-requirements.txt

@@ -1,7 +1,7 @@

-sphinx==4.3.2

+sphinx==7.1.2

nbsphinx==0.8.8

nbsphinx-link==1.3.0

-sphinx-rtd-theme==1.0.0

-sphinx-autoapi==1.8.4

-ipython==7.31.1

+sphinx-rtd-theme==1.3.0

+sphinx-autoapi==3.0.0

+ipython==8.10.0

sphinx-copybutton==0.4.0

diff --git a/environment.yml b/environment.yml

index 22754c02..513592b7 100644

--- a/environment.yml

+++ b/environment.yml

@@ -1,33 +1,37 @@

name: pathml

channels:

- - conda-forge

- pytorch

+ - conda-forge

dependencies:

- pip==21.3.1

- - python==3.8

- - numpy==1.19.5

- - scipy==1.7.3

- - scikit-image==0.18.3

+ - numpy # orig = 1.19.5

+ - scipy # orig = 1.7.3

+ - scikit-image # orig = 0.18.3

- matplotlib==3.5.1

- - python-spams==2.6.1

- - openjdk==8.0.152

- - pytorch==1.10.1

+ - openjdk<=18.0.0

+ - pytorch==1.13.1 # orig = 1.10.1

- h5py==3.1.0

- dask==2021.12.0

- pydicom==2.2.2

- - pytest==6.2.5

+ - pytest==7.4.0 # orig = 6.2.5

- pre-commit==2.16.0

- coverage==5.5

+ - networkx==3.1

- pip:

- python-bioformats==4.0.0

- python-javabridge==4.0.0

- - protobuf==3.20.1

- - deepcell==0.11.0

+ - protobuf==3.20.3

+ - deepcell==0.12.7 # orig = 0.11.0

+ - onnx==1.14.0

+ - onnxruntime==1.15.1

- opencv-contrib-python==4.5.3.56

- - openslide-python==1.1.2

+ - openslide-python==1.2.0

- scanpy==1.8.2

- anndata==0.7.8

- tqdm==4.62.3

- loguru==0.5.3

+ - pandas==1.5.2 # orig no req

+ - torch-geometric==2.3.1

+ - jpype1
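You can compare the exact `pip:` pins above against an already-installed environment using `importlib.metadata` from the Python standard library (3.8+). The sketch below is a hypothetical helper, not part of PathML. It behaves as follows:

- It only handles `name==version` pins.
- It skips unpinned entries such as `jpype1`.
- It ignores trailing `# orig = ...` comments.

Conda-only entries such as `pytorch` or `openjdk` are either not pip distributions or are installed under a different name (`torch`), so pass pip distribution names. Name normalization (`-` vs `_`) may also vary between Python versions.

```python
from importlib.metadata import PackageNotFoundError, version


def find_pin_mismatches(pins, lookup=version):
    """Compare exact `name==version` pins with the installed distributions.

    Hypothetical helper, not part of PathML. Returns {name: (pinned, installed)} for every
    pin that does not match; `installed` is None when the distribution is missing.
    Unpinned entries (no "==") are skipped, and trailing "# ..." comments are ignored.
    """
    mismatches = {}
    for spec in pins:
        name, sep, pinned = spec.partition("==")
        if not sep:
            continue  # e.g. "jpype1": nothing to compare against
        name = name.strip()
        pinned = pinned.split("#", 1)[0].strip()
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


print(find_pin_mismatches(["onnx==1.14.0", "onnxruntime==1.15.1", "openslide-python==1.2.0"]))
```

An empty dict means every listed pin matches the installed version.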

diff --git a/examples/construct_graphs.ipynb b/examples/construct_graphs.ipynb

new file mode 100644

index 00000000..0b10bc84

--- /dev/null

+++ b/examples/construct_graphs.ipynb

@@ -0,0 +1,494 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "id": "14070544-7803-40fb-8f4b-99724b49f224",

+ "metadata": {},

+ "source": [

+ "# PathML Graph construction and processing "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "8886bc5f-83db-4abe-97e0-b8bc9b3aab56",

+ "metadata": {},

+ "source": [

+ "In this notebook, we will demonstrate the ability of the new pathml.graph API to construct cell and tissue graphs. Specifically, we will do the following:\n",

+ "\n",

+ "1. Use a pre-trained HoVer-Net model to detect cells in a given Region of Interest (ROI)\n",

+ "2. Use boundary detection techniques to detect tissues in a given ROI\n",

+ "3. Featurize the detected cell and tissue patches using a ResNet model\n",

+ "4. Construct cell graphs using the k-Nearest Neighbour (k-NN) method and tissue graphs using the Region Adjacency Graph (RAG) method, and save them to disk with `torch.save`.\n",

+ "\n",

+ "To get the full functionality of this notebook on a real-world dataset, we suggest downloading the BRACS ROI set from the [BRACS dataset](https://www.bracs.icar.cnr.it/download/). To do so, you will have to sign up and create an account. Then, replace the root folder in this tutorial with the directory you downloaded the BRACS dataset to. \n",

+ "\n",

+ "In this notebook, we use a representative image from the [HACT-Net repository](https://github.com/histocartography/hact-net/tree/main/data), stored in `data/`."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "a2399d96-abf7-46b6-b783-c4c292259bdc",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "from glob import glob\n",

+ "import argparse\n",

+ "from PIL import Image\n",

+ "import numpy as np\n",

+ "from tqdm import tqdm\n",

+ "import torch \n",

+ "import h5py\n",

+ "import warnings\n",

+ "import math\n",

+ "from skimage.measure import regionprops, label\n",

+ "import networkx as nx\n",

+ "import traceback\n",


+ "\n",

+ "from pathml.core import HESlide, SlideData\n",

+ "import matplotlib.pyplot as plt \n",

+ "from pathml.preprocessing.transforms import Transform\n",


+ "from pathml.preprocessing import Pipeline, BoxBlur, TissueDetectionHE, NucleusDetectionHE\n",

+ "import pathml.core.tile\n",

+ "from pathml.ml import HoVerNet, loss_hovernet, post_process_batch_hovernet\n",

+ "\n",

+ "from pathml.datasets.utils import DeepPatchFeatureExtractor\n",

+ "from pathml.preprocessing import StainNormalizationHE\n",

+ "from pathml.graph import RAGGraphBuilder, KNNGraphBuilder\n",

+ "from pathml.graph import ColorMergedSuperpixelExtractor\n",

+ "from pathml.graph.utils import _valid_image, _exists, plot_graph_on_image, get_full_instance_map, build_assignment_matrix"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "ee47ddb6-2edf-42b5-9227-65c9ac1ecbf1",

+ "metadata": {},

+ "source": [

+ "## Building a HoverNetNucleusDetectionHE class using the `pathml.preprocessing.transforms` API"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "5d58d8d0-e7a9-4e92-bfd0-2ec1cecafcf6",

+ "metadata": {},

+ "source": [

+ "First, we will use a pre-trained HoVer-Net model to detect cells and return an instance map containing masks that correspond to cells. We build on the `Transform` base class from `pathml.preprocessing.transforms`, which applies a function to each tile. The new `HoverNetNucleusDetectionHE` class inherits from `Transform` and applies a HoVer-Net model to each tile passed to it. \n",

+ "\n",

+ "To obtain the pre-trained HoVer-Net model, we follow the steps in this [tutorial](https://pathml.readthedocs.io/en/latest/examples/link_train_hovernet.html). For simplicity, we provide a pre-trained model under the `pretrained_models` folder. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e0358a83-e93a-4525-a7bb-f697e2252ce7",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "class HoverNetNucleusDetectionHE(Transform):\n",
+ "    \"\"\"\n",
+ "    Nucleus detection algorithm for H&E stained images using a pre-trained HoVer-Net model.\n",
+ "\n",
+ "    Args:\n",
+ "        mask_name (str): Name of mask that is created.\n",
+ "        model_path (str): Path to the pretrained model.\n",
+ "\n",
+ "    References:\n",
+ "        Graham, S., Vu, Q.D., Raza, S.E.A., Azam, A., Tsang, Y.W., Kwak, J.T. and Rajpoot, N., 2019.\n",
+ "        Hover-net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images.\n",
+ "        Medical image analysis, 58, p.101563.\n",
+ "    \"\"\"\n",
+ "\n",
+ "    def __init__(self, mask_name, model_path=None):\n",
+ "        self.mask_name = mask_name\n",
+ "\n",
+ "        cuda = torch.cuda.is_available()\n",
+ "        self.device = torch.device(\"cuda:0\" if cuda else \"cpu\")\n",
+ "\n",
+ "        if model_path is None:\n",
+ "            raise NotImplementedError(\"Downloadable models not available\")\n",
+ "\n",
+ "        # map_location lets a checkpoint saved on a GPU be loaded on a CPU-only machine\n",
+ "        checkpoint = torch.load(model_path, map_location=self.device)\n",
+ "        self.model = HoVerNet(n_classes=6)\n",
+ "        self.model.load_state_dict(checkpoint)\n",
+ "\n",
+ "        self.model = self.model.to(self.device)\n",
+ "        self.model.eval()\n",
+ "\n",
+ "    def F(self, image):\n",
+ "        assert (\n",
+ "            image.dtype == np.uint8\n",
+ "        ), f\"Input image dtype {image.dtype} must be np.uint8\"\n",
+ "\n",
+ "        # H x W x C uint8 array -> 1 x C x H x W float tensor on the model's device\n",
+ "        image = torch.from_numpy(image).float()\n",
+ "        image = image.permute(2, 0, 1)\n",
+ "        image = image.unsqueeze(0)\n",
+ "        image = image.to(self.device)\n",
+ "        with torch.no_grad():\n",
+ "            out = self.model(image)\n",
+ "            preds_detection, _ = post_process_batch_hovernet(out, n_classes=6)\n",
+ "        # Move the batch axis last: (1, H, W) -> (H, W, 1)\n",
+ "        preds_detection = preds_detection.transpose(1, 2, 0)\n",
+ "        return preds_detection\n",
+ "\n",
+ "    def apply(self, tile):\n",
+ "        assert isinstance(\n",
+ "            tile, pathml.core.tile.Tile\n",
+ "        ), f\"tile is type {type(tile)} but must be pathml.core.tile.Tile\"\n",
+ "        assert (\n",
+ "            self.mask_name is not None\n",
+ "        ), \"mask_name is None. Must supply a valid mask name\"\n",
+ "        assert (\n",
+ "            tile.slide_type.stain == \"HE\"\n",
+ "        ), f\"Tile has slide_type.stain={tile.slide_type.stain}, but must be 'HE'\"\n",
+ "\n",
+ "        nucleus_mask = self.F(tile.image)\n",
+ "        tile.masks[self.mask_name] = nucleus_mask"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "3c2e1bc1-6825-44b6-8909-8d47574295ed",

+ "metadata": {},

+ "source": [

+ "A simple example of using this class is given below. The input image is in the `data` folder. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "a2bbe1db-17b0-4ddf-8e74-c338bae2c740",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "wsi = SlideData('../data/example_0_N_0.png', name = 'example', backend = \"openslide\", stain = 'HE')\n",

+ "region = wsi.slide.extract_region(location = (900, 800), size = (256, 256))\n",

+ "plt.imshow(region)\n",

+ "plt.title('Input image', fontsize=11)\n",

+ "plt.gca().set_xticks([])\n",

+ "plt.gca().set_yticks([])\n",

+ "plt.show()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "d098e3eb-a32f-4d67-88bc-a056fe0207e6",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "nuclei_detect = HoverNetNucleusDetectionHE(mask_name = 'cell', model_path = '../pretrained_models/hovernet_fully_trained.pt')\n",

+ "cell_mask = nuclei_detect.F(region)\n",

+ "plt.imshow(cell_mask)\n",

+ "plt.title('Nuclei mask', fontsize=11)\n",

+ "plt.gca().set_xticks([])\n",

+ "plt.gca().set_yticks([])\n",

+ "plt.show()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "b84c3b68-9028-4724-97ad-6d9934e0ce2e",

+ "metadata": {},

+ "source": [

+ "## Cell and Tissue graph construction"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "993b2866-1c88-485c-810b-60f0be998174",

+ "metadata": {},

+ "source": [

+ "Next, we define a function that uses the new `pathml.graph` API to construct cell and tissue graphs.\n",
+ "\n",
+ "We start by defining some constants. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b9377c10-d38c-4bea-ada9-aaff38cd92a9",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Convert the tumor type given in the filename to a label\n",

+ "TUMOR_TYPE_TO_LABEL = {\n",

+ " 'N': 0,\n",

+ " 'PB': 1,\n",

+ " 'UDH': 2,\n",

+ " 'ADH': 3,\n",

+ " 'FEA': 4,\n",

+ " 'DCIS': 5,\n",

+ " 'IC': 6\n",

+ "}\n",

+ "\n",

+ "# Define the minimum and maximum number of pixels for an ROI to be processed\n",

+ "MIN_NR_PIXELS = 50000\n",

+ "MAX_NR_PIXELS = 50000000 \n",

+ "\n",

+ "# Define the reference image and HoVer-Net model path\n",

+ "ref_path = '../data/example_0_N_0.png'\n",

+ "hovernet_model_path = '../pretrained_models/hovernet_fully_trained.pt'\n",

+ "\n",

+ "# Define the patch size for applying HoverNetNucleusDetectionHE \n",

+ "PATCH_SIZE = 256"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d7b5798f-3d93-4179-9bdc-8ae80b38573a",

+ "metadata": {},

+ "source": [

+ "Next, we write the main preprocessing loop as a function. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "2aa61a77-b882-4161-8fb5-72e57ad2be17",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def process(image_path, save_path, split, plot=True, overwrite=False):\n",
+ "    # 1. Collect the PNG images in image_path and its immediate subdirectories\n",
+ "    subdirs = os.listdir(image_path)\n",
+ "    image_fnames = []\n",
+ "    for subdir in (subdirs + ['']):\n",
+ "        image_fnames += glob(os.path.join(image_path, subdir, '*.png'))\n",
+ "\n",
+ "    image_ids_failing = []\n",
+ "\n",
+ "    print('*** Start analysing {} image(s) ***'.format(len(image_fnames)))\n",
+ "\n",
+ "    ref_image = np.array(Image.open(ref_path))\n",
+ "    norm = StainNormalizationHE(stain_estimation_method='vahadane')\n",
+ "    norm.fit_to_reference(ref_image)\n",
+ "    ref_stain_matrix = norm.stain_matrix_target_od\n",
+ "    ref_max_C = norm.max_c_target\n",
+ "\n",
+ "    for image_path in tqdm(image_fnames):\n",
+ "\n",
+ "        # a. Load the image\n",
+ "        _, image_name = os.path.split(image_path)\n",
+ "        image = np.array(Image.open(image_path))\n",
+ "\n",
+ "        # Compute the number of pixels in the image and get its label from the filename\n",
+ "        nr_pixels = image.shape[0] * image.shape[1]\n",
+ "        image_label = TUMOR_TYPE_TO_LABEL[image_name.split('_')[2]]\n",
+ "\n",
+ "        # Get the output file paths of cell graphs, tissue graphs and assignment matrices\n",
+ "        cg_out = os.path.join(save_path, 'cell_graphs', split, image_name.replace('.png', '.pt'))\n",
+ "        tg_out = os.path.join(save_path, 'tissue_graphs', split, image_name.replace('.png', '.pt'))\n",
+ "        assign_out = os.path.join(save_path, 'assignment_matrices', split, image_name.replace('.png', '.pt'))\n",
+ "\n",
+ "        # Process only if the outputs do not exist yet (or overwrite=True) and the image is neither too small nor too big\n",
+ "        if not _exists(cg_out, tg_out, assign_out, overwrite) and _valid_image(nr_pixels):\n",
+ "\n",
+ "            print(f'Image size: {image.shape[0], image.shape[1]}')\n",
+ "\n",
+ "            if plot:\n",
+ "                print('Input ROI:')\n",
+ "                plt.imshow(image)\n",
+ "                plt.show()\n",
+ "\n",
+ "            try:\n",
+ "                # Read the image as a pathml.core.SlideData object\n",
+ "                print('\\nReading image')\n",
+ "                wsi = SlideData(image_path, name = image_path, backend = \"openslide\", stain = 'HE')\n",
+ "\n",
+ "                # Apply our HoverNetNucleusDetectionHE as a pathml.preprocessing.Pipeline over all patches\n",
+ "                print('Detecting nuclei')\n",
+ "                pipeline = Pipeline([HoverNetNucleusDetectionHE(mask_name='cell',\n",
+ "                                                                model_path=hovernet_model_path)])\n",
+ "\n",
+ "                # Run the Pipeline\n",
+ "                wsi.run(pipeline, overwrite_existing_tiles=True, distributed=False, tile_pad=True, tile_size=PATCH_SIZE)\n",
+ "\n",
+ "                # Extract the ROI image, nuclei instance map and nuclei centroids from the pathml.core.SlideData object\n",
+ "                image, nuclei_map, nuclei_centroid = get_full_instance_map(wsi, patch_size = PATCH_SIZE)\n",
+ "\n",
+ "                # Use a ResNet-34 to extract features from each detected cell in the ROI\n",
+ "                print('Extracting features from cells')\n",
+ "                extractor = DeepPatchFeatureExtractor(patch_size=64,\n",
+ "                                                      batch_size=64,\n",
+ "                                                      entity = 'cell',\n",
+ "                                                      architecture='resnet34',\n",
+ "                                                      fill_value=255,\n",
+ "                                                      resize_size=224,\n",
+ "                                                      threshold=0)\n",
+ "                features = extractor.process(image, nuclei_map)\n",
+ "\n",
+ "                # Build a kNN graph with cells as nodes, ResNet-34 features as node features,\n",
+ "                # and edges restricted to a distance threshold of 50\n",
+ "                print('Building graphs')\n",
+ "                knn_graph_builder = KNNGraphBuilder(k=5, thresh=50, add_loc_feats=True)\n",
+ "                cell_graph = knn_graph_builder.process(nuclei_map, features, target = image_label)\n",
+ "\n",
+ "                # Plot the cell graph on the ROI image\n",
+ "                if plot:\n",
+ "                    print('Cell graph on ROI:')\n",
+ "                    plot_graph_on_image(cell_graph, image)\n",
+ "\n",
+ "                # Save the cell graph\n",
+ "                torch.save(cell_graph, cg_out)\n",
+ "\n",
+ "                # Detect tissue regions using the pathml.graph.ColorMergedSuperpixelExtractor class\n",
+ "                print('\\nDetecting tissue')\n",
+ "                tissue_detector = ColorMergedSuperpixelExtractor(superpixel_size=200,\n",
+ "                                                                 compactness=20,\n",
+ "                                                                 blur_kernel_size=1,\n",
+ "                                                                 threshold=0.05,\n",
+ "                                                                 downsampling_factor=4)\n",
+ "\n",
+ "                superpixels, _ = tissue_detector.process(image)\n",
+ "\n",
+ "                # Use a ResNet-34 to extract features from each detected tissue region in the ROI\n",
+ "                print('Extracting features from tissues')\n",
+ "                tissue_feature_extractor = DeepPatchFeatureExtractor(architecture='resnet34',\n",
+ "                                                                     patch_size=144,\n",
+ "                                                                     entity = 'tissue',\n",
+ "                                                                     resize_size=224,\n",
+ "                                                                     fill_value=255,\n",
+ "                                                                     batch_size=32,\n",
+ "                                                                     threshold = 0.25)\n",
+ "                features = tissue_feature_extractor.process(image, superpixels)\n",
+ "\n",
+ "                # Build a RAG with tissue regions as nodes, ResNet-34 features as node features,\n",
+ "                # and edges between adjacent regions\n",
+ "                print('Building graphs')\n",
+ "                rag_graph_builder = RAGGraphBuilder(add_loc_feats=True)\n",
+ "                tissue_graph = rag_graph_builder.process(superpixels, features, target = image_label)\n",
+ "\n",
+ "                # Plot the tissue graph on the ROI image\n",
+ "                if plot:\n",
+ "                    print('Tissue graph on ROI:')\n",
+ "                    plot_graph_on_image(tissue_graph, image)\n",
+ "\n",
+ "                # Save the tissue graph\n",
+ "                torch.save(tissue_graph, tg_out)\n",
+ "\n",
+ "                # Build an assignment matrix that maps each cell to the tissue region it belongs to\n",
+ "                assignment = build_assignment_matrix(nuclei_centroid, superpixels)\n",
+ "\n",
+ "                # Save the assignment matrix\n",
+ "                torch.save(torch.tensor(assignment), assign_out)\n",
+ "\n",
+ "            except Exception:\n",
+ "                # Record the failure and show why, instead of silently swallowing every error\n",
+ "                print(f'Failed {image_path}')\n",
+ "                traceback.print_exc()\n",
+ "                image_ids_failing.append(image_path)\n",
+ "\n",
+ "    print('\\nOut of {} images, {} successful graph generations.'.format(\n",
+ "        len(image_fnames),\n",
+ "        len(image_fnames) - len(image_ids_failing)\n",
+ "    ))\n",
+ "    print('Failing IDs are:', image_ids_failing)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "7fe9a5cd-4e01-4f4f-b5cd-91f1b204835b",

+ "metadata": {},

+ "source": [

+ "Finally, we write a `main` function that calls `process` for a specified root and output directory, along with the name of the split (`train`, `val` or `test` when using BRACS). "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "5e6a71aa-babc-47e7-a641-d9688962350b",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def main(base_path, save_path, split=None):\n",
+ "    # Treat a missing split as the root folder itself; os.path.join() fails on None\n",
+ "    split = split or ''\n",
+ "    root_path = os.path.join(base_path, split)\n",
+ "\n",
+ "    print(root_path)\n",
+ "\n",
+ "    os.makedirs(os.path.join(save_path, 'cell_graphs', split), exist_ok=True)\n",
+ "    os.makedirs(os.path.join(save_path, 'tissue_graphs', split), exist_ok=True)\n",
+ "    os.makedirs(os.path.join(save_path, 'assignment_matrices', split), exist_ok=True)\n",
+ "\n",
+ "    process(root_path, save_path, split, plot=True, overwrite=True)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "54d660e9-b32c-4101-9d64-7fb9cdc14c93",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Folder containing all images\n",

+ "base = '../data/'\n",

+ "\n",

+ "# Output path \n",

+ "save_path = '../data/output/'\n",

+ "\n",

+ "# Start preprocessing\n",

+ "main(base, save_path, split='')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "8c7cfdb1-e016-4d9c-acff-b3eb0f40ce55",

+ "metadata": {},

+ "source": [

+ "## References\n",

+ "\n",

+ "* Pati, Pushpak, Guillaume Jaume, Antonio Foncubierta-Rodriguez, Florinda Feroce, Anna Maria Anniciello, Giosue Scognamiglio, Nadia Brancati et al. \"Hierarchical graph representations in digital pathology.\" Medical image analysis 75 (2022): 102264.\n",

+ "* Brancati, Nadia, Anna Maria Anniciello, Pushpak Pati, Daniel Riccio, Giosuè Scognamiglio, Guillaume Jaume, Giuseppe De Pietro et al. \"Bracs: A dataset for breast carcinoma subtyping in h&e histology images.\" Database 2022 (2022): baac093."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "2307dfb9-19cc-4f6b-aa96-7f5f40322e0b",

+ "metadata": {},

+ "source": [

+ "## Session info"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "1e1d903d-01ef-492f-a806-d974a0940c4c",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import IPython\n",

+ "print(IPython.sys_info())\n",

+ "print(f\"torch version: {torch.__version__}\")"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "pathml_graph_dev",

+ "language": "python",

+ "name": "pathml_graph_dev"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.18"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}
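The notebook above builds cell graphs with `KNNGraphBuilder(k=5, thresh=50)` and tissue graphs with `RAGGraphBuilder`. To make the two constructions concrete, here is a minimal, standard-library sketch of each idea:

- a k-nearest-neighbour graph over nucleus centroids that drops edges longer than a distance threshold;
- a region adjacency graph that links superpixels sharing a pixel border.

The function names are hypothetical and this is not PathML's implementation. Details such as edge direction, connectivity (4- vs 8-neighbourhood) and exactly how `thresh` is applied may differ.

```python
import math


def knn_graph(centroids, k=5, thresh=50.0):
    """k-nearest-neighbour graph over 2D points, dropping edges longer than `thresh`.

    centroids: list of (x, y) coordinates, e.g. one per detected nucleus.
    Returns a sorted list of directed edges (source, target).
    """
    edges = []
    for i, (xi, yi) in enumerate(centroids):
        # Euclidean distance from node i to every other node, nearest first
        neighbours = sorted(
            (math.hypot(xi - xj, yi - yj), j)
            for j, (xj, yj) in enumerate(centroids)
            if j != i
        )
        for dist, j in neighbours[:k]:
            if dist <= thresh:
                edges.append((i, j))
    return sorted(edges)


def region_adjacency_edges(label_map):
    """Undirected edges between regions whose pixels touch (4-connectivity).

    label_map: non-empty rectangular list of lists of region labels, e.g. superpixels.
    Returns a sorted list of (smaller_label, larger_label) pairs.
    """
    rows, cols = len(label_map), len(label_map[0])
    edges = set()
    for r in range(rows):
        for c in range(cols):
            a = label_map[r][c]
            # Checking only the right and lower neighbours visits every pixel border once
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols and label_map[rr][cc] != a:
                    b = label_map[rr][cc]
                    edges.add((min(a, b), max(a, b)))
    return sorted(edges)


centroids = [(0, 0), (3, 4), (100, 100)]
print(knn_graph(centroids, k=1, thresh=50))  # [(0, 1), (1, 0)]
print(region_adjacency_edges([[1, 1, 2], [1, 3, 2], [3, 3, 2]]))  # [(1, 2), (1, 3), (2, 3)]
```

In the k-NN example, the isolated third nucleus gets no edges because even its nearest neighbour (about 136 pixels away) lies beyond the threshold. This mirrors why the notebook pairs `k` with `thresh`.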

diff --git a/examples/train_hactnet.ipynb b/examples/train_hactnet.ipynb

new file mode 100644

index 00000000..72d83e20

--- /dev/null

+++ b/examples/train_hactnet.ipynb

@@ -0,0 +1,318 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "id": "655f37d3-1591-4a7c-8f54-f7fc8148dcfd",

+ "metadata": {},

+ "source": [

+ "# Training a HACTNet model"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "88157b8c-0368-42a4-af82-800b7bab74d7",

+ "metadata": {},

+ "source": [

+ "In this notebook, we will train the HACTNet graph neural network (GNN) model on input cell and tissue graphs using the new `pathml.graph` API.\n",

+ "\n",

+ "To run this notebook and train the model, you will first have to download the BRACS ROI set from the [BRACS dataset](https://www.bracs.icar.cnr.it/download/). To do so, you will have to sign up and create an account. Next, construct the cell and tissue graphs using the tutorial in `examples/construct_graphs.ipynb`, and use the output directory specified there as the input (`root_dir`) to the functions in this tutorial. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "id": "f682f702-b590-4e3d-8c97-a362411acade",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "from glob import glob\n",

+ "import argparse\n",

+ "from PIL import Image\n",

+ "import numpy as np\n",

+ "from tqdm import tqdm\n",

+ "import torch \n",

+ "import h5py\n",

+ "import warnings\n",

+ "import math\n",

+ "from skimage.measure import regionprops, label\n",

+ "import networkx as nx\n",

+ "import traceback\n",


+ "import torch.nn as nn\n",

+ "from torch_geometric.data import Batch\n",

+ "from torch_geometric.data import Data\n",

+ "from torch.utils.data import Dataset\n",

+ "from torch_geometric.loader import DataLoader\n",

+ "from torch.optim.lr_scheduler import StepLR\n",

+ "from sklearn.metrics import f1_score\n",

+ "\n",

+ "from pathml.datasets import EntityDataset\n",

+ "from pathml.ml.utils import get_degree_histogram, get_class_weights\n",

+ "from pathml.ml import HACTNet"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "6fb4ee6f-7b17-424d-9cdd-710e36c7341c",

+ "metadata": {},

+ "source": [

+ "## Model Training"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "10601c10-d069-481d-b502-f98f76e18e3c",

+ "metadata": {},

+ "source": [

+ "Here we define the main training function, which loads the constructed graphs and then initializes and trains the model. "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "00cb474e-0441-4ff0-a495-709d3df3759d",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def train_hactnet(root_dir, load_histogram=True, histogram_dir=None, calc_class_weights=True):\n",

+ "\n",

+ " # Read the train, validation and test dataset into the pathml.datasets.EntityDataset class \n",

+ " train_dataset = EntityDataset(os.path.join(root_dir, 'cell_graphs/train/'),\n",

+ " os.path.join(root_dir, 'tissue_graphs/train/'),\n",

+ " os.path.join(root_dir, 'assignment_matrices/train/'))\n",

+ " val_dataset = EntityDataset(os.path.join(root_dir, 'cell_graphs/val/'),\n",

+ " os.path.join(root_dir, 'tissue_graphs/val/'),\n",

+ " os.path.join(root_dir, 'assignment_matrices/val/'))\n",

+ " test_dataset = EntityDataset(os.path.join(root_dir, 'cell_graphs/test/'),\n",

+ " os.path.join(root_dir, 'tissue_graphs/test/'),\n",

+ " os.path.join(root_dir, 'assignment_matrices/test/'))\n",

+ "\n",

+ " # Print the lengths of each dataset split\n",

+ " print(f\"Length of training dataset: {len(train_dataset)}\")\n",

+ " print(f\"Length of validation dataset: {len(val_dataset)}\")\n",

+ " print(f\"Length of test dataset: {len(test_dataset)}\")\n",

+ "\n",

+ " # Define a torch_geometric DataLoader for each dataset split with a batch size of 4; only the training split is shuffled\n",

+ " train_batch = DataLoader(train_dataset, batch_size=4, shuffle=True, follow_batch=['x_cell', 'x_tissue'], drop_last=True)\n",

+ " val_batch = DataLoader(val_dataset, batch_size=4, shuffle=False, follow_batch=['x_cell', 'x_tissue'], drop_last=True)\n",

+ " test_batch = DataLoader(test_dataset, batch_size=4, shuffle=False, follow_batch=['x_cell', 'x_tissue'], drop_last=True)\n",

+ "\n",

+ " # The GNN layer used in this model, PNAConv, requires a node degree histogram of the training set, which only\n",

+ " # needs to be computed once. If it was saved earlier, set load_histogram=True; otherwise it is computed and saved.\n",

+ " if histogram_dir is None:\n",

+ " histogram_dir = \"./\"\n",

+ " if load_histogram:\n",

+ " cell_deg = torch.load(os.path.join(histogram_dir, 'cell_degree_norm.pt'))\n",

+ " tissue_deg = torch.load(os.path.join(histogram_dir, 'tissue_degree_norm.pt'))\n",

+ " else:\n",

+ " train_batch_hist = DataLoader(train_dataset, batch_size=20, shuffle=True, follow_batch=['x_cell', 'x_tissue'])\n",

+ " print('Calculating degree histogram for cell graph')\n",

+ " cell_deg = get_degree_histogram(train_batch_hist, 'edge_index_cell', 'x_cell')\n",

+ " print('Calculating degree histogram for tissue graph')\n",

+ " tissue_deg = get_degree_histogram(train_batch_hist, 'edge_index_tissue', 'x_tissue')\n",

+ " torch.save(cell_deg, os.path.join(histogram_dir, 'cell_degree_norm.pt'))\n",

+ " torch.save(tissue_deg, os.path.join(histogram_dir, 'tissue_degree_norm.pt'))\n",

+ "\n",

+ " # Since the BRACS dataset is class-imbalanced, we compute class weights on the training set\n",

+ " # and pass them to the loss function.\n",

+ " if calc_class_weights:\n",

+ " train_w = get_class_weights(train_batch)\n",

+ " torch.save(torch.tensor(train_w), 'loss_weights_norm.pt')\n",

+ "\n",

+ " # Here we define the keyword arguments for the PNAConv layer in the model for both cell and tissue processing \n",

+ " # layers. \n",

+ " kwargs_pna_cell = {'aggregators': [\"mean\", \"max\", \"min\", \"std\"],\n",

+ " \"scalers\": [\"identity\", \"amplification\", \"attenuation\"],\n",

+ " \"deg\": cell_deg}\n",

+ " kwargs_pna_tissue = {'aggregators': [\"mean\", \"max\", \"min\", \"std\"],\n",

+ " \"scalers\": [\"identity\", \"amplification\", \"attenuation\"],\n",

+ " \"deg\": tissue_deg}\n",

+ " \n",

+ " cell_params = {'layer':'PNAConv', 'in_channels':514, 'hidden_channels':64, \n",

+ " 'num_layers':3, 'out_channels':64, 'readout_op':'lstm', \n",

+ " 'readout_type':'mean', 'kwargs':kwargs_pna_cell}\n",

+ " \n",

+ " tissue_params = {'layer':'PNAConv', 'in_channels':514, 'hidden_channels':64, \n",

+ " 'num_layers':3, 'out_channels':64, 'readout_op':'lstm', \n",

+ " 'readout_type':'mean', 'kwargs':kwargs_pna_tissue}\n",

+ " \n",

+ " classifier_params = {'in_channels':128, 'hidden_channels':128,\n",

+ " 'out_channels':7, 'num_layers': 2}\n",

+ "\n",

+ " # Run on the GPU if one is available, otherwise fall back to the CPU\n",

+ " device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\n",

+ "\n",

+ " # Initialize the pathml.ml.HACTNet model\n",

+ " model = HACTNet(cell_params, tissue_params, classifier_params)\n",

+ "\n",

+ " # Set up optimizer\n",

+ " opt = torch.optim.Adam(model.parameters(), lr = 0.0005)\n",

+ "\n",

+ " # Learning rate scheduler that reduces the LR by a factor of 10 every 25 epochs\n",

+ " scheduler = StepLR(opt, step_size=25, gamma=0.1)\n",

+ "\n",

+ " # Send the model to GPU\n",

+ " model = model.to(device)\n",

+ "\n",

+ " # Define number of epochs \n",

+ " n_epochs = 60\n",

+ "\n",

+ " # Keep track of the best epoch and metric so that only the best model is saved\n",

+ " best_epoch = 0\n",

+ " best_metric = 0\n",

+ "\n",

+ " # Load the computed class weights if calc_class_weights = True\n",

+ " if calc_class_weights:\n",

+ " loss_weights = torch.load('loss_weights_norm.pt')\n",

+ "\n",

+ " # Define the loss function\n",

+ " loss_fn = nn.CrossEntropyLoss(weight=loss_weights.float().to(device) if calc_class_weights else None)\n",

+ "\n",

+ " # Define the evaluate function to compute metrics for validation and test set to keep track of performance.\n",

+ " # The metrics used are per-class and weighted F1 score. \n",

+ " def evaluate(data_loader):\n",

+ " model.eval()\n",

+ " y_true = []\n",

+ " y_pred = []\n",

+ " with torch.no_grad():\n",

+ " for data in tqdm(data_loader):\n",

+ " data = data.to(device)\n",

+ " outputs = model(data)\n",

+ " y_pred.append(torch.argmax(outputs.detach().cpu(), dim=-1).numpy())\n",

+ " y_true.append(data.target.cpu().numpy())\n",

+ " y_true = np.array(y_true).ravel()\n",

+ " y_pred = np.array(y_pred).ravel()\n",

+ " per_class = f1_score(y_true, y_pred, average=None)\n",

+ " weighted = f1_score(y_true, y_pred, average='weighted')\n",

+ " print(f'Per class F1: {per_class}')\n",

+ " print(f'Weighted F1: {weighted}')\n",

+ " return np.append(per_class, weighted)\n",

+ "\n",

+ " # Start the training loop\n",

+ " for i in range(n_epochs):\n",

+ " print(f'\\n>>>>>>>>>>>>>>>>Epoch number {i}>>>>>>>>>>>>>>>>')\n",

+ " minibatch_train_losses = []\n",

+ " \n",

+ " # Put model in training mode\n",

+ " model.train()\n",

+ " \n",

+ " print('Training')\n",

+ " \n",

+ " for data in tqdm(train_batch):\n",

+ " \n",

+ " # Send the data to the GPU\n",

+ " data = data.to(device)\n",

+ " \n",

+ " # Zero out gradient\n",

+ " opt.zero_grad()\n",

+ " \n",

+ " # Forward pass\n",

+ " outputs = model(data)\n",

+ " \n",

+ " # Compute loss\n",

+ " loss = loss_fn(outputs, data.target)\n",

+ " \n",

+ " # Compute gradients\n",

+ " loss.backward()\n",

+ " \n",

+ " # Step the optimizer (the LR scheduler is stepped once per epoch)\n",

+ " opt.step() \n",

+ "\n",

+ " # Track loss\n",

+ " minibatch_train_losses.append(loss.detach().cpu().numpy())\n",

+ " \n",

+ " print(f'Loss: {np.array(minibatch_train_losses).ravel().mean()}')\n",

+ "\n",

+ " # Print performance metrics on validation set\n",

+ " print('\\nEvaluating on validation')\n",

+ " val_metrics = evaluate(val_batch)\n",

+ "\n",

+ " # Save the model only if its weighted F1 on the validation set beats the best checkpoint so far\n",

+ " if val_metrics[-1] > best_metric:\n",

+ " print('Saving checkpoint')\n",

+ " torch.save(model.state_dict(), \"hact_net_norm.pt\")\n",

+ " best_metric = val_metrics[-1]\n",

+ " best_epoch = i\n",

+ "\n",

+ " # Print performance metrics on test set\n",

+ " print('\\nEvaluating on test')\n",

+ " _ = evaluate(test_batch)\n",

+ " \n",

+ " # Step LR scheduler\n",

+ " scheduler.step()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "7f408188-5804-4ac4-9a1e-642c6e5e6d09",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "root_dir = '../../../../mnt/disks/data/varun/BRACS_RoI/latest_version/pathml_graph_data_norm/'\n",

+ "train_hactnet(root_dir, load_histogram=True, calc_class_weights=False)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "5c4d6c48-c5be-4dcd-a38e-6277d1fd5956",

+ "metadata": {},

+ "source": [

+ "## References\n",

+ "\n",

+ "* Pati, Pushpak, Guillaume Jaume, Antonio Foncubierta-Rodriguez, Florinda Feroce, Anna Maria Anniciello, Giosuè Scognamiglio, Nadia Brancati et al. \"Hierarchical graph representations in digital pathology.\" Medical Image Analysis 75 (2022): 102264.\n",

+ "* Brancati, Nadia, Anna Maria Anniciello, Pushpak Pati, Daniel Riccio, Giosuè Scognamiglio, Guillaume Jaume, Giuseppe De Pietro et al. \"BRACS: A dataset for breast carcinoma subtyping in H&E histology images.\" Database 2022 (2022): baac093."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "363ea74a-da2b-4e92-8d29-cbf7f59792cb",

+ "metadata": {},

+ "source": [

+ "## Session info"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b0c5f9f8-cb8c-4d61-9147-7d82bcb45c9c",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import IPython\n",

+ "print(IPython.sys_info())\n",

+ "print(f\"torch version: {torch.__version__}\")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e7872fb6-7b62-422b-a7db-0b7e169d82c7",

+ "metadata": {},

+ "outputs": [],

+ "source": []

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "pathml_graph_dev",

+ "language": "python",

+ "name": "pathml_graph_dev"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.18"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}
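The notebook relies on `pathml.ml.utils.get_degree_histogram` for the `deg` argument of PNAConv, which PyTorch Geometric documents as a histogram of node in-degrees over the training set (entry `d` counts the nodes with in-degree `d`). The NumPy sketch below only illustrates that idea; it is not pathml's implementation. `degree_histogram` is a hypothetical helper, and it assumes each graph is given as an `edge_index` array of shape (2, num_edges) whose messages flow from `edge_index[0]` to `edge_index[1]`.

```python
import numpy as np


def degree_histogram(graphs):
    """Histogram of node in-degrees across graphs (hypothetical helper).

    Each graph is an (edge_index, num_nodes) pair, where edge_index is an
    integer array of shape (2, num_edges) pointing from edge_index[0]
    (source) to edge_index[1] (target).
    """
    hist = np.zeros(1, dtype=np.int64)
    for edge_index, num_nodes in graphs:
        # In-degree of every node; nodes without incoming edges count as 0
        in_deg = np.bincount(edge_index[1], minlength=num_nodes)
        # Number of nodes having each degree value
        counts = np.bincount(in_deg)
        # Grow the running histogram if this graph reaches a higher degree
        if counts.size > hist.size:
            hist = np.pad(hist, (0, counts.size - hist.size))
        hist[: counts.size] += counts
    return hist


# Two toy graphs: a 3-node directed cycle and a 2-node graph with one edge
g1 = (np.array([[0, 1, 2], [1, 2, 0]]), 3)
g2 = (np.array([[0], [1]]), 2)
print(degree_histogram([g1, g2]))
```

Accumulating over the whole training set once, rather than per batch, is why the notebook caches the result to disk.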
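The class weights passed to `nn.CrossEntropyLoss` counteract the class imbalance in BRACS. One common choice is inverse-frequency ("balanced") weighting, `n_samples / (n_classes * count_c)`, the formula scikit-learn documents for `class_weight="balanced"`. Whether `pathml.ml.utils.get_class_weights` uses exactly this formula is an assumption; `balanced_class_weights` below is a hypothetical helper for illustration.

```python
import numpy as np


def balanced_class_weights(labels, num_classes):
    """Inverse-frequency weights: n_samples / (num_classes * count_c).

    Hypothetical helper. Classes that never occur in `labels` get weight 0
    instead of causing a division by zero.
    """
    labels = np.asarray(labels, dtype=np.int64)
    counts = np.bincount(labels, minlength=num_classes).astype(np.float64)
    weights = np.zeros(num_classes, dtype=np.float64)
    present = counts > 0
    weights[present] = labels.size / (num_classes * counts[present])
    return weights


# Toy labels for 3 classes: class 0 is four times as frequent as class 2
y = [0, 0, 0, 0, 1, 1, 2]
print(balanced_class_weights(y, num_classes=3))
```

Rare classes receive proportionally larger weights. The array can then be handed to the loss as `nn.CrossEntropyLoss(weight=torch.tensor(weights, dtype=torch.float32))`.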

diff --git a/examples/train_hovernet.ipynb b/examples/train_hovernet.ipynb

index 5d152ee3..5384dd85 100644

--- a/examples/train_hovernet.ipynb

+++ b/examples/train_hovernet.ipynb

@@ -736,9 +736,9 @@

"uri": "gcr.io/deeplearning-platform-release/pytorch-gpu.1-6:m59"

},

"kernelspec": {

- "display_name": "hovernet",

+ "display_name": "pathml_graph_dev",

"language": "python",

- "name": "hovernet"

+ "name": "pathml_graph_dev"

},

"language_info": {

"codemirror_mode": {

@@ -750,7 +750,7 @@

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

- "version": "3.6.12"

+ "version": "3.9.18"

}

},

"nbformat": 4,

diff --git a/pathml/.coverage_others b/pathml/.coverage_others

new file mode 100644

index 00000000..c60bd5c7

Binary files /dev/null and b/pathml/.coverage_others differ

diff --git a/pathml/_version.py b/pathml/_version.py

index b91684f4..2704c284 100644

--- a/pathml/_version.py

+++ b/pathml/_version.py

@@ -3,4 +3,4 @@

License: GNU GPL 2.0

"""

-__version__ = "2.1.0"

+__version__ = "2.1.1"

diff --git a/pathml/datasets/__init__.py b/pathml/datasets/__init__.py

index 1f5ececd..6784fde3 100644

--- a/pathml/datasets/__init__.py

+++ b/pathml/datasets/__init__.py

@@ -3,5 +3,6 @@

License: GNU GPL 2.0

"""

+from .datasets import EntityDataset, TileDataset

from .deepfocus import DeepFocusDataModule

from .pannuke import PanNukeDataModule
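`EntityDataset`, used with three directories in the notebook above, pairs the i-th cell graph, tissue graph and assignment matrix purely by position in its file lists, so all three directories must list the same samples in the same order, and `glob` does not guarantee any order. The defensive sketch below assumes (an assumption about how the graphs were saved) that a sample has the same file name in every directory, pairs files by name and fails loudly on mismatches. `paired_graph_files` is a hypothetical helper, not part of `pathml`.

```python
import os
import tempfile
from glob import glob


def paired_graph_files(cell_dir, tissue_dir, assign_dir, pattern="*.pt"):
    """Return sorted (cell, tissue, assignment) path triples matched by file name.

    Hypothetical helper: assumes each sample is stored under the same file
    name in all three directories.
    """
    # Map file name -> full path, separately for each directory
    by_name = [
        {os.path.basename(p): p for p in glob(os.path.join(d, pattern))}
        for d in (cell_dir, tissue_dir, assign_dir)
    ]
    names = [set(m) for m in by_name]
    if not (names[0] == names[1] == names[2]):
        raise ValueError("cell, tissue and assignment directories hold different files")
    # Sorting makes the pairing deterministic regardless of filesystem order
    return [tuple(m[name] for m in by_name) for name in sorted(names[0])]


# Demo: three temporary directories holding the same two (empty) files
root = tempfile.mkdtemp()
dirs = [os.path.join(root, sub) for sub in ("cell", "tissue", "assign")]
for d in dirs:
    os.makedirs(d)
    for name in ("sample_b.pt", "sample_a.pt"):
        open(os.path.join(d, name), "w").close()

pairs = paired_graph_files(*dirs)
for triple in pairs:
    print([os.path.relpath(p, root) for p in triple])
```

Sorting each list (`sorted(glob(...))`) is enough when the file names match exactly; the name check additionally catches a sample missing from one directory.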

diff --git a/pathml/datasets/datasets.py b/pathml/datasets/datasets.py

new file mode 100644

index 00000000..d6dbbe01

--- /dev/null

+++ b/pathml/datasets/datasets.py

@@ -0,0 +1,446 @@

+"""

+Copyright 2021, Dana-Farber Cancer Institute and Weill Cornell Medicine

+License: GNU GPL 2.0

+"""

+

+import copy

+import os

+import warnings

+from glob import glob

+

+import h5py

+import numpy as np

+import torch

+from skimage.measure import regionprops

+from skimage.transform import resize

+

+from pathml.graph.utils import HACTPairData

+

+

+class TileDataset(torch.utils.data.Dataset):

+ """

+ PyTorch Dataset class for h5path files

+

+ Each item is a tuple of (``tile_image``, ``tile_masks``, ``tile_labels``, ``slide_labels``) where:

+

+ - ``tile_image`` is a torch.Tensor of shape (C, H, W) or (T, Z, C, H, W)

+ - ``tile_masks`` is a torch.Tensor of shape (n_masks, tile_height, tile_width)

+ - ``tile_labels`` is a dict

+ - ``slide_labels`` is a dict

+

+ This is designed to be wrapped in a PyTorch DataLoader for feeding tiles into ML models.

+ Note that label dictionaries are not standardized, as users are free to store whatever labels they want.

+ For that reason, PyTorch cannot automatically stack labels into batches.

+ When creating a DataLoader from a TileDataset, it may therefore be necessary to create a custom ``collate_fn`` to

+ specify how to create batches of labels. See: https://discuss.pytorch.org/t/how-to-use-collate-fn/27181

+

+ Args:

+ file_path (str): Path to .h5path file on disk

+ """

+

+ def __init__(self, file_path):

+ self.file_path = file_path

+ self.h5 = None

+ with h5py.File(self.file_path, "r") as file:

+ self.tile_shape = eval(file["tiles"].attrs["tile_shape"])

+ self.tile_keys = list(file["tiles"].keys())

+ self.dataset_len = len(self.tile_keys)

+ self.slide_level_labels = {

+ key: val

+ for key, val in file["fields"]["labels"].attrs.items()

+ if val is not None

+ }

+

+ def __len__(self):

+ return self.dataset_len

+

+ def __getitem__(self, ix):

+ if self.h5 is None:

+ self.h5 = h5py.File(self.file_path, "r")

+

+ k = self.tile_keys[ix]

+ # this part copied from h5manager.get_tile()

+ tile_image = self.h5["tiles"][str(k)]["array"][:]

+

+ # get corresponding masks if there are masks

+ if "masks" in self.h5["tiles"][str(k)].keys():

+ masks = {

+ mask: self.h5["tiles"][str(k)]["masks"][mask][:]

+ for mask in self.h5["tiles"][str(k)]["masks"]

+ }

+ else:

+ masks = None

+

+ labels = {

+ key: val for key, val in self.h5["tiles"][str(k)]["labels"].attrs.items()

+ }

+

+ if tile_image.ndim == 3:

+ # swap axes from HWC to CHW for pytorch

+ im = tile_image.transpose(2, 0, 1)

+ elif tile_image.ndim == 5:

+ # in this case, we assume that we have XYZCT channel order (OME-TIFF)

+ # so we swap axes to TCZYX for batching

+ im = tile_image.transpose(4, 3, 2, 1, 0)

+ else:

+ raise NotImplementedError(

+ f"tile image has shape {tile_image.shape}. Expecting an image with 3 dims (HWC) or 5 dims (XYZCT)"

+ )

+

+ masks = np.stack(list(masks.values()), axis=0) if masks else None

+

+ return im, masks, labels, self.slide_level_labels

+

+

+class EntityDataset(torch.utils.data.Dataset):

+ """

+ PyTorch Dataset class for loading paired cell graphs, tissue graphs and cell-to-tissue assignment matrices.

+ Each item is a pathml.graph.utils.HACTPairData object.

+

+ Args:

+ cell_dir (str): Path to folder containing cell graphs

+ tissue_dir (str): Path to folder containing tissue graphs

+ assign_dir (str): Path to folder containing assignment matrices

+ """

+

+ def __init__(self, cell_dir=None, tissue_dir=None, assign_dir=None):

+ self.cell_dir = cell_dir

+ self.tissue_dir = tissue_dir

+ self.assign_dir = assign_dir

+

+ if self.cell_dir is not None:

+ if not os.path.exists(cell_dir):

+ raise FileNotFoundError(f"Directory not found: {self.cell_dir}")

+ self.cell_graphs = sorted(glob(os.path.join(cell_dir, "*.pt")))

+

+ if self.tissue_dir is not None:

+ if not os.path.exists(tissue_dir):

+ raise FileNotFoundError(f"Directory not found: {self.tissue_dir}")

+ self.tissue_graphs = sorted(glob(os.path.join(tissue_dir, "*.pt")))

+

+ if self.assign_dir is not None:

+ if not os.path.exists(assign_dir):

+ raise FileNotFoundError(f"Directory not found: {self.assign_dir}")

+ self.assigns = sorted(glob(os.path.join(assign_dir, "*.pt")))

+

+ def __len__(self):

+ return len(self.cell_graphs)

+

+ def __getitem__(self, index):

+

+ # Load cell graphs, tissue graphs and assignments if they are provided

+ if self.cell_dir is not None:

+ cell_graph = torch.load(self.cell_graphs[index])

+ target = cell_graph["target"]

+

+ if self.tissue_dir is not None:

+ tissue_graph = torch.load(self.tissue_graphs[index])

+ target = tissue_graph["target"]

+

+ if self.assign_dir is not None:

+ assignment = torch.load(self.assigns[index])

+

+ # Create a pathml.graph.utils.HACTPairData object from the provided graphs

+ data = HACTPairData(

+ x_cell=cell_graph.node_features if self.cell_dir is not None else None,

+ edge_index_cell=cell_graph.edge_index

+ if self.cell_dir is not None

+ else None,

+ x_tissue=tissue_graph.node_features

+ if self.tissue_dir is not None

+ else None,

+ edge_index_tissue=tissue_graph.edge_index

+ if self.tissue_dir is not None

+ else None,

+ assignment=assignment[1, :] if self.assign_dir is not None else None,

+ target=target,

+ )

+ return data

+

+

+class InstanceMapPatchDataset(torch.utils.data.Dataset):

+ """

+ Create a dataset of (patch_size, patch_size, 3) patches extracted around the entities of a given image

+ and its instance map.

+ Args:

+ image (np.ndarray): RGB input image.

+ instance_map (np.ndarray): Extracted instance map.

+ entity (str): Entity to be processed. Must be one of 'cell' or 'tissue'. Defaults to 'cell'.

+ patch_size (int): Desired size of patch. Defaults to 64.

+ threshold (float): Minimum fraction of the patch area that must overlap the entity for the patch to be kept. Defaults to 0.2.

+ resize_size (int): Desired resized size to input the network. If None, no resizing is done and the

+ patches of size patch_size are provided to the network. Defaults to None.

+ fill_value (Optional[int]): Value to fill outside the instance maps. Defaults to 255.

+ mean (list[float], optional): Channel-wise mean for image normalization.

+ std (list[float], optional): Channel-wise std for image normalization.

+ with_instance_masking (bool): If pixels outside instance should be masked. Defaults to False.

+ """

+

+ def __init__(

+ self,

+ image,

+ instance_map,

+ entity="cell",

+ patch_size=64,

+ threshold=0.2,

+ resize_size=None,

+ fill_value=255,

+ mean=None,

+ std=None,

+ with_instance_masking=False,

+ ):

+

+ self.image = image

+ self.instance_map = instance_map

+ self.entity = entity

+ self.patch_size = patch_size

+ self.with_instance_masking = with_instance_masking

+ self.fill_value = fill_value

+ self.resize_size = resize_size

+ self.mean = mean

+ self.std = std

+

+ self.patch_size_2 = int(self.patch_size // 2)

+

+ self.image = np.pad(

+ self.image,

+ (

+ (self.patch_size_2, self.patch_size_2),

+ (self.patch_size_2, self.patch_size_2),

+ (0, 0),

+ ),

+ mode="constant",

+ constant_values=self.fill_value,

+ )

+ self.instance_map = np.pad(

+ self.instance_map,

+ (

+ (self.patch_size_2, self.patch_size_2),

+ (self.patch_size_2, self.patch_size_2),

+ ),

+ mode="constant",

+ constant_values=0,

+ )

+

+ self.threshold = int(self.patch_size * self.patch_size * threshold)

+ self.warning_threshold = 0.75

+

+ try:

+ from torchvision import transforms

+

+ self.use_torchvision = True

+ except ImportError:

+ print(

+ "Torchvision is not installed, using base modules for resizing patches and skipping normalization"

+ )

+ self.use_torchvision = False

+

+ if self.use_torchvision:

+ basic_transforms = [transforms.ToPILImage()]

+ if self.resize_size is not None:

+ basic_transforms.append(transforms.Resize(self.resize_size))

+ basic_transforms.append(transforms.ToTensor())

+ if self.mean is not None and self.std is not None:

+ basic_transforms.append(transforms.Normalize(self.mean, self.std))

+ self.dataset_transform = transforms.Compose(basic_transforms)

+

+ if self.entity not in ["cell", "tissue"]:

+ raise ValueError(

+ "Invalid value for entity. Expected 'cell' or 'tissue', got '{}'.".format(

+ self.entity

+ )

+ )

+

+ if self.entity == "cell":

+ self._precompute_cell()

+ elif self.entity == "tissue":

+ self._precompute_tissue()

+

+ def _add_patch(self, center_x, center_y, instance_index, region_count):

+ """Extract and include patch information."""

+

+ # Get a patch for each entity in the instance map

+ mask = self.instance_map[

+ center_y - self.patch_size_2 : center_y + self.patch_size_2,

+ center_x - self.patch_size_2 : center_x + self.patch_size_2,

+ ]

+

+ # Check the overlap between the extracted patch and the entity

+ overlap = np.sum(mask == instance_index)

+

+ # Add patch coordinates if overlap is greater than threshold

+ if overlap > self.threshold:

+ loc = [center_x - self.patch_size_2, center_y - self.patch_size_2]

+ self.patch_coordinates.append(loc)

+ self.patch_region_count.append(region_count)

+ self.patch_instance_ids.append(instance_index)

+ self.patch_overlap.append(overlap)

+

+ def _get_patch_tissue(self, loc, region_id=None):

+ """Extract tissue patches from image."""

+

+ # Get bounding box of given location

+ min_x = loc[0]

+ min_y = loc[1]

+ max_x = min_x + self.patch_size

+ max_y = min_y + self.patch_size

+

+ patch = copy.deepcopy(self.image[min_y:max_y, min_x:max_x])

+

+ # Fill background pixels with instance masking value

+ if self.with_instance_masking:

+ instance_mask = ~(self.instance_map[min_y:max_y, min_x:max_x] == region_id)

+ patch[instance_mask, :] = self.fill_value

+ return patch

+

+ def _get_patch_cell(self, loc, region_id):

+ """Extract cell patches from image."""

+

+ # Get bounding box of given location

+ min_y, min_x = loc

+ patch = self.image[

+ min_y : min_y + self.patch_size, min_x : min_x + self.patch_size, :

+ ].copy() # copy so that instance masking below does not modify self.image in place

+

+ # Fill background pixels with instance masking value

+ if self.with_instance_masking:

+ instance_mask = ~(

+ self.instance_map[

+ min_y : min_y + self.patch_size, min_x : min_x + self.patch_size

+ ]

+ == region_id

+ )

+ patch[instance_mask, :] = self.fill_value

+

+ return patch

+

+ def _precompute_cell(self):

+ """Precompute instance-wise patch information for all cell instances in the input image."""

+

+ # Get location of all entities from the instance map

+ self.entities = regionprops(self.instance_map)

+ self.patch_coordinates = []

+ self.patch_overlap = []

+ self.patch_region_count = []

+ self.patch_instance_ids = []

+

+ # Get coordinates for all entities and add them to the pile

+ for region_count, region in enumerate(self.entities):

+ min_y, min_x, max_y, max_x = region.bbox

+

+ cy, cx = region.centroid

+ cy, cx = int(cy), int(cx)

+

+ coord = [cy - self.patch_size_2, cx - self.patch_size_2]

+

+ instance_mask = self.instance_map[

+ coord[0] : coord[0] + self.patch_size,

+ coord[1] : coord[1] + self.patch_size,

+ ]

+ overlap = np.sum(instance_mask == region.label)

+ if overlap >= self.threshold:

+ self.patch_coordinates.append(coord)

+ self.patch_region_count.append(region_count)

+ self.patch_instance_ids.append(region.label)

+ self.patch_overlap.append(overlap)

+

+ def _precompute_tissue(self):

+ """Precompute instance-wise patch information for all tissue instances in the input image."""

+

+ # Get location of all entities from the instance map

+ self.patch_coordinates = []

+ self.patch_region_count = []

+ self.patch_instance_ids = []

+ self.patch_overlap = []

+

+ self.entities = regionprops(self.instance_map)

+ self.stride = self.patch_size

+

+ # Get coordinates for all entities and add them to the pile

+ for region_count, region in enumerate(self.entities):

+

+ # Extract centroid

+ center_y, center_x = region.centroid

+ center_x = int(round(center_x))

+ center_y = int(round(center_y))

+

+ # Extract bounding box

+ min_y, min_x, max_y, max_x = region.bbox

+

+ # Extract patch information around the centroid patch

+ # quadrant 1 (includes centroid patch)

+ y_ = copy.deepcopy(center_y)

+ while y_ >= min_y:

+ x_ = copy.deepcopy(center_x)

+ while x_ >= min_x:

+ self._add_patch(x_, y_, region.label, region_count)

+ x_ -= self.stride

+ y_ -= self.stride

+

+ # quadrant 4

+ y_ = copy.deepcopy(center_y)

+ while y_ >= min_y:

+ x_ = copy.deepcopy(center_x) + self.stride

+ while x_ <= max_x:

+ self._add_patch(x_, y_, region.label, region_count)

+ x_ += self.stride

+ y_ -= self.stride

+

+ # quadrant 2

+ y_ = copy.deepcopy(center_y) + self.stride

+ while y_ <= max_y:

+ x_ = copy.deepcopy(center_x)

+ while x_ >= min_x:

+ self._add_patch(x_, y_, region.label, region_count)

+ x_ -= self.stride

+ y_ += self.stride

+

+ # quadrant 3

+ y_ = copy.deepcopy(center_y) + self.stride

+ while y_ <= max_y:

+ x_ = copy.deepcopy(center_x) + self.stride

+ while x_ <= max_x:

+ self._add_patch(x_, y_, region.label, region_count)

+ x_ += self.stride

+ y_ += self.stride

+

+ def _warning(self):

+ """Check patch coverage statistics to identify if provided patch size includes too much background."""

+

+ self.patch_overlap = np.array(self.patch_overlap) / (

+ self.patch_size * self.patch_size

+ )

+ if np.mean(self.patch_overlap) < self.warning_threshold:

+ warnings.warn("Provided patch size is large")

+ warnings.warn("Suggestion: Reduce patch size to include relevant context.")

+

+ def __getitem__(self, index):

+ """Loads an image for a given patch index."""

+

+ if self.entity == "cell":

+ patch = self._get_patch_cell(

+ self.patch_coordinates[index], self.patch_instance_ids[index]

+ )

+ elif self.entity == "tissue":

+ patch = self._get_patch_tissue(

+ self.patch_coordinates[index], self.patch_instance_ids[index]

+ )

+ else:

+ raise ValueError(

+ "Invalid value for entity. Expected 'cell' or 'tissue', got '{}'.".format(

+ self.entity

+ )

+ )

+

+ if self.use_torchvision:

+ patch = self.dataset_transform(patch)

+ else:

+ patch = patch / 255.0 if patch.max() > 1 else patch

+ if self.resize_size is not None:

+ patch = resize(patch, (self.resize_size, self.resize_size))

+ patch = torch.from_numpy(patch).permute(2, 0, 1).float()

+

+ return patch, self.patch_region_count[index]

+

+ def __len__(self):

+ """Returns the length of the dataset."""

+ return len(self.patch_coordinates)
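`_precompute_tissue` walks outward from each region's rounded centroid in steps of `stride = patch_size`, visiting four quadrants bounded by the region's bounding box, and hands every candidate center to `_add_patch`. The plain-Python sketch below reproduces only that candidate enumeration for a single region so the grid can be inspected; `axis_steps` and `tissue_patch_centers` are hypothetical names, the overlap test is left out, and centers are returned as `(y, x)` while `_add_patch` takes `(center_x, center_y)`.

```python
def axis_steps(center, low, high, stride):
    """Split one axis into steps going down from center and up past center.

    down: center, center - stride, ... while >= low
    up:   center + stride, center + 2 * stride, ... while <= high
    """
    down = list(range(center, low - 1, -stride))
    up = list(range(center + stride, high + 1, stride))
    return down, up


def tissue_patch_centers(centroid, bbox, stride):
    """Candidate patch centers (y, x) for one region, in the quadrant order
    used by _precompute_tissue (hypothetical standalone re-implementation)."""
    cy, cx = (int(round(c)) for c in centroid)
    min_y, min_x, max_y, max_x = bbox
    ys_down, ys_up = axis_steps(cy, min_y, max_y, stride)
    xs_down, xs_up = axis_steps(cx, min_x, max_x, stride)
    centers = []
    # Quadrants 1, 4, 2, 3 as named in the original loops
    for ys, xs in ((ys_down, xs_down), (ys_down, xs_up), (ys_up, xs_down), (ys_up, xs_up)):
        centers.extend((y, x) for y in ys for x in xs)
    return centers


# A region with centroid (10.2, 10.7), bounding box (0, 0, 25, 25), stride 8
print(tissue_patch_centers((10.2, 10.7), (0, 0, 25, 25), stride=8))
```

Each candidate is then kept only if its overlap with the region exceeds `int(patch_size * patch_size * threshold)` pixels, so large regions contribute several patches whose embeddings are later averaged.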
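The `TileDataset` docstring notes that label dictionaries are not standardized, so a DataLoader may need a custom `collate_fn`. A minimal sketch, assuming every item is the `(image, masks, tile_labels, slide_labels)` tuple returned by `__getitem__`: images (and masks, when every tile has them) are stacked with NumPy, while label dicts stay as lists. `collate_tiles` is a hypothetical name.

```python
import numpy as np


def collate_tiles(batch):
    """Collate (image, masks, tile_labels, slide_labels) tuples from TileDataset.

    Images (and masks, when every tile has them) are stacked into arrays;
    label dicts are kept as lists because their keys are not standardized.
    """
    images, masks, tile_labels, slide_labels = zip(*batch)
    images = np.stack(images, axis=0)
    if all(m is not None for m in masks):
        masks = np.stack(masks, axis=0)
    else:
        masks = None
    return images, masks, list(tile_labels), list(slide_labels)


def make_item(value):
    """Fake TileDataset item: a CHW tile, one mask and its own labels."""
    return (
        np.full((3, 4, 4), value, dtype=np.uint8),
        np.zeros((1, 4, 4), dtype=np.uint8),
        {"tissue": value},
        {"slide": "s1"},
    )


imgs, msks, tl, sl = collate_tiles([make_item(0), make_item(1)])
print(imgs.shape, msks.shape, tl)
```

It would be used as `DataLoader(TileDataset(path), batch_size=8, collate_fn=collate_tiles)`; the stacked arrays can be converted with `torch.from_numpy` inside the training loop.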

diff --git a/pathml/datasets/pannuke.py b/pathml/datasets/pannuke.py

index 63bb35e8..9e801515 100644

--- a/pathml/datasets/pannuke.py

+++ b/pathml/datasets/pannuke.py

@@ -17,7 +17,7 @@

from pathml.datasets.base_data_module import BaseDataModule

from pathml.datasets.utils import pannuke_multiclass_mask_to_nucleus_mask

-from pathml.ml.hovernet import compute_hv_map

+from pathml.ml.models.hovernet import compute_hv_map

from pathml.utils import download_from_url

diff --git a/pathml/datasets/utils.py b/pathml/datasets/utils.py

index daf408f5..cd18c522 100644

--- a/pathml/datasets/utils.py

+++ b/pathml/datasets/utils.py

@@ -3,7 +3,15 @@

License: GNU GPL 2.0

"""

+import importlib

+

import numpy as np

+import torch

+from torch import nn

+from torch.utils.data import DataLoader

+from tqdm.auto import tqdm

+

+from pathml.datasets.datasets import InstanceMapPatchDataset

def pannuke_multiclass_mask_to_nucleus_mask(multiclass_mask):

@@ -30,3 +38,230 @@ def pannuke_multiclass_mask_to_nucleus_mask(multiclass_mask):

# ignore last channel

out = np.sum(multiclass_mask[:-1, :, :], axis=0)

return out

+

+

+def _remove_modules(model, last_layer):

+ """

+ Remove all modules in the model that come after a given layer.

+

+ Args:

+ model (nn.Module): A PyTorch model.

+ last_layer (str): Last layer to keep in the model.

+

+ Returns:

+ Model (nn.Module) without pruned modules.

+ """

+ modules = [n for n, _ in model.named_children()]

+ modules_to_remove = modules[modules.index(last_layer) + 1 :]

+ for mod in modules_to_remove:

+ setattr(model, mod, nn.Sequential())

+ return model

+

+

+class DeepPatchFeatureExtractor:

+ """

+ Extract deep features for every entity (cell or tissue region) of an instance map by running patches

+ from pathml.datasets.datasets.InstanceMapPatchDataset through a given architecture on the chosen device.

+

+ Args:

+ patch_size (int): Desired size of patch.

+ batch_size (int): Desired size of batch.

+ architecture (str or nn.Module): Name of a torchvision.models architecture (e.g. 'resnet50'), path to a local model saved as a .pth file, or an nn.Module instance.

+ entity (str): Entity to be processed. Must be one of 'cell' or 'tissue'. Defaults to 'cell'.

+ device (torch.device or str): Device used for inference. Defaults to 'cpu'.

+ fill_value (int): Value to fill outside the instance maps. Defaults to 255.

+ threshold (float): Minimum fraction of the patch area that must overlap the entity for the patch to be used. Defaults to 0.2.

+ resize_size (int): Desired resized size to input the network. If None, no resizing is done and

+ the patches of size patch_size are provided to the network. Defaults to 224.

+ with_instance_masking (bool): If pixels outside instance should be masked. Defaults to False.

+ extraction_layer (str): Name of the network module from which the features are

+ extracted. If None, only the classification head is removed. Ignored when architecture is an nn.Module. Defaults to None.

+

+ Returns:

+ Tensor of features computed for each entity.

+ """

+

+ def __init__(

+ self,

+ patch_size,

+ batch_size,

+ architecture,

+ device="cpu",

+ entity="cell",

+ fill_value=255,

+ threshold=0.2,

+ resize_size=224,

+ with_instance_masking=False,

+ extraction_layer=None,

+ ):

+

+ self.fill_value = fill_value

+ self.patch_size = patch_size

+ self.batch_size = batch_size

+ self.resize_size = resize_size

+ self.threshold = threshold

+ self.with_instance_masking = with_instance_masking

+ self.entity = entity

+ self.device = device

+

+ if isinstance(architecture, nn.Module):

+ self.model = architecture.to(self.device)

+ else:

+ # torchvision is needed to build torchvision models and by the type checks in

+ # _validate_model and _remove_layers, including for local .pth models

+ try:

+ global torchvision

+ import torchvision

+ except ImportError:

+ raise ImportError(

+ "Using local or torchvision models requires torchvision to be installed"

+ )

+ if architecture.endswith(".pth"):

+ model = self._get_local_model(path=architecture)

+ else:

+ model = self._get_torchvision_model(architecture)

+ self._validate_model(model)

+ self.model = self._remove_layers(model, extraction_layer).to(self.device)

+

+ self.normalizer_mean = [0.485, 0.456, 0.406]

+ self.normalizer_std = [0.229, 0.224, 0.225]

+

+ self.model.eval() # eval mode before the dummy forward pass so BatchNorm statistics are not updated

+ self.num_features = self._get_num_features(patch_size)

+

+ @staticmethod

+ def _validate_model(model):

+ """Raise an error if the model does not have the required attributes."""

+

+ if not isinstance(model, torchvision.models.resnet.ResNet):

+ if not hasattr(model, "classifier"):

+ raise ValueError(

+ "Please provide either a ResNet-type architecture or"

+ + ' an architecture that has the attribute "classifier".'

+ )

+

+ if not (hasattr(model, "features") or hasattr(model, "model")):

+ raise ValueError(

+ "Please provide an architecture that has the attribute"

+ + ' "features" or "model".'

+ )

+

+ def _get_num_features(self, patch_size):

+ """Get the number of features of a given model by passing a dummy patch through it."""

+ size = self.resize_size if self.resize_size is not None else patch_size

+ dummy_patch = torch.zeros(1, 3, size, size).to(self.device)

+ with torch.no_grad():

+ features = self.model(dummy_patch)

+ return features.shape[-1]

+

+ def _get_local_model(self, path):

+ """Load a model from a local path."""

+ model = torch.load(path, map_location=self.device)

+ return model

+

+ def _get_torchvision_model(self, architecture):

+ """Returns a torchvision model from a given architecture string."""

+

+ module = importlib.import_module("torchvision.models")

+ model_class = getattr(module, architecture)

+ model = model_class(weights="IMAGENET1K_V1")

+ model = model.to(self.device)

+ return model

+

+ @staticmethod

+ def _remove_layers(model, extraction_layer=None):

+ """Returns the model without the unused layers to get embeddings."""

+

+ if hasattr(model, "model"):

+ model = model.model

+ if extraction_layer is not None:

+ model = _remove_modules(model, extraction_layer)

+ if isinstance(model, torchvision.models.resnet.ResNet):

+ if extraction_layer is None:

+ # remove classifier

+ model.fc = nn.Sequential()

+ else:

+ # remove all layers after the extraction layer

+ model = _remove_modules(model, extraction_layer)

+ else:

+ # remove classifier

+ model.classifier = nn.Sequential()

+ if extraction_layer is not None:

+ # remove average pooling layer if necessary

+ if hasattr(model, "avgpool"):

+ model.avgpool = nn.Sequential()

+ # remove all layers in the feature extractor after the extraction layer

+ model.features = _remove_modules(model.features, extraction_layer)

+ return model

+

+ @staticmethod

+ def _preprocess_architecture(architecture):

+ """Preprocess the architecture string to avoid characters that are not allowed as paths."""

+ if architecture.endswith(".pth"):

+ return f"Local({architecture.replace('/', '_')})"

+ else:

+ return architecture

+

+ def _collate_patches(self, batch):

+ """Collate (patch, entity index) pairs into a list of entity indices and a stacked patch tensor."""

+

+ instance_indices = [item[1] for item in batch]

+ patches = [item[0] for item in batch]

+ patches = torch.stack(patches)

+ return instance_indices, patches

+

+    def process(self, input_image, instance_map):
+        """Main processing function: takes an input image and an instance map and returns a
+        ``(num_entities, num_features)`` tensor holding one averaged embedding per entity in the instance map."""
+
+        # Create a pathml.datasets.datasets.InstanceMapPatchDataset object
+        image_dataset = InstanceMapPatchDataset(
+            image=input_image,
+            instance_map=instance_map,
+            entity=self.entity,
+            patch_size=self.patch_size,
+            threshold=self.threshold,
+            resize_size=self.resize_size,
+            fill_value=self.fill_value,
+            mean=self.normalizer_mean,
+            std=self.normalizer_std,
+            with_instance_masking=self.with_instance_masking,
+        )
+
+        # Create a torch DataLoader
+        image_loader = DataLoader(
+            image_dataset,
+            shuffle=False,
+            batch_size=self.batch_size,
+            num_workers=0,
+            collate_fn=self._collate_patches,
+        )
+
+        # Initialize feature tensor
+        features = torch.zeros(
+            size=(len(image_dataset.entities), self.num_features),
+            dtype=torch.float32,
+            device=self.device,
+        )
+        embeddings = {}
+
+        # Get features for batches of patches and accumulate them per entity
+        for instance_indices, patches in tqdm(image_loader, total=len(image_loader)):
+
+            # Send to device
+            patches = patches.to(self.device)
+
+            # Inference mode
+            with torch.no_grad():
+                emb = self.model(patches).squeeze()
+            # squeeze() also drops the batch dimension when a batch holds a single patch; restore it
+            if emb.dim() == 1:
+                emb = emb.unsqueeze(0)
+            for j, key in enumerate(instance_indices):
+
+                # If entity already exists, add features on top of previous features
+                if key in embeddings:
+                    embeddings[key][0] += emb[j]
+                    embeddings[key][1] += 1
+                else:
+                    embeddings[key] = [emb[j], 1]
+        # Average the accumulated features over the number of patches per entity
+        for k, v in embeddings.items():
+            features[k, :] = v[0] / v[1]
+        return features.cpu().detach()
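The loop at the end of `process` keeps a running sum and a patch count per instance and then divides, so each entity's feature vector is the mean of the embeddings of all patches that cover it. A minimal vectorized sketch of the same averaging follows; `mean_by_instance` is a hypothetical helper (not part of PathML), and it assumes the instance indices are zero-based row positions in the output tensor, as the `features[k, :]` assignment implies.

```python
import torch


def mean_by_instance(
    embeddings: torch.Tensor, instance_indices: torch.Tensor, num_instances: int
) -> torch.Tensor:
    """Average patch embeddings per instance (hypothetical helper, not part of PathML).

    embeddings: (num_patches, num_features) float tensor.
    instance_indices: (num_patches,) int64 tensor; entry i is the (assumed zero-based) output row of patch i.
    """
    instance_indices = instance_indices.to(embeddings.device)
    sums = torch.zeros(
        num_instances, embeddings.shape[1], dtype=embeddings.dtype, device=embeddings.device
    )
    counts = torch.zeros(num_instances, dtype=embeddings.dtype, device=embeddings.device)
    # Add each patch embedding to the row of its instance and count the patches per instance
    sums.index_add_(0, instance_indices, embeddings)
    counts.index_add_(
        0,
        instance_indices,
        torch.ones(instance_indices.shape[0], dtype=embeddings.dtype, device=embeddings.device),
    )
    # Instances without any patch keep an all-zero row, like the torch.zeros initialization in process()
    return sums / counts.clamp(min=1).unsqueeze(1)


# Three patches: two cover instance 0, one covers instance 2, none covers instance 1
emb = torch.tensor([[1.0, 2.0], [3.0, 4.0], [10.0, 10.0]])
idx = torch.tensor([0, 0, 2])
print(mean_by_instance(emb, idx, num_instances=3))
```

Unlike the dictionary-based loop, this sketch needs all patch embeddings and indices in memory at once; keeping `sums` and `counts` outside the function would let it accumulate batch by batch instead.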

diff --git a/pathml/graph/__init__.py b/pathml/graph/__init__.py
new file mode 100644
index 00000000..b8a871ed
--- /dev/null
+++ b/pathml/graph/__init__.py
@@ -0,0 +1,11 @@
+"""
+Copyright 2021, Dana-Farber Cancer Institute and Weill Cornell Medicine
+License: GNU GPL 2.0
+"""
+
+from .preprocessing import (
+    ColorMergedSuperpixelExtractor,
+    KNNGraphBuilder,
+    RAGGraphBuilder,
+)
+from .utils import Graph, HACTPairData, build_assignment_matrix, get_full_instance_map
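The `GraphFeatureExtractor` added in `pathml/graph/preprocessing.py` computes global measures such as diameter and density, then reduces per-node measures (degree, coreness, centralities) to their mean, median, max, min, sum and standard deviation. The sketch below reproduces that reduction on a toy graph; `summarize` is a hypothetical stand-in for `get_stats`, and the 4-node path graph is an illustrative assumption rather than a real cell graph.

```python
import networkx as nx
import numpy as np


def summarize(values: dict, prefix: str) -> dict:
    """Reduce a {node: value} mapping to summary statistics (hypothetical stand-in for get_stats)."""
    arr = np.fromiter(values.values(), dtype=float)
    return {
        f"{prefix}_mean": float(arr.mean()),
        f"{prefix}_median": float(np.median(arr)),
        f"{prefix}_max": float(arr.max()),
        f"{prefix}_min": float(arr.min()),
        f"{prefix}_sum": float(arr.sum()),
        f"{prefix}_std": float(arr.std()),
    }


# Toy graph: a path 0-1-2-3
G = nx.path_graph(4)
features = {"diameter": nx.diameter(G), "density": nx.density(G)}
# Per-node measures are collapsed into graph-level statistics
features.update(summarize(dict(G.degree()), prefix="degree"))
features.update(summarize(nx.core_number(G), prefix="coreness"))
print(features)
```

For this path graph the node degrees are 1, 2, 2, 1, so `degree_mean` is 1.5 and `degree_sum` is 6; every node has core number 1.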

diff --git a/pathml/graph/preprocessing.py b/pathml/graph/preprocessing.py
new file mode 100644
index 00000000..e38a44d0
--- /dev/null
+++ b/pathml/graph/preprocessing.py
@@ -0,0 +1,663 @@
+"""
+Copyright 2021, Dana-Farber Cancer Institute and Weill Cornell Medicine
+License: GNU GPL 2.0
+"""
+
+import math
+from abc import abstractmethod
+
+import cv2
+import networkx as nx
+import numpy as np
+import pandas as pd
+import skimage
+import torch
+

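+from packaging.version import Version
+
+# scikit-image 0.20 moved the region adjacency graph (RAG) utilities from skimage.future.graph to
+# skimage.graph; compare parsed versions, since plain string comparison is not version-aware
+if Version(skimage.__version__) < Version("0.20.0"):
+    from skimage.future import graph
+else:
+    from skimage import graph
+
+from skimage.color.colorconv import rgb2hed
+from skimage.measure import regionprops
+from skimage.segmentation import slic
+from sklearn.neighbors import kneighbors_graph
+
+from pathml.graph.utils import Graph, two_hop
+
+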

+class GraphFeatureExtractor:
+    """
+    Extracts graph-level features from a networkx graph object.
+
+    Args:
+        use_weight (bool, optional): Whether to use edge weights for feature computation. Defaults to False.
+        alpha (float, optional): Damping factor (alpha) for PageRank. Defaults to 0.85.
+    """
+
+    def __init__(self, use_weight=False, alpha=0.85):
+        self.use_weight = use_weight
+        self.feature_dict = {}
+        self.alpha = alpha
+
+    def get_stats(self, dct, prefix="add_pre"):
+        """Return the mean, median, max, min, sum and standard deviation of the values in ``dct``,
+        keyed as ``{prefix}_{statistic}``."""
+        local_dict = {}
+        lst = list(dct.values())
+        local_dict[f"{prefix}_mean"] = np.mean(lst)
+        local_dict[f"{prefix}_median"] = np.median(lst)
+        local_dict[f"{prefix}_max"] = np.max(lst)
+        local_dict[f"{prefix}_min"] = np.min(lst)
+        local_dict[f"{prefix}_sum"] = np.sum(lst)
+        local_dict[f"{prefix}_std"] = np.std(lst)
+        return local_dict
+
+    def process(self, G):
+        """Compute graph-level features for ``G``.
+
+        Returns:
+            Dictionary of keys as feature type and values as features
+        """
+        if self.use_weight:
+            # every edge must carry a weight, not just the first one
+            if G.number_of_edges() > 0 and all(
+                "weight" in data for _, _, data in G.edges(data=True)
+            ):
+                weight = "weight"
+            else:
+                raise ValueError(
+                    "Not all edges have an attribute called 'weight' but use_weight is True"
+                )
+        else:
+            weight = None
+
+        # start from an empty dict so that repeated calls do not share or overwrite earlier results
+        self.feature_dict = {}
+        self.feature_dict["diameter"] = nx.diameter(G)
+        self.feature_dict["radius"] = nx.radius(G)
+        self.feature_dict["assortativity_degree"] = nx.degree_assortativity_coefficient(
+            G
+        )
+        self.feature_dict["density"] = nx.density(G)
+        self.feature_dict["transitivity_undir"] = nx.transitivity(G)
+
+        # compute HITS once and reuse both the hub and the authority scores
+        hubs, authorities = nx.hits(G)
+        self.feature_dict.update(self.get_stats(hubs, prefix="hubs"))
+        self.feature_dict.update(self.get_stats(authorities, prefix="authorities"))
+        self.feature_dict.update(
+            self.get_stats(nx.constraint(G, weight=weight), prefix="constraint")
+        )
+        self.feature_dict.update(self.get_stats(nx.core_number(G), prefix="coreness"))
+        self.feature_dict.update(
+            self.get_stats(
+                nx.eigenvector_centrality(G, weight=weight), prefix="egvec_centr"
+            )
+        )
+        self.feature_dict.update(
+            self.get_stats(
+                {node: val for (node, val) in G.degree(weight=weight)}, prefix="degree"
+            )
+        )
+        # pass weight explicitly: nx.pagerank otherwise uses the "weight" edge attribute by default
+        self.feature_dict.update(
+            self.get_stats(
+                nx.pagerank(G, alpha=self.alpha, weight=weight),
+                prefix="personalized_pgrank",
+            )
+        )
+
+        return self.feature_dict
+
+

+class BaseGraphBuilder:
+    """Base interface class for graph building.
+
+    Args:
+        nr_annotation_classes (int): Number of classes in annotation. Used only if setting node labels.
+            Defaults to 5.
+        annotation_background_class (int): Background class label in annotation. Used only if setting
+            node labels. Defaults to None.
+        add_loc_feats (bool): Flag to include location-based features (i.e., normalized centroids) in the
+            node feature representation. Defaults to False.
+    """
+
+    def __init__(
+        self,
+        nr_annotation_classes: int = 5,
+        annotation_background_class=None,
+        add_loc_feats=False,
+        **kwargs,
+    ):
+        """Base Graph Builder constructor."""
+        self.nr_annotation_classes = nr_annotation_classes
+        self.annotation_background_class = annotation_background_class
+        self.add_loc_feats = add_loc_feats