-

Notifications

You must be signed in to change notification settings - Fork 0

/

Copy pathcompleted_lacc_2021_m2_pj2_toifall.py

287 lines (202 loc) · 11.5 KB

/

completed_lacc_2021_m2_pj2_toifall.py

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268

269

270

271

272

273

274

275

276

277

278

279

280

281

282

283

284

285

286

# -*- coding: utf-8 -*-

"""Completed LACC-2021-M2-PJ2-ToiFall.ipynb

Automatically generated by Colaboratory.

Original file is located at

https://colab.research.google.com/drive/1DZv9l0827mq9vwJ_3ci0cSUXSMN8Ro7Y

# ToiFall: WiFi CSI Activity Recognition

## Introduction

Falls in restrooms and bathrooms can lead to severe injuries and even pose life threats to patients. ToiFall project collects Channel State Information (CSI) from commodity Wi-Fi devices. Channel State Information indicates the Wi-Fi signal channel properties (frequency response) over time, which is leveraged initially to improve Wi-Fi communication qualities.

As part of the indoor channel, the human body reflects and deflects the Wi-Fi signals, and then the CSI is affected by human activities. Different human movements form various textures on images drawn with CSI data, and such textures can be used for feature extraction and classification.

This dataset consists of 1750 pictures collected from 17 volunteers, who are asked to perform one of the six frequent movements in restrooms. These movements are illustrated in the figure below: walk, stand up, sit down, fall to the left, fall to the right, and fall to the front.

For more information, please refer to the following publication:

[1] Wang, Ziqi, Zhihao Gu, Junwei Yin, Zhe Chen, and Yuedong Xu. "Syncope detection in toilet environments using Wi-Fi channel state information." In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, pp. 287-290. 2018.

The ToiFall dataset is available at:

https://drive.google.com/file/d/1RJvLL58m__km6vPTZbW2IV3FqgplvOj4/view?usp=sharing

Other References:

[2]Gabor Filter - Wikipedia: https://en.wikipedia.org/wiki/Gabor_filter

[3]Gabor filter banks for texture classification: Haghighat, Mohammad, Saman Zonouz, and Mohamed Abdel-Mottaleb. "Identification using encrypted biometrics." In International Conference on Computer Analysis of Images and Patterns, pp. 440-448. Springer, Berlin, Heidelberg, 2013.

This project consists of the following steps:

Load pre-processed dataset -> Gabor Filter feature extraction -> SVM Classification

"""

!pip install numpy

!pip install scipy

!pip install matplotlib

!pip install opencv-python

!pip install tqdm

!pip install pandas

!pip install scikit-image

!pip install scikit-learn

"""## Load and Prepare Dataset

Download the preprocessed dataset from the link below, then upload the zip file to your Google Drive:

https://drive.google.com/file/d/1RJvLL58m__km6vPTZbW2IV3FqgplvOj4/view?usp=sharing

Each image in the dataset is collected from two WiFi links when some activity is performed.

"""

# Mount Google Drive and unzip the dataset

from google.colab import drive

drive.mount('/content/gdrive')

!unzip "/content/gdrive/MyDrive/toifall_dataset.zip" -d "/content/gdrive/MyDrive/toifall_dataset/"

# open a sample image

from skimage import io

import numpy as np

img = io.imread("/content/gdrive/MyDrive/toifall_dataset/walk/walk_077.jpg")

io.imshow(img)

print("Min value in this geryscale image is {}".format(np.min(img)))

print("Max value in this geryscale image is {}".format(np.max(img)))

"""**The data has already gone through the following pre-processing.** The information below is just to provide you with some background information to help you understand the full scale of this project. It is **not** related to the algorithm coding tasks that you are going to finish.

(1) Channel State Information from all the 180 channels is juxtaposed to form a (180 * timestamp) size matrix. The 180 channels are 2 WiFi links x 3 Antennas/Link x 30 Subcarriers/Antenna.

(2) Each data point is a complex number. We first extract the amplitude of CSI data. Then we apply a 10-order Butterworth filter with a cutoff frequency of 40Hz on each channel to remove the high-frequency noise.

(3) We calculate the signal variance within a sliding window of 500 points and segment out the section with the highest variance -- they are more likely to be related to human activities.

(4) We further enhance the signal by removing the static frequency response caused by the indoor environment. This is achieved by performing an SVD decomposition of the data matrix, then reconstructing the data matrix with the first eigenvalue set to zero.

"""

# load the dataset into memory

import os

activity = ["empty", "fall_forward", "fall_left", "fall_right", "sit", "stand", "walk"]

data = []

label = []

for i in range(len(activity)):

path = "/content/gdrive/MyDrive/toifall_dataset/" + activity[i]

dir_list = os.listdir(path)

cnt = 0

for each in dir_list:

img_path = path + "/" + each

data.append(io.imread(img_path))

label.append(i)

cnt += 1

if cnt >= 100: # reduce the number if the feature extraction take too long to execute

cnt=0

# break # if comment out the break: load the whole dataset to memory

# open an example

data = np.array(data)

label = np.array(label)

io.imshow(data[277])

print("Label: {}".format(activity[label[277]]))

data.shape

# (7xN, 180, 500), N of your choice

"""## Gabor Filter Feature Extraction

In this section, we are going to extract the feature from the images using Gabor filter. Gabor filters have been widely used in pattern analysis applications. The most important advantage of Gabor filters is their invariance to illumination, rotation, scale, and translation. [3]

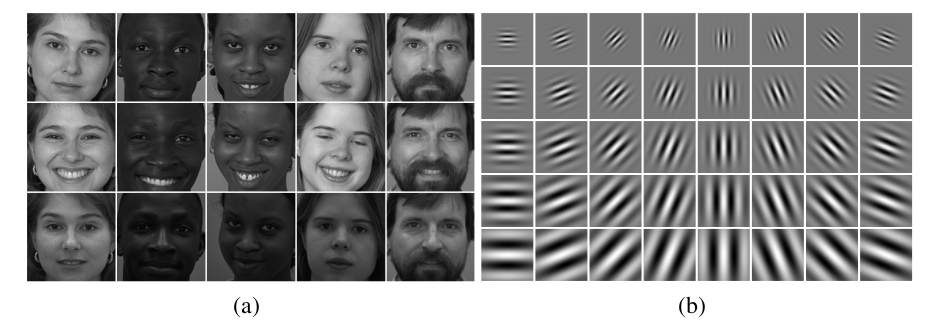

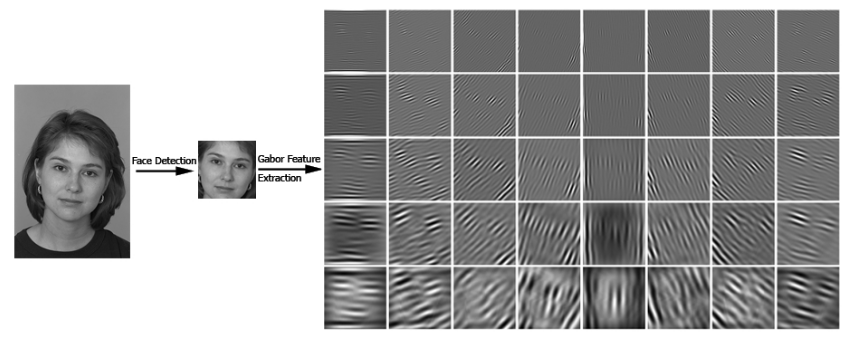

Here we will privide you with a high-level non-precise intuition about this feature extraction process. A typical group of Gabor filter kernels is shown in (b) below. It looks like a number of stripes with different scales and orientations.

We will do a cross-correlation between each image and each kernels. From Project 1 we already know that the resulting value will be higher if the image region being calculated looks alike the template. In this case, the Gabor filter kernels servers as the template. Different iamges will react differntly to different kernels. For example, if the image of walking contains a lot of wide vertical stripes, when calcuating correlation with a kernal looking similar, the average value will be large. In this way, the "reaction" of the image w.r.t to different kernels can serve as a feature for future classification. See the example below, the figures in this block come from [3].

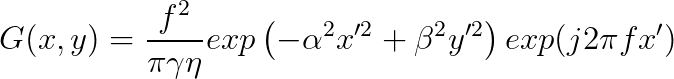

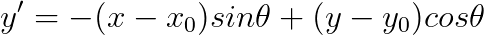

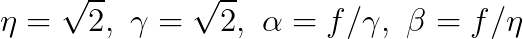

**Now it is time to implement you own *gaborFilterBank* with different scales and orientations.** Please follow the following equation:

,where

$f$ is related to the scales, where we start from $f_0 = 0.25$, then for the next scales, $f_1 = \frac{0.25}{\sqrt 2}$, $f_2 = \frac{0.25}{(\sqrt 2)^2}$, $f_3 = \frac{0.25}{(\sqrt 2)^3}$, so on so forth.

$\theta$ is related to the orientations, where $\theta_i = \frac{i}{number\_of\_orientations} \times \pi $

"""

# prepare filter bank kernels

def gaborFilterBank(u=8, v=6, m=15, n=15):

'''

Inputs:

u: No. of scales (usually set to 8)

v: No. of orientations (usually set to 6)

m: No. of rows in a 2-D Gabor filter (an odd integer number usually set to 15)

n: No. of columns in a 2-D Gabor filter (an odd integer number usually set to 39)

Output:

gaborArray: A 1D array of size (u x v), in which each element is a m by n matrix

(a 2-D Gabor filter)

'''

assert m%2 != 0

assert n%2 != 0

gaborArray = []

fmax = 0.25;

gama = np.sqrt(2);

eta = np.sqrt(2);

pi = np.pi

x0 = m//2

y0 = m//2

#FIND THIS OUT ALSO WHAT ARE X AND Y

##########################################################

# scales

for scale in range(u):

f = fmax/np.sqrt(2)**scale

alpha = f/gama

beta = f/eta

# orientation

for ori in range(v):

theta = ori/v * pi

filter = np.zeros((m,n), dtype = np.complex64)

for row in range(m):

for col in range(n):

x = (row-x0)*np.cos(theta) + (col-y0) * np.sin(theta)

y = -(row-x0)*np.sin(theta) + (col-y0) * np.cos(theta)

# print(x, alpha)

a = (-alpha**2) * (x**2) + (beta**2) * (y**2)

b = (1j*2*pi*f*x)

filter[row, col] = (f**2)/(pi*gama*eta) * np.exp(a) * np.exp(b)

gaborArray.append(filter)

##########################################################

return np.array(gaborArray, dtype = np.complex64)

# x0 y0 is center

# Generate Gabor Filter Kernels

kernels = gaborFilterBank()

# Visualize the generated kernels

import matplotlib.pyplot as plt

fig, axs = plt.subplots(nrows=8, ncols=6, figsize=(15,15))

for i in range(8):

for j in range(6):

axs[i][j].imshow(np.real(kernels[i*6+j]))

plt.show()

"""Compare your results with this one:

"""

from tqdm import tqdm

from scipy import signal

from scipy.ndimage import correlate

processed_data = []

def compute_feats(image, kernels):

# compute cross-corr of one image with all the Gabor kernels

feats = np.zeros((len(kernels), 2), dtype=np.double)

for k, kernel in enumerate(kernels):

# filtered = np.abs(signal.correlate2d(image, kernel, mode='valid'))

filtered = np.abs(correlate(image, kernel, mode='constant'))

feats[k, 0] = filtered.mean()

feats[k, 1] = filtered.std()

return feats

# result = np.zeros((row_bound, col_bound))

# Extract features from each element of the dataset

processed_data = []

for each_img in tqdm(data):

feats = compute_feats(each_img, kernels)

processed_data.append(np.array(feats).flatten())

print(np.shape(processed_data))

print(processed_data[0])

print(processed_data[300])

"""## SVM Classification"""

# Prepare training-testing datasets for SVM

# Normalize the extracted features

processed_data = np.array(processed_data)

for i in range(len(processed_data)):

processed_data[i] = (processed_data[i] - np.min(processed_data[i]))/ (np.max(processed_data[i])

- np.min(processed_data[i]))

# Split the data into training and testing set

from sklearn.model_selection import StratifiedShuffleSplit

stratSplit = StratifiedShuffleSplit(n_splits=1, test_size=0.25, random_state=0)

for train_idx, test_idx in stratSplit.split(processed_data, label):

X_train=processed_data[train_idx]

y_train=label[train_idx]

X_test=processed_data[test_idx]

y_test=label[test_idx]

print(np.shape(X_train))

print(np.shape(X_test))

print(np.shape(y_train))

print(np.shape(y_test))

print(np.unique(y_train, return_counts=True))

print(np.unique(y_test, return_counts=True))

processed_data[0]

# Train the SVM classifier

from sklearn import datasets, svm, metrics

clf = svm.SVC(C=10, kernel="poly", degree=6)

clf.fit(X_train, y_train)

# Make predictions with the trained SVM classifier

predicted = clf.predict(X_test)

predicted

disp = metrics.plot_confusion_matrix(clf, X_test, y_test)

disp.figure_.suptitle("Confusion Matrix")

print(f"Confusion matrix:\n{disp.confusion_matrix}")

accuracy = np.sum(predicted==y_test)/len(predicted)

print(accuracy)