
Multimodal Cross-attention incorrect results in #2796

Comments


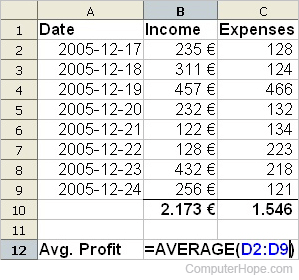

The AI2D eval reports 60% accuracy, which is lower than the 65% threshold shown in the multimodal guide. Meta's self-reported results show it should be ~91%.


Hi, I ran into the same issue with Llama-3.2-11B-Vision-Instruct. The engine works for some images but fails for others, while the transformers model works fine with those same images.


@JC1DA @mutkach I tried to run the non-instruct version, which is the one in the guide's README, and that seems to be working fine. In the above I used the image from the previous comment. So is this specific to the instruct version?


Hi @mayani-nv, I used the instruct version. The FP16 version does not generate anything, while the BF16 version generated an incorrect description. I can test the non-instruct version; can you also test the instruct version to confirm?


System Info

cpu: x86_64

mem: 128G

gpu: H100 80G

docker: tritonserver:24.12-trtllm-python-py3

Cuda: 12.6

Driver: 535.216.01

TensorRT: 10.7.0

TensorRT-LLM: v0.17.0

Who can help?

@kaiyux @byshiue

Information

Tasks

examples folder (such as GLUE/SQuAD, ...)

Reproduction

Steps to reproduce:

Expected behavior

The cross-attention block output should be closer to the torch implementation. Otherwise, accuracy will be markedly lower for specific OCR-related tasks. I can provide specific examples if needed. I understand that exactly equal output is not to be expected from the optimized trtllm engine, but the observed difference is an order of magnitude larger than expected.
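For a rough sense of what "expected" precision loss looks like, recall how coarse the two 16-bit formats are: near 1.0, BF16 values are spaced 2^-7 apart and FP16 values 2^-10 apart. The sketch below emulates that rounding with the Python standard library only. The helper names `to_fp16`, `to_bf16`, and `relative_error` are invented for this illustration and are not part of TensorRT-LLM or torch, and the BF16 emulation assumes finite inputs (no NaN or infinity handling).

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE half-precision value (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x: float) -> float:
    """Round x to the nearest bfloat16 value (assumes a finite input)."""
    # Go through float32 first: bfloat16 is the top 16 bits of a float32.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits that bfloat16 drops.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def relative_error(x: float, rounded: float) -> float:
    """Relative error introduced by rounding a non-zero value x."""
    return abs(rounded - x) / abs(x)


if __name__ == "__main__":
    for value in (0.1, 1.0 + 2**-9, 3.14159):
        print(
            value,
            "fp16 rel err:", relative_error(value, to_fp16(value)),
            "bf16 rel err:", relative_error(value, to_bf16(value)),
        )
```

This only gives a per-value baseline. Rounding error also accumulates through matrix multiplications and across layers, so any acceptance threshold still has to be chosen empirically.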

actual behavior

During the context phase there is a growing discrepancy between the torch outputs and the engine outputs, which leads to incorrect results during the decoding phase. Note that only vision capabilities are affected (when cross-attention blocks are not skipped).
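One way to quantify this kind of drift is to dump the hidden states after each decoder layer from both implementations and compare them layer by layer. The sketch below is illustrative only: `compare_layers`, the metric names, and the 0.99 cosine threshold are assumptions made here, not part of TensorRT-LLM or of the measurements in this report. It works on flattened Python lists so that it runs without dependencies; how the per-layer tensors are captured from each implementation is left out.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def max_abs_diff(ref: Sequence[float], out: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two flat tensors."""
    return max(abs(r - o) for r, o in zip(ref, out))


def cosine_similarity(ref: Sequence[float], out: Sequence[float]) -> float:
    """Cosine of the angle between two flat tensors."""
    dot = sum(r * o for r, o in zip(ref, out))
    norm_ref = math.sqrt(sum(r * r for r in ref))
    norm_out = math.sqrt(sum(o * o for o in out))
    if norm_ref == 0.0 or norm_out == 0.0:
        # Treat two all-zero tensors as identical, otherwise as unrelated.
        return 1.0 if norm_ref == norm_out else 0.0
    return dot / (norm_ref * norm_out)


def compare_layers(
    ref_layers: Sequence[Sequence[float]],
    out_layers: Sequence[Sequence[float]],
    min_cosine: float = 0.99,  # hypothetical threshold, tune per model
) -> Tuple[List[Dict[str, float]], Optional[int]]:
    """Return per-layer metrics and the index of the first layer whose
    cosine similarity falls below min_cosine (None if no layer does)."""
    report: List[Dict[str, float]] = []
    first_bad: Optional[int] = None
    for idx, (ref, out) in enumerate(zip(ref_layers, out_layers)):
        if len(ref) != len(out):
            raise ValueError(f"layer {idx}: shape mismatch")
        cos = cosine_similarity(ref, out)
        report.append(
            {"layer": idx, "max_abs_diff": max_abs_diff(ref, out), "cosine": cos}
        )
        if first_bad is None and cos < min_cosine:
            first_bad = idx
    return report, first_bad


if __name__ == "__main__":
    # Toy data standing in for flattened per-layer hidden states.
    reference = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]
    engine = [[1.0, 2.0, 3.0], [1.01, 1.99, 3.0], [3.0, -2.0, 1.0]]
    rows, first_divergent = compare_layers(reference, engine)
    for row in rows:
        print(row)
    print("first divergent layer:", first_divergent)
```

With real tensors, each layer's output could be passed in as `tensor.flatten().tolist()`. Reporting the first layer that crosses the threshold helps separate a single faulty block from error that merely accumulates with depth.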

additional notes

Regarding the matter,

I tried turning off some or most of the trtllm-build flags, which led to even more incorrect results.

The visual transformer engine outputs seem to differ slightly too, so I injected the torch output tensor directly into the trtllm pipeline in order to rule out the visual encoder for now.

The problem seems to be inside the cross-attention block, and the discrepancies show up only when multimodality is involved.

Is there a way to fall back to an unoptimized cross-attention implementation that I could use until the underlying problem is solved? Turning off gpt-plugin does not seem to be supported right now.

There is a chance that the problem is somewhere on my side (I previously had to change some code while trying to solve a different issue, triton-inference-server/tensorrtllm_backend#692), though I think that chance is minimal now that I have double- and triple-checked everything.

Also, I would appreciate it if you could share some advanced debugging techniques for ruling out similar issues in the future.

P.S. thank you for your work, it is much appreciated!
