
Accessing your device from the internet¶

The challenge most of us face with remotely accessing our home networks is that our routers usually have a dynamically-allocated IP address on the public (WAN) interface.

From time to time the IP address that your ISP assigns changes and it's difficult to keep up. Fortunately, there is a solution: Dynamic DNS. The section below shows you how to set up an easy-to-remember domain name that follows your public IP address no matter when it changes.

Secondly, how do you get into your home network? Your router has a firewall that is designed to keep the rest of the internet out of your network to protect you. The solution to that is a Virtual Private Network (VPN) or "tunnel".

Dynamic DNS¶

There are two parts to a Dynamic DNS service:

- You have to register with a Dynamic DNS service provider and obtain a domain name that is not already taken by someone else.
- Something on your side of the network needs to propagate updates so that your chosen domain name remains in sync with your router's dynamically-allocated public IP address.

Register with a Dynamic DNS service provider¶

The first part is fairly simple and there are quite a few Dynamic DNS service providers including:

- DuckDNS.org
- NoIP.com

You can find more service providers by Googling "Dynamic DNS service".

Some router vendors also provide their own built-in Dynamic DNS capabilities for registered customers so it's a good idea to check your router's capabilities before you plough ahead.

Dynamic DNS propagation¶

The "something" on your side of the network propagating WAN IP address changes can be either:

- your router; or
- a "behind the router" technique, typically a periodic job running on the same Raspberry Pi that is hosting IOTstack and WireGuard.

If you have the choice, your router is to be preferred. That's because your router is usually the only device in your network that actually knows when its WAN IP address changes. A Dynamic DNS client running on your router will propagate changes immediately and will only transmit updates when necessary. More importantly, it will persist through network interruptions or Dynamic DNS service provider outages until it receives an acknowledgement that the update has been accepted.

Nevertheless, your router may not support the Dynamic DNS service provider you wish to use, or may come with constraints that you find unsatisfactory, so any behind-the-router technique is always a viable option, providing you understand its limitations.

A behind-the-router technique usually relies on sending updates according to a schedule. An example is a cron job that runs every five minutes. That means any router WAN IP address changes won't be propagated until the next scheduled update. In the event of network interruptions or service provider outages, it may take close to ten minutes before everything is back in sync. Moreover, given that WAN IP address changes are infrequent events, most scheduled updates will be sending information unnecessarily.
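One way to soften the last drawback is to remember the address that was last sent and skip the update when nothing has changed. The following is only a sketch under assumptions: the function name `wan_ip_changed` and the cache file are hypothetical and not part of IOTstack, and how the current WAN address is obtained is left to the caller.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of IOTstack.
# Succeeds (exit 0) when the address passed as $1 differs from the one
# remembered in the cache file $2, and records the new address.
wan_ip_changed() {
    local current_ip="$1"
    local cache_file="$2"
    local previous_ip=""

    # Read the previously recorded address, if there is one.
    if [ -f "$cache_file" ]; then
        previous_ip="$(cat "$cache_file")"
    fi

    if [ "$current_ip" = "$previous_ip" ]; then
        return 1    # unchanged: no update needed
    fi

    printf '%s\n' "$current_ip" > "$cache_file"
    return 0        # changed (or first run): an update should be sent
}
```

Note that this sketch records the new address before any update is known to have succeeded. A more robust version would write the cache only after the Dynamic DNS provider has acknowledged the update.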

DuckDNS container¶

The recommended and easiest solution is to install the DuckDNS Docker container from the menu. It includes the cron service, and logs are handled by Docker.

For configuration see Containers/Duck DNS.

Note

This is a recently added container. Please don't hesitate to report any possible faults on Discord or as GitHub issues.

DuckDNS client script¶

Info

This method will soon be deprecated in favor of the DuckDNS container.

IOTstack provides a solution for DuckDNS. The best approach to running it is:

    $ mkdir -p ~/.local/bin
    $ cp ~/IOTstack/duck/duck.sh ~/.local/bin

The reason for recommending that you make a copy of duck.sh is because the "original" is under Git control. If you change the "original", Git will keep telling you that the file has changed and it may block incoming updates from GitHub.

Then edit ~/.local/bin/duck.sh to add your DuckDNS domain name(s) and token:

    DOMAINS="YOURS.duckdns.org"
    DUCKDNS_TOKEN="YOUR_DUCKDNS_TOKEN"

For example:

    DOMAINS="downunda.duckdns.org"
    DUCKDNS_TOKEN="8a38f294-b5b6-4249-b244-936e997c6c02"

Note:

- The DOMAINS= variable can be simplified to just "YOURS", with the .duckdns.org portion implied, as in:

      DOMAINS="downunda"

Once your credentials are in place, test the result by running:

    $ ~/.local/bin/duck.sh
    ddd, dd mmm yyyy hh:mm:ss ±zzzz - updating DuckDNS
    OK

The timestamp is produced by the duck.sh script. The expected responses from the DuckDNS service are:

- "OK" - indicating success; or
- "KO" - indicating failure.

Check your work if you get "KO" or any other errors.

Next, assuming dig is installed on your Raspberry Pi (sudo apt install dnsutils), you can test propagation by sending a directed query to a DuckDNS name server. For example, assuming the domain name you registered was downunda.duckdns.org, you would query like this:

    $ dig @ns1.duckdns.org downunda.duckdns.org +short

The expected result is the IP address of your router's WAN interface. It is a good idea to confirm that it is the same as you get from whatismyipaddress.com.

A null result indicates failure so check your work.

Remember, the Domain Name System is a distributed database. It takes time for changes to propagate. The response you get from directing a query to ns1.duckdns.org may not be the same as the response you get from any other DNS server. You often have to wait until cached records expire and a recursive query reaches the authoritative DuckDNS name-servers.

Running the DuckDNS client automatically¶

The recommended arrangement for keeping your Dynamic DNS service up-to-date is to invoke duck.sh from cron at five-minute intervals.

If you are new to cron, see these guides for more information about setting up and editing your crontab:

A typical crontab will look like this:

    SHELL=/bin/bash
    HOME=/home/pi
    PATH=/home/pi/.local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

    */5 * * * * duck.sh >/dev/null 2>&1

The first three lines construct the runtime environment correctly and should be at the start of any crontab.

The last line means "run duck.sh every five minutes". See crontab.guru if you want to understand the syntax of the last line.

When launched in the background by cron, the script supplied with IOTstack adds a random delay of up to one minute to try to reduce the "hammering effect" of a large number of users updating DuckDNS simultaneously.
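The idea of a random start-up delay can be sketched as follows. This is an illustration only: the function names are hypothetical, and the actual duck.sh may detect background execution and compute its delay differently.

```shell
#!/usr/bin/env bash
# Illustrative sketch; not the code used by duck.sh.

# Print a pseudo-random delay in whole seconds, from 0 up to (but not
# including) the limit given as the first argument (default 60).
random_delay() {
    local limit="${1:-60}"
    echo $(( RANDOM % limit ))
}

# Sleep for a random interval only when standard input is not a terminal,
# which is the usual situation when a script is launched by cron.
maybe_delay() {
    if [ ! -t 0 ]; then
        sleep "$(random_delay "${1:-60}")"
    fi
}
```

A script would call `maybe_delay 60` before contacting the service. When run from a terminal session, the call returns immediately.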

Standard output and standard error are redirected to /dev/null which is appropriate in this instance. When DuckDNS is working correctly (which is most of the time), the only output from the curl command is "OK". Logging that every five minutes would add wear and tear to SD cards for no real benefit.

If you suspect DuckDNS is misbehaving, you can run the duck.sh command from a terminal session, in which case you will see all the curl output in the terminal window.

If you wish to keep a log of duck.sh activity, the following will get the job done:

- Make a directory to hold log files:

      $ mkdir -p ~/Logs

- Edit the last line of the crontab like this:

      */5 * * * * duck.sh >>./Logs/duck.log 2>&1

Remember to prune the log from time to time. The generally-accepted approach is:

    $ cat /dev/null >~/Logs/duck.log
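Truncating discards the whole history. If you would rather keep the most recent entries, something along these lines may help. It is a sketch only; `trim_log` is a hypothetical helper and the default of 1000 lines is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep only the last N lines of a log file.
#   $1 = path to the log file
#   $2 = number of lines to keep (default 1000)
trim_log() {
    local log_file="$1"
    local keep_lines="${2:-1000}"
    local tmp_file

    [ -f "$log_file" ] || return 0      # nothing to trim

    tmp_file="$(mktemp)" || return 1
    # Copy the tail to a temporary file, then write it back over the
    # original so the log keeps its path, owner and permissions.
    tail -n "$keep_lines" "$log_file" > "$tmp_file" && cat "$tmp_file" > "$log_file"
    rm -f "$tmp_file"
}
```

For example, `trim_log ~/Logs/duck.log 500` would keep the last 500 lines.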

Virtual Private Network¶

WireGuard¶

WireGuard is supplied as part of IOTstack. See WireGuard documentation.

PiVPN¶

pimylifeup.com has an excellent tutorial on how to install PiVPN.

In points 17 and 18 they mention using noip for their dynamic DNS. Here you can use the DuckDNS address if you created one.

Don't forget you need to open port 1194 on your firewall. Most people won't be able to VPN from inside their network, so download an OpenVPN client for your mobile phone and try to connect over mobile data. (More info.)

Once you activate your VPN (from your phone/laptop/work computer) you will effectively be on your home network and you can access your devices as if you were on the wifi at home.

I personally use the VPN any time I'm on public wifi, so that all my traffic is secure.

Zerotier¶

https://www.zerotier.com/

Zerotier is an alternative to PiVPN that doesn't require port forwarding on your router. It does, however, require registering for their free tier service here.

Kevin Zhang has written a how-to guide here. Just note that the install link is outdated and should be:

    $ curl -s 'https://raw.githubusercontent.com/zerotier/ZeroTierOne/master/doc/contact%40zerotier.com.gpg' | gpg --import && \
      if z=$(curl -s 'https://install.zerotier.com/' | gpg); then echo "$z" | sudo bash; fi


Backing up and restoring IOTstack¶

This page explains how to use the backup and restore functionality of IOTstack.

Backup¶

The backup command can be executed from IOTstack's menu, or from a cronjob.

Running backup¶

To ensure that all your data is saved correctly, the stack should be brought down. This is mainly due to databases potentially being in a state that could cause data loss.

There are 2 ways to run backups:

- From the menu: Backup and Restore > Run backup
- Running the following command: bash ./scripts/backup.sh

The command that's run from the command line can also be executed from a cronjob:

    0 2 * * * cd /home/pi/IOTstack && /bin/bash ./scripts/backup.sh

The current directory of bash must be IOTstack's directory, to ensure that it can find the relative paths of the files it's meant to back up. The example above assumes that IOTstack is inside the pi user's home directory.

Arguments¶

    ./scripts/backup.sh {TYPE=3} {USER=$(whoami)}

- Types:
  - 1 = Backup with Date
    - A tarball file will be created that contains the date and time the backup was started, in the filename.
  - 2 = Rolling Date
    - A tarball file will be created that contains the day of the week (0-6) the backup was started, in the filename.
    - If a tarball already exists with the same name, it will be overwritten.
  - 3 = Both
- User: This parameter only becomes active if run as root. The script will default to the currently logged-in user. If this parameter is not supplied when run as root, the script will ask for the username as input.

Backups:

- You can find the backups in the ./backups/ folder, with rolling backups in ./backups/rolling/ and date backups in ./backups/backup/.
- Log files can also be found in the ./backups/logs/ directory.

Examples:¶

- ./scripts/backup.sh
  ./scripts/backup.sh 3

  Either of these will run both backups.

- ./scripts/backup.sh 2

  This will only produce a backup in the rolling folder. It will be called 'backup_XX.tar.gz', where XX is the current day of the week (as an int).

- sudo bash ./scripts/backup.sh 2 pi

  This will only produce a backup in the rolling folder and change all the permissions to the 'pi' user.
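To see why the rolling scheme never keeps more than seven files, the naming can be sketched like this. The sketch assumes the day number comes from `date +%w` (0 = Sunday to 6 = Saturday) and is written without padding. Both are assumptions for illustration, and `rolling_backup_name` is a hypothetical helper rather than part of backup.sh.

```shell
#!/usr/bin/env bash
# Sketch only: assumes the day-of-week number is produced by `date +%w`.
# An explicit day number can be passed as $1 to preview other days.
rolling_backup_name() {
    local day="${1:-$(date +%w)}"
    echo "backup_${day}.tar.gz"
}

# Example: the name that would be used today.
rolling_backup_name
```

Because the same name recurs every week, a rolling backup taken on a given weekday replaces the one from the previous week.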

Restore¶

There are 2 ways to run a restore:

- From the menu: Backup and Restore > Restore from backup
- Running the following command: bash ./scripts/restore.sh

Important: The restore script assumes that the IOTstack directory is fresh, as if it was just cloned. If it is not fresh, errors may occur, or your data may not correctly be restored even if no errors are apparent.

Note: It is suggested that you test that your backups can be restored after initially setting up, and any time you add or remove a service. Major updates to services can also break backups.

Arguments¶

    ./scripts/restore.sh {FILENAME=backup.tar.gz} {noask}

- Filename: The name of the backup file. The file must be present in the ./backups/ directory, or a subfolder in it. That means it should be moved from ./backups/backup to ./backups/, or that you need to specify the backup portion of the directory (see examples).
- NoAsk: If a second parameter is present, it acts as setting the no ask flag to true.

Pre and post script hooks¶

The backup script checks whether any pre and post backup hooks exist and, if so, executes them. Both of these files will be included in the backup, and have also been added to the .gitignore file, so that they will not be touched when IOTstack updates.

Prebackup script hook¶

The prebackup hook script is executed before any compression happens and before anything is written to the temporary backup manifest file (./.tmp/backup-list_{{NAME}}.txt). It can be used to prepare any services (such as databases that IOTstack isn't aware of) for backing up.

To use it, simply create a ./pre_backup.sh file in IOTstack's main directory. It will be executed next time a backup runs.
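As a rough idea of what such a hook might contain, the sketch below prepares an export directory for a service IOTstack doesn't know about. Everything in it is hypothetical: the service name, the export path, and the assumption that files placed under ./volumes end up in the tarball.

```shell
#!/usr/bin/env bash
# ./pre_backup.sh - illustrative sketch only.
# "my_custom_service" and its export directory are hypothetical placeholders.

prepare_custom_export() {
    local export_dir="${1:-./volumes/my_custom_service/export}"

    mkdir -p "$export_dir" || return 1

    # Record when the export was prepared. A real hook would replace this
    # line with whatever command dumps your service's data into export_dir.
    date -u +"%Y-%m-%dT%H:%M:%SZ" > "$export_dir/prepared_at.txt"
}

# In a real hook the function would be called here, for example:
# prepare_custom_export
```

Keeping the work inside a function with an overridable path makes the hook easy to try out against a scratch directory before it runs as part of a real backup.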

Postbackup script hook¶

The postbackup hook script is executed after the tarball file has been written to disk, and before the final backup log information is written to disk.

To use it, simply create a ./post_backup.sh file in IOTstack's main directory. It will be executed after the next time a backup runs.

Post restore script hook¶

The post restore hook script is executed after all files have been extracted and written to disk. It can be used to apply permissions that your custom services may require.

To use it, simply create a ./post_restore.sh file in IOTstack's main directory. It will be executed after a restore happens.

Third party integration¶

This section explains how to back up your files with 3rd party software.

Dropbox¶

Coming soon.

Google Drive¶

Coming soon.

rsync¶

Coming soon.

Duplicati¶

Coming soon.

SFTP¶

Coming soon.


Custom overrides¶

Each time you build the stack from the menu, the Docker Compose file docker-compose.yml is recreated, losing any custom changes you've made. There are different ways of dealing with this:

- Not using the menu after you've made changes. Do remember to back up your customized docker-compose.yml, in case you overwrite it by mistake or habit from the menu.
- Use the Docker Compose inbuilt override mechanism by creating a file named docker-compose.override.yml. This limits you to changing values and appending to lists already present in your docker-compose.yml, but it's handy as changes are immediately picked up by docker-compose commands. To see the resulting final config run docker-compose config.
- IOTstack menu, in the default master-branch, implements a mechanism to merge the yaml file compose-override.yml with the menu-generated stack into docker-compose.yml. This can be used to add even complete new services. See below for details.
- This is not an actual extension mechanism, but well worth mentioning: If you need a new service that doesn't communicate with the services in IOTstack, create it completely separately and independently in its own folder, e.g. ~/customStack/docker-compose.yml. This composition can then be independently managed from that folder: cd ~/customStack and use docker-compose commands as normal. The best override is the one you don't have to make.

Custom services and overriding default settings for IOTstack¶

You can specify modifications to the docker-compose.yml file, including your own networks and custom containers/services.

Create a file called compose-override.yml in the main directory, and place your modifications into it. These changes will be merged into the docker-compose.yml file next time you run the build script.

The compose-override.yml file has been added to the .gitignore file, so it shouldn't be touched when upgrading IOTstack. It has been added to the backup script, and so will be included when you back up and restore IOTstack. Always test your backups though! New versions of IOTstack may break previous builds.

How it works¶

1. After the build process has been completed, a temporary docker compose file is created in the tmp directory.
2. The script then checks if compose-override.yml exists:
   - If it exists, then continue to step 3.
   - If it does not exist, copy the temporary docker compose file to the main directory and rename it to docker-compose.yml.
3. Using the yaml_merge.py script, merge both the compose-override.yml and the temporary docker compose file together, using the temporary file as the default values and iterating through each level of the YAML structure to check whether compose-override.yml has a value set.
4. Output the final file to the main directory, calling it docker-compose.yml.

A word of caution¶

If you specify an override for a service, and then rebuild the docker-compose.yml file, but deselect the service from the list, then the YAML merging will still produce that override.

For example, let's say NodeRed was selected and has the following override specified in compose-override.yml:

    services:
      nodered:
        restart: always

When rebuilding from the menu, make sure the NodeRed service is always included. If it's no longer included, the only values showing in the final docker-compose.yml file for NodeRed will be the restart key and its value, and Docker Compose will fail with the following message when attempting to bring the services up with docker-compose up -d:

    Service nodered has neither an image nor a build context specified. At least one must be provided.

Either remove the override for NodeRed in compose-override.yml and rebuild the stack, or ensure that NodeRed is built with the stack to fix this.

Examples¶

Overriding default settings¶

Let's assume you put the following into the compose-override.yml file:

    services:
      mosquitto:
        ports:
          - 1996:1996
          - 9001:9001

Normally the mosquitto service would be built like this inside the docker-compose.yml file:

    version: '3.6'
    services:
      mosquitto:
        container_name: mosquitto
        image: eclipse-mosquitto
        restart: unless-stopped
        user: "1883"
        ports:
          - 1883:1883
          - 9001:9001
        volumes:
          - ./volumes/mosquitto/data:/mosquitto/data
          - ./volumes/mosquitto/log:/mosquitto/log
          - ./volumes/mosquitto/pwfile:/mosquitto/pwfile
          - ./services/mosquitto/mosquitto.conf:/mosquitto/config/mosquitto.conf
          - ./services/mosquitto/filter.acl:/mosquitto/config/filter.acl

Take special note of the ports list.

If you run the build script with the compose-override.yml file in place, and open up the final docker-compose.yml file, you will notice that the ports list has been replaced with the one you specified in the compose-override.yml file.

    version: '3.6'
    services:
      mosquitto:
        container_name: mosquitto
        image: eclipse-mosquitto
        restart: unless-stopped
        user: "1883"
        ports:
          - 1996:1996
          - 9001:9001
        volumes:
          - ./volumes/mosquitto/data:/mosquitto/data
          - ./volumes/mosquitto/log:/mosquitto/log
          - ./volumes/mosquitto/pwfile:/mosquitto/pwfile
          - ./services/mosquitto/mosquitto.conf:/mosquitto/config/mosquitto.conf
          - ./services/mosquitto/filter.acl:/mosquitto/config/filter.acl

Do note that it will replace the entire list. If you were to specify:

    services:
      mosquitto:
        ports:
          - 1996:1996

Then the final output will be:

    version: '3.6'
    services:
      mosquitto:
        container_name: mosquitto
        image: eclipse-mosquitto
        restart: unless-stopped
        user: "1883"
        ports:
          - 1996:1996
        volumes:
          - ./volumes/mosquitto/data:/mosquitto/data
          - ./volumes/mosquitto/log:/mosquitto/log
          - ./volumes/mosquitto/pwfile:/mosquitto/pwfile
          - ./services/mosquitto/mosquitto.conf:/mosquitto/config/mosquitto.conf
          - ./services/mosquitto/filter.acl:/mosquitto/config/filter.acl

Using env files instead of docker-compose variables¶

If you need or prefer to use *.env files for docker-compose environment variables in a separate file instead of using overrides, you can do so like this:

    services:
      grafana:
        env_file:
          - ./services/grafana/grafana.env
        environment:

This will remove the default environment variables set in the template, and tell docker-compose to use the variables specified in your file. It is not mandatory that the .env file be placed in the service's service directory, but it is strongly suggested. Keep in mind the PostBuild Script functionality to automatically copy your .env files into their directories on successful build if you need to.

Adding custom services¶

Custom services can be added in a similar way to overriding default settings for standard services. Let's add a Minecraft and rcon server to IOTstack.

Firstly, put the following into compose-override.yml:

    services:
      mosquitto:
        ports:
          - 1996:1996
          - 9001:9001
      minecraft:
        image: itzg/minecraft-server
        ports:
          - "25565:25565"
        volumes:
          - "./volumes/minecraft:/data"
        environment:
          EULA: "TRUE"
          TYPE: "PAPER"
          ENABLE_RCON: "true"
          RCON_PASSWORD: "PASSWORD"
          RCON_PORT: 28016
          VERSION: "1.15.2"
          REPLACE_ENV_VARIABLES: "TRUE"
          ENV_VARIABLE_PREFIX: "CFG_"
          CFG_DB_HOST: "http://localhost:3306"
          CFG_DB_NAME: "IOTstack Minecraft"
          CFG_DB_PASSWORD_FILE: "/run/secrets/db_password"
        restart: unless-stopped
      rcon:
        image: itzg/rcon
        ports:
          - "4326:4326"
          - "4327:4327"
        volumes:
          - "./volumes/rcon_data:/opt/rcon-web-admin/db"
    secrets:
      db_password:
        file: ./db_password

Then create the service directories that the new instances will use to store persistent data:

    mkdir -p ./volumes/minecraft

and

    mkdir -p ./volumes/rcon_data

Obviously you will need to give correct folder names depending on the volumes you specify for your custom services. If your new service doesn't require persistent storage, then you can skip this step.

Then simply run the ./menu.sh command, and rebuild the stack with whatever services you had before.

Using the Mosquitto example above, the final docker-compose.yml file will look like:

    version: '3.6'
    services:
      mosquitto:
        ports:
          - 1996:1996
          - 9001:9001
        container_name: mosquitto
        image: eclipse-mosquitto
        restart: unless-stopped
        user: '1883'
        volumes:
          - ./volumes/mosquitto/data:/mosquitto/data
          - ./volumes/mosquitto/log:/mosquitto/log
          - ./services/mosquitto/mosquitto.conf:/mosquitto/config/mosquitto.conf
          - ./services/mosquitto/filter.acl:/mosquitto/config/filter.acl
      minecraft:
        image: itzg/minecraft-server
        ports:
          - 25565:25565
        volumes:
          - ./volumes/minecraft:/data
        environment:
          EULA: 'TRUE'
          TYPE: PAPER
          ENABLE_RCON: 'true'
          RCON_PASSWORD: PASSWORD
          RCON_PORT: 28016
          VERSION: 1.15.2
          REPLACE_ENV_VARIABLES: 'TRUE'
          ENV_VARIABLE_PREFIX: CFG_
          CFG_DB_HOST: http://localhost:3306
          CFG_DB_NAME: IOTstack Minecraft
          CFG_DB_PASSWORD_FILE: /run/secrets/db_password
        restart: unless-stopped
      rcon:
        image: itzg/rcon
        ports:
          - 4326:4326
          - 4327:4327
        volumes:
          - ./volumes/rcon_data:/opt/rcon-web-admin/db
    secrets:
      db_password:
        file: ./db_password

Do note that the order of the YAML keys is not guaranteed.


Default ports¶

Here you can find a list of the default mode and ports used by each service found in the .templates directory.

This list can be generated by running the default_ports_md_generator.sh script.

| Service Name | Mode | Port(s) External:Internal |
|---|---|---|
| adguardhome | non-host | 53:53 8089:8089 3001:3000 |
| adminer | non-host | 9080:8080 |
| blynk_server | non-host | 8180:8080 8440:8440 9443:9443 |
| chronograf | non-host | 8888:8888 |
| dashmachine | non-host | 5000:5000 |
| deconz | non-host | 8090:80 443:443 5901:5900 |
| diyhue | non-host | 8070:80 1900:1900 1982:1982 2100:2100 |
| domoticz | non-host | 8083:8080 6144:6144 1443:1443 |
| dozzle | non-host | 8889:8080 |
| duckdns | host | |
| espruinohub | host | |
| gitea | non-host | 7920:3000 2222:22 |
| grafana | non-host | 3000:3000 |
| heimdall | non-host | 8880:80 8883:443 |
| home_assistant | host | |
| homebridge | host | |
| homer | non-host | 8881:8080 |
| influxdb | non-host | 8086:8086 |
| influxdb2 | non-host | 8087:8086 |
| kapacitor | non-host | 9092:9092 |
| mariadb | non-host | 3306:3306 |
| mosquitto | non-host | 1883:1883 |
| motioneye | non-host | 8765:8765 8081:8081 |
| n8n | non-host | 5678:5678 |
| nextcloud | non-host | 9321:80 |
| nodered | non-host | 1880:1880 |
| octoprint | non-host | 9980:80 |
| openhab | host | |
| pihole | non-host | 8089:80 53:53 67:67 |
| plex | host | |
| portainer-ce | non-host | 8000:8000 9000:9000 |
| portainer-agent | non-host | 9001:9001 |
| postgres | non-host | 5432:5432 |
| prometheus-cadvisor | non-host | 8082:8080 |
| prometheus-nodeexporter | non-host | |
| prometheus | non-host | 9090:9090 |
| python | non-host | |
| qbittorrent | non-host | 6881:6881 15080:15080 1080:1080 |
| ring-mqtt | non-host | 8554:8554 55123:55123 |
| rtl_433 | non-host | |
| scrypted | host | 10443:10443 |
| syncthing | host | |
| tasmoadmin | non-host | 8088:80 |
| telegraf | non-host | 8092:8092 8094:8094 8125:8125 |
| timescaledb | non-host | |
| transmission | non-host | 9091:9091 51413:51413 |
| webthingsio_gateway | host | |
| wireguard | non-host | 51820:51820 |
| zerotier | host | |
| zerotier | host | |
| zigbee2mqtt | non-host | 8080:8080 |
| zigbee2mqtt_assistant | non-host | 8880:80 |


Docker

Logging¶

When Docker starts a container, it executes its entrypoint command. Any output produced by this command is logged by Docker. By default Docker stores logs internally together with other data associated with the container image.

This has the effect that when recreating or updating a container, logs shown by docker-compose logs won't show anything associated with the previous instance. Use docker system prune to remove old instances and free up disk space. Keeping logs only for the latest instance is helpful when testing, but may not be desirable for production.

By default there is no limit on the log size. Surprisingly, when using an SD card this is exactly what you want. If a runaway container floods the log with output, writing will stop when the disk becomes full. Without a mechanism to prevent such excessive writing, the SD card would keep being written to until the flash hardware program-erase cycle limit is reached, after which it is permanently broken.

When using a quality SSD drive, potential flash wear isn't usually a concern. Then you can enable log rotation by either:

- Configuring Docker to do it for you automatically. Edit your docker-compose.yml and add a top-level x-logging and a logging: to each service definition. The Docker Compose reference documentation has a good example.
- Configuring Docker to log to the host system's journald.

  PS: if /etc/docker/daemon.json doesn't exist, just create it.
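For the journald option, the relevant Docker daemon setting is `log-driver`. The sketch below writes a minimal configuration to a path of your choosing so it can be reviewed before being copied into place. The helper name `write_journald_config` is hypothetical, and the sketch assumes no daemon.json exists yet, since an existing file should be edited by hand instead.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal daemon.json selecting the journald log driver.
# Refuses to overwrite an existing file so current settings are not lost.
write_journald_config() {
    local target="$1"

    if [ -e "$target" ]; then
        echo "refusing to overwrite existing $target" >&2
        return 1
    fi

    cat > "$target" <<'EOF'
{
  "log-driver": "journald"
}
EOF
}
```

A possible workflow is to run `write_journald_config ./daemon.json`, check the result, copy it to /etc/docker/daemon.json with sudo, and then restart the Docker daemon. A changed default log driver only applies to containers created afterwards, so existing containers need to be recreated to pick it up.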

Aliases¶

Bash aliases for stopping and starting the stack and other common operations are in the file .bash_aliases. To use them immediately and in future logins, run in a console:

    $ source ~/IOTstack/.bash_aliases
    $ echo "source ~/IOTstack/.bash_aliases" >> ~/.profile

These commands no longer need to be executed from the IOTstack directory and can be executed in any directory.

    IOTSTACK_HOME="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"
    alias iotstack_up="cd "$IOTSTACK_HOME" && docker-compose up -d --remove-orphans"
    alias iotstack_down="cd "$IOTSTACK_HOME" && docker-compose down --remove-orphans"
    alias iotstack_start="cd "$IOTSTACK_HOME" && docker-compose start"
    alias iotstack_stop="cd "$IOTSTACK_HOME" && docker-compose stop"
    alias iotstack_pull="cd "$IOTSTACK_HOME" && docker-compose pull"
    alias iotstack_build="cd "$IOTSTACK_HOME" && docker-compose build --pull --no-cache"
    alias iotstack_update_docker_images='f(){ iotstack_pull "$@" && iotstack_build "$@" && iotstack_up --build "$@"; }; f'

You can now type iotstack_up. The aliases also accept additional parameters, e.g. iotstack_stop portainer.

The iotstack_update_docker_images alias will update Docker images to the newest released images, then build and recreate containers. Do note that using this will result in broken containers from time to time, as upstream may release faulty Docker images. Have proper backups, or be prepared to manually pin a previous release build by editing docker-compose.yml.


Menu

The menu.sh script is used to create or modify the docker-compose.yml file. This file defines how all containers added to the stack are configured.

Miscellaneous¶

log2ram¶

One of the drawbacks of an SD card is that it has a limited lifespan. One way to reduce the load on the SD card is to move your log files to RAM. log2ram is a convenient tool to set this up. It can be installed from the miscellaneous menu.

This only affects logs written to /var/log, and won't have any effect on Docker logs or logs stored inside containers.

Dropbox-Uploader¶

This is a great utility to easily upload data from your Pi to the cloud. The MagPi has an excellent explanation of the process of setting up the Dropbox API. Dropbox-Uploader is used in the backup script.

Backup and Restore¶

See Backing up and restoring IOTstack.

Native Installs¶

RTL_433¶

RTL_433 can be installed from the "Native install sections".

This video demonstrates how to use RTL_433.

RPIEasy¶

The installer will install any dependencies. If ~/rpieasy exists it will update the project to its latest version; if not, it will clone the project.

RPIEasy can be run by sudo ~/rpieasy/RPIEasy.py

To have RPIEasy start on boot, look in the web UI under hardware for "RPIEasy autostart at boot".

RPIEasy will select its port from the first available one in the list (80, 8080, 8008). If you run Hass.io then there will be a conflict, so check the next available port.

Old-menu branch details¶

The build script creates the ./services directory and populates it from the template file in .templates. The script then appends the text within each service.yml file to docker-compose.yml. When the stack is rebuilt the menu does not overwrite the service folder if it already exists. Make sure to sync any alterations you have made to the docker-compose.yml file with the respective service.yml so that on your next build your changes pull through.

The .gitignore file is set up such that if you do a git pull origin master it does not overwrite the files you have already created. Because the build script does not overwrite your service directory, any changes in the .templates directory will have no effect on the services you have already made. You will need to move your service folder out to get the latest version of the template.

Networking¶

The docker-compose instruction creates an internal network that the containers use to communicate with each other. Ports are exposed on the Pi's IP address for when you want to connect from outside. Docker also provides an internal "DNS" in which each container's name resolves to that container, so when one container talks to another, it addresses it by name. All the container names are lowercase, like nodered, influxdb...

An easy way to find your IP address is to type ip address in the terminal and look next to eth0 or wlan0. It is highly recommended that you set a static IP for your Pi, or at least reserve an IP on your router, so that you always know its address.

Check the docker-compose.yml to see which ports have been used.

+

Examples¶

- You want to connect your nodered to your mqtt server. In nodered drop an mqtt node and, when you need to specify the address, type

  mosquitto

- You want to connect to your influxdb from grafana. You are in the Docker network, so you need to use the name of the container. The address you specify in grafana is

  http://influxdb:8086

- You want to connect to the web interface of grafana from your laptop. Now you are outside the container environment, so you type the Pi's IP address and the port, eg 192.168.n.m:3000

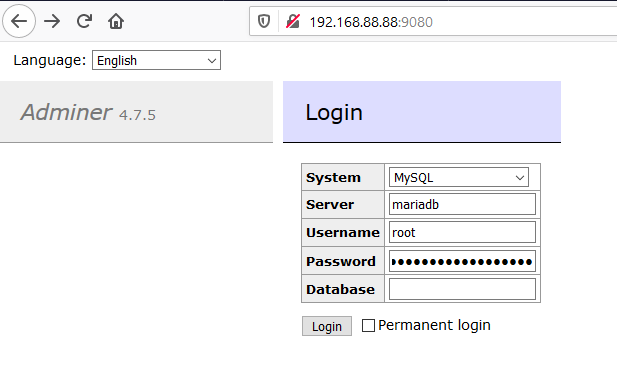

Ports¶

Many containers try to use popular ports such as 80, 443 and 8080. For example, openHAB and Adminer both want to use port 8080 for their web interface. Adminer's port has been moved to 9080 to accommodate this. Please check the description of the container in the README to see if there are any changes, as the port may not be the one you are used to.

Port mapping is done in the docker-compose.yml file. Each service should have a section that reads like this:

ports:
  - HOST_PORT:CONTAINER_PORT

For example, Adminer's mapping of host port 9080 to container port 8080 looks like this:

ports:
  - 9080:8080
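
As a rough illustration of how to read such a mapping, the shell sketch below splits a HOST_PORT:CONTAINER_PORT string into its two halves. The function name describe_port_mapping is hypothetical and is not part of IOTstack, and the sketch only handles the simple two-field form shown above.

```shell
# Hypothetical helper (not part of IOTstack): explain a simple
# "HOST_PORT:CONTAINER_PORT" mapping taken from docker-compose.yml.
describe_port_mapping() {
    local mapping="$1"
    local host_port="${mapping%%:*}"        # text before the first colon
    local container_port="${mapping##*:}"   # text after the last colon
    echo "host port ${host_port} -> container port ${container_port}"
}

describe_port_mapping "9080:8080"
```

The left-hand number is the one you type into a browser on your laptop; the right-hand number is the one the service listens on inside its container.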

Troubleshooting

+ +Resources¶

- Search GitHub issues.

    - Closed issues or pull-requests may also have valuable hints.

- Ask questions on IOTStack Discord. Or report how you were able to fix a problem.

- There are over 40 gists about IOTstack. These address a diverse range of topics from small convenience scripts to complete guides. These are individual contributions that aren't reviewed.

  You can add your own keywords into the search: https://gist.github.com/search?q=iotstack

FAQ¶

+Breaking update

A change made on 2022-01-18 requires manual steps; without them you may get an error like:

+ERROR: Service "influxdb" uses an undefined network "iotstack_nw"

Device Errors¶

+If you are trying to run IOTstack on non-Raspberry Pi hardware, you will probably get the following error from docker-compose when you try to bring up your stack for the first time:

Error response from daemon: error gathering device information while adding custom device "/dev/ttyAMA0": no such file or directory

+++You will get a similar message about any device which is not known to your hardware.

+

The /dev/ttyAMA0 device is the Raspberry Pi's built-in serial port so it is guaranteed to exist on any "real" Raspberry Pi. As well as being referenced by containers that can actually use the serial port, ttyAMA0 is often employed as a placeholder.

Examples:

- Node-RED flows can use the node-red-node-serialport node to access the serial port. This is an example of "actual use";

- The Zigbee2MQTT container employs ttyAMA0 as a placeholder. This allows the container to start. Once you have worked out how your Zigbee adapter appears on your system, you will substitute your adapter's actual device path. For example:

    - "/dev/serial/by-id/usb-Texas_Instruments_TI_CC2531_USB_CDC___0X00125B0028EEEEE0-if00:/dev/ttyACM0"

The simplest approach to solving "error gathering device information" problems is just to comment-out every device mapping that produces an error and, thereafter, treat the comments as documentation about what the container is expecting at run-time. For example, this is the devices list for Node-RED:

+ devices:

+ - "/dev/ttyAMA0:/dev/ttyAMA0"

+ - "/dev/vcio:/dev/vcio"

+ - "/dev/gpiomem:/dev/gpiomem"

+Those are, in turn, the Raspberry Pi's:

- serial port
- VideoCore multimedia processor
- mechanism for accessing GPIO pin headers

If none of those is available on your chosen platform (the usual situation on non-Pi hardware), commenting-out the entire block is appropriate:

+# devices:

+# - "/dev/ttyAMA0:/dev/ttyAMA0"

+# - "/dev/vcio:/dev/vcio"

+# - "/dev/gpiomem:/dev/gpiomem"

+You interpret each line in a device map like this:

+ - "«external»:«internal»"

+The «external» device is what the platform (operating system plus hardware) sees. The «internal» device is what the container sees. Although it is reasonably common for the two sides to be the same, this is not a requirement. It is usual to replace the «external» device with the actual device while leaving the «internal» device unchanged.

+Here is an example. On macOS, a CP2102 USB-to-Serial adapter shows up as:

+/dev/cu.SLAB_USBtoUART

+Assume you are running the Node-RED container in macOS Docker Desktop, and that you want a flow to communicate with the CP2102. You would change the service definition like this:

+ devices:

+ - "/dev/cu.SLAB_USBtoUART:/dev/ttyAMA0"

+# - "/dev/vcio:/dev/vcio"

+# - "/dev/gpiomem:/dev/gpiomem"

+In other words, the «external» (real world) device cu.SLAB_USBtoUART is mapped to the «internal» (container) device ttyAMA0. The flow running in the container is expecting to communicate with ttyAMA0 and is none-the-wiser.
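
To find out in advance which mappings are likely to trigger "error gathering device information", something like the following shell sketch can help. It is only an illustration: check_device_mapping is a hypothetical helper, not an IOTstack tool, and it assumes the plain two-field «external»:«internal» form (no trailing permissions field).

```shell
# Hypothetical helper (not part of IOTstack): report whether the «external»
# side of a device mapping exists on this host.
check_device_mapping() {
    local mapping="$1"
    local external="${mapping%%:*}"   # host-side path, before the first colon
    local internal="${mapping#*:}"    # container-side path, after the first colon
    if [ -e "$external" ]; then
        echo "ok: $external -> $internal"
    else
        echo "missing: $external (comment out or replace this mapping)"
    fi
}

# The three mappings from the Node-RED devices list shown earlier.
for mapping in "/dev/ttyAMA0:/dev/ttyAMA0" "/dev/vcio:/dev/vcio" "/dev/gpiomem:/dev/gpiomem"; do
    check_device_mapping "$mapping"
done
```

Any mapping reported as missing is a candidate for commenting-out, or for replacing the «external» side with a device that does exist on your platform.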

Needing to use sudo to run docker commands¶

+You should never (repeat never) use sudo to run docker or docker compose commands. Forcing docker to do something with sudo almost always creates more problems than it solves. Please see What is sudo? to understand how sudo actually works.

If docker or docker-compose commands seem to need elevated privileges, the most likely explanation is incorrect group membership. Please read the next section about errors involving docker.sock. The solution (two usermod commands) is the same.

If, however, the current user is a member of the docker group but you still get error responses that seem to imply a need for sudo, it implies that something fundamental is broken. Rather than resorting to sudo, you are better advised to rebuild your system.

Errors involving docker.sock¶

+If you encounter permission errors that mention /var/run/docker.sock, the most likely explanation is the current user (usually "pi") not being a member of the "docker" group.

You can check membership with the groups command:

$ groups

+pi adm dialout cdrom sudo audio video plugdev games users input render netdev bluetooth lpadmin docker gpio i2c spi

+In that list, you should expect to see both bluetooth and docker. If you do not, you can fix the problem like this:

$ sudo usermod -G docker -a $USER

+$ sudo usermod -G bluetooth -a $USER

+$ exit

+The exit statement is required. You must logout and login again for the two usermod commands to take effect. An alternative is to reboot.
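
If you prefer a yes/no answer to scanning the list by eye, a check along these lines may be useful. It is a minimal sketch: has_group is a hypothetical helper name, and id -nG is used here only because it prints the same group names as the groups command.

```shell
# Hypothetical helper (not part of IOTstack): succeed if the named group
# appears in a space-separated list such as the output of "groups".
has_group() {
    local wanted="$1" list="$2" g
    for g in $list; do
        [ "$g" = "$wanted" ] && return 0
    done
    return 1
}

current_groups="$(id -nG)"   # same group names that the groups command prints
for group in docker bluetooth; do
    if has_group "$group" "$current_groups"; then
        echo "$group: member"
    else
        echo "$group: NOT a member"
    fi
done
```

Remember that a change made with usermod only shows up here after you have logged out and logged in again.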

System freezes or SSD problems¶

+You should read this section if you experience any of the following problems:

- Apparent system hangs, particularly if Docker containers were running at the time the system was shutdown or rebooted;
- Much slower than expected performance when reading/writing your SSD; or
- Suspected data-corruption on your SSD.

Try a USB2 port¶

+Start by shutting down your Pi and moving your SSD to one of the USB2 ports. The slower speed will often alleviate the problem.

Tips:

- If you don't have sufficient control to issue a shutdown and/or your Pi won't shut down cleanly:

    - remove power
    - move the SSD to a USB2 port
    - apply power again.

- If you run "headless" and find that the Pi responds to pings but you can't connect via SSH:

    - remove power
    - connect the SSD to a support platform (Linux, macOS, Windows)
    - create a file named "ssh" at the top level of the boot partition
    - eject the SSD from your support platform
    - connect the SSD to a USB2 port on your Pi
    - apply power again.

Check the dhcpcd patch¶

+Next, verify that the dhcpcd patch is installed. There seems to be a timing component to the deadlock which is why it can be alleviated, to some extent, by switching the SSD to a USB2 port.

+If the dhcpcd patch was not installed but you have just installed it, try returning the SSD to a USB3 port.

Try a quirks string¶

+If problems persist even when the dhcpcd patch is in place, you may have an SSD which isn't up to the Raspberry Pi's expectations. Try the following:

1. If your IOTstack is running, take it down.

2. If your SSD is attached to a USB3 port, shut down your Pi, move the SSD to a USB2 port, and apply power.

3. Run the following command:

       $ dmesg | grep "\] usb [[:digit:]]-"

   In the output, identify your SSD. Example:

       [ 1.814248] usb 2-1: new SuperSpeed Gen 1 USB device number 2 using xhci_hcd
       [ 1.847688] usb 2-1: New USB device found, idVendor=f0a1, idProduct=f1b2, bcdDevice= 1.00
       [ 1.847708] usb 2-1: New USB device strings: Mfr=99, Product=88, SerialNumber=77
       [ 1.847723] usb 2-1: Product: Blazing Fast SSD
       [ 1.847736] usb 2-1: Manufacturer: Suspect Drives

   In the above output, the second line contains the Vendor and Product codes that you need:

    - idVendor=f0a1
    - idProduct=f1b2

4. Substitute the values of «idVendor» and «idProduct» into the following command template:

       sed -i.bak '1s/^/usb-storage.quirks=«idVendor»:«idProduct»:u /' "$CMDLINE"

   This is known as a "quirks string". Given the dmesg output above, the string would be:

       sed -i.bak '1s/^/usb-storage.quirks=f0a1:f1b2:u /' "$CMDLINE"

   Make sure that you keep the space between the :u and /'. You risk breaking your system if that space is not there.

5. Run these commands. The second line is the one you prepared in step 4, prefixed with sudo:

       $ CMDLINE="/boot/firmware/cmdline.txt" && [ -e "$CMDLINE" ] || CMDLINE="/boot/cmdline.txt"
       $ sudo sed -i.bak '1s/^/usb-storage.quirks=f0a1:f1b2:u /' "$CMDLINE"

   The command:

    - makes a backup copy of cmdline.txt as cmdline.txt.bak
    - inserts the quirks string at the start of cmdline.txt.

   You can confirm the result as follows:

    - display the original (baseline reference):

          $ cat "$CMDLINE.bak"
          console=serial0,115200 console=tty1 root=PARTUUID=06c69364-02 rootfstype=ext4 fsck.repair=yes rootwait quiet splash plymouth.ignore-serial-consoles

    - display the modified version:

          $ cat "$CMDLINE"
          usb-storage.quirks=f0a1:f1b2:u console=serial0,115200 console=tty1 root=PARTUUID=06c69364-02 rootfstype=ext4 fsck.repair=yes rootwait quiet splash plymouth.ignore-serial-consoles

6. Shut down your Pi.

7. Connect your SSD to a USB3 port and apply power.

There is more information about this problem on the Raspberry Pi forum.
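
Because a mistake in cmdline.txt can stop the Pi from booting, it may be worth rehearsing the substitution on a scratch file first. The sketch below is one way to do that, under stated assumptions: quirks_from_dmesg_line is a hypothetical helper (not an IOTstack tool), the sample line is the one from the dmesg example above, and the scratch file stands in for the real cmdline.txt.

```shell
# Hypothetical helper (not part of IOTstack): build a quirks string from a
# dmesg line containing "idVendor=xxxx, idProduct=yyyy".
quirks_from_dmesg_line() {
    local line="$1" vendor product
    vendor="$(printf '%s\n' "$line" | sed -n 's/.*idVendor=\([0-9a-fA-F]*\).*/\1/p')"
    product="$(printf '%s\n' "$line" | sed -n 's/.*idProduct=\([0-9a-fA-F]*\).*/\1/p')"
    [ -n "$vendor" ] && [ -n "$product" ] || return 1   # give up if either code is missing
    echo "usb-storage.quirks=${vendor}:${product}:u"
}

sample='[ 1.847688] usb 2-1: New USB device found, idVendor=f0a1, idProduct=f1b2, bcdDevice= 1.00'
quirks="$(quirks_from_dmesg_line "$sample")"
echo "$quirks"

# Rehearse the edit on a scratch copy rather than the real cmdline.txt.
scratch="$(mktemp)"
echo "console=serial0,115200 console=tty1 rootwait" > "$scratch"
sed -i.bak "1s/^/${quirks} /" "$scratch"   # note the space before the closing slash
result="$(cat "$scratch")"
echo "$result"
rm -f "$scratch" "$scratch.bak"
```

If the rehearsal output starts with the quirks string followed by a single space and then the original content, the same sed expression should be safe to apply to the real file with sudo.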

+Getting a clean slate¶

+If you create a mess and can't see how to recover, try proceeding like this:

+$ cd ~/IOTstack

+$ docker-compose down

+$ cd

+$ mv IOTstack IOTstack.old

+$ git clone https://github.com/SensorsIot/IOTstack.git IOTstack

+In words:

1. Be in the right directory.

2. Take the stack down.

3. The cd command without any arguments changes your working directory to your home directory (variously known as ~ or $HOME or /home/pi).

4. Move your existing IOTstack directory out of the way. If you get a permissions problem:

    - Re-try the command with sudo; and
    - Read a word about the sudo command. Needing sudo in this situation is an example of over-using sudo.

5. Check out a clean copy of IOTstack.

Now, you have a clean slate and can start afresh by running the menu:

+$ cd ~/IOTstack

+$ ./menu.sh

+The IOTstack.old directory remains available as a reference for as long as

+you need it. Once you have no further use for it, you can clean it up via:

$ cd

+$ sudo rm -rf ./IOTstack.old # (1)

1. The sudo command is needed in this situation because some files and folders (eg the "volumes" directory and most of its contents) are owned by root.

What is Docker?¶

+In simple terms, Docker is a software platform that simplifies the process of building, running, +managing and distributing applications. It does this by virtualizing the operating system of the +computer on which it is installed and running.

+The Problem¶

+Let’s say you have three different Python-based applications that you plan to host on a single server +(which could either be a physical or a virtual machine).

Each of these applications makes use of a different version of Python, and the associated libraries and dependencies also differ from one application to another.

Since it is difficult to keep different versions of Python and their dependencies side by side on the same machine without conflicts, this prevents us from hosting all three applications on the same computer.

+The Solution¶

+Let’s look at how we could solve this problem without making use of Docker. In such a scenario, we +could solve this problem either by having three physical machines, or a single physical machine, which +is powerful enough to host and run three virtual machines on it.

+Both the options would allow us to install different versions of Python on each of these machines, +along with their associated dependencies.

The machine on which Docker is installed and running is usually referred to as a Docker Host, or simply the Host. Whenever you deploy an application on the host, Docker creates a logical entity on it to host that application. In Docker terminology, this logical entity is called a Container or, more precisely, a Docker Container.

The kernel of the host's operating system is shared across all the containers that are running on it.

Each container is nevertheless isolated from the others present on the same host. This lets multiple containers with different application requirements and dependencies run on the same host, as long as they have the same operating system requirements.

+Docker Terminology¶

+Docker Images and Docker Containers are the two essential things that you will come across daily while +working with Docker.

+In simple terms, a Docker Image is a template that contains the application, and all the dependencies +required to run that application on Docker.

+On the other hand, as stated earlier, a Docker Container is a logical entity. In more precise terms, +it is a running instance of the Docker Image.

+What is Docker-Compose?¶

Docker Compose provides a way to orchestrate multiple containers that work together. It is a simple yet powerful tool that is used to run multiple containers as a single service. For example, suppose you have an application which requires MQTT as a communication service between IoT devices and an OpenHAB instance as a smart-home application service. In this case, with docker-compose you can create a single file (docker-compose.yml) which brings up both containers as a single service, without starting each one separately. It wires up the networks (literally), mounts all volumes and exposes the ports.

+The IOTstack with the templates and menu is a generator for that docker-compose service descriptor.

+How Docker Compose Works?¶

- uses YAML files to configure application services (docker-compose.yaml)
- can start all the services with a single command ( docker-compose up )
- can stop all the services with a single command ( docker-compose down )
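
To make this concrete, the shell sketch below writes a deliberately tiny compose file into a scratch directory and lists the services it defines. The two services and their image names are illustrative choices rather than IOTstack's own templates, and the docker-compose commands are left as comments so that the sketch runs even where Docker is not installed.

```shell
# Write a small illustrative compose file into a scratch directory.
# Service and image names are examples only, not IOTstack's templates.
workdir="$(mktemp -d)"
cat > "$workdir/docker-compose.yml" <<'EOF'
services:
  mosquitto:
    image: eclipse-mosquitto
    ports:
      - "1883:1883"
  nodered:
    image: nodered/node-red
    ports:
      - "1880:1880"
EOF

# List the service names, which sit at the two-space indent level.
services="$(sed -n 's/^  \([a-z0-9_-]*\):$/\1/p' "$workdir/docker-compose.yml" | tr '\n' ' ')"
echo "services: $services"

# With Docker installed, the whole stack would be started and stopped with:
#   cd "$workdir" && docker-compose up -d
#   cd "$workdir" && docker-compose down
rm -rf "$workdir"
```

One file describes both containers, and one command acts on all of them, which is the point of the list above.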

+How are the containers connected¶

The containers are automagically connected when we run the stack with docker-compose up. By default, the containers use the same logical network, where the instances can reach each other by their logical instance names. This means that if there are instances called mosquitto and openhab, and the openHAB instance needs to access MQTT, the domain name mosquitto resolves to the running instance of mosquitto.

How the containers are connected to the host machine¶

+Volumes¶

Containers are enclosed processes whose state is lost when the container is removed and recreated. To persist state, volumes (images or directories) can be used to share data with the host. This means that if you need to persist a database, configuration or any other state, you have to bind a volume so that the service running inside the container writes its files to that bound volume.

In order to understand what a Docker volume is, we first need to be clear about how the filesystem normally works in Docker. Docker images are stored as a series of read-only layers. When we start a container, Docker takes the read-only image and adds a read-write layer on top. If the running container modifies an existing file, the file is copied out of the underlying read-only layer and into the top-most read-write layer where the changes are applied. The version in the read-write layer hides the underlying file, but does not destroy it -- it still exists in the underlying layer. When a Docker container is deleted, relaunching the image will start a fresh container without any of the changes made in the previously running container. Those changes are lost, which is why configurations and databases are not persisted unless they are stored in a volume.

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers. While bind mounts are dependent on the directory structure of the host machine, volumes are completely managed by Docker. The IOTstack project generally uses the volumes directory to bind these container volumes.

+Ports¶

When containers are running, we often want to expose some of their services to the outside world. For example, the OpenHAB web frontend has to be accessible to users. There are several ways to achieve that. One is mapping the container's port to the host machine, which is called port binding. In that case the service has a dedicated port through which it can be accessed. One drawback is that a host port can be used by only one service.

Another way is a reverse proxy. The term reverse proxy (or Load Balancer in some terminology) is normally applied to a service that sits in front of one or more servers (in our case containers), accepting requests from clients for resources located on the server(s). From the client's point of view, the reverse proxy appears to be the web server and so is totally transparent to the remote user. This means several services can share the same port, and the proxy routes each request by its URL (virtual domain or context path). For example, with grafana and openHAB instances, a request for openhab.domain.tld is routed to port 8181 on the openHAB instance, while grafana.domain.tld is routed to port 3000 on the grafana instance. In that case the proxy has to be mapped to port 80 and/or 443 on the host machine, and the proxy server accesses the containers via the Docker virtual network.

+Source materials used:

- https://takacsmark.com/docker-compose-tutorial-beginners-by-example-basics/
- https://www.freecodecamp.org/news/docker-simplified-96639a35ff36/
- https://www.cloudflare.com/learning/cdn/glossary/reverse-proxy/
- https://blog.container-solutions.com/understanding-volumes-docker

What is sudo?¶

+Many first-time users of IOTstack get into difficulty by misusing the sudo command. The problem is best understood by example. In the following, you would expect ~ (tilde) to expand to /home/pi. It does:

$ echo ~/IOTstack

+/home/pi/IOTstack

+The command below sends the same echo command to bash for execution. This is what happens when you type the name of a shell script. You get a new instance of bash to run the script:

$ bash -c 'echo ~/IOTstack'

+/home/pi/IOTstack

+Same answer. Again, this is what you expect. But now try it with sudo on the front:

$ sudo bash -c 'echo ~/IOTstack'

+/root/IOTstack

+Different answer. It is different because sudo means "become root, and then run the command". The process of becoming root changes the home directory, and that changes the definition of ~.

Any script designed for working with IOTstack assumes ~ (or the equivalent $HOME variable) expands to /home/pi. That assumption is invalidated if the script is run by sudo.
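
You can observe the effect without using sudo at all. Tilde expansion in bash follows the HOME variable, so overriding HOME for a single command imitates what a script sees when it is run as root. This is only a simulation of the behaviour described above, using /root as the assumed home directory of the root user.

```shell
# Tilde expansion follows $HOME. Overriding HOME for one command imitates
# what happens to a script's view of "~" when it is run as root.
as_user="$(bash -c 'echo ~/IOTstack')"
as_root="$(HOME=/root bash -c 'echo ~/IOTstack')"

echo "normal user sees: $as_user"
echo "root would see:   $as_root"
```

A script that builds paths from ~ or $HOME will therefore look in the wrong place when the whole script is launched with sudo.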

Of necessity, any script designed for working with IOTstack will have to invoke sudo inside the script when it is required. You do not need to second-guess the script's designer.

Please try to minimise your use of sudo when you are working with IOTstack. Here are some rules of thumb:

1. Is what you are about to run a script? If yes, check whether the script already contains sudo commands. Using menu.sh as the example:

       $ grep -c 'sudo' ~/IOTstack/menu.sh
       28

   There are numerous uses of sudo within menu.sh. That means the designer thought about when sudo was needed.

2. Did the command you just executed work without sudo? Note the emphasis on the past tense. If yes, then your work is done. If no, and the error suggests elevated privileges are necessary, then re-execute the last command like this:

       $ sudo !!

It takes time, patience and practice to learn when sudo is actually needed. Over-using sudo out of habit, or because you were following a bad example you found on the web, is a very good way to create so many problems for yourself that you will need to reinstall your IOTstack. Please err on the side of caution!

Configuration¶

+To edit sudo functionality and permissions use: sudo visudo

For instance, to allow sudo usage without prompting for a password: +

# Allow members of group sudo to execute any command without password prompt

+%sudo ALL=(ALL:ALL) NOPASSWD:ALL

+For more information: man sudoers

Getting Started¶

+About IOTstack¶

+IOTstack is not a system. It is a set of conventions for assembling arbitrary collections of containers into something that has a reasonable chance of working out-of-the-box. The three most important conventions are:

1. If a container needs information to persist across restarts (and most containers do) then the container's persistent store will be found at:

       ~/IOTstack/volumes/«container»

   Most service definition examples found on the web have a scattergun approach to this problem. IOTstack imposes order on this chaos.

2. To the maximum extent possible, network port conflicts have been sorted out in advance.

   Sometimes this is not possible. For example, Pi-hole and AdGuardHome both offer Domain Name System services. The DNS relies on port 53. You can't have two containers claiming port 53 so the only way to avoid this is to pick either Pi-hole or AdGuardHome.

3. Where multiple containers are needed to implement a single user-facing service, the IOTstack service definition will include everything needed. A good example is NextCloud which relies on MariaDB. IOTstack implements MariaDB as a private instance which is only available to NextCloud. This strategy ensures that you are able to run your own separate MariaDB container without any risk of interference with your NextCloud service.

Requirements¶

+IOTstack makes the following assumptions:

1. Your hardware is capable of running Debian or one of its derivatives. Examples that are known to work include:

    - a Raspberry Pi (typically a 3B+ or 4B)

      The Raspberry Pi Zero 2 W has been tested with IOTstack. It works but the 512MB RAM means you should not try to run too many containers concurrently.

    - Orange Pi Win/Plus (see also issue 375)
    - an Intel-based Mac running macOS plus Parallels with a Debian guest.
    - an Intel-based platform running Proxmox with a Debian guest.

2. Your host or guest system is running a reasonably-recent version of Debian or an operating system which is downstream of Debian in the Linux family tree, such as Raspberry Pi OS (aka "Raspbian") or Ubuntu.

   IOTstack is known to work in 32-bit mode but not all containers have images on DockerHub that support 32-bit mode. If you are setting up a new system from scratch, you should choose a 64-bit option.

   IOTstack was known to work with Buster but it has not been tested recently. Bullseye is known to work but if you are setting up a new system from scratch, you should choose Bookworm.

   Please don't waste your own time trying Linux distributions from outside the Debian family tree. They are unlikely to work.

3. You are logged-in as the default user (ie not root). In most cases, this is the user with ID=1000 and is what you get by default on either a Raspberry Pi OS or Debian installation.

   This assumption is not really an IOTstack requirement as such. However, many containers assume UID=1000 exists and you are less likely to encounter issues if this assumption holds.

Please don't read these assumptions as saying that IOTstack will not run on other hardware, other operating systems, or as a different user. It is just that IOTstack gets most of its testing under these conditions. The further you get from these implicit assumptions, the more your mileage may vary.

+New installation¶

+You have two choices:

- If you have an existing system and you want to add IOTstack to it, then the add-on method is your best choice.
- If you are setting up a new system from scratch, then PiBuilder is probably your best choice. You can, however, also use the add-on method in a green-fields installation.

add-on method¶

+This method assumes an existing system rather than a green-fields installation. The script uses the principle of least interference. It only installs the bare minimum of prerequisites and, with the exception of adding some boot time options to your Raspberry Pi (but not any other kind of hardware), makes no attempt to tailor your system.

+To use this method:

1. Install curl:

       $ sudo apt install -y curl

2. Run the following command:

       $ curl -fsSL https://raw.githubusercontent.com/SensorsIot/IOTstack/master/install.sh | bash

The install.sh script is designed to be run multiple times. If the script discovers a problem, it will explain how to fix that problem and, assuming you follow the instructions, you can safely re-run the script. You can repeat this process until the script completes normally.

PiBuilder method¶

+Compared with the add-on method, PiBuilder is far more comprehensive. PiBuilder:

- Does everything the add-on method does.
- Adds support packages and debugging tools that have proven useful in the IOTstack context.
- Installs all required system patches (see next section).
- In addition to cloning IOTstack (this repository), PiBuilder also clones:

    - IOTstackBackup which is an alternative to the backup script supplied with IOTstack but does not require your stack to be taken down to perform backups; and
    - IOTstackAliases which provides shortcuts for common IOTstack operations.

- Performs extra tailoring intended to deliver a rock-solid platform for IOTstack.

PiBuilder does, however, assume a green fields system rather than an existing installation. Although the PiBuilder scripts will probably work on an existing system, that scenario has never been tested so it's entirely at your own risk.

+PiBuilder actually has two specific use-cases:

- A first-time build of a system to run IOTstack; and
- The ability to create your own customised version of PiBuilder so that you can quickly rebuild your Raspberry Pi or Proxmox guest after a disaster. Combined with IOTstackBackup, you can go from bare metal to a running system with data restored in about half an hour.

Required system patches¶

+You can skip this section if you used PiBuilder to construct your system. That's because PiBuilder installs all necessary patches automatically.

+If you used the add-on method, you should consider applying these patches by hand. Unless you know that a patch is not required, assume that it is needed.

+patch 1 – restrict DHCP¶

+Run the following commands:

+$ sudo bash -c '[ $(egrep -c "^allowinterfaces eth\*,wlan\*" /etc/dhcpcd.conf) -eq 0 ] && echo "allowinterfaces eth*,wlan*" >> /etc/dhcpcd.conf'

+This patch prevents the dhcpcd daemon from trying to allocate IP addresses to Docker's docker0 and veth interfaces. Docker assigns the IP addresses itself and dhcpcd trying to get in on the act can lead to a deadlock condition which can freeze your Pi.

See Issue 219 and Issue 253 for more information.
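
The one-line command above only appends the allowinterfaces line when it is not already present, which is why it is safe to run more than once. The sketch below shows the same append-once idea in a more readable form, rehearsed on a scratch file instead of /etc/dhcpcd.conf. The helper name append_line_once is hypothetical, and the sketch uses a fixed-string whole-line match rather than the egrep count used in the original command.

```shell
# Hypothetical helper (not part of IOTstack): append a line to a file only if
# an identical line is not already present.
append_line_once() {
    local line="$1" file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Rehearse on a scratch file instead of /etc/dhcpcd.conf.
scratch="$(mktemp)"
append_line_once "allowinterfaces eth*,wlan*" "$scratch"
append_line_once "allowinterfaces eth*,wlan*" "$scratch"   # second call is a no-op
count="$(grep -c "^allowinterfaces" "$scratch")"
echo "matching lines: $count"
rm -f "$scratch"
```

However many times the helper is called, the file ends up with exactly one copy of the line.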

+patch 2 – update libseccomp2¶

+This patch is ONLY for Raspbian Buster. Do NOT install this patch if you are running Raspbian Bullseye or Bookworm.

1. check your OS release

   Run the following command:

       $ grep "PRETTY_NAME" /etc/os-release
       PRETTY_NAME="Raspbian GNU/Linux 10 (buster)"

   If you see the word "buster", proceed to step 2. Otherwise, skip this patch.

2. if you are indeed running "buster"

   Without this patch on Buster, Docker images will fail if:

    - the image is based on Alpine and the image's maintainer updates to Alpine 3.13; and/or
    - an image's maintainer updates to a library that depends on 64-bit values for Unix epoch time (the so-called Y2038 problem).

   To install the patch:

       $ sudo apt-key adv --keyserver hkps://keyserver.ubuntu.com:443 --recv-keys 04EE7237B7D453EC 648ACFD622F3D138
       $ echo "deb http://httpredir.debian.org/debian buster-backports main contrib non-free" | sudo tee -a "/etc/apt/sources.list.d/debian-backports.list"
       $ sudo apt update
       $ sudo apt install libseccomp2 -t buster-backports

patch 3 - kernel control groups¶

Kernel control groups need to be enabled in order to monitor container-specific usage. This makes commands like docker stats work fully. It is also needed for full monitoring of Docker resource usage by the telegraf container.

Enable by running (takes effect after reboot):

+$ CMDLINE="/boot/firmware/cmdline.txt" && [ -e "$CMDLINE" ] || CMDLINE="/boot/cmdline.txt"

+$ echo $(cat "$CMDLINE") cgroup_memory=1 cgroup_enable=memory | sudo tee "$CMDLINE"

+$ sudo reboot
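
Note that the echo command above appends the two parameters every time it is run. If you want to rehearse the change, or guard against adding the parameters twice, a sketch along the following lines may help. It is an illustration only: add_kernel_param is a hypothetical helper (not an IOTstack tool), and it operates on a scratch file because the real cmdline.txt is owned by root.

```shell
# Hypothetical helper (not part of IOTstack): add a kernel parameter to a
# single-line cmdline file unless that parameter is already there.
add_kernel_param() {
    local param="$1" file="$2" current
    current="$(head -n 1 "$file")"             # read the line before rewriting the file
    case " $current " in
        *" $param "*) ;;                        # already present: leave the file alone
        *) echo "$current $param" > "$file" ;;  # rewrite with the parameter appended
    esac
}

# Rehearse on a scratch file standing in for cmdline.txt.
scratch="$(mktemp)"
echo "console=tty1 rootwait" > "$scratch"
for param in cgroup_memory=1 cgroup_enable=memory; do
    add_kernel_param "$param" "$scratch"
    add_kernel_param "$param" "$scratch"        # repeating the call changes nothing
done
result="$(cat "$scratch")"
echo "$result"
rm -f "$scratch"
```

The file must remain a single line, which is why the helper rewrites the whole line rather than appending a new one.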

+the IOTstack menu¶

+The menu is used to construct your docker-compose.yml file. That file is read by docker-compose which issues the instructions necessary for starting your stack.

The menu is a great way to get started quickly but it is only an aid. It is a good idea to learn the various docker and docker-compose commands so you can use them outside the menu. It is also a good idea to study the docker-compose.yml generated by the menu to see how everything is put together. You will gain a lot of flexibility if you learn how to add containers by hand.

In essence, the menu is a concatenation tool which appends service definitions that exist inside the hidden ~/IOTstack/.templates folder to your docker-compose.yml.
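To make the idea of "concatenation" concrete, here is a toy sketch that works entirely inside a temporary folder. The folder layout, the file name `service.yml` and the fragment contents are assumptions for illustration; they are not copied from the real `.templates` folder and this is not the menu's actual code.

```shell
#!/usr/bin/env bash
# Illustration only: concatenate per-service fragments into one compose file.
# All paths and file names are hypothetical and live in a temporary folder.
work="$(mktemp -d)"
mkdir -p "$work/templates/mosquitto" "$work/templates/nodered"
printf '  mosquitto:\n    image: eclipse-mosquitto\n' > "$work/templates/mosquitto/service.yml"
printf '  nodered:\n    image: nodered/node-red\n' > "$work/templates/nodered/service.yml"

compose="$work/docker-compose.yml"
printf 'services:\n' > "$compose"           # start with the top-level key
for service in mosquitto nodered; do         # the "selected" containers
    cat "$work/templates/$service/service.yml" >> "$compose"
done
cat "$compose"
```

Seen this way, adding a container by hand amounts to pasting one more service definition under the `services:` key.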

Once you understand what the menu does (and, more importantly, what it doesn't do), you will realise that the real power of IOTstack lies not in its menu system but resides in its conventions.

+menu item: Build Stack¶

+To create your first docker-compose.yml:

$ cd ~/IOTstack

+$ ./menu.sh

+Select "Build Stack"

+Follow the on-screen prompts and select the containers you need.

The best advice we can give is "start small". Limit yourself to the core containers you actually need (eg Mosquitto, Node-RED, InfluxDB, Grafana, Portainer). You can always add more containers later. Some users have gone overboard with their initial selections and have run into what seem to be Raspberry Pi OS limitations.

+

Key point:

+-

+

- If you are running "new menu" (master branch) and you select Node-RED, you must press the right-arrow and choose at least one add-on node. If you skip this step, Node-RED will not build properly.

- Old menu forces you to choose add-on nodes for Node-RED.

The process finishes by asking you to bring up the stack:

+$ cd ~/IOTstack

+$ docker-compose up -d

The first time you bring up the stack, Docker will download all the images from DockerHub. How long this takes will depend on how many containers you selected and the speed of your internet connection.

Some containers also need to be built locally. Node-RED is an example. Depending on the Node-RED nodes you select, building the image can also take a very long time. This is especially true if you select the SQLite node.

+Be patient (and, if you selected the SQLite node, ignore the huge number of warnings).

+menu item: Docker commands¶

+The commands in this menu execute shell scripts in the root of the project.

+other menu items¶

+The old and new menus differ in the options they offer. You should come back and explore them once your stack is built and running.

+useful commands: docker & docker-compose¶

+Handy rules:

+-

+

docker commands can be executed from anywhere, but

docker-compose commands need to be executed from within ~/IOTstack

+

starting your IOTstack¶

+To start the stack:

+$ cd ~/IOTstack

+$ docker-compose up -d

+Once the stack has been brought up, it will stay up until you take it down. This includes shutdowns and reboots of your Raspberry Pi. If you do not want the stack to start automatically after a reboot, you need to stop the stack before you issue the reboot command.

+logging journald errors¶

If you get a Docker logging error like:

+Cannot create container for service [service name here]: unknown log opt 'max-file' for journald log driver

+-

+

-

+

Run the command:

$ sudo nano /etc/docker/daemon.json

+ -

+

change:

"log-driver": "journald",

to:

"log-driver": "json-file",

+

Logging limits were added to prevent Docker using up lots of RAM if log2ram is enabled, or SD cards being filled with log data and degraded from unnecessary IO. See Docker Logging configurations

+You can also turn logging off or set it to use another option for any service by using the IOTstack docker-compose-override.yml file mentioned at IOTstack/Custom.

Another approach is to change daemon.json to be like this:

{

+ "log-driver": "local",

+ "log-opts": {

+ "max-size": "1m"

+ }

+}

+The local driver is specifically designed to prevent disk exhaustion. Limiting log size to one megabyte also helps, particularly if you only have a limited amount of storage.

If you are familiar with system logging where it is best practice to retain logs spanning days or weeks, you may feel that one megabyte is unreasonably small. However, before you rush to increase the limit, consider that each container is the equivalent of a small computer dedicated to a single task. By their very nature, containers tend to either work as expected or fail outright. That, in turn, means that it is usually only recent container logs showing failures as they happen that are actually useful for diagnosing problems.
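Before bringing up the stack it can help to confirm which log driver is actually configured. The sketch below reads the `log-driver` value from `daemon.json` with a plain text match. The function name `log_driver_of` is illustrative, and the match is deliberately naive rather than a full JSON parse.

```shell
#!/usr/bin/env bash
# Print the "log-driver" value from a daemon.json-style file.
# Naive text match for illustration; this is not a JSON parser.
log_driver_of() {
    sed -n 's/.*"log-driver"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

DAEMON_JSON="/etc/docker/daemon.json"
if [ -r "$DAEMON_JSON" ]; then
    driver="$(log_driver_of "$DAEMON_JSON")"
    echo "configured log driver: ${driver:-(not set)}"
    if [ "$driver" = "journald" ]; then
        # This is the situation that produces the 'max-file' error above.
        echo "journald does not accept the 'max-file' option"
    fi
fi
```

Changes to `daemon.json` generally only take effect once the Docker daemon has been restarted, for example with `sudo systemctl restart docker`.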

+starting an individual container¶

+To start a particular container:

+$ cd ~/IOTstack

+$ docker-compose up -d «container»

+stopping your IOTstack¶

+Stopping aka "downing" the stack stops and deletes all containers, and removes the internal network:

+$ cd ~/IOTstack

+$ docker-compose down

+To stop the stack without removing containers, run:

+$ cd ~/IOTstack

+$ docker-compose stop

+stopping an individual container¶

+stop can also be used to stop individual containers, like this:

$ cd ~/IOTstack

+$ docker-compose stop «container»

This puts the container in a kind of suspended animation. You can resume the container with:

+$ cd ~/IOTstack

+$ docker-compose start «container»

+You can also down a container:

$ cd ~/IOTstack

+$ docker-compose down «container»

+-

+

-

+

If the down command returns an error suggesting that you can't use it to down a container, it actually means that you have an obsolete version of docker-compose. You should upgrade your system. The workaround is to use the old syntax:

$ cd ~/IOTstack

$ docker-compose rm --force --stop -v «container»

+

To reactivate a container which has been stopped and removed:

+$ cd ~/IOTstack

+$ docker-compose up -d «container»

+checking container status¶

+You can check the status of containers with:

+$ docker ps

+or

+$ cd ~/IOTstack

+$ docker-compose ps

+viewing container logs¶

+You can inspect the logs of most containers like this:

+$ docker logs «container»

+for example:

+$ docker logs nodered

+You can also follow a container's log as new entries are added by using the -f flag:

$ docker logs -f nodered

+Terminate with a Control+C. Note that restarting a container will also terminate a followed log.

+restarting a container¶

+You can restart a container in several ways:

+$ cd ~/IOTstack

+$ docker-compose restart «container»

+This kind of restart is the least-powerful form of restart. A good way to think of it is "the container is only restarted, it is not rebuilt".

+If you change a docker-compose.yml setting for a container and/or an environment variable file referenced by docker-compose.yml then a restart is usually not enough to bring the change into effect. You need to make docker-compose notice the change:

$ cd ~/IOTstack

+$ docker-compose up -d «container»

+This type of "restart" rebuilds the container.

+Alternatively, to force a container to rebuild (without changing either docker-compose.yml or an environment variable file):

$ cd ~/IOTstack

+$ docker-compose up -d --force-recreate «container»

+See also updating images built from Dockerfiles if you need to force docker-compose to notice a change to a Dockerfile.

persistent data¶

+Docker allows a container's designer to map folders inside a container to a folder on your disk (SD, SSD, HD). This is done with the "volumes" key in docker-compose.yml. Consider the following snippet for Node-RED:

volumes:

+ - ./volumes/nodered/data:/data

+You read this as two paths, separated by a colon. The:

+-

+

- external path is

./volumes/nodered/data

+ - internal path is

/data

+

In this context, the leading "." means "the folder containing docker-compose.yml", so the external path is actually:

-

+

~/IOTstack/volumes/nodered/data

+

This type of volume is a bind-mount, where the container's internal path is directly linked to the external path. All file-system operations, reads and writes, are mapped directly to the files and folders at the external path.
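The way a volume mapping is read can be sketched in a few lines of shell. The function name `resolve_volume` and the default folder are illustrative assumptions, and the sketch ignores any optional third field such as a read-only suffix.

```shell
#!/usr/bin/env bash
# Split an "external:internal" volume mapping and resolve a leading "./"
# against the folder that holds docker-compose.yml.
# Illustrative helper; any optional mode suffix is not handled.
resolve_volume() {
    local mapping="$1" compose_dir="${2:-$HOME/IOTstack}"
    local external="${mapping%%:*}"     # text before the first colon
    local internal="${mapping#*:}"      # text after the first colon
    case "$external" in
        ./*) external="$compose_dir/${external#./}" ;;
    esac
    printf 'external=%s\ninternal=%s\n' "$external" "$internal"
}

resolve_volume "./volumes/nodered/data:/data"
```

With the default folder, the external path printed for the Node-RED example is the `volumes/nodered/data` folder inside `~/IOTstack`, and the internal path is `/data`.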

+deleting persistent data¶

+If you need a "clean slate" for a container, you can delete its volumes. Using InfluxDB as an example:

+$ cd ~/IOTstack

+$ docker-compose rm --force --stop -v influxdb

+$ sudo rm -rf ./volumes/influxdb

+$ docker-compose up -d influxdb

+When docker-compose tries to bring up InfluxDB, it will notice this volume mapping in docker-compose.yml:

volumes:

+ - ./volumes/influxdb/data:/var/lib/influxdb

+and check to see whether ./volumes/influxdb/data is present. Finding it not there, it does the equivalent of:

$ sudo mkdir -p ./volumes/influxdb/data

When InfluxDB starts, it sees that the folder on the right-hand side of the volumes mapping (/var/lib/influxdb) is empty and initialises new databases.

This is how most containers behave. There are exceptions so it's always a good idea to keep a backup.
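Since deleting a container's persistent data cannot be undone, one option is to archive the folder first. The following is a minimal sketch; the function name `backup_volume` and the archive location are hypothetical. It assumes the container has already been stopped, and files owned by root may require the `tar` command to be run with `sudo`.

```shell
#!/usr/bin/env bash
# Archive one container's folder under <stack_dir>/volumes into a .tar.gz.
# Illustrative helper: name, arguments and archive path are assumptions.
backup_volume() {
    local stack_dir="$1" name="$2" dest="$3"
    # -C changes into the volumes folder so the archive holds relative paths
    tar -czf "$dest" -C "$stack_dir/volumes" "$name"
}

# Example call (not executed here):
#   backup_volume ~/IOTstack influxdb ~/influxdb-backup.tar.gz
```

Because the archive stores paths relative to the volumes folder, it could later be unpacked with `tar -xzf` from inside `~/IOTstack/volumes`.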

+stack maintenance¶

+Breaking update

+Recent changes will require manual steps

+or you may get an error like:

+ERROR: Service "influxdb" uses an undefined network "iotstack_nw"

update Raspberry Pi OS¶