diff --git a/README.md b/README.md

index 5498dbc7..3ee255ad 100644

--- a/README.md

+++ b/README.md

@@ -26,119 +26,179 @@

-# What is Promptulate?

-`Promptulate AI` focuses on building a developer platform for large language model applications, dedicated to providing developers and businesses with the ability to build, extend, and evaluate large language model applications. `Promptulate` is a large language model automation and application development framework under `Promptulate AI`, designed to help developers build industry-level large model applications at a lower cost. It includes most of the common components for application layer development in the LLM field, such as external tool components, model components, Agent intelligent agents, external data source integration modules, data storage modules, and lifecycle modules. With `Promptulate`, you can easily build your own LLM applications.

+## Overview

+

+**Promptulate** is an AI Agent application development framework created by **Cogit Lab**. It gives developers a concise, efficient way to build Agent applications through a Pythonic development paradigm. Its core philosophy is to draw on the wisdom of the open-source community, combining the strengths of various development frameworks to lower the barrier to entry and give developers a shared, consistent way of working. With Promptulate, you can work with components such as LLMs, Agents, Tools, and RAG using succinct code, and most tasks take only a few lines. 🚀

+

+## 💡 Features

+

+- 🐍 Pythonic Code Style: Follows the habits of Python developers with a Pythonic SDK. A single `pne.chat` function encapsulates all the essential functionality.

+- 🧠 Model Compatibility: Supports nearly all types of large models on the market and allows for easy customization to meet specific needs.

+- 🕵️‍♂️ Diverse Agents: Offers various types of Agents, such as WebAgent, ToolAgent, and CodeAgent, which can plan, reason, and act to handle complex problems.

+- 🔗 Low-Cost Integration: Effortlessly integrates tools from different frameworks like LangChain, significantly reducing integration costs.

+- 🔨 Functions as Tools: Converts any Python function directly into a tool usable by Agents, simplifying the tool creation and usage process.

+- 🪝 Lifecycle and Hooks: Provides a rich set of hooks and full lifecycle management, so you can run custom code at various stages of Agents, Tools, and LLMs.

+- 💻 Terminal Integration: Provides built-in terminal client support, so you can interact with your application and debug prompts quickly.

+- ⏱️ Prompt Caching: Offers a caching mechanism for LLM Prompts to reduce repetitive work and enhance development efficiency.
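To make the "Functions as Tools" idea concrete, here is a small stdlib-only sketch of how a plain Python function can be described to an LLM as a callable tool. It is not Promptulate's implementation: `function_to_tool_spec` and `get_weather` are hypothetical, and the output only follows the JSON-schema style commonly used for LLM function calling.

```python
import inspect
from typing import Any, Callable, Dict, List

# Hypothetical mapping from Python annotations to JSON-schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool_spec(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a function-calling style tool description from a Python function (illustrative only)."""
    sig = inspect.signature(func)
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in sig.parameters.items():
        # Unknown or missing annotations fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are mandatory
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str, days: int = 1) -> str:
    """Return a short weather forecast for a city."""
    return f"Sunny in {city} for {days} day(s)"  # stub: a real tool would query an API


spec = function_to_tool_spec(get_weather)
print(spec["name"], spec["parameters"]["required"])  # get_weather ['city']
```

The docstring becomes the tool description, which is what an agent would show the model when deciding whether to call the tool.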

+

+> In the examples below, `pne` is short for Promptulate: `p` and `e` are its first and last letters, and `n` stands for 9, the number of letters between them.

+

+## Supported Base Models

+

+Promptulate integrates the capabilities of [litellm](https://github.com/BerriAI/litellm) and therefore supports nearly all mainstream large model providers, including but not limited to the following:

+

+| Provider | [Completion](https://docs.litellm.ai/docs/#basic-usage) | [Streaming](https://docs.litellm.ai/docs/completion/stream#streaming-responses) | [Async Completion](https://docs.litellm.ai/docs/completion/stream#async-completion) | [Async Streaming](https://docs.litellm.ai/docs/completion/stream#async-streaming) | [Async Embedding](https://docs.litellm.ai/docs/embedding/supported_embedding) | [Async Image Generation](https://docs.litellm.ai/docs/image_generation) |

+| ------------- | ------------- | ------------- | ------------- | ------------- | ------------- | ------------- |

+| [openai](https://docs.litellm.ai/docs/providers/openai) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [azure](https://docs.litellm.ai/docs/providers/azure) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [aws - sagemaker](https://docs.litellm.ai/docs/providers/aws_sagemaker) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [aws - bedrock](https://docs.litellm.ai/docs/providers/bedrock) | ✅ | ✅ | ✅ | ✅ |✅ |

+| [google - vertex_ai [Gemini]](https://docs.litellm.ai/docs/providers/vertex) | ✅ | ✅ | ✅ | ✅ |

+| [google - palm](https://docs.litellm.ai/docs/providers/palm) | ✅ | ✅ | ✅ | ✅ |

+| [google AI Studio - gemini](https://docs.litellm.ai/docs/providers/gemini) | ✅ | | ✅ | | |

+| [mistral ai api](https://docs.litellm.ai/docs/providers/mistral) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [cloudflare AI Workers](https://docs.litellm.ai/docs/providers/cloudflare_workers) | ✅ | ✅ | ✅ | ✅ |

+| [cohere](https://docs.litellm.ai/docs/providers/cohere) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [anthropic](https://docs.litellm.ai/docs/providers/anthropic) | ✅ | ✅ | ✅ | ✅ |

+| [huggingface](https://docs.litellm.ai/docs/providers/huggingface) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [replicate](https://docs.litellm.ai/docs/providers/replicate) | ✅ | ✅ | ✅ | ✅ |

+| [together_ai](https://docs.litellm.ai/docs/providers/togetherai) | ✅ | ✅ | ✅ | ✅ |

+| [openrouter](https://docs.litellm.ai/docs/providers/openrouter) | ✅ | ✅ | ✅ | ✅ |

+| [ai21](https://docs.litellm.ai/docs/providers/ai21) | ✅ | ✅ | ✅ | ✅ |

+| [baseten](https://docs.litellm.ai/docs/providers/baseten) | ✅ | ✅ | ✅ | ✅ |

+| [vllm](https://docs.litellm.ai/docs/providers/vllm) | ✅ | ✅ | ✅ | ✅ |

+| [nlp_cloud](https://docs.litellm.ai/docs/providers/nlp_cloud) | ✅ | ✅ | ✅ | ✅ |

+| [aleph alpha](https://docs.litellm.ai/docs/providers/aleph_alpha) | ✅ | ✅ | ✅ | ✅ |

+| [petals](https://docs.litellm.ai/docs/providers/petals) | ✅ | ✅ | ✅ | ✅ |

+| [ollama](https://docs.litellm.ai/docs/providers/ollama) | ✅ | ✅ | ✅ | ✅ |

+| [deepinfra](https://docs.litellm.ai/docs/providers/deepinfra) | ✅ | ✅ | ✅ | ✅ |

+| [perplexity-ai](https://docs.litellm.ai/docs/providers/perplexity) | ✅ | ✅ | ✅ | ✅ |

+| [Groq AI](https://docs.litellm.ai/docs/providers/groq) | ✅ | ✅ | ✅ | ✅ |

+| [anyscale](https://docs.litellm.ai/docs/providers/anyscale) | ✅ | ✅ | ✅ | ✅ |

+| [voyage ai](https://docs.litellm.ai/docs/providers/voyage) | | | | | ✅ |

+| [xinference [Xorbits Inference]](https://docs.litellm.ai/docs/providers/xinference) | | | | | ✅ |

+

+For more details, please visit the [litellm documentation](https://docs.litellm.ai/docs/providers).

+

+To call a third-party model, pass its litellm model identifier (for example, `ollama/llama2` for a local Ollama model) as the `model` argument:

-# Envisage

-To create a powerful and flexible LLM application development platform for creating autonomous agents that can automate various tasks and applications, `Promptulate` implements an automated AI platform through six components: Core AI Engine, Agent System, APIs and Tools Provider, Multimodal Processing, Knowledge Base, and Task-specific Modules. The Core AI Engine is the core component of the framework, responsible for processing and understanding various inputs, generating outputs, and making decisions. The Agent System is a module that provides high-level guidance and control over AI agent behavior. The APIs and Tools Provider offers APIs and integration libraries for interacting with tools and services. Multimodal Processing is a set of modules for processing and understanding different data types, such as text, images, audio, and video, using deep learning models to extract meaningful information from different data modalities. The Knowledge Base is a large structured knowledge repository for storing and organizing world information, enabling AI agents to access and reason about a vast amount of knowledge. The Task-specific Modules are a set of modules specifically designed to perform specific tasks, such as sentiment analysis, machine translation, or object detection. By combining these components, the framework provides a comprehensive, flexible, and powerful platform for automating various complex tasks and applications.

-

-

-# Features

+```python

+import promptulate as pne

-- Large language model support: Support for various types of large language models through extensible interfaces.

-- Dialogue terminal: Provides a simple dialogue terminal for direct interaction with large language models.

-- Role presets: Provides preset roles for invoking GPT from different perspectives.

-- Long conversation mode: Supports long conversation chat and persistence in multiple ways.

-- External tools: Integrated external tool capabilities for powerful functions such as web search and executing Python code.

-- KEY pool: Provides an API key pool to completely solve the key rate limiting problem.

-- Intelligent agent: Integrates advanced agents such as ReAct and self-ask, empowering LLM with external tools.

-- Autonomous agent mode: Supports calling official API interfaces, autonomous agents, or using agents provided by Promptulate.

-- Chinese optimization: Specifically optimized for the Chinese context, more suitable for Chinese scenarios.

-- Data export: Supports dialogue export in formats such as markdown.

-- Hooks and lifecycles: Provides Agent, Tool, and LLM lifecycles and hook systems.

-- Advanced abstraction: Supports plugin extensions, storage extensions, and large language model extensions.

+resp: str = pne.chat(model="ollama/llama2", messages=[{"content": "Hello, how are you?", "role": "user"}])

+```
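litellm addresses a model with a `provider/model` identifier such as `ollama/llama2`, and `messages` follows the OpenAI chat format: a list of `{"role": ..., "content": ...}` dicts. The stdlib-only sketch below builds a message list and splits an identifier into its parts. `split_model_id` and `build_messages` are hypothetical helpers for illustration, not part of pne or litellm, and litellm's real routing handles more cases (for example, OpenAI model names given without a prefix).

```python
from typing import Dict, List, Optional, Tuple


def split_model_id(model: str) -> Tuple[Optional[str], str]:
    """Split 'provider/model' at the first slash; return (None, model) if there is no prefix."""
    provider, sep, name = model.partition("/")
    return (provider, name) if sep else (None, model)


def build_messages(system: str, turns: List[Tuple[str, str]]) -> List[Dict[str, str]]:
    """Build an OpenAI-style message list from a system prompt and (user, assistant) turns."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        if assistant_text:  # the latest turn may not have an answer yet
            messages.append({"role": "assistant", "content": assistant_text})
    return messages


print(split_model_id("ollama/llama2"))  # ('ollama', 'llama2')
print(split_model_id("gpt-4"))          # (None, 'gpt-4')
history = build_messages("You are a helpful assistant.", [("Hello, how are you?", "")])
print([m["role"] for m in history])     # ['system', 'user']
```

The resulting `history` list has the same shape as the `messages` argument passed to `pne.chat` in the example above.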

-# Quick Start

+## 📗 Related Documentation

-- [Quick Start/Official Documentation](https://undertone0809.github.io/promptulate/#/)

+- [Getting Started/Official Documentation](https://undertone0809.github.io/promptulate/#/)

- [Current Development Plan](https://undertone0809.github.io/promptulate/#/other/plan)

-- [Contribution/Developer's Guide](https://undertone0809.github.io/promptulate/#/other/contribution)

-- [FAQ](https://undertone0809.github.io/promptulate/#/other/faq)

+- [Contributing/Developer's Manual](https://undertone0809.github.io/promptulate/#/other/contribution)

+- [Frequently Asked Questions](https://undertone0809.github.io/promptulate/#/other/faq)

- [PyPI Repository](https://pypi.org/project/promptulate/)

-To install the framework, open the terminal and run the following command:

+## 🛠 Quick Start

+

+- Open the terminal and enter the following command to install the framework:

```shell script

pip install -U promptulate

```

-> Your Python version should be 3.8 or higher.

+> Note: Your Python version should be 3.8 or higher.

-Get started with your "HelloWorld" using the simple program below:

+Reliable, structured output is a foundation of LLM application development: you usually want the model to return data in a stable format. With pne, you can request formatted output directly. In the following example, a Pydantic `BaseModel` describes the data structure the model should return, and it is passed to `pne.chat` through the `output_schema` parameter.

```python

-import os

+from typing import List

import promptulate as pne

+from pydantic import BaseModel, Field

-os.environ['OPENAI_API_KEY'] = "your-key"

+class LLMResponse(BaseModel):

+    provinces: List[str] = Field(description="List of provinces' names")

-agent = pne.WebAgent()

-answer = agent.run("What is the temperature tomorrow in Shanghai")

-print(answer)

+resp: LLMResponse = pne.chat("Please tell me all provinces in China.", output_schema=LLMResponse)

+print(resp)

```

-```

-The temperature tomorrow in Shanghai is expected to be 23°C.

-```

+**Output:**

-> Most of the time, we refer to template as pne, where p and e represent the words that start and end template, and n represents 9, which is the abbreviation of the nine words between p and e.

+```text

+provinces=['Anhui', 'Fujian', 'Gansu', 'Guangdong', 'Guizhou', 'Hainan', 'Hebei', 'Heilongjiang', 'Henan', 'Hubei', 'Hunan', 'Jiangsu', 'Jiangxi', 'Jilin', 'Liaoning', 'Qinghai', 'Shaanxi', 'Shandong', 'Shanxi', 'Sichuan', 'Yunnan', 'Zhejiang', 'Taiwan', 'Guangxi', 'Nei Mongol', 'Ningxia', 'Xinjiang', 'Xizang', 'Beijing', 'Chongqing', 'Shanghai', 'Tianjin', 'Hong Kong', 'Macao']

+```
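Structured output generally works by asking the model for JSON that matches a schema and then validating the reply. pne does this with Pydantic via `output_schema`; the stdlib-only sketch below shows the same general technique with a dataclass instead and is not pne's implementation. `schema_instructions` and `parse_reply` are hypothetical helpers, and `raw_reply` is a made-up model response.

```python
import json
from dataclasses import dataclass, fields
from typing import List


@dataclass
class ProvincesResponse:
    provinces: List[str]


def schema_instructions(cls) -> str:
    """Describe the expected JSON keys so they can be appended to the prompt."""
    keys = ", ".join(f'"{f.name}"' for f in fields(cls))
    return f"Respond only with a JSON object containing the keys: {keys}."


def parse_reply(raw: str) -> ProvincesResponse:
    """Parse the model's JSON reply and check that it matches the schema."""
    data = json.loads(raw)
    provinces = data["provinces"]
    if not isinstance(provinces, list) or not all(isinstance(p, str) for p in provinces):
        raise ValueError("'provinces' must be a list of strings")
    return ProvincesResponse(provinces=provinces)


raw_reply = '{"provinces": ["Anhui", "Fujian", "Gansu"]}'  # made-up model output
print(schema_instructions(ProvincesResponse))
print(parse_reply(raw_reply))  # ProvincesResponse(provinces=['Anhui', 'Fujian', 'Gansu'])
```

Pydantic adds what this sketch leaves out, such as richer type coercion and field descriptions that can be fed back into the prompt.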

-To integrate a variety of external tools, including web search, calculators, and more, into your LLM Agent application, you can use the promptulate library alongside langchain. The langchain library allows you to build a ToolAgent with a collection of tools, such as an image generator based on OpenAI's DALL-E model.

+Inspired by the [Plan-and-Solve](https://arxiv.org/abs/2305.04091) paper, pne also lets developers build Agents that handle complex problems by planning, reasoning, and acting. An Agent's planning ability is enabled with the `enable_plan` parameter.

-Below is an example of how to use the promptulate and langchain libraries to create an image from a text description:

+

-> You need to set the `OPENAI_API_KEY` environment variable to your OpenAI API key. Click [here](https://undertone0809.github.io/promptulate/#/modules/tools/langchain_tool_usage?id=langchain-tool-usage) to see the detail.

+This example uses [Tavily](https://app.tavily.com/) as the web search engine. To use it, obtain an API key from Tavily. The agent also calls an OpenAI model, so an OpenAI API key is required as well.

```python

-import promptulate as pne

-from langchain.agents import load_tools

+import os

-tools: list = load_tools(["dalle-image-generator"])

-agent = pne.ToolAgent(tools=tools)

-output = agent.run("Create an image of a halloween night at a haunted museum")

+os.environ["TAVILY_API_KEY"] = "your_tavily_api_key"

+os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

```

-output:

+Here we use the `TavilySearchResults` tool that LangChain provides in the `langchain_community` package:

-```text

-Here is the generated image: []

+```python

+from langchain_community.tools.tavily_search import TavilySearchResults

+

+tools = [TavilySearchResults(max_results=5)]

```

-

+```python

+import promptulate as pne

-For more detailed information, please refer to the [Quick Start/Official Documentation](https://undertone0809.github.io/promptulate/#/).

+pne.chat("what is the hometown of the 2024 Australia open winner?", model="gpt-4-1106-preview", enable_plan=True, tools=tools)

+```

-# Architecture

+**Output:**

-Currently, `promptulate` is in the rapid development stage and there are still many aspects that need to be improved and discussed. Your participation and discussions are highly welcome. As a large language model automation and application development framework, `promptulate` mainly consists of the following components:

+```text

+[Agent] Assistant Agent start...

+[User instruction] what is the hometown of the 2024 Australia open winner?

+[Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [{"task_id": 1, "description": "Identify the winner of the 2024 Australian Open."}, {"task_id": 2, "description": "Research the identified winner to find their place of birth or hometown."}, {"task_id": 3, "description": "Record the hometown of the 2024 Australian Open winner."}], "next_task_id": 1}

+[Agent] Tool Agent start...

+[User instruction] Identify the winner of the 2024 Australian Open.

+[Thought] Since the current date is March 26, 2024, and the Australian Open typically takes place in January, the event has likely concluded for the year. To identify the winner, I should use the Tavily search tool to find the most recent information on the 2024 Australian Open winner.

+[Action] tavily_search_results_json args: {'query': '2024 Australian Open winner'}

+[Observation] [{'url': 'https://ausopen.com/articles/news/sinner-winner-italian-takes-first-major-ao-2024', 'content': 'The agile right-hander, who had claimed victory from a two-set deficit only once previously in his young career, is the second Italian man to achieve singles glory at a major, following Adriano Panatta in1976.With victories over Andrey Rublev, 10-time AO champion Novak Djokovic, and Medvedev, the Italian is the youngest player to defeat top 5 opponents in the final three matches of a major since Michael Stich did it at Wimbledon in 1991 – just weeks before Sinner was born.\n He saved the only break he faced with an ace down the tee, and helped by scoreboard pressure, broke Medvedev by slamming a huge forehand to force an error from his more experienced rival, sealing the fourth set to take the final to a decider.\n Sensing a shift in momentum as Medvedev served to close out the second at 5-3, Sinner set the RLA crowd alight with a pair of brilliant passing shots en route to creating a break point opportunity, which Medvedev snuffed out with trademark patience, drawing a forehand error from his opponent. 
“We are trying to get better every day, even during the tournament we try to get stronger, trying to understand every situation a little bit better, and I’m so glad to have you there supporting me, understanding me, which sometimes it’s not easy because I am a little bit young sometimes,” he said with a smile.\n Medvedev, who held to love in his first three service games of the second set, piled pressure on the Italian, forcing the right-hander to produce his best tennis to save four break points in a nearly 12-minute second game.\n'}, {'url': 'https://www.cbssports.com/tennis/news/australian-open-2024-jannik-sinner-claims-first-grand-slam-title-in-epic-comeback-win-over-daniil-medvedev/', 'content': '"\nOur Latest Tennis Stories\nSinner makes epic comeback to win Australian Open\nSinner, Sabalenka win Australian Open singles titles\n2024 Australian Open odds, Sinner vs. Medvedev picks\nSabalenka defeats Zheng to win 2024 Australian Open\n2024 Australian Open odds, Sabalenka vs. Zheng picks\n2024 Australian Open odds, Medvedev vs. Zverev picks\nAustralian Open odds: Djokovic vs. Sinner picks, bets\nAustralian Open odds: Gauff vs. Sabalenka picks, bets\nAustralian Open odds: Zheng vs. Yastremska picks, bets\nNick Kyrgios reveals he\'s contemplating retirement\n© 2004-2024 CBS Interactive. Jannik Sinner claims first Grand Slam title in epic comeback win over Daniil Medvedev\nSinner, 22, rallied back from a two-set deficit to become the third ever Italian Grand Slam men\'s singles champion\nAfter almost four hours, Jannik Sinner climbed back from a two-set deficit to win his first ever Grand Slam title with an epic 3-6, 3-6, 6-4, 6-4, 6-3 comeback victory against Daniil Medvedev. 
Sinner became the first Italian man to win the Australian Open since 1976, and just the eighth man to successfully come back from two sets down in a major final.\n He did not drop a single set until his meeting with Djokovic, and that win in itself was an accomplishment as Djokovic was riding a 33-match winning streak at the Australian Open and had never lost a semifinal in Melbourne.\n @janniksin • @wwos • @espn • @eurosport • @wowowtennis pic.twitter.com/DTCIqWoUoR\n"We are trying to get better everyday, and even during the tournament, trying to get stronger and understand the situation a little bit better," Sinner said.'}, {'url': 'https://www.bbc.com/sport/tennis/68120937', 'content': 'Live scores, results and order of play\nAlerts: Get tennis news sent to your phone\nRelated Topics\nTop Stories\nFA Cup: Blackburn Rovers v Wrexham - live text commentary\nRussian skater Valieva given four-year ban for doping\nLinks to Barcelona are \'totally untrue\' - Arteta\nElsewhere on the BBC\nThe truth behind the fake grooming scandal\nFeaturing unseen police footage and interviews with the officers at the heart of the case\nDid their father and uncle kill Nazi war criminals?\n A real-life murder mystery following three brothers in their quest for the truth\nWhat was it like to travel on the fastest plane?\nTake a behind-the-scenes look at the supersonic story of the Concorde\nToxic love, ruthless ambition and shocking betrayal\nTell Me Lies follows a passionate college relationship with unimaginable consequences...\n "\nMarathon man Medvedev runs out of steam\nMedvedev is the first player to lose two Grand Slam finals after winning the opening two sets\nSo many players with the experience of a Grand Slam final have talked about how different the occasion can be, particularly if it is the first time, and potentially overwhelming.\n Jannik Sinner beats Daniil Medvedev in Melbourne final\nJannik Sinner is the youngest player to win the Australian Open men\'s title since 
Novak Djokovic in 2008\nJannik Sinner landed the Grand Slam title he has long promised with an extraordinary fightback to beat Daniil Medvedev in the Australian Open final.\n "\nSinner starts 2024 in inspired form\nSinner won the first Australian Open men\'s final since 2005 which did not feature Roger Federer, Rafael Nadal or Novak Djokovic\nSinner was brought to the forefront of conversation when discussing Grand Slam champions in 2024 following a stunning end to last season.\n'}]

+[Execute Result] {'thought': "The search results have provided consistent information about the winner of the 2024 Australian Open. Jannik Sinner is mentioned as the winner in multiple sources, which confirms the answer to the user's question.", 'action_name': 'finish', 'action_parameters': {'content': 'Jannik Sinner won the 2024 Australian Open.'}}

+[Execute] Execute End.

+[Revised Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [{"task_id": 2, "description": "Research Jannik Sinner to find his place of birth or hometown."}, {"task_id": 3, "description": "Record the hometown of Jannik Sinner, the 2024 Australian Open winner."}], "next_task_id": 2}

+[Agent] Tool Agent start...

+[User instruction] Research Jannik Sinner to find his place of birth or hometown.

+[Thought] To find Jannik Sinner's place of birth or hometown, I should use the search tool to find the most recent and accurate information.

+[Action] tavily_search_results_json args: {'query': 'Jannik Sinner place of birth hometown'}

+[Observation] [{'url': 'https://www.sportskeeda.com/tennis/jannik-sinner-nationality', 'content': "During the semifinal of the Cup, Sinner faced Djokovic for the third time in a row and became the first player to defeat him in a singles match. Jannik Sinner Nationality\nJannik Sinner is an Italian national and was born in Innichen, a town located in the mainly German-speaking area of South Tyrol in northern Italy. A. Jannik Sinner won his maiden Masters 1000 title at the 2023 Canadian Open defeating Alex de Minaur in the straight sets of the final.\n Apart from his glorious triumph at Melbourne Park in 2024, Jannik Sinner's best Grand Slam performance came at the 2023 Wimbledon, where he reached the semifinals. In 2020, Sinner became the youngest player since Novak Djokovic in 2006 to reach the quarter-finals of the French Open."}, {'url': 'https://en.wikipedia.org/wiki/Jannik_Sinner', 'content': "At the 2023 Australian Open, Sinner lost in the 4th round to eventual runner-up Stefanos Tsitsipas in 5 sets.[87]\nSinner then won his seventh title at the Open Sud de France in Montpellier, becoming the first player to win a tour-level title in the season without having dropped a single set and the first since countryman Lorenzo Musetti won the title in Naples in October 2022.[88]\nAt the ABN AMRO Open he defeated top seed and world No. 
3 Stefanos Tsitsipas taking his revenge for the Australian Open loss, for his biggest win ever.[89] At the Cincinnati Masters, he lost in the third round to Félix Auger-Aliassime after being up a set, a break, and 2 match points.[76]\nSeeded 11th at the US Open, he reached the fourth round after defeating Brandon Nakashima in four sets.[77] Next, he defeated Ilya Ivashka in a five set match lasting close to four hours to reach the quarterfinals for the first time at this Major.[78] At five hours and 26 minutes, it was the longest match of Sinner's career up until this point and the fifth-longest in the tournament history[100] as well as the second longest of the season after Andy Murray against Thanasi Kokkinakis at the Australian Open.[101]\nHe reached back to back quarterfinals in Wimbledon after defeating Juan Manuel Cerundolo, Diego Schwartzman, Quentin Halys and Daniel Elahi Galan.[102] He then reached his first Major semifinal after defeating Roman Safiullin, before losing to Novak Djokovic in straight sets.[103] In the following round in the semifinals, he lost in straight sets to career rival and top seed Carlos Alcaraz who returned to world No. 1 following the tournament.[92] In Miami, he reached the quarterfinals of this tournament for a third straight year after defeating Grigor Dimitrov and Andrey Rublev, thus returning to the top 10 in the rankings at world No. 
In the final, he came from a two-set deficit to beat Daniil Medvedev to become the first Italian player, male or female, to win the Australian Open singles title, and the third man to win a Major (the second of which is in the Open Era), the first in 48 years.[8][122]"}, {'url': 'https://www.thesportreview.com/biography/jannik-sinner/', 'content': '• Date of birth: 16 August 2001\n• Age: 22 years old\n• Place of birth: San Candido, Italy\n• Nationality: Italian\n• Height: 188cm / 6ft 2ins\n• Weight: 76kg / 167lbs\n• Plays: Right-handed\n• Turned Pro: 2018\n• Career Prize Money: US$ 4,896,338\n• Instagram: @janniksin\nThe impressive 22-year-old turned professional back in 2018 and soon made an impact on the tour, breaking into the top 100 in the world rankings for the first time in 2019.\n Jannik Sinner (Photo: Dubai Duty Free Tennis Championships)\nSinner ended the season as number 78 in the world, becoming the youngest player since Rafael Nadal in 2003 to end the year in the top 80.\n The Italian then ended the 2019 season in style, qualifying for the 2019 Next Gen ATP Finals and going on to win the tournament with a win over Alex de Minaur in the final.\n Sinner then reached the main draw of a grand slam for the first time at the 2019 US Open, when he came through qualifying to reach the first round, where he lost to Stan Wawrinka.\n Asked to acknowledge some of the key figures in his development, Sinner replied: “I think first of all, my family who always helped me and gave me the confidence to actually change my life when I was 13-and-a-half, 14 years old.\n'}]

+[Execute Result] {'thought': 'The search results have provided two different places of birth for Jannik Sinner: Innichen and San Candido. These are actually the same place, as San Candido is the Italian name and Innichen is the German name for the town. Since the user asked for the place of birth or hometown, I can now provide this information.', 'action_name': 'finish', 'action_parameters': {'content': 'Jannik Sinner was born in San Candido (Italian) / Innichen (German), Italy.'}}

+[Execute] Execute End.

+[Revised Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [], "next_task_id": null}

+[Agent Result] Jannik Sinner was born in San Candido (Italian) / Innichen (German), Italy.

+[Agent] Agent End.

+```
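The log above follows a plan, execute, revise cycle over a JSON plan with `goals`, `tasks`, and `next_task_id`. The sketch below imitates only that control flow with stub functions. `execute_task`, `revise_plan`, and `run_plan` are hypothetical; in a real agent the first two would be LLM and tool calls, and this is not pne's implementation.

```python
from typing import Any, Dict, List, Optional

Plan = Dict[str, Any]


def execute_task(description: str, context: List[str]) -> str:
    """Stub executor: a real agent would call an LLM and tools here, using earlier results as context."""
    return f"done: {description}"


def revise_plan(plan: Plan, finished_id: int, result: str) -> Plan:
    """Stub reviser: drop the finished task and point at the next one.

    A real agent would ask the LLM to rewrite the remaining tasks in light of `result`.
    """
    remaining = [t for t in plan["tasks"] if t["task_id"] != finished_id]
    next_id: Optional[int] = remaining[0]["task_id"] if remaining else None
    return {"goals": plan["goals"], "tasks": remaining, "next_task_id": next_id}


def run_plan(plan: Plan) -> List[str]:
    """Execute tasks one at a time until the plan has no next task."""
    results: List[str] = []
    while plan["next_task_id"] is not None:
        task = next(t for t in plan["tasks"] if t["task_id"] == plan["next_task_id"])
        result = execute_task(task["description"], results)
        results.append(result)
        plan = revise_plan(plan, task["task_id"], result)
    return results


plan = {
    "goals": ["Find the hometown of the 2024 Australian Open winner"],
    "tasks": [
        {"task_id": 1, "description": "Identify the winner of the 2024 Australian Open."},
        {"task_id": 2, "description": "Research the identified winner to find their hometown."},
    ],
    "next_task_id": 1,
}
print(run_plan(plan))
```

The loop ends when the revised plan has `"next_task_id": null` (`None` in Python), which matches the final `[Revised Plan]` line in the log.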

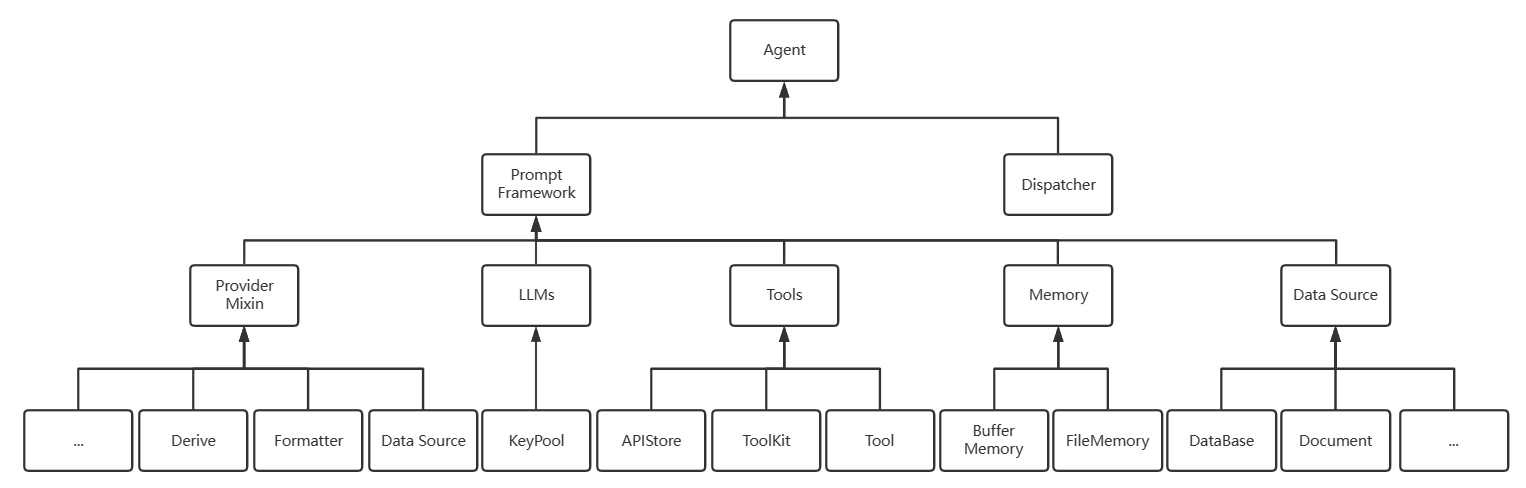

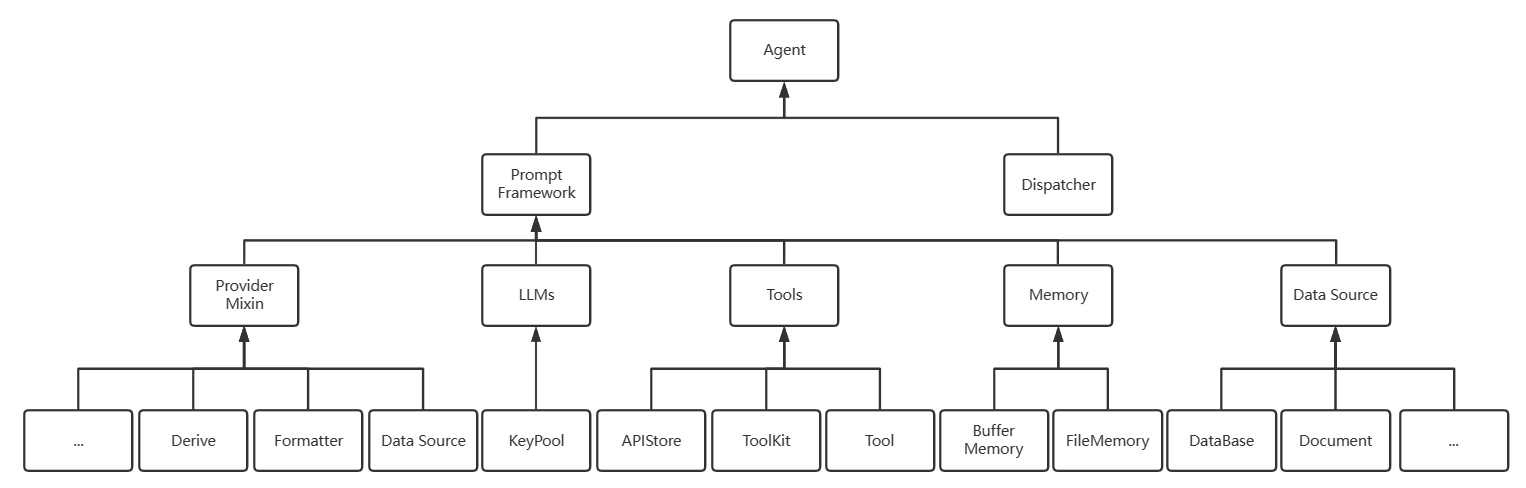

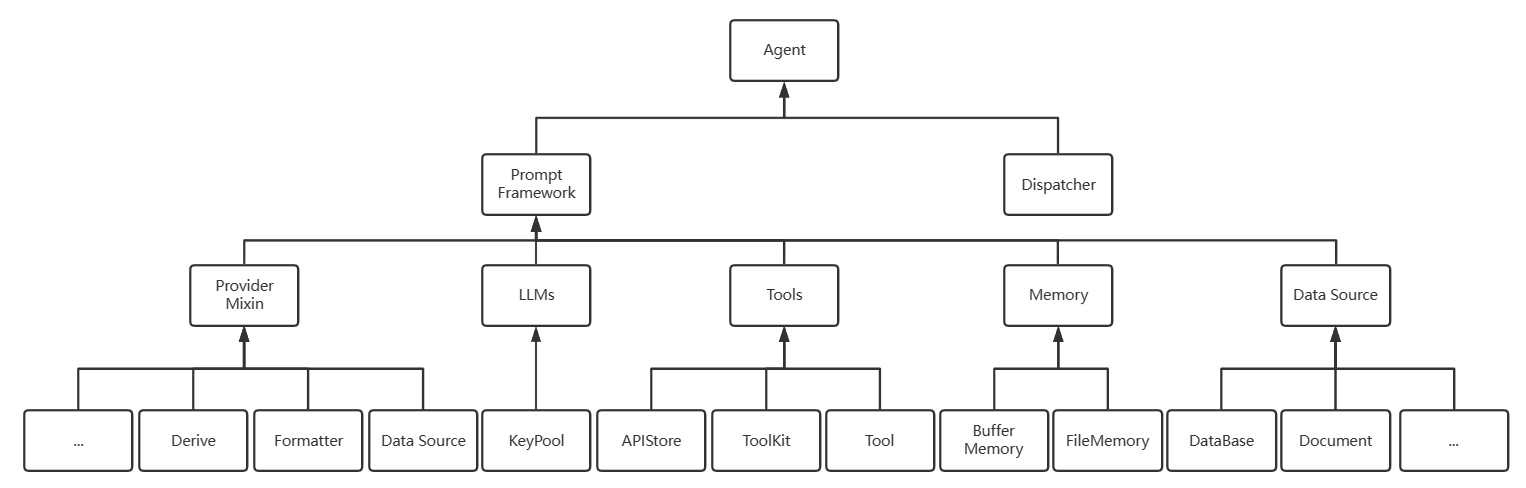

-- `Agent`: More advanced execution units responsible for task scheduling and distribution.

-- `llm`: Large language model responsible for generating answers, supporting different types of large language models.

-- `Memory`: Responsible for storing conversations, supporting different storage methods and extensions such as file storage and database storage.

-- `Framework`: Framework layer that implements different prompt frameworks, including the basic `Conversation` model and models such as `self-ask` and `ReAct`.

-- `Tool`: Provides external tool extensions for search engines, calculators, etc.

-- `Hook&Lifecycle`: Hook system and lifecycle system that allows developers to customize lifecycle logic control.

-- `Role presets`: Provides preset roles for customized conversations.

-- `Provider`: Provides more data sources or autonomous operations for the system, such as connecting to databases.

+For more detailed information, please check the [Getting Started/Official Documentation](https://undertone0809.github.io/promptulate/#/).

-


+| [anyscale](https://docs.litellm.ai/docs/providers/anyscale) | ✅ | ✅ | ✅ | ✅ |

+| [voyage ai](https://docs.litellm.ai/docs/providers/voyage) | | | | | ✅ |

+| [xinference [Xorbits Inference]](https://docs.litellm.ai/docs/providers/xinference) | | | | | ✅ |

+

+For more details, please visit the [litellm documentation](https://docs.litellm.ai/docs/providers).

+

+You can call any of these third-party models with `pne.chat` by passing a litellm-style model name, for example:

-# Envisage

-To create a powerful and flexible LLM application development platform for creating autonomous agents that can automate various tasks and applications, `Promptulate` implements an automated AI platform through six components: Core AI Engine, Agent System, APIs and Tools Provider, Multimodal Processing, Knowledge Base, and Task-specific Modules. The Core AI Engine is the core component of the framework, responsible for processing and understanding various inputs, generating outputs, and making decisions. The Agent System is a module that provides high-level guidance and control over AI agent behavior. The APIs and Tools Provider offers APIs and integration libraries for interacting with tools and services. Multimodal Processing is a set of modules for processing and understanding different data types, such as text, images, audio, and video, using deep learning models to extract meaningful information from different data modalities. The Knowledge Base is a large structured knowledge repository for storing and organizing world information, enabling AI agents to access and reason about a vast amount of knowledge. The Task-specific Modules are a set of modules specifically designed to perform specific tasks, such as sentiment analysis, machine translation, or object detection. By combining these components, the framework provides a comprehensive, flexible, and powerful platform for automating various complex tasks and applications.

-

-

-# Features

+```python

+import promptulate as pne

-- Large language model support: Support for various types of large language models through extensible interfaces.

-- Dialogue terminal: Provides a simple dialogue terminal for direct interaction with large language models.

-- Role presets: Provides preset roles for invoking GPT from different perspectives.

-- Long conversation mode: Supports long conversation chat and persistence in multiple ways.

-- External tools: Integrated external tool capabilities for powerful functions such as web search and executing Python code.

-- KEY pool: Provides an API key pool to completely solve the key rate limiting problem.

-- Intelligent agent: Integrates advanced agents such as ReAct and self-ask, empowering LLM with external tools.

-- Autonomous agent mode: Supports calling official API interfaces, autonomous agents, or using agents provided by Promptulate.

-- Chinese optimization: Specifically optimized for the Chinese context, more suitable for Chinese scenarios.

-- Data export: Supports dialogue export in formats such as markdown.

-- Hooks and lifecycles: Provides Agent, Tool, and LLM lifecycles and hook systems.

-- Advanced abstraction: Supports plugin extensions, storage extensions, and large language model extensions.

+resp: str = pne.chat(model="ollama/llama2", messages=[{"content": "Hello, how are you?", "role": "user"}])

+```
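The `model` argument above follows litellm's `provider/model` naming. As a rough illustration only (litellm's real routing logic is more involved), the hypothetical helpers below split such a name on its first slash and build the OpenAI-style `messages` list used in the call.

```python
from typing import Dict, List, Optional, Tuple


def split_model_name(model: str) -> Tuple[Optional[str], str]:
    """Split a litellm-style "provider/model" string on its first slash.

    Names without a slash (e.g. "gpt-4-1106-preview") return provider None;
    litellm itself infers the provider for many such names.
    """
    provider, sep, name = model.partition("/")
    return (provider, name) if sep else (None, model)


def user_message(content: str) -> List[Dict[str, str]]:
    """Build a single-turn, OpenAI-style messages list."""
    return [{"role": "user", "content": content}]


print(split_model_name("ollama/llama2"))
print(split_model_name("gpt-4-1106-preview"))
print(user_message("Hello, how are you?"))
```

Splitting only on the first slash keeps model names that themselves contain slashes (as many Hugging Face model IDs do) intact.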

-# Quick Start

+## 📗 Related Documentation

-- [Quick Start/Official Documentation](https://undertone0809.github.io/promptulate/#/)

+- [Getting Started/Official Documentation](https://undertone0809.github.io/promptulate/#/)

- [Current Development Plan](https://undertone0809.github.io/promptulate/#/other/plan)

-- [Contribution/Developer's Guide](https://undertone0809.github.io/promptulate/#/other/contribution)

-- [FAQ](https://undertone0809.github.io/promptulate/#/other/faq)

+- [Contributing/Developer's Manual](https://undertone0809.github.io/promptulate/#/other/contribution)

+- [Frequently Asked Questions](https://undertone0809.github.io/promptulate/#/other/faq)

- [PyPI Repository](https://pypi.org/project/promptulate/)

-To install the framework, open the terminal and run the following command:

+## 🛠 Quick Start

+

+- Open the terminal and enter the following command to install the framework:

```shell script

pip install -U promptulate

```

-> Your Python version should be 3.8 or higher.

+> Note: Your Python version should be 3.8 or higher.

-Get started with your "HelloWorld" using the simple program below:

+Robust, structured output is a foundation of LLM application development: you want the model to return data in a stable, predictable shape. pne makes formatted output easy. In the following example, a Pydantic `BaseModel` defines the data structure to be returned, and it is passed to `pne.chat` through the `output_schema` parameter.

```python

-import os

+from typing import List

import promptulate as pne

+from pydantic import BaseModel, Field

-os.environ['OPENAI_API_KEY'] = "your-key"

+class LLMResponse(BaseModel):

+ provinces: List[str] = Field(description="List of provinces' names")

-agent = pne.WebAgent()

-answer = agent.run("What is the temperature tomorrow in Shanghai")

-print(answer)

+resp: LLMResponse = pne.chat("Please tell me all provinces in China.", output_schema=LLMResponse)

+print(resp)

```

-```

-The temperature tomorrow in Shanghai is expected to be 23°C.

-```

+**Output:**

-> Most of the time, we refer to template as pne, where p and e represent the words that start and end template, and n represents 9, which is the abbreviation of the nine words between p and e.

+```text

+provinces=['Anhui', 'Fujian', 'Gansu', 'Guangdong', 'Guizhou', 'Hainan', 'Hebei', 'Heilongjiang', 'Henan', 'Hubei', 'Hunan', 'Jiangsu', 'Jiangxi', 'Jilin', 'Liaoning', 'Qinghai', 'Shaanxi', 'Shandong', 'Shanxi', 'Sichuan', 'Yunnan', 'Zhejiang', 'Taiwan', 'Guangxi', 'Nei Mongol', 'Ningxia', 'Xinjiang', 'Xizang', 'Beijing', 'Chongqing', 'Shanghai', 'Tianjin', 'Hong Kong', 'Macao']

+```
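How pne implements `output_schema` internally is not shown in this README. The stdlib-only sketch below illustrates the general validate-then-construct idea behind structured output: parse the model's JSON reply and reject it unless it matches the expected fields. A `dataclass` stands in for the Pydantic model, and `parse_structured` is a hypothetical helper.

```python
import json
from dataclasses import dataclass, fields
from typing import List


@dataclass
class LLMResponse:
    # Stand-in for the Pydantic BaseModel in the example above.
    provinces: List[str]


def parse_structured(raw: str) -> LLMResponse:
    """Parse an LLM's JSON reply and validate it against LLMResponse."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {f.name for f in fields(LLMResponse)} - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    provinces = data["provinces"]
    if not isinstance(provinces, list) or not all(isinstance(p, str) for p in provinces):
        raise ValueError("provinces must be a list of strings")
    return LLMResponse(provinces=provinces)


print(parse_structured('{"provinces": ["Anhui", "Fujian"]}'))
```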

-To integrate a variety of external tools, including web search, calculators, and more, into your LLM Agent application, you can use the promptulate library alongside langchain. The langchain library allows you to build a ToolAgent with a collection of tools, such as an image generator based on OpenAI's DALL-E model.

+Additionally, inspired by the [Plan-and-Solve](https://arxiv.org/abs/2305.04091) paper, pne lets developers build Agents that handle complex problems by planning, reasoning, and acting. An Agent's planning ability is switched on with the `enable_plan` parameter.

-Below is an example of how to use the promptulate and langchain libraries to create an image from a text description:

+

-> You need to set the `OPENAI_API_KEY` environment variable to your OpenAI API key. Click [here](https://undertone0809.github.io/promptulate/#/modules/tools/langchain_tool_usage?id=langchain-tool-usage) to see the detail.

+In this example, we use [Tavily](https://app.tavily.com/) as the web search engine. To use Tavily, obtain an API key from Tavily and set it, along with your OpenAI API key, as environment variables:

```python

-import promptulate as pne

-from langchain.agents import load_tools

+import os

-tools: list = load_tools(["dalle-image-generator"])

-agent = pne.ToolAgent(tools=tools)

-output = agent.run("Create an image of a halloween night at a haunted museum")

+os.environ["TAVILY_API_KEY"] = "your_tavily_api_key"

+os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

```
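Hard-coding keys is fine for a quick test, but in real projects the keys usually come from the environment. A small, hypothetical check (`missing_keys` is not part of pne) lets a script fail fast with a clear message instead of erroring mid-run:

```python
import os
from typing import List, Mapping


def missing_keys(required: List[str], env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in required if not env.get(name)]


missing = missing_keys(["TAVILY_API_KEY", "OPENAI_API_KEY"])
if missing:
    print("Please set:", ", ".join(missing))
```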

-output:

+Here we use the `TavilySearchResults` tool wrapped by LangChain (this requires the `langchain-community` package):

-```text

-Here is the generated image: []

+```python

+from langchain_community.tools.tavily_search import TavilySearchResults

+

+tools = [TavilySearchResults(max_results=5)]

```
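Besides LangChain tools, the feature list states that any Python function can be turned into a tool. The README does not show how pne does this internally. The following is a hypothetical sketch (`function_to_tool` is not a pne API) of one common approach: deriving a JSON-schema-style tool description from a function's name, docstring, and type hints with `inspect`.

```python
import inspect
from typing import Any, Callable, Dict, List

# Rough mapping from Python annotations to JSON-schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Describe a plain function as a tool: name, docstring, typed parameters."""
    properties: Dict[str, Dict[str, str]] = {}
    required: List[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value -> the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def web_search(query: str, max_results: int = 5) -> str:
    """Search the web and return a summary of the top results."""
    return f"results for {query!r}"


print(function_to_tool(web_search))
```

The docstring becomes the description the LLM reads when deciding whether to call the tool, so a clear one-line docstring matters as much as the type hints.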

-

+```python

+import promptulate as pne

-For more detailed information, please refer to the [Quick Start/Official Documentation](https://undertone0809.github.io/promptulate/#/).

+pne.chat("what is the hometown of the 2024 Australia open winner?", model="gpt-4-1106-preview", enable_plan=True, tools=tools)

+```

-# Architecture

+**Output:**

-Currently, `promptulate` is in the rapid development stage and there are still many aspects that need to be improved and discussed. Your participation and discussions are highly welcome. As a large language model automation and application development framework, `promptulate` mainly consists of the following components:

+```text

+[Agent] Assistant Agent start...

+[User instruction] what is the hometown of the 2024 Australia open winner?

+[Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [{"task_id": 1, "description": "Identify the winner of the 2024 Australian Open."}, {"task_id": 2, "description": "Research the identified winner to find their place of birth or hometown."}, {"task_id": 3, "description": "Record the hometown of the 2024 Australian Open winner."}], "next_task_id": 1}

+[Agent] Tool Agent start...

+[User instruction] Identify the winner of the 2024 Australian Open.

+[Thought] Since the current date is March 26, 2024, and the Australian Open typically takes place in January, the event has likely concluded for the year. To identify the winner, I should use the Tavily search tool to find the most recent information on the 2024 Australian Open winner.

+[Action] tavily_search_results_json args: {'query': '2024 Australian Open winner'}

+[Observation] [{'url': 'https://ausopen.com/articles/news/sinner-winner-italian-takes-first-major-ao-2024', 'content': 'The agile right-hander, who had claimed victory from a two-set deficit only once previously in his young career, is the second Italian man to achieve singles glory at a major, following Adriano Panatta in1976.With victories over Andrey Rublev, 10-time AO champion Novak Djokovic, and Medvedev, the Italian is the youngest player to defeat top 5 opponents in the final three matches of a major since Michael Stich did it at Wimbledon in 1991 – just weeks before Sinner was born.\n He saved the only break he faced with an ace down the tee, and helped by scoreboard pressure, broke Medvedev by slamming a huge forehand to force an error from his more experienced rival, sealing the fourth set to take the final to a decider.\n Sensing a shift in momentum as Medvedev served to close out the second at 5-3, Sinner set the RLA crowd alight with a pair of brilliant passing shots en route to creating a break point opportunity, which Medvedev snuffed out with trademark patience, drawing a forehand error from his opponent. 
“We are trying to get better every day, even during the tournament we try to get stronger, trying to understand every situation a little bit better, and I’m so glad to have you there supporting me, understanding me, which sometimes it’s not easy because I am a little bit young sometimes,” he said with a smile.\n Medvedev, who held to love in his first three service games of the second set, piled pressure on the Italian, forcing the right-hander to produce his best tennis to save four break points in a nearly 12-minute second game.\n'}, {'url': 'https://www.cbssports.com/tennis/news/australian-open-2024-jannik-sinner-claims-first-grand-slam-title-in-epic-comeback-win-over-daniil-medvedev/', 'content': '"\nOur Latest Tennis Stories\nSinner makes epic comeback to win Australian Open\nSinner, Sabalenka win Australian Open singles titles\n2024 Australian Open odds, Sinner vs. Medvedev picks\nSabalenka defeats Zheng to win 2024 Australian Open\n2024 Australian Open odds, Sabalenka vs. Zheng picks\n2024 Australian Open odds, Medvedev vs. Zverev picks\nAustralian Open odds: Djokovic vs. Sinner picks, bets\nAustralian Open odds: Gauff vs. Sabalenka picks, bets\nAustralian Open odds: Zheng vs. Yastremska picks, bets\nNick Kyrgios reveals he\'s contemplating retirement\n© 2004-2024 CBS Interactive. Jannik Sinner claims first Grand Slam title in epic comeback win over Daniil Medvedev\nSinner, 22, rallied back from a two-set deficit to become the third ever Italian Grand Slam men\'s singles champion\nAfter almost four hours, Jannik Sinner climbed back from a two-set deficit to win his first ever Grand Slam title with an epic 3-6, 3-6, 6-4, 6-4, 6-3 comeback victory against Daniil Medvedev. 
Sinner became the first Italian man to win the Australian Open since 1976, and just the eighth man to successfully come back from two sets down in a major final.\n He did not drop a single set until his meeting with Djokovic, and that win in itself was an accomplishment as Djokovic was riding a 33-match winning streak at the Australian Open and had never lost a semifinal in Melbourne.\n @janniksin • @wwos • @espn • @eurosport • @wowowtennis pic.twitter.com/DTCIqWoUoR\n"We are trying to get better everyday, and even during the tournament, trying to get stronger and understand the situation a little bit better," Sinner said.'}, {'url': 'https://www.bbc.com/sport/tennis/68120937', 'content': 'Live scores, results and order of play\nAlerts: Get tennis news sent to your phone\nRelated Topics\nTop Stories\nFA Cup: Blackburn Rovers v Wrexham - live text commentary\nRussian skater Valieva given four-year ban for doping\nLinks to Barcelona are \'totally untrue\' - Arteta\nElsewhere on the BBC\nThe truth behind the fake grooming scandal\nFeaturing unseen police footage and interviews with the officers at the heart of the case\nDid their father and uncle kill Nazi war criminals?\n A real-life murder mystery following three brothers in their quest for the truth\nWhat was it like to travel on the fastest plane?\nTake a behind-the-scenes look at the supersonic story of the Concorde\nToxic love, ruthless ambition and shocking betrayal\nTell Me Lies follows a passionate college relationship with unimaginable consequences...\n "\nMarathon man Medvedev runs out of steam\nMedvedev is the first player to lose two Grand Slam finals after winning the opening two sets\nSo many players with the experience of a Grand Slam final have talked about how different the occasion can be, particularly if it is the first time, and potentially overwhelming.\n Jannik Sinner beats Daniil Medvedev in Melbourne final\nJannik Sinner is the youngest player to win the Australian Open men\'s title since 
Novak Djokovic in 2008\nJannik Sinner landed the Grand Slam title he has long promised with an extraordinary fightback to beat Daniil Medvedev in the Australian Open final.\n "\nSinner starts 2024 in inspired form\nSinner won the first Australian Open men\'s final since 2005 which did not feature Roger Federer, Rafael Nadal or Novak Djokovic\nSinner was brought to the forefront of conversation when discussing Grand Slam champions in 2024 following a stunning end to last season.\n'}]

+[Execute Result] {'thought': "The search results have provided consistent information about the winner of the 2024 Australian Open. Jannik Sinner is mentioned as the winner in multiple sources, which confirms the answer to the user's question.", 'action_name': 'finish', 'action_parameters': {'content': 'Jannik Sinner won the 2024 Australian Open.'}}

+[Execute] Execute End.

+[Revised Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [{"task_id": 2, "description": "Research Jannik Sinner to find his place of birth or hometown."}, {"task_id": 3, "description": "Record the hometown of Jannik Sinner, the 2024 Australian Open winner."}], "next_task_id": 2}

+[Agent] Tool Agent start...

+[User instruction] Research Jannik Sinner to find his place of birth or hometown.

+[Thought] To find Jannik Sinner's place of birth or hometown, I should use the search tool to find the most recent and accurate information.

+[Action] tavily_search_results_json args: {'query': 'Jannik Sinner place of birth hometown'}

+[Observation] [{'url': 'https://www.sportskeeda.com/tennis/jannik-sinner-nationality', 'content': "During the semifinal of the Cup, Sinner faced Djokovic for the third time in a row and became the first player to defeat him in a singles match. Jannik Sinner Nationality\nJannik Sinner is an Italian national and was born in Innichen, a town located in the mainly German-speaking area of South Tyrol in northern Italy. A. Jannik Sinner won his maiden Masters 1000 title at the 2023 Canadian Open defeating Alex de Minaur in the straight sets of the final.\n Apart from his glorious triumph at Melbourne Park in 2024, Jannik Sinner's best Grand Slam performance came at the 2023 Wimbledon, where he reached the semifinals. In 2020, Sinner became the youngest player since Novak Djokovic in 2006 to reach the quarter-finals of the French Open."}, {'url': 'https://en.wikipedia.org/wiki/Jannik_Sinner', 'content': "At the 2023 Australian Open, Sinner lost in the 4th round to eventual runner-up Stefanos Tsitsipas in 5 sets.[87]\nSinner then won his seventh title at the Open Sud de France in Montpellier, becoming the first player to win a tour-level title in the season without having dropped a single set and the first since countryman Lorenzo Musetti won the title in Naples in October 2022.[88]\nAt the ABN AMRO Open he defeated top seed and world No. 
3 Stefanos Tsitsipas taking his revenge for the Australian Open loss, for his biggest win ever.[89] At the Cincinnati Masters, he lost in the third round to Félix Auger-Aliassime after being up a set, a break, and 2 match points.[76]\nSeeded 11th at the US Open, he reached the fourth round after defeating Brandon Nakashima in four sets.[77] Next, he defeated Ilya Ivashka in a five set match lasting close to four hours to reach the quarterfinals for the first time at this Major.[78] At five hours and 26 minutes, it was the longest match of Sinner's career up until this point and the fifth-longest in the tournament history[100] as well as the second longest of the season after Andy Murray against Thanasi Kokkinakis at the Australian Open.[101]\nHe reached back to back quarterfinals in Wimbledon after defeating Juan Manuel Cerundolo, Diego Schwartzman, Quentin Halys and Daniel Elahi Galan.[102] He then reached his first Major semifinal after defeating Roman Safiullin, before losing to Novak Djokovic in straight sets.[103] In the following round in the semifinals, he lost in straight sets to career rival and top seed Carlos Alcaraz who returned to world No. 1 following the tournament.[92] In Miami, he reached the quarterfinals of this tournament for a third straight year after defeating Grigor Dimitrov and Andrey Rublev, thus returning to the top 10 in the rankings at world No. 
In the final, he came from a two-set deficit to beat Daniil Medvedev to become the first Italian player, male or female, to win the Australian Open singles title, and the third man to win a Major (the second of which is in the Open Era), the first in 48 years.[8][122]"}, {'url': 'https://www.thesportreview.com/biography/jannik-sinner/', 'content': '• Date of birth: 16 August 2001\n• Age: 22 years old\n• Place of birth: San Candido, Italy\n• Nationality: Italian\n• Height: 188cm / 6ft 2ins\n• Weight: 76kg / 167lbs\n• Plays: Right-handed\n• Turned Pro: 2018\n• Career Prize Money: US$ 4,896,338\n• Instagram: @janniksin\nThe impressive 22-year-old turned professional back in 2018 and soon made an impact on the tour, breaking into the top 100 in the world rankings for the first time in 2019.\n Jannik Sinner (Photo: Dubai Duty Free Tennis Championships)\nSinner ended the season as number 78 in the world, becoming the youngest player since Rafael Nadal in 2003 to end the year in the top 80.\n The Italian then ended the 2019 season in style, qualifying for the 2019 Next Gen ATP Finals and going on to win the tournament with a win over Alex de Minaur in the final.\n Sinner then reached the main draw of a grand slam for the first time at the 2019 US Open, when he came through qualifying to reach the first round, where he lost to Stan Wawrinka.\n Asked to acknowledge some of the key figures in his development, Sinner replied: “I think first of all, my family who always helped me and gave me the confidence to actually change my life when I was 13-and-a-half, 14 years old.\n'}]

+[Execute Result] {'thought': 'The search results have provided two different places of birth for Jannik Sinner: Innichen and San Candido. These are actually the same place, as San Candido is the Italian name and Innichen is the German name for the town. Since the user asked for the place of birth or hometown, I can now provide this information.', 'action_name': 'finish', 'action_parameters': {'content': 'Jannik Sinner was born in San Candido (Italian) / Innichen (German), Italy.'}}

+[Execute] Execute End.

+[Revised Plan] {"goals": ["Find the hometown of the 2024 Australian Open winner"], "tasks": [], "next_task_id": null}

+[Agent Result] Jannik Sinner was born in San Candido (Italian) / Innichen (German), Italy.

+[Agent] Agent End.

+```
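The log above follows a plan, execute, revise loop. pne drives each step with an LLM; the sketch below replaces the LLM and the Tool Agent with a stub (`fake_execute` and `run_plan` are hypothetical) to show only the control flow visible in the log: take the next task, execute it, revise the plan, and stop when no tasks remain. The plan dictionary mirrors the `{"goals", "tasks", "next_task_id"}` shape printed above.

```python
import json
from typing import Callable, Dict, List


def run_plan(plan: Dict, execute: Callable[[str, List[str]], str]) -> List[str]:
    """Run a Plan-and-Solve style task list, one task at a time."""
    results: List[str] = []
    while plan["tasks"]:
        task, *rest = plan["tasks"]
        # Each step sees the results of earlier steps as context.
        results.append(execute(task["description"], results))
        # "Revise" the plan: drop the finished task and point at the next one.
        # (A real agent would ask the LLM to rewrite the remaining tasks here.)
        plan = {**plan, "tasks": rest, "next_task_id": rest[0]["task_id"] if rest else None}
        print("[Revised Plan]", json.dumps(plan))
    return results


def fake_execute(description: str, context: List[str]) -> str:
    # Stand-in for a Tool Agent; returns a canned answer per step.
    return f"done: {description} (after {len(context)} earlier steps)"


plan = {
    "goals": ["Find the hometown of the 2024 Australian Open winner"],
    "tasks": [
        {"task_id": 1, "description": "Identify the winner."},
        {"task_id": 2, "description": "Find the winner's hometown."},
    ],
    "next_task_id": 1,
}
print(run_plan(plan, fake_execute))
```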

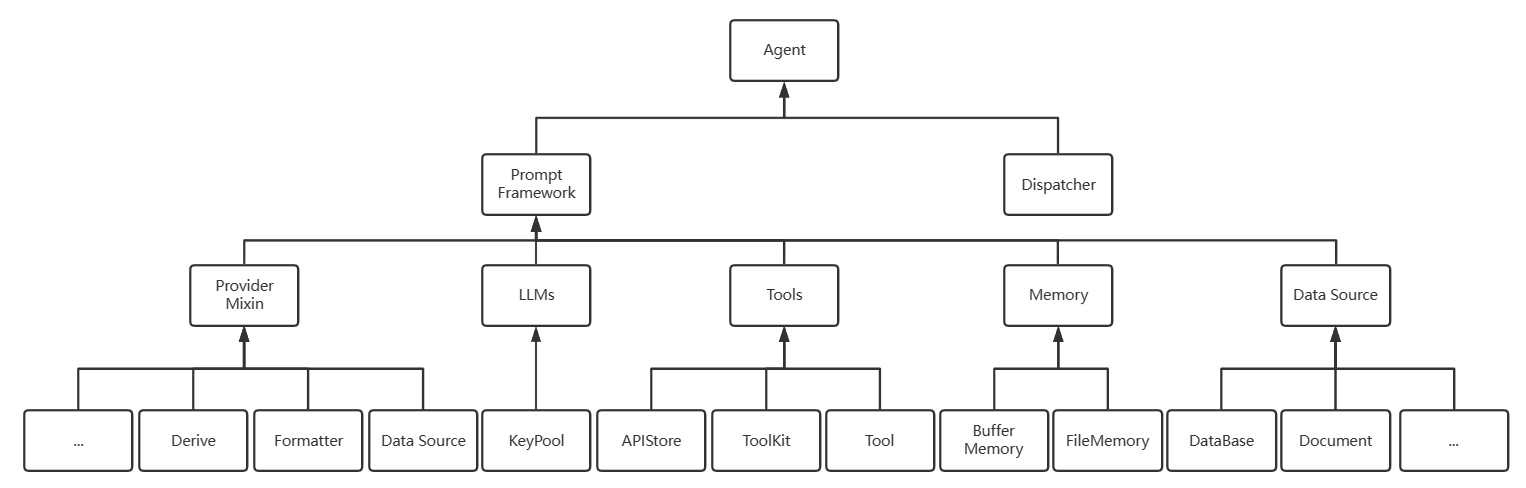

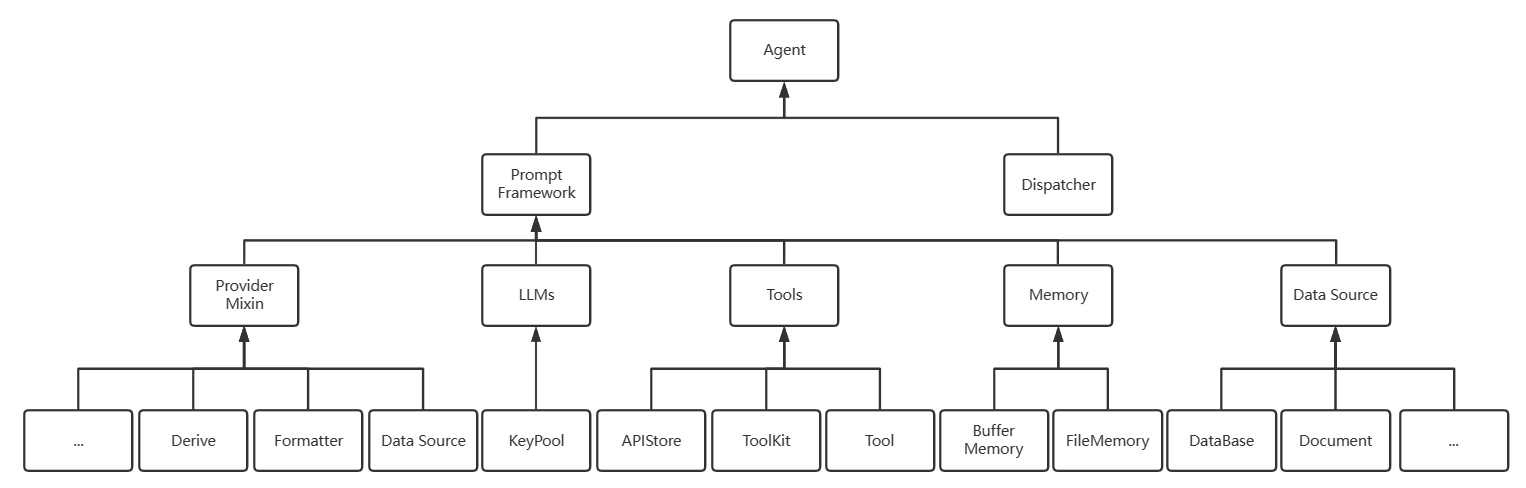

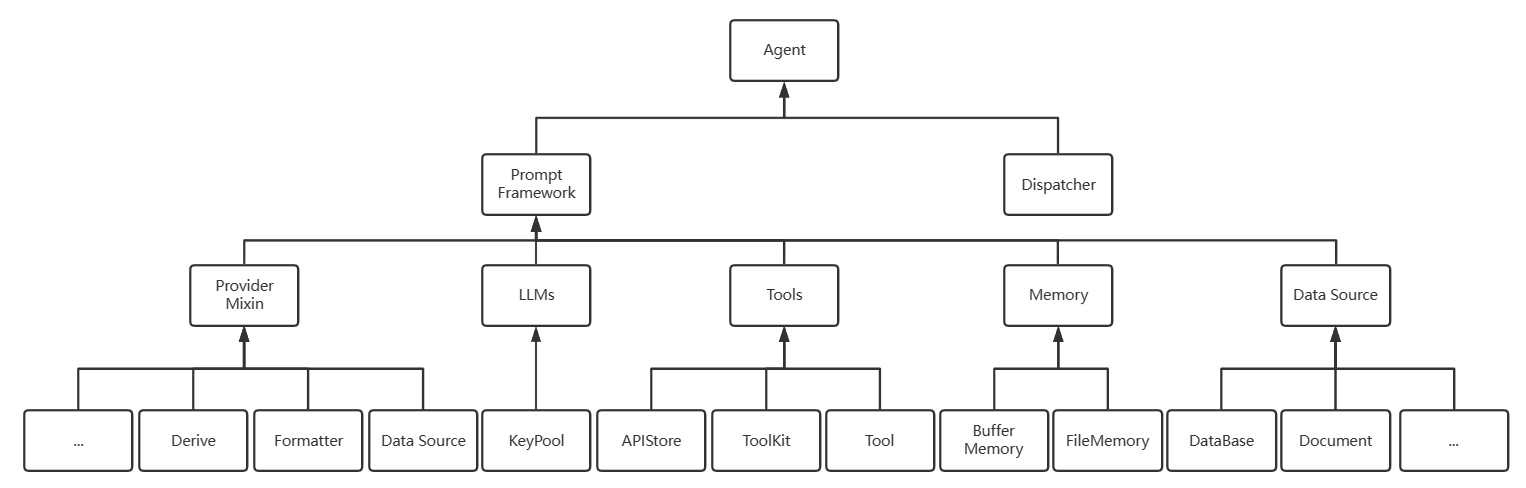

-- `Agent`: More advanced execution units responsible for task scheduling and distribution.

-- `llm`: Large language model responsible for generating answers, supporting different types of large language models.

-- `Memory`: Responsible for storing conversations, supporting different storage methods and extensions such as file storage and database storage.

-- `Framework`: Framework layer that implements different prompt frameworks, including the basic `Conversation` model and models such as `self-ask` and `ReAct`.

-- `Tool`: Provides external tool extensions for search engines, calculators, etc.

-- `Hook&Lifecycle`: Hook system and lifecycle system that allows developers to customize lifecycle logic control.

-- `Role presets`: Provides preset roles for customized conversations.

-- `Provider`: Provides more data sources or autonomous operations for the system, such as connecting to databases.

+For more detailed information, please check the [Getting Started/Official Documentation](https://undertone0809.github.io/promptulate/#/).

+## 📚 Design Principles

-# Design Principles

+The design principles of the pne framework include modularity, scalability, interoperability, robustness, maintainability, security, efficiency, and usability.

-The design principles of the `promptulate` framework include modularity, scalability, interoperability, robustness, maintainability, security, efficiency, and usability.

+- Modularity refers to using modules as the basic unit, allowing for easy integration of new components, models, and tools.

+- Scalability refers to the framework's ability to handle large amounts of data, complex tasks, and high concurrency.

+- Interoperability means the framework is compatible with various external systems, tools, and services and can achieve seamless integration and communication.

+- Robustness indicates the framework has strong error handling, fault tolerance, and recovery mechanisms to ensure reliable operation under various conditions.

+- Security means the framework applies strict measures to protect itself, its data, and its users against unauthorized access and malicious behavior.

+- Efficiency is about optimizing the framework's performance, resource usage, and response times to ensure a smooth and responsive user experience.

+- Usability means the framework provides user-friendly interfaces and clear documentation, making it easy to use and understand.

-- Modularity refers to the ability to integrate new components, models, and tools conveniently, using modules as the basic unit.

-- Scalability refers to the framework's capability to handle large amounts of data, complex tasks, and high concurrency.

-- Interoperability means that the framework is compatible with various external systems, tools, and services, allowing seamless integration and communication.

-- Robustness refers to the framework's ability to handle errors, faults, and recovery mechanisms to ensure reliable operation under different conditions.

-- Security involves strict security measures to protect the framework, its data, and users from unauthorized access and malicious behavior.

-- Efficiency focuses on optimizing the framework's performance, resource utilization, and response time to ensure a smooth and responsive user experience.

-- Usability involves providing a user-friendly interface and clear documentation to make the framework easy to use and understand.

+By following these principles and incorporating the latest advances in artificial intelligence, `pne` aims to provide a powerful and flexible framework for creating automated agents.

-By following these principles and incorporating the latest advancements in artificial intelligence technology, `promptulate` aims to provide a powerful and flexible application development framework for creating automated agents.

+## 💌 Contact

-# Contributions

+For more information, please contact: [zeeland4work@gmail.com](mailto:zeeland4work@gmail.com)

-I am currently exploring more comprehensive abstraction patterns to improve compatibility with the framework and the extended use of external tools. If you have any suggestions, I welcome discussions and exchanges.

+## ⭐ Contribution

-If you would like to contribute to this project, please refer to the [current development plan](https://undertone0809.github.io/promptulate/#/other/plan) and [contribution/developer's guide](https://undertone0809.github.io/promptulate/#/other/contribution). I'm excited to see more people getting involved and optimizing it.

+We appreciate your interest in contributing to our open-source initiative. We have provided a [Developer's Guide](https://undertone0809.github.io/promptulate/#/other/contribution) outlining the steps to contribute to Promptulate. Please refer to this guide to ensure smooth collaboration and successful contributions. Additionally, you can view the [Current Development Plan](https://undertone0809.github.io/promptulate/#/other/plan) to see the latest development progress 🤝🚀

diff --git a/README_zh.md b/README_zh.md

index d858e684..b0431b11 100644

--- a/README_zh.md

+++ b/README_zh.md

@@ -21,31 +21,69 @@


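-`Promptulate AI` focuses on building a developer platform for large language model applications and AI Agents, dedicated to providing developers and businesses with the ability to build, extend, and evaluate LLM applications. `Promptulate` is the LLM automation and application development framework under `Promptulate AI`, designed to help developers build industry-grade LLM applications at a lower cost. It includes most of the common components for application-layer development in the LLM field, such as external tool components, model components, Agents, external data source integration modules, data storage modules, and lifecycle modules. With `Promptulate`, you can easily build your own LLM applications in a Pythonic way.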

-

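-Furthermore, to provide a powerful and flexible LLM application development and AI Agent building platform for creating autonomous agents that can automate a wide range of tasks and applications, `Promptulate` implements an automated AI platform through six components: Core AI Engine, Agent System, Tools Provider, Multimodal Processing, Knowledge Base, and Task-specific Modules. The Core AI Engine is the core component of the framework, responsible for processing and understanding various inputs, generating outputs, and making decisions. The Agent System is the module that provides high-level guidance and control over AI agent behavior. The APIs and Tools Provider offers APIs and integration libraries for interacting with tools and services. Multimodal Processing is a set of modules for processing and understanding different data types (such as text, images, audio, and video), using deep learning models to extract meaningful information from different data modalities. The Knowledge Base is a large structured knowledge base that stores and organizes information about the world, enabling AI agents to access and reason over a large body of knowledge. Task-specific Modules are a set of modules designed to perform particular tasks, such as sentiment analysis, machine translation, or object detection. By combining these components, the framework provides a comprehensive, flexible, and powerful platform for automating a wide variety of complex tasks and applications.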

-

-## Features

-

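-- LLM support: extensible interfaces for different types of large language models

-- Chat terminal: a simple chat terminal for talking to an LLM directly

-- AgentGroup: different Agents such as WebAgent, ToolAgent, and CodeAgent for handling complex tasks

-- Long conversation mode: supports long conversations and multiple ways of persisting them

-- External tools: integrates external tools for capabilities such as web search and executing Python code

-- KEY pool: provides an API key pool to eliminate key rate-limiting problems

-- Intelligent agents: integrates prompt frameworks such as ReAct and self-ask, empowering LLMs with external tools

-- Chinese optimization: specially optimized for Chinese contexts and better suited to Chinese scenarios

-- Data export: supports exporting conversations to formats such as Markdown

-- Hooks and lifecycle: provides lifecycle and Hook systems for Agents, Tools, and LLMs

-- Advanced abstractions: supports plugin, storage, and LLM extensions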

-

-## Quick Start

+## Overview

+

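+**Promptulate** is an AI Agent application development framework crafted by **Cogit Lab**. Through a Pythonic development paradigm, it aims to give developers an extremely concise and efficient way to build Agent applications. 🛠️ The core philosophy of Promptulate is to borrow and integrate the wisdom of the open-source community, incorporating the highlights of various development frameworks to lower the barrier to entry and unify consensus among developers. With Promptulate, you can manipulate components such as LLMs, Agents, Tools, and RAG with minimal code; most tasks take only a few lines. 🚀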

+

+## 💡 Features

+

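+- 🐍 Pythonic Code Style: follows the habits of Python developers and provides a Pythonic SDK; a single `pne.chat` function encapsulates all essential functionality.

+- 🧠 Model Compatibility: supports nearly all types of large models on the market and makes it easy to customize models for specific needs.

+- 🕵️♂️ Diverse Agents: offers various types of Agents, such as WebAgent, ToolAgent, and CodeAgent, capable of planning, reasoning, and acting to handle complex problems.

+- 🔗 Low-cost Integration: easily integrates tools from different frameworks such as LangChain, greatly reducing integration cost.

+- 🔨 Functions as Tools: converts any Python function directly into a tool an Agent can use, simplifying tool creation and use.

+- 🪝 Lifecycle and Hooks: provides rich Hooks and complete lifecycle management, allowing custom code to run at every stage of Agents, Tools, and LLMs.

+- 💻 Terminal Integration: easily integrates an application terminal, with built-in client support for quickly debugging prompts.

+- ⏱️ Prompt Caching: provides an LLM prompt caching mechanism that reduces repeated work and improves development efficiency.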

+
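The "Functions as Tools" idea above can be sketched in plain Python. The snippet below is a minimal, hypothetical illustration, not pne's actual implementation: it uses the standard `inspect` module to turn an ordinary function's name, docstring, and parameters into a tool description an agent could present to an LLM. The `function_to_tool` helper, the description format, and the `get_weather` example are all assumptions.

```python
import inspect
from typing import Any, Callable, Dict


def function_to_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a tool description from a plain Python function (hypothetical helper)."""
    sig = inspect.signature(func)
    params: Dict[str, Dict[str, Any]] = {}
    for name, param in sig.parameters.items():
        # Record the annotated type name, or "any" when there is no annotation.
        if param.annotation is inspect.Parameter.empty:
            type_name = "any"
        else:
            type_name = getattr(param.annotation, "__name__", str(param.annotation))
        params[name] = {
            "type": type_name,
            # A parameter without a default value must be supplied by the agent.
            "required": param.default is inspect.Parameter.empty,
        }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": params,
        "callable": func,  # kept so an agent could invoke the tool later
    }


def get_weather(city: str, unit: str = "celsius") -> str:
    """Return a fake weather report for a city."""
    return f"It is 23 degrees {unit} in {city}."


tool = function_to_tool(get_weather)
print(tool["name"], tool["parameters"])
print(tool["callable"](city="Shanghai"))
```

The docstring doubles as the tool description, which is why well-documented functions make better tools in this style of design.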

+> Below, pne stands for promptulate. pne is Promptulate's nickname: p and e are the first and last letters of promptulate, and n stands for nine, the number of letters between p and e.
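The naming rule in the note is a numeronym, the same scheme that shortens "internationalization" to "i18n". The tiny sketch below derives it; the `numeronym` helper is illustrative only and not part of pne.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + count of middle letters + last letter."""
    return f"{word[0]}{len(word) - 2}{word[-1]}"


short = numeronym("promptulate")  # 11 letters, so 9 in the middle
print(short)
# pne spells the 9 as "n" (for "nine").
print(short.replace("9", "n"))
```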

+

+## Supported Base Models

+

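+pne integrates the capabilities of [litellm](https://github.com/BerriAI/litellm) and supports nearly all types of large models on the market, including but not limited to the following: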

+

+| Provider | [Completion](https://docs.litellm.ai/docs/#basic-usage) | [Streaming](https://docs.litellm.ai/docs/completion/stream#streaming-responses) | [Async Completion](https://docs.litellm.ai/docs/completion/stream#async-completion) | [Async Streaming](https://docs.litellm.ai/docs/completion/stream#async-streaming) | [Async Embedding](https://docs.litellm.ai/docs/embedding/supported_embedding) | [Async Image Generation](https://docs.litellm.ai/docs/image_generation) |

+| ------------- | ------------- | ------------- | ------------- | ------------- | ------------- | ------------- |

+| [openai](https://docs.litellm.ai/docs/providers/openai) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [azure](https://docs.litellm.ai/docs/providers/azure) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [aws - sagemaker](https://docs.litellm.ai/docs/providers/aws_sagemaker) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [aws - bedrock](https://docs.litellm.ai/docs/providers/bedrock) | ✅ | ✅ | ✅ | ✅ |✅ |

+| [google - vertex_ai [Gemini]](https://docs.litellm.ai/docs/providers/vertex) | ✅ | ✅ | ✅ | ✅ |

+| [google - palm](https://docs.litellm.ai/docs/providers/palm) | ✅ | ✅ | ✅ | ✅ |

+| [google AI Studio - gemini](https://docs.litellm.ai/docs/providers/gemini) | ✅ | | ✅ | | |

+| [mistral ai api](https://docs.litellm.ai/docs/providers/mistral) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [cloudflare AI Workers](https://docs.litellm.ai/docs/providers/cloudflare_workers) | ✅ | ✅ | ✅ | ✅ |

+| [cohere](https://docs.litellm.ai/docs/providers/cohere) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [anthropic](https://docs.litellm.ai/docs/providers/anthropic) | ✅ | ✅ | ✅ | ✅ |

+| [huggingface](https://docs.litellm.ai/docs/providers/huggingface) | ✅ | ✅ | ✅ | ✅ | ✅ |

+| [replicate](https://docs.litellm.ai/docs/providers/replicate) | ✅ | ✅ | ✅ | ✅ |

+| [together_ai](https://docs.litellm.ai/docs/providers/togetherai) | ✅ | ✅ | ✅ | ✅ |

+| [openrouter](https://docs.litellm.ai/docs/providers/openrouter) | ✅ | ✅ | ✅ | ✅ |

+| [ai21](https://docs.litellm.ai/docs/providers/ai21) | ✅ | ✅ | ✅ | ✅ |

+| [baseten](https://docs.litellm.ai/docs/providers/baseten) | ✅ | ✅ | ✅ | ✅ |

+| [vllm](https://docs.litellm.ai/docs/providers/vllm) | ✅ | ✅ | ✅ | ✅ |

+| [nlp_cloud](https://docs.litellm.ai/docs/providers/nlp_cloud) | ✅ | ✅ | ✅ | ✅ |

+| [aleph alpha](https://docs.litellm.ai/docs/providers/aleph_alpha) | ✅ | ✅ | ✅ | ✅ |

+| [petals](https://docs.litellm.ai/docs/providers/petals) | ✅ | ✅ | ✅ | ✅ |

+| [ollama](https://docs.litellm.ai/docs/providers/ollama) | ✅ | ✅ | ✅ | ✅ |

+| [deepinfra](https://docs.litellm.ai/docs/providers/deepinfra) | ✅ | ✅ | ✅ | ✅ |

+| [perplexity-ai](https://docs.litellm.ai/docs/providers/perplexity) | ✅ | ✅ | ✅ | ✅ |

+| [Groq AI](https://docs.litellm.ai/docs/providers/groq) | ✅ | ✅ | ✅ | ✅ |

+| [anyscale](https://docs.litellm.ai/docs/providers/anyscale) | ✅ | ✅ | ✅ | ✅ |

+| [voyage ai](https://docs.litellm.ai/docs/providers/voyage) | | | | | ✅ |

+| [xinference [Xorbits Inference]](https://docs.litellm.ai/docs/providers/xinference) | | | | | ✅ |

+

+For details, see the [litellm documentation](https://docs.litellm.ai/docs/providers).

+

+You can call any third-party model as easily as this:

+

+```python

+import promptulate as pne

+

+resp: str = pne.chat(model="ollama/llama2", messages=[{"role": "user", "content": "Hello, how are you?"}])

+```
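The `model` string follows litellm's `provider/model` convention: the prefix before the first slash (here `ollama`) selects the provider, and the rest names the model. The sketch below only illustrates that split; the `split_model_name` helper and its fallback to `"openai"` for bare names are assumptions, not litellm's actual routing logic.

```python
from typing import Tuple


def split_model_name(model: str, default_provider: str = "openai") -> Tuple[str, str]:
    """Split "provider/model" into its parts (illustrative only)."""
    if "/" in model:
        # Only the first slash separates the provider; the rest is the model id.
        provider, name = model.split("/", 1)
        return provider, name
    # Hypothetical fallback for bare names such as "gpt-4".
    return default_provider, model


print(split_model_name("ollama/llama2"))
print(split_model_name("openrouter/meta-llama/llama-2-70b-chat"))
print(split_model_name("gpt-4"))
```

Splitting on the first slash only matters for providers such as openrouter, whose model ids contain slashes of their own.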

+

+## 📗 Related Documentation

- [Quick Start / Official Documentation](https://undertone0809.github.io/promptulate/#/)

- [Current Development Plan](https://undertone0809.github.io/promptulate/#/other/plan)

@@ -53,81 +91,115 @@ Modules is a set of modules designed to perform particular tasks, such as sentiment

- [FAQ](https://undertone0809.github.io/promptulate/#/other/faq)

- [PyPI repository](https://pypi.org/project/promptulate/)

+## 🛠 Quick Start

+

- Open a terminal and run the following command to install the framework:

```shell script

pip install -U promptulate

```

-> Your Python version should be 3.8 or higher.

+> Note: Your Python version should be 3.8 or higher.

-- Start your "HelloWorld" with the simple program below.

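+Structured output is an important foundation for robust LLM applications, because we want the LLM to return stable, well-formed data. With pne you can easily get structured output: in the example below, we use a pydantic `BaseModel` to define the data structure the LLM must return.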

```python

-import os

+from typing import List

import promptulate as pne

+from pydantic import BaseModel, Field

-os.environ['OPENAI_API_KEY'] = "your-key"

-

-agent = pne.WebAgent()

-answer = agent.run("What is the temperature tomorrow in Shanghai")

-print(answer)

-```

+class LLMResponse(BaseModel):

+    provinces: List[str] = Field(description="List of province names")

-```

-The temperature tomorrow in Shanghai is expected to be 23°C.

+resp: LLMResponse = pne.chat("Please tell me all provinces in China.", output_schema=LLMResponse)

+print(resp)

```

-> Most of the time we refer to promptulate as pne, where p and e are the first and last letters of promptulate, and n stands for 9, the number of letters between p and e.

+**Output:**

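-To integrate various external tools, including web search and calculators, into your Python application, you can use promptulate together with langchain. langchain lets you build a ToolAgent with a set of tools, such as an image generator based on OpenAI's DALL-E model.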

+```text

+provinces=['Anhui', 'Fujian', 'Gansu', 'Guangdong', 'Guizhou', 'Hainan', 'Hebei', 'Heilongjiang', 'Henan', 'Hubei', 'Hunan', 'Jiangsu', 'Jiangxi', 'Jilin', 'Liaoning', 'Qinghai', 'Shaanxi', 'Shandong', 'Shanxi', 'Sichuan', 'Yunnan', 'Zhejiang', 'Taiwan', 'Guangxi', 'Nei Mongol', 'Ningxia', 'Xinjiang', 'Xizang', 'Beijing', 'Chongqing', 'Shanghai', 'Tianjin', 'Hong Kong', 'Macao']

+```
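Under the hood, structured output comes down to asking the model for JSON that matches a schema and then validating the reply. The example above uses pydantic; the stdlib-only sketch below swaps in a `dataclass` plus `json` to show the validation step. The `parse_llm_response` helper and the hard-coded `raw_reply` (standing in for a real LLM response) are hypothetical, and this is not how pne implements `output_schema`.

```python
import json
from dataclasses import dataclass, fields
from typing import List


@dataclass
class LLMResponse:
    provinces: List[str]


def parse_llm_response(raw: str) -> LLMResponse:
    """Validate a JSON reply against LLMResponse (hypothetical helper)."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    # Every dataclass field must be present in the reply.
    missing = {f.name for f in fields(LLMResponse)} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    provinces = data["provinces"]
    if not isinstance(provinces, list) or not all(isinstance(p, str) for p in provinces):
        raise ValueError("provinces must be a list of strings")
    return LLMResponse(provinces=provinces)


# Stand-in for the text an LLM might return when asked for JSON.
raw_reply = '{"provinces": ["Anhui", "Fujian", "Gansu"]}'
resp = parse_llm_response(raw_reply)
print(resp)
```

Rejecting malformed replies with an exception gives the caller a clear point to retry the LLM call, which is where most of the robustness of structured output comes from.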

-Below is an example of using promptulate and langchain to create an image from a text description:

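+With pne, you can easily integrate tools of many kinds from different frameworks (such as LangChain and llama-index), for example web search and calculators. In the example below, we use LangChain's DuckDuckGo search tool to get tomorrow's weather in Shanghai.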

```python

+import os

import promptulate as pne

from langchain.agents import load_tools

-tools: list = load_tools(["dalle-image-generator"])

-agent = pne.ToolAgent(tools=tools)

-output = agent.run("Create an image of a haunted museum on Halloween night")

+os.environ["OPENAI_API_KEY"] = "your-key"

+

+tools: list = load_tools(["ddg-search", "arxiv"])

+resp: str = pne.chat(model="gpt-4-1106-preview", messages=[{"role": "user", "content": "What is the temperature tomorrow in Shanghai?"}], tools=tools)

```

-output:

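+In this example, pne internally uses [ReAct](https://arxiv.org/abs/2210.03629), an approach that interleaves reasoning with acting, and wraps it as a ToolAgent. The ToolAgent has strong reasoning and tool-calling abilities: it chooses the appropriate tool to call and uses the results to produce a more accurate answer.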

+

+**Output:**

```text

-Here is the generated image: []

+The temperature tomorrow in Shanghai is expected to be 23°C.

```
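The ReAct pattern described above alternates between a reasoning step, a tool call, and an observation until the model produces a final answer. The loop below is a minimal sketch of that control flow, not pne's ToolAgent: the scripted `fake_llm`, the `Action: tool[input]` text format, and the `search` tool returning a canned forecast are hypothetical stand-ins for a real model and real tools.

```python
import re
from typing import Callable, Dict, List


def search(query: str) -> str:
    """Hypothetical tool: returns a canned observation instead of searching the web."""
    return "Forecast for Shanghai tomorrow: 23°C, cloudy."


TOOLS: Dict[str, Callable[[str], str]] = {"search": search}

# Scripted replies standing in for an LLM; a real agent would call a model.
SCRIPT: List[str] = [
    "Thought: I need a weather forecast.\nAction: search[Shanghai weather tomorrow]",
    "Thought: The observation answers the question.\n"
    "Final Answer: The temperature tomorrow in Shanghai is expected to be 23°C.",
]


def fake_llm(prompt: str, step: int) -> str:
    # Ignores the prompt and replays the script; a real LLM would read it.
    return SCRIPT[step]


ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str, max_steps: int = 5) -> str:
    prompt = f"Question: {question}\n"
    for step in range(max_steps):
        reply = fake_llm(prompt, step)
        prompt += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"unparseable reply: {reply!r}")
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)
        # Feed the tool result back so the next reasoning step can use it.
        prompt += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


print(react("What is the temperature tomorrow in Shanghai?"))
```

The `max_steps` cap keeps a confused model from looping forever, a common safeguard in agent loops of this kind.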

-

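+In addition, inspired by the [Plan-and-Solve](https://arxiv.org/abs/2305.04091) paper, pne lets developers build Agents that can plan, reason, and act to handle complex problems. Set the `enable_plan` parameter to turn on an Agent's planning capability.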

-For more details, see the [Quick Start / Official Documentation](https://undertone0809.github.io/promptulate/#/)

+

+
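Plan-and-Solve splits the work into two phases: first devise a plan of sub-steps, then carry out each step, feeding earlier results into later ones. The sketch below shows only that plan-then-execute control flow and is not how `enable_plan` works internally; `make_plan` and `execute_step` are hypothetical stand-ins with hard-coded outputs for what an LLM and a search tool would produce.

```python
from typing import Callable, List


def make_plan(question: str) -> List[str]:
    """Hypothetical planner: a real agent would ask the LLM to write this plan."""
    return [
        "Find who won the tournament",
        "Find that person's hometown",
    ]


def execute_step(step: str, context: List[str]) -> str:
    """Hypothetical executor: a real agent would call the LLM and its tools here."""
    return f"result of '{step}' (using {len(context)} earlier result(s))"


def plan_and_solve(
    question: str,
    planner: Callable[[str], List[str]] = make_plan,
    executor: Callable[[str, List[str]], str] = execute_step,
) -> List[str]:
    plan = planner(question)
    results: List[str] = []
    for step in plan:
        # Each step sees the results of all the steps before it.
        results.append(executor(step, results))
    return results


for line in plan_and_solve("What is the hometown of the 2024 Australian Open winner?"):
    print(line)
```

Passing the planner and executor in as parameters keeps the control flow testable without a model, and makes it easy to swap in real LLM-backed implementations.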

+In the example below, we use [Tavily](https://app.tavily.com/) as the search engine; it is a powerful search engine that retrieves information from the web. To use Tavily, you need an API key from Tavily.

+

+```python

+import os

+

+os.environ["TAVILY_API_KEY"] = "your_tavily_api_key"

+os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

+```

+

+In this example, we use the TavilySearchResults tool provided by LangChain.

+

+```python

+from langchain_community.tools.tavily_search import TavilySearchResults

-## Architecture

+tools = [TavilySearchResults(max_results=5)]

+```

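-`promptulate` is currently under rapid development, and much remains to be refined and discussed; discussion and participation are very welcome. As an LLM automation and application development framework, it mainly consists of the following parts: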

+```python

+import promptulate as pne

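-- LLM support: extensible interfaces for different types of large language models

-- AI Agent: provides different Agents such as WebAgent, ToolAgent, and CodeAgent, plus support for custom Agents, for handling complex tasks

-- Chat terminal: a simple chat terminal for talking to an LLM directly

-- Role presets: provides preset roles for calling the LLM from different perspectives

-- Long conversation mode: supports long conversations and multiple ways of persisting them

-- External tools: integrates external tools for capabilities such as web search and executing Python code

-- KEY pool: provides an API key pool to eliminate key rate-limiting problems

-- Intelligent agents: integrates advanced Agents such as ReAct and self-ask, empowering LLMs with external tools

-- Chinese optimization: specially optimized for Chinese contexts and better suited to Chinese scenarios

-- Data export: supports exporting conversations to formats such as Markdown

-- Advanced abstractions: supports plugin, storage, and LLM extensions

-- Structured output: natively supports structured LLM output, greatly improving task handling and robustness in complex scenarios

-- Hooks and lifecycle: provides lifecycle and Hook systems for Agents, Tools, and LLMs

-- IoT capabilities: the framework provides a variety of tools for IoT application development, making it easy for IoT developers to use LLM capabilities.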

+pne.chat("What is the hometown of the 2024 Australian Open winner?", model="gpt-4-1106-preview", enable_plan=True)

+```

+**Output:**
