diff --git a/learning_resources/README.md b/learning_resources/README.md

new file mode 100644

index 000000000..03b74fa66

--- /dev/null

+++ b/learning_resources/README.md

@@ -0,0 +1,3 @@

+# Resources for Adversarial Machine Learning

+>

+This folder contains resources to learn about Adversarial Machine Learning, different attacks, defenses and other concepts.

diff --git a/learning_resources/fast_gradient_method.ipynb b/learning_resources/fast_gradient_method.ipynb

new file mode 100644

index 000000000..89f85570f

--- /dev/null

+++ b/learning_resources/fast_gradient_method.ipynb

@@ -0,0 +1,629 @@

+{

+ "nbformat": 4,

+ "nbformat_minor": 0,

+ "metadata": {

+ "colab": {

+ "name": "FGSM Attack & Adversarial Training.ipynb",

+ "version": "0.3.2",

+ "provenance": [],

+ "collapsed_sections": [

+ "Vs9wD38he0cM",

+ "b4kgPQqp-hwR",

+ "QpC4KgTS_Fj8",

+ "3NkfwLkjtNNy",

+ "qc_hLUbLAN55",

+ "ZuO0xjqKKMmt",

+ "cSVL3kesCBAd",

+ "9SGiWcOE7B4X",

+ "ulbHU1bLNh7c",

+ "whIrvHnzUSAu",

+ "vgPdPVUdbbJu",

+ "AWyWu49L4LRy",

+ "zta5bsYB88yO",

+ "9_EDE61bMzgW"

+ ],

+ "include_colab_link": true

+ },

+ "kernelspec": {

+ "name": "python3",

+ "display_name": "Python 3"

+ }

+ },

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "view-in-github",

+ "colab_type": "text"

+ },

+ "source": [

+ " "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RHwgLwN4nXPi",

+ "colab_type": "text"

+ },

+ "source": [

+ "# Fast Gradient Sign Method (FGSM) Attack \n",

+ "\n",

+ "#### This guide explores the FGSM attack which was first described by I. Goodfellow et al.* and is implemented with Tensorflow 2.0. \n",

+ ">\n",

+ "*[Explaining and Harnessing Adversarial Examples](https://arxiv.org/pdf/1412.6572.pdf)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Vs9wD38he0cM",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Import dependent libraries\n",

+ "Tensorflow 2.0 required\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "eBsLelavnv0u",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "!pip install -q tensorflow==2.0.0b1\n",

+ "\n",

+ "import tensorflow as tf\n",

+ "import numpy as np\n",

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "print(tf.__version__)\n",

+ "print(\"GPU Available: \", tf.test.is_gpu_available())"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "b4kgPQqp-hwR",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Training a simple model on the MNIST dataset\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "_z99mq1bKkjz",

+ "colab_type": "text"

+ },

+ "source": [

+ "> If you would like to experiment with other datasets feel free to replace this code by whatever you need to do so. Just remember that to make the rest of the notebook work without any other major changes keep the variables train_images, train_labels, test_images, test_labels and assign them to the corresponding new data. I recommend any of the datasets offered by keras as they use the same mechanics to import them.\n",

+ "\n",

+ ">Keep in mind that the MNIST dataset was used in this guide because of the little amount of time it takes to both train a good model and craft the attacks."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "uQVP1re__pbf",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "mnist = tf.keras.datasets.mnist\n",

+ "(train_images, train_labels), (test_images, test_labels) = mnist.load_data()\n",

+ "\n",

+ "train_images = train_images / 255.0\n",

+ "test_images = test_images / 255.0\n",

+ "\n",

+ "num_classes = 10\n",

+ "\n",

+ "model = tf.keras.Sequential([\n",

+ " tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

+ " tf.keras.layers.Dense(32, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(16, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(10, activation=tf.nn.softmax)\n",

+ "])\n",

+ "\n",

+ "model.compile(optimizer='adam',\n",

+ " loss= 'sparse_categorical_crossentropy',\n",

+ " metrics=['accuracy'])\n",

+ "\n",

+ "model.fit(train_images, train_labels, epochs=10, validation_split=0.2)\n",

+ "test_loss, test_acc = model.evaluate(test_images, test_labels)\n",

+ "\n",

+ "print('Test accuracy:', test_acc)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QpC4KgTS_Fj8",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Implementing the FGSM attack to craft an adversarial example\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "3NkfwLkjtNNy",

+ "colab_type": "text"

+ },

+ "source": [

+ "### What is a fast gradient sign method attack and what are adversarial examples?"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "iu66gSMTtYwO",

+ "colab_type": "text"

+ },

+ "source": [

+ "An FGSM attack is a way of exploiting a natural vulnerability in machine learning models and confuse them. It creates images that look like real, normal data but that are designed so that a model missclassifies them. This images are called adversarial examples. There are many different types of attacks that accomplish this same thing, crafting adversarial examples, but the fgsm attack provides the quickest way to so. It is also a white box attack which means that it assumes the attacker has access to the model's architecture, parameters and weights."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "qc_hLUbLAN55",

+ "colab_type": "text"

+ },

+ "source": [

+ "### We must start off by choosing the image we will craft into an adversarial example. \n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "pobla8qJOZf8",

+ "colab_type": "text"

+ },

+ "source": [

+ "To do this, we pick a random image from the training set and convert its corresponding label into its one-hot encoded version. [*Info on one-hot*](https://blog.cambridgespark.com/robust-one-hot-encoding-in-python-3e29bfcec77e)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "colab_type": "code",

+ "id": "wA7_JKrm1wsm",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(test_images.shape[0])\n",

+ "\n",

+ "original_image = train_images[random_index]\n",

+ "original_image = tf.convert_to_tensor(original_image.reshape((1,28,28))) #The .reshape just gives it the proper form to input into the model, a batch of 1 a.k.a a tensor\n",

+ "\n",

+ "original_label = tf.one_hot(train_labels[random_index], num_classes)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "sklj9G-JJPRY",

+ "colab_type": "text"

+ },

+ "source": [

+ "We can then look at the image and at it's label."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "k0ir_rYeJW6m",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(original_image, (28,28)))\n",

+ "plt.title(\"Label: {}\".format(np.argmax(original_label)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ZuO0xjqKKMmt",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Now, we create the perturbation we will use to perform the attack"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-Gbgb2dZ4KE-",

+ "colab_type": "text"

+ },

+ "source": [

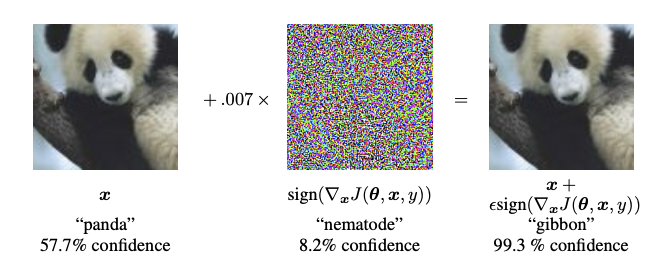

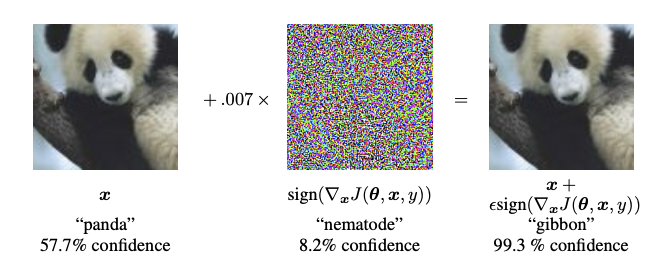

+ "The execution of this attack consists on creating a small perturbation that we can add to a benign, normal image so as to make a machine learning model missclassify it but have it still look unaffected to the human eye.\n",

+ ">\n",

+ "Here we can see exactly what this looks like:\n",

+ "\n",

+ "\n",

+ "\n",

+ "[Fig 1. Visualisation of fgsm](https://www.tensorflow.org/beta/tutorials/generative/images/adversarial_example.png)\n",

+ "\n",

+ ">\n",

+ "\n",

+ "The way we create this perturbation is by calculating the gradient of the cost or loss function used by the model with respect to the image. The loss function is one that returns a value that describes how different the classification of a given image is from what it should be classified as. This gives us an indication of how to change each pixel in order to affect the value of the loss function the most. We then take the sign of these gradients as we are only interested in wether to increase or decrease each pixel, not by how much.\n",

+ "\n",

+ "The formula for the perturbation is described by *I. Goodfellow et al* as:\n",

+ "\n",

+ "$$\\text{sign}(\\nabla_xJ(\\theta, x, y))$$\n",

+ "\n",

+ "or as (they are both equal):\n",

+ "\n",

+ "$$\\text{sign}(\\frac{\\partial J(\\theta, x, y)}{\\partial x})$$\n",

+ "\n",

+ "where:\n",

+ "* J : The loss function used by the model\n",

+ "* $\\theta$ : The model parameters\n",

+ "* $x$ : The input image\n",

+ "* $y$ : the original label\n",

+ "\n",

+ ">\n",

+ "This part of the tutorial was loosely adapted from [this guide](https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm) made by the Tensorflow team.\n",

+ ">\n",

+ "The following function defines the implementation of this perturbation:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "d4y5A8-L3gqh",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def create_perturbation(modelfn, input_image, original_label):\n",

+ " loss_object = tf.keras.losses.CategoricalCrossentropy()\n",

+ " \n",

+ " # Reference the docs for GradientTape in tf2.0 for more info\n",

+ " with tf.GradientTape() as gt:\n",

+ " # Define the calculation that needs to be derived\n",

+ " gt.watch(input_image)\n",

+ " prediction = modelfn(input_image)\n",

+ " loss = loss_object(original_label, prediction)\n",

+ " \n",

+ " # Get the gradients for the loss w.r.t image\n",

+ " grads = gt.gradient(loss, input_image)\n",

+ " perturbation = tf.sign(grads)\n",

+ "\n",

+ " return perturbation"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "KF8IBC-2k8Pf",

+ "colab_type": "text"

+ },

+ "source": [

+ "We can now create the respective perturbation for the image we chose earlier and see what it looks like and what the model classifies it as.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "2SnsIwWkSbZn",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "perturbation = create_perturbation(model, original_image, original_label)\n",

+ "perturbation_pred = model.predict(perturbation)\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(perturbation,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.argmax(perturbation_pred)))\n",

+ "plt.xlabel(\"Confidence of: {}\".format(np.max(perturbation_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "cSVL3kesCBAd",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Finally, we implement the attack\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "o2c1lU4w8SMC",

+ "colab_type": "text"

+ },

+ "source": [

+ "To implement the attack we add the perturbation to the image, which we scale down by a value epsilon ($\\epsilon$) to try to make it unperceivable. Although, depending on the model, the minimum for this value may differ.\n",

+ ">\n",

+ "By adding this perturbation to the image we change the pixels in the direction where the value of the loss function will increase or be maximised. An increase in the value of the loss function translates to the image being further away from being classified as the correct label.\n",

+ ">\n",

+ "The adversarial image or example is then the original image with the perturbation. It can be formalised as the following expression:\n",

+ ">\n",

+ "$$ \\text{adv_example} = x + \\epsilon * \\text{sign}(\\nabla_xJ(\\theta, x, y)) $$\n",

+ ">\n",

+ "This next function implements the fgsm attack and creates the adversarial example:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "HpiGG_U0pkCP",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def fgsm(modelfn, input_image, original_label, epsilon):\n",

+ " perturbation = create_perturbation(modelfn, input_image, original_label)\n",

+ "\n",

+ " adv_example = input_image + (perturbation * epsilon)\n",

+ "\n",

+ " return adv_example"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "9SGiWcOE7B4X",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Now, let's create the Adversarial Image\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "6mnZFQKK_8mt",

+ "colab_type": "text"

+ },

+ "source": [

+ "To do this we'll pass the original image we chose and it's correct label to the function we just defined to create its adversarial image. Then let's ask the model to predict what it thinks the image is, it should hopefully be different than what it looks like."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "-iH1hlEvy13G",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "\n",

+ "adv_image = fgsm(model, original_image, original_label, epsilon)\n",

+ "adv_image_pred = model.predict(adv_image)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ukkD4c7jAeOP",

+ "colab_type": "text"

+ },

+ "source": [

+ "Let's se what this looks like:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "hTnu62E769Ut",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.subplot(1,3,1)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Original Image\")\n",

+ "plt.imshow(np.reshape(original_image,(28,28)))\n",

+ "plt.xlabel(\"Label: {}\".format(np.argmax(original_label)))\n",

+ "\n",

+ "plt.subplot(1,3,2)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Perturbation\")\n",

+ "plt.imshow(np.reshape(perturbation,(28,28)))\n",

+ "\n",

+ "\n",

+ "plt.subplot(1,3,3)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Adversarial Image\")\n",

+ "plt.imshow(np.reshape(adv_image,(28,28)))\n",

+ "plt.xlabel(\"Classification: {} - Confidence: {}\".format(np.argmax(adv_image_pred),np.max(adv_image_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "HbGZQsIK8Zaf",

+ "colab_type": "text"

+ },

+ "source": [

+ "> I encourage you to play around with changing the value for epsilon and try to find the limits for when an image is still classified correctly to when it gets missclassified. \n",

+ "\n",

+ "Keep in mind that, as these are 28 * 28 images with pixel values ranging from 0 to 255, perturbations are easily seen and the perturbation required to missclassify the image might be visible. Something else to note is that some images may require bigger perturbations to be missclassified.\n",

+ "\n",

+ "However, when this attack is applied identically to RGB images the perturbations are much more easily hidden and the attack is still effective. An example of this can be seen in [this guide](https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm) provided by the Tensorflow team. I also encourage you to try to replicate this code with more complex datasets like CIFAR-10 or even ImageNet. You might also easily do this by just changing the code that trains the model and loads the data to something else keep the rest of the code the same."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ulbHU1bLNh7c",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Extra // Targeted FGSM: A version of fgsm that can missclassify to a chosen desired label"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "pFZA-IgUNtmK",

+ "colab_type": "text"

+ },

+ "source": [

+ "What if we deliberately wanted to craft an image that was classified as something but that looked like something else? By changing a little of the logic behind the fgsm attack this exact thing can be achieved. \n",

+ ">\n",

+ "By instead of looking for what pixels to change to make the image be missclassified the most, let's look at what pixels to change to make the model be more confident about it being a certain class. \n",

+ "\n",

+ "Technically, what we do is, again calculate the gradients of the loss with respect to the image but now the difference is that the loss is calculated with the target label like so.\n",

+ ">\n",

+ "$$\\text{sign}(\\nabla_xJ(\\theta, x, y_{target}))$$\n",

+ ">\n",

+ "In this case, this creates a perturbation that tells us how to change each pixel to change how close or how away the image is from being the target label. \n",

+ ">\n",

+ "Therefore, we create our adversarial image by substracting the perturbation from the original image to try to decrease or minimize the value of the loss function, which means make the image be closer to being the target label. Formally the attack now looks like this:\n",

+ ">\n",

+ "$$ \\text{targeted_adv_example} = x - \\epsilon * \\text{sign}(\\nabla_xJ(\\theta, x, y_{target})) $$\n",

+ ">\n",

+ "So, let's start by defining the function to create the targeted version of the attack."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "ZzUPdVggVu3y",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def targeted_fgsm(modelfn, input_image, target_label, epsilon):\n",

+ " perturbation = create_perturbation(modelfn, input_image, target_label)\n",

+ "\n",

+ " adv_example = input_image - (perturbation * epsilon)\n",

+ "\n",

+ " return adv_example"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "1DsdfU81dQ8o",

+ "colab_type": "text"

+ },

+ "source": [

+ "Great! Now we'll choose a random image to then craft into an adversarial example that will be classified as whatever we want."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "xGxiuy8SU_rX",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(train_images.shape[0]) \n",

+ "\n",

+ "untargeted_image = tf.convert_to_tensor(train_images[random_index].reshape((1,28,28)))\n",

+ "untargeted_image_label = train_labels[random_index]\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(untargeted_image,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.max(untargeted_image_label)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "xDqYF_XEYKQU",

+ "colab_type": "text"

+ },

+ "source": [

+ "Effectively applying this targeted attack require a little more tinkering than the un-targeted version. Some images might be easier to craft into certain labels than into others and some might need a bigger epsilon than others. But, in general, this attack requires smaller epsilons to shift the images to be classified as the target label. This means that, in pracice, this type of attack is more effective as it not only allows you to manipulate the output of the model but also do it with adversarial images where their perturbation is more difficult to spot.\n",

+ ">\n",

+ "So, play around by changing the target label for the same image and tweak the value for epsilon and see what it's limits are. You might also want to rerun the previous cell to try different images.\n",

+ "\n",

+ "If you then try to choose epsilons sufficently large it might go over the target label and classify it as something different, so, also try decreasing the value of epsilon."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "4T7Wqy9vTwHC",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "target = 3 # Change to something different than original and experiment changing it for the same image\n",

+ "epsilon = 0.05 # Change\n",

+ "\n",

+ "target_label = tf.one_hot(target, num_classes) # This gives us the desired output of the model given our target, a one hot encoding for it\n",

+ "\n",

+ "targeted_image = targeted_fgsm(model, untargeted_image, target_label, epsilon)\n",

+ "\n",

+ "targeted_pred = model.predict(targeted_image)\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(targeted_image,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.argmax(targeted_pred)))\n",

+ "plt.xlabel(\"Confidence of: {}\".format(np.max(targeted_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "9_EDE61bMzgW",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Where to find more information:\n",

+ "If you would like to learn more about and implement different types of attacks and defenses that have been developed against them or an integrated way to do adversarial training check out the Adversarial Machine Learning library [CleverHans](https://github.com/tensorflow/cleverhans) mantained by I. Goodfellow, the author of the paper proposing the fgsm attack, and N. Papernot. It offers an easy way to implement these attacks and research on the extent of their applications without having to write the type code described in this notebook yourself."

+ ]

+ }

+ ]

+}

\ No newline at end of file

diff --git a/tutorials/future/tf2/notebook_tutorials/README.md b/tutorials/future/tf2/notebook_tutorials/README.md

new file mode 100644

index 000000000..ffab34a92

--- /dev/null

+++ b/tutorials/future/tf2/notebook_tutorials/README.md

@@ -0,0 +1,3 @@

+# Notebook tutorials for Cleverhans

+>

+These Jupyter/Colab notebooks provide tutorials for how to use the cleverhans library.

\ No newline at end of file

diff --git a/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb b/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb

new file mode 100644

index 000000000..015ff6517

--- /dev/null

+++ b/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb

@@ -0,0 +1,321 @@

+{

+ "nbformat": 4,

+ "nbformat_minor": 0,

+ "metadata": {

+ "colab": {

+ "name": "Cleverhans FGSM attack.ipynb",

+ "version": "0.3.2",

+ "provenance": [],

+ "collapsed_sections": [],

+ "include_colab_link": true

+ },

+ "kernelspec": {

+ "name": "python3",

+ "display_name": "Python 3"

+ }

+ },

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "view-in-github",

+ "colab_type": "text"

+ },

+ "source": [

+ "

"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RHwgLwN4nXPi",

+ "colab_type": "text"

+ },

+ "source": [

+ "# Fast Gradient Sign Method (FGSM) Attack \n",

+ "\n",

+ "#### This guide explores the FGSM attack which was first described by I. Goodfellow et al.* and is implemented with Tensorflow 2.0. \n",

+ ">\n",

+ "*[Explaining and Harnessing Adversarial Examples](https://arxiv.org/pdf/1412.6572.pdf)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Vs9wD38he0cM",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Import dependent libraries\n",

+ "Tensorflow 2.0 required\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "eBsLelavnv0u",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "!pip install -q tensorflow==2.0.0b1\n",

+ "\n",

+ "import tensorflow as tf\n",

+ "import numpy as np\n",

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "print(tf.__version__)\n",

+ "print(\"GPU Available: \", tf.test.is_gpu_available())"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "b4kgPQqp-hwR",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Training a simple model on the MNIST dataset\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "_z99mq1bKkjz",

+ "colab_type": "text"

+ },

+ "source": [

+ "> If you would like to experiment with other datasets feel free to replace this code by whatever you need to do so. Just remember that to make the rest of the notebook work without any other major changes keep the variables train_images, train_labels, test_images, test_labels and assign them to the corresponding new data. I recommend any of the datasets offered by keras as they use the same mechanics to import them.\n",

+ "\n",

+ ">Keep in mind that the MNIST dataset was used in this guide because of the little amount of time it takes to both train a good model and craft the attacks."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "uQVP1re__pbf",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "mnist = tf.keras.datasets.mnist\n",

+ "(train_images, train_labels), (test_images, test_labels) = mnist.load_data()\n",

+ "\n",

+ "train_images = train_images / 255.0\n",

+ "test_images = test_images / 255.0\n",

+ "\n",

+ "num_classes = 10\n",

+ "\n",

+ "model = tf.keras.Sequential([\n",

+ " tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

+ " tf.keras.layers.Dense(32, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(16, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(10, activation=tf.nn.softmax)\n",

+ "])\n",

+ "\n",

+ "model.compile(optimizer='adam',\n",

+ " loss= 'sparse_categorical_crossentropy',\n",

+ " metrics=['accuracy'])\n",

+ "\n",

+ "model.fit(train_images, train_labels, epochs=10, validation_split=0.2)\n",

+ "test_loss, test_acc = model.evaluate(test_images, test_labels)\n",

+ "\n",

+ "print('Test accuracy:', test_acc)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QpC4KgTS_Fj8",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Implementing the FGSM attack to craft an adversarial example\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "3NkfwLkjtNNy",

+ "colab_type": "text"

+ },

+ "source": [

+ "### What is a fast gradient sign method attack and what are adversarial examples?"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "iu66gSMTtYwO",

+ "colab_type": "text"

+ },

+ "source": [

+ "An FGSM attack is a way of exploiting a natural vulnerability in machine learning models and confuse them. It creates images that look like real, normal data but that are designed so that a model missclassifies them. This images are called adversarial examples. There are many different types of attacks that accomplish this same thing, crafting adversarial examples, but the fgsm attack provides the quickest way to so. It is also a white box attack which means that it assumes the attacker has access to the model's architecture, parameters and weights."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "qc_hLUbLAN55",

+ "colab_type": "text"

+ },

+ "source": [

+ "### We must start off by choosing the image we will craft into an adversarial example. \n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "pobla8qJOZf8",

+ "colab_type": "text"

+ },

+ "source": [

+ "To do this, we pick a random image from the training set and convert its corresponding label into its one-hot encoded version. [*Info on one-hot*](https://blog.cambridgespark.com/robust-one-hot-encoding-in-python-3e29bfcec77e)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "colab_type": "code",

+ "id": "wA7_JKrm1wsm",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(test_images.shape[0])\n",

+ "\n",

+ "original_image = train_images[random_index]\n",

+ "original_image = tf.convert_to_tensor(original_image.reshape((1,28,28))) #The .reshape just gives it the proper form to input into the model, a batch of 1 a.k.a a tensor\n",

+ "\n",

+ "original_label = tf.one_hot(train_labels[random_index], num_classes)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "sklj9G-JJPRY",

+ "colab_type": "text"

+ },

+ "source": [

+ "We can then look at the image and at it's label."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "k0ir_rYeJW6m",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(original_image, (28,28)))\n",

+ "plt.title(\"Label: {}\".format(np.argmax(original_label)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ZuO0xjqKKMmt",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Now, we create the perturbation we will use to perform the attack"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-Gbgb2dZ4KE-",

+ "colab_type": "text"

+ },

+ "source": [

+ "The execution of this attack consists on creating a small perturbation that we can add to a benign, normal image so as to make a machine learning model missclassify it but have it still look unaffected to the human eye.\n",

+ ">\n",

+ "Here we can see exactly what this looks like:\n",

+ "\n",

+ "\n",

+ "\n",

+ "[Fig 1. Visualisation of fgsm](https://www.tensorflow.org/beta/tutorials/generative/images/adversarial_example.png)\n",

+ "\n",

+ ">\n",

+ "\n",

+ "The way we create this perturbation is by calculating the gradient of the cost or loss function used by the model with respect to the image. The loss function is one that returns a value that describes how different the classification of a given image is from what it should be classified as. This gives us an indication of how to change each pixel in order to affect the value of the loss function the most. We then take the sign of these gradients as we are only interested in wether to increase or decrease each pixel, not by how much.\n",

+ "\n",

+ "The formula for the perturbation is described by *I. Goodfellow et al* as:\n",

+ "\n",

+ "$$\\text{sign}(\\nabla_xJ(\\theta, x, y))$$\n",

+ "\n",

+ "or as (they are both equal):\n",

+ "\n",

+ "$$\\text{sign}(\\frac{\\partial J(\\theta, x, y)}{\\partial x})$$\n",

+ "\n",

+ "where:\n",

+ "* J : The loss function used by the model\n",

+ "* $\\theta$ : The model parameters\n",

+ "* $x$ : The input image\n",

+ "* $y$ : the original label\n",

+ "\n",

+ ">\n",

+ "This part of the tutorial was loosely adapted from [this guide](https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm) made by the Tensorflow team.\n",

+ ">\n",

+ "The following function defines the implementation of this perturbation:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "d4y5A8-L3gqh",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def create_perturbation(modelfn, input_image, original_label):\n",

+ " loss_object = tf.keras.losses.CategoricalCrossentropy()\n",

+ " \n",

+ " # Reference the docs for GradientTape in tf2.0 for more info\n",

+ " with tf.GradientTape() as gt:\n",

+ " # Define the calculation that needs to be derived\n",

+ " gt.watch(input_image)\n",

+ " prediction = modelfn(input_image)\n",

+ " loss = loss_object(original_label, prediction)\n",

+ " \n",

+ " # Get the gradients for the loss w.r.t image\n",

+ " grads = gt.gradient(loss, input_image)\n",

+ " perturbation = tf.sign(grads)\n",

+ "\n",

+ " return perturbation"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "KF8IBC-2k8Pf",

+ "colab_type": "text"

+ },

+ "source": [

+ "We can now create the respective perturbation for the image we chose earlier and see what it looks like and what the model classifies it as.\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "2SnsIwWkSbZn",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "perturbation = create_perturbation(model, original_image, original_label)\n",

+ "perturbation_pred = model.predict(perturbation)\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(perturbation,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.argmax(perturbation_pred)))\n",

+ "plt.xlabel(\"Confidence of: {}\".format(np.max(perturbation_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "cSVL3kesCBAd",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Finally, we implement the attack\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "o2c1lU4w8SMC",

+ "colab_type": "text"

+ },

+ "source": [

+ "To implement the attack we add the perturbation to the image, which we scale down by a value epsilon ($\\epsilon$) to try to make it unperceivable. Although, depending on the model, the minimum for this value may differ.\n",

+ ">\n",

+ "By adding this perturbation to the image we change the pixels in the direction where the value of the loss function will increase or be maximised. An increase in the value of the loss function translates to the image being further away from being classified as the correct label.\n",

+ ">\n",

+ "The adversarial image or example is then the original image with the perturbation. It can be formalised as the following expression:\n",

+ ">\n",

+ "$$ \\text{adv_example} = x + \\epsilon * \\text{sign}(\\nabla_xJ(\\theta, x, y)) $$\n",

+ ">\n",

+ "This next function implements the fgsm attack and creates the adversarial example:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "HpiGG_U0pkCP",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def fgsm(modelfn, input_image, original_label, epsilon):\n",

+ " perturbation = create_perturbation(modelfn, input_image, original_label)\n",

+ "\n",

+ " adv_example = input_image + (perturbation * epsilon)\n",

+ "\n",

+ " return adv_example"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "9SGiWcOE7B4X",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Now, let's create the Adversarial Image\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "6mnZFQKK_8mt",

+ "colab_type": "text"

+ },

+ "source": [

+ "To do this we'll pass the original image we chose and it's correct label to the function we just defined to create its adversarial image. Then let's ask the model to predict what it thinks the image is, it should hopefully be different than what it looks like."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "-iH1hlEvy13G",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "\n",

+ "adv_image = fgsm(model, original_image, original_label, epsilon)\n",

+ "adv_image_pred = model.predict(adv_image)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ukkD4c7jAeOP",

+ "colab_type": "text"

+ },

+ "source": [

+ "Let's se what this looks like:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "hTnu62E769Ut",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.subplot(1,3,1)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Original Image\")\n",

+ "plt.imshow(np.reshape(original_image,(28,28)))\n",

+ "plt.xlabel(\"Label: {}\".format(np.argmax(original_label)))\n",

+ "\n",

+ "plt.subplot(1,3,2)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Perturbation\")\n",

+ "plt.imshow(np.reshape(perturbation,(28,28)))\n",

+ "\n",

+ "\n",

+ "plt.subplot(1,3,3)\n",

+ "plt.subplots_adjust(right = 2)\n",

+ "plt.title(\"Adversarial Image\")\n",

+ "plt.imshow(np.reshape(adv_image,(28,28)))\n",

+ "plt.xlabel(\"Classification: {} - Confidence: {}\".format(np.argmax(adv_image_pred),np.max(adv_image_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "HbGZQsIK8Zaf",

+ "colab_type": "text"

+ },

+ "source": [

+ "> I encourage you to play around with changing the value for epsilon and try to find the limits for when an image is still classified correctly to when it gets missclassified. \n",

+ "\n",

+ "Keep in mind that, as these are 28 * 28 images with pixel values ranging from 0 to 255, perturbations are easily seen and the perturbation required to missclassify the image might be visible. Something else to note is that some images may require bigger perturbations to be missclassified.\n",

+ "\n",

+ "However, when this attack is applied identically to RGB images the perturbations are much more easily hidden and the attack is still effective. An example of this can be seen in [this guide](https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm) provided by the Tensorflow team. I also encourage you to try to replicate this code with more complex datasets like CIFAR-10 or even ImageNet. You might also easily do this by just changing the code that trains the model and loads the data to something else keep the rest of the code the same."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ulbHU1bLNh7c",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Extra // Targeted FGSM: A version of fgsm that can missclassify to a chosen desired label"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "pFZA-IgUNtmK",

+ "colab_type": "text"

+ },

+ "source": [

+ "What if we deliberately wanted to craft an image that was classified as something but that looked like something else? By changing a little of the logic behind the fgsm attack this exact thing can be achieved. \n",

+ ">\n",

+ "By instead of looking for what pixels to change to make the image be missclassified the most, let's look at what pixels to change to make the model be more confident about it being a certain class. \n",

+ "\n",

+ "Technically, what we do is, again calculate the gradients of the loss with respect to the image but now the difference is that the loss is calculated with the target label like so.\n",

+ ">\n",

+ "$$\\text{sign}(\\nabla_xJ(\\theta, x, y_{target}))$$\n",

+ ">\n",

+ "In this case, this creates a perturbation that tells us how to change each pixel to change how close or how away the image is from being the target label. \n",

+ ">\n",

+ "Therefore, we create our adversarial image by substracting the perturbation from the original image to try to decrease or minimize the value of the loss function, which means make the image be closer to being the target label. Formally the attack now looks like this:\n",

+ ">\n",

+ "$$ \\text{targeted_adv_example} = x - \\epsilon * \\text{sign}(\\nabla_xJ(\\theta, x, y_{target})) $$\n",

+ ">\n",

+ "So, let's start by defining the function to create the targeted version of the attack."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "ZzUPdVggVu3y",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "def targeted_fgsm(modelfn, input_image, target_label, epsilon):\n",

+ " perturbation = create_perturbation(modelfn, input_image, target_label)\n",

+ "\n",

+ " adv_example = input_image - (perturbation * epsilon)\n",

+ "\n",

+ " return adv_example"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "1DsdfU81dQ8o",

+ "colab_type": "text"

+ },

+ "source": [

+ "Great! Now we'll choose a random image to then craft into an adversarial example that will be classified as whatever we want."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "xGxiuy8SU_rX",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(train_images.shape[0]) \n",

+ "\n",

+ "untargeted_image = tf.convert_to_tensor(train_images[random_index].reshape((1,28,28)))\n",

+ "untargeted_image_label = train_labels[random_index]\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(untargeted_image,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.max(untargeted_image_label)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "xDqYF_XEYKQU",

+ "colab_type": "text"

+ },

+ "source": [

+ "Effectively applying this targeted attack require a little more tinkering than the un-targeted version. Some images might be easier to craft into certain labels than into others and some might need a bigger epsilon than others. But, in general, this attack requires smaller epsilons to shift the images to be classified as the target label. This means that, in pracice, this type of attack is more effective as it not only allows you to manipulate the output of the model but also do it with adversarial images where their perturbation is more difficult to spot.\n",

+ ">\n",

+ "So, play around by changing the target label for the same image and tweak the value for epsilon and see what it's limits are. You might also want to rerun the previous cell to try different images.\n",

+ "\n",

+ "If you then try to choose epsilons sufficently large it might go over the target label and classify it as something different, so, also try decreasing the value of epsilon."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "4T7Wqy9vTwHC",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "target = 3 # Change to something different than original and experiment changing it for the same image\n",

+ "epsilon = 0.05 # Change\n",

+ "\n",

+ "target_label = tf.one_hot(target, num_classes) # This gives us the desired output of the model given our target, a one hot encoding for it\n",

+ "\n",

+ "targeted_image = targeted_fgsm(model, untargeted_image, target_label, epsilon)\n",

+ "\n",

+ "targeted_pred = model.predict(targeted_image)\n",

+ "\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "plt.imshow(np.reshape(targeted_image,(28,28)))\n",

+ "plt.title(\"Classification of: {}\".format(np.argmax(targeted_pred)))\n",

+ "plt.xlabel(\"Confidence of: {}\".format(np.max(targeted_pred)))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "9_EDE61bMzgW",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Where to find more information:\n",

+ "If you would like to learn more about and implement different types of attacks and defenses that have been developed against them or an integrated way to do adversarial training check out the Adversarial Machine Learning library [CleverHans](https://github.com/tensorflow/cleverhans) mantained by I. Goodfellow, the author of the paper proposing the fgsm attack, and N. Papernot. It offers an easy way to implement these attacks and research on the extent of their applications without having to write the type code described in this notebook yourself."

+ ]

+ }

+ ]

+}

\ No newline at end of file

diff --git a/tutorials/future/tf2/notebook_tutorials/README.md b/tutorials/future/tf2/notebook_tutorials/README.md

new file mode 100644

index 000000000..ffab34a92

--- /dev/null

+++ b/tutorials/future/tf2/notebook_tutorials/README.md

@@ -0,0 +1,3 @@

+# Notebook tutorials for Cleverhans

+>

+These Jupyter/Colab notebooks provide tutorials for how to use the cleverhans library.

\ No newline at end of file

diff --git a/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb b/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb

new file mode 100644

index 000000000..015ff6517

--- /dev/null

+++ b/tutorials/future/tf2/notebook_tutorials/mnist_fgsm_tutorial.ipynb

@@ -0,0 +1,321 @@

+{

+ "nbformat": 4,

+ "nbformat_minor": 0,

+ "metadata": {

+ "colab": {

+ "name": "Cleverhans FGSM attack.ipynb",

+ "version": "0.3.2",

+ "provenance": [],

+ "collapsed_sections": [],

+ "include_colab_link": true

+ },

+ "kernelspec": {

+ "name": "python3",

+ "display_name": "Python 3"

+ }

+ },

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "view-in-github",

+ "colab_type": "text"

+ },

+ "source": [

+ " "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RHwgLwN4nXPi",

+ "colab_type": "text"

+ },

+ "source": [

+ "# Fast Gradient Sign Method (FGSM) using Cleverhans\n",

+ "\n",

+ "#### Easily implement an FGSM attack on a model using Cleverhans and Tensorflow 2.0\n",

+ ">\n",

+ "Checkout Cleverhans on Github [here](https://www.github.com/tensorflow/cleverhans).\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Vs9wD38he0cM",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Import dependent libraries\n",

+ "Tensorflow 2.0 required \n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "eBsLelavnv0u",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "!pip install -q tensorflow==2.0.0b1\n",

+ "# Install bleeding edge version of cleverhans\n",

+ "!pip install git+https://github.com/tensorflow/cleverhans.git#egg=cleverhans\n",

+ "\n",

+ "import cleverhans\n",

+ "import tensorflow as tf\n",

+ "import numpy as np\n",

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "print(\"\\nTensorflow Version: \" + tf.__version__)\n",

+ "print(\"Cleverhans Version: \" + cleverhans.__version__)\n",

+ "print(\"GPU Available: \", tf.test.is_gpu_available())"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "b4kgPQqp-hwR",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Training a simple model on the MNIST dataset\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "_z99mq1bKkjz",

+ "colab_type": "text"

+ },

+ "source": [

+ "> If you would like to experiment with other datasets feel free to replace this code by whatever you need to do so. Just remember that to make the rest of the notebook work without any other major changes keep the variables train_images, train_labels, test_images, test_labels and assign them to the corresponding new data. I recommend any of the datasets offered by keras as they use the same mechanics to import them.\n",

+ "\n",

+ ">Keep in mind that the MNIST dataset was used in this guide because of the little amount of time it takes to both train a good model and craft the attacks."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "uQVP1re__pbf",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "mnist = tf.keras.datasets.mnist\n",

+ "(train_images, train_labels), (test_images, test_labels) = mnist.load_data()\n",

+ "\n",

+ "train_images = train_images / 255.0\n",

+ "test_images = test_images / 255.0\n",

+ "\n",

+ "num_classes = 10\n",

+ "\n",

+ "model = tf.keras.Sequential([\n",

+ " tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

+ " tf.keras.layers.Dense(32, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(16, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(10),\n",

+ " tf.keras.layers.Activation(tf.nn.softmax) # We seperate the activation layer to be able to access the logits of the previous layer later\n",

+ "])\n",

+ "\n",

+ "model.compile(optimizer='adam',\n",

+ " loss= 'sparse_categorical_crossentropy',\n",

+ " metrics=['accuracy'])\n",

+ "\n",

+ "model.fit(train_images, train_labels, epochs=10, validation_split=0.2)\n",

+ "test_loss, test_acc = model.evaluate(test_images, test_labels)\n",

+ "\n",

+ "print('Test accuracy:', test_acc)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QpC4KgTS_Fj8",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Implementing the FGSM attack using fast_gradient_method()\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "U68CRVKW5ULk",

+ "colab_type": "text"

+ },

+ "source": [

+ "Cleverhans implements the fgsm attack with the following method:\n",

+ "\n",

+ "\n",

+ "```\n",

+ "fast_gradient_method(model_fn, x, eps, norm, clip_min=None, clip_max=None, y=None, targeted=False, sanity_checks=False)\n",

+ "\n",

+ "```\n",

+ "\n",

+ "> * **model_fn**: a callable that takes an input tensor and returns the model logits\n",

+ "* **x**: input_tensor\n",

+ "* **eps**: epsilon (dictates the \"strength\" of the distortion created)\n",

+ "* **norm**: Order of the norm (mimics NumPy). Possible values: np.inf, 1 or 2.\n",

+ "* **clip_min**: (optional, default=None) float. Minimum float value for adversarial example components.\n",

+ "* **clip_max**: (optional, default=None) float. Maximum float value for adversarial example components\n",

+ "* **y**: (optional, default=None) Tensor with true labels. If targeted is true, then provide the target label. Otherwise, only provide this parameter if you'd like to use true labels when crafting adversarial samples. Otherwise, model predictions are used as labels to avoid the \"label leaking\" effect (explained in this [paper](https://arxiv.org/abs/1611.01236)).\n",

+ "* **targeted**: (optional, default=False) bool. Is the attack targeted or untargeted? Untargeted, the default, will try to make the label incorrect. Targeted will instead try to move in the direction of being more like y.\n",

+ "* **param sanity_checks**: (optional, default=False) bool, if True, include asserts (Turn them off to use less runtime / memory or for unit tests that intentionally pass strange input)\n",

+ ">\n",

+ "\n",

+ "Find it in the library [here](https://github.com/tensorflow/cleverhans/blob/master/cleverhans/future/tf2/attacks/fast_gradient_method.py)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "LgZhvgzbAuU8",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "# Import the attack\n",

+ "from cleverhans.future.tf2.attacks import fast_gradient_method\n",

+ "\n",

+ "#The attack requires the model to ouput the logits\n",

+ "logits_model = tf.keras.Model(model.input,model.layers[-1].output)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "q53TkEuD-gR9",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Choose a random image to attack from the test set\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "colab_type": "code",

+ "id": "wA7_JKrm1wsm",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(test_images.shape[0])\n",

+ "\n",

+ "original_image = test_images[random_index]\n",

+ "original_image = tf.convert_to_tensor(original_image.reshape((1,28,28))) #The .reshape just gives it the proper form to input into the model, a batch of 1 a.k.a a tensor\n",

+ "\n",

+ "original_label = test_labels[random_index]\n",

+ "original_label = np.reshape(original_label, (1,)).astype('int64') # Give label proper shape and type for cleverhans\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(original_image, (28,28)))\n",

+ "plt.title(\"Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "rz8QBZJ2-DO5",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Non-targeted FGSM attack\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "6LSemuYWrZWz",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "\n",

+ "adv_example_untargeted_label = fast_gradient_method(logits_model, original_image, epsilon, np.inf, targeted=False)\n",

+ "\n",

+ "adv_example_untargeted_label_pred = model.predict(adv_example_untargeted_label)\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(adv_example_untargeted_label, (28,28)))\n",

+ "plt.title(\"Model Prediction: {}\".format(np.argmax(adv_example_untargeted_label_pred)))\n",

+ "plt.xlabel(\"Original Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-l-eIvZZvSC6",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Targeted FGSM Attack"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "O4teWeVYvUGQ",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "# The target value may have to be changed to work, some images are more easily missclassified as different labels\n",

+ "target = 2\n",

+ "\n",

+ "target_label = np.reshape(target, (1,)).astype('int64') # Give target label proper size and dtype to feed through\n",

+ "\n",

+ "adv_example_targeted_label = fast_gradient_method(logits_model, original_image, epsilon, np.inf, y=target_label, targeted=True)\n",

+ "\n",

+ "adv_example_targeted_label_pred = model.predict(adv_example_targeted_label)\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(adv_example_targeted_label, (28,28)))\n",

+ "plt.title(\"Model Prediction: {}\".format(np.argmax(adv_example_targeted_label_pred)))\n",

+ "plt.xlabel(\"Original Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "DlQ833TumOUC",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Other Docs\n",

+ ">\n",

+ "Find more tutorials for Cleverhans [here](https://github.com/tensorflow/cleverhans/tree/master/tutorials/future/tf2)."

+ ]

+ }

+ ]

+}

"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "RHwgLwN4nXPi",

+ "colab_type": "text"

+ },

+ "source": [

+ "# Fast Gradient Sign Method (FGSM) using Cleverhans\n",

+ "\n",

+ "#### Easily implement an FGSM attack on a model using Cleverhans and Tensorflow 2.0\n",

+ ">\n",

+ "Checkout Cleverhans on Github [here](https://www.github.com/tensorflow/cleverhans).\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "Vs9wD38he0cM",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Import dependent libraries\n",

+ "Tensorflow 2.0 required \n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "eBsLelavnv0u",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "!pip install -q tensorflow==2.0.0b1\n",

+ "# Install bleeding edge version of cleverhans\n",

+ "!pip install git+https://github.com/tensorflow/cleverhans.git#egg=cleverhans\n",

+ "\n",

+ "import cleverhans\n",

+ "import tensorflow as tf\n",

+ "import numpy as np\n",

+ "import matplotlib.pyplot as plt\n",

+ "\n",

+ "print(\"\\nTensorflow Version: \" + tf.__version__)\n",

+ "print(\"Cleverhans Version: \" + cleverhans.__version__)\n",

+ "print(\"GPU Available: \", tf.test.is_gpu_available())"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "b4kgPQqp-hwR",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Training a simple model on the MNIST dataset\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "_z99mq1bKkjz",

+ "colab_type": "text"

+ },

+ "source": [

+ "> If you would like to experiment with other datasets feel free to replace this code by whatever you need to do so. Just remember that to make the rest of the notebook work without any other major changes keep the variables train_images, train_labels, test_images, test_labels and assign them to the corresponding new data. I recommend any of the datasets offered by keras as they use the same mechanics to import them.\n",

+ "\n",

+ ">Keep in mind that the MNIST dataset was used in this guide because of the little amount of time it takes to both train a good model and craft the attacks."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "uQVP1re__pbf",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "mnist = tf.keras.datasets.mnist\n",

+ "(train_images, train_labels), (test_images, test_labels) = mnist.load_data()\n",

+ "\n",

+ "train_images = train_images / 255.0\n",

+ "test_images = test_images / 255.0\n",

+ "\n",

+ "num_classes = 10\n",

+ "\n",

+ "model = tf.keras.Sequential([\n",

+ " tf.keras.layers.Flatten(input_shape=(28, 28)),\n",

+ " tf.keras.layers.Dense(32, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(16, activation=tf.nn.relu),\n",

+ " tf.keras.layers.Dense(10),\n",

+ " tf.keras.layers.Activation(tf.nn.softmax) # We seperate the activation layer to be able to access the logits of the previous layer later\n",

+ "])\n",

+ "\n",

+ "model.compile(optimizer='adam',\n",

+ " loss= 'sparse_categorical_crossentropy',\n",

+ " metrics=['accuracy'])\n",

+ "\n",

+ "model.fit(train_images, train_labels, epochs=10, validation_split=0.2)\n",

+ "test_loss, test_acc = model.evaluate(test_images, test_labels)\n",

+ "\n",

+ "print('Test accuracy:', test_acc)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QpC4KgTS_Fj8",

+ "colab_type": "text"

+ },

+ "source": [

+ "## Implementing the FGSM attack using fast_gradient_method()\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "U68CRVKW5ULk",

+ "colab_type": "text"

+ },

+ "source": [

+ "Cleverhans implements the fgsm attack with the following method:\n",

+ "\n",

+ "\n",

+ "```\n",

+ "fast_gradient_method(model_fn, x, eps, norm, clip_min=None, clip_max=None, y=None, targeted=False, sanity_checks=False)\n",

+ "\n",

+ "```\n",

+ "\n",

+ "> * **model_fn**: a callable that takes an input tensor and returns the model logits\n",

+ "* **x**: input_tensor\n",

+ "* **eps**: epsilon (dictates the \"strength\" of the distortion created)\n",

+ "* **norm**: Order of the norm (mimics NumPy). Possible values: np.inf, 1 or 2.\n",

+ "* **clip_min**: (optional, default=None) float. Minimum float value for adversarial example components.\n",

+ "* **clip_max**: (optional, default=None) float. Maximum float value for adversarial example components\n",

+ "* **y**: (optional, default=None) Tensor with true labels. If targeted is true, then provide the target label. Otherwise, only provide this parameter if you'd like to use true labels when crafting adversarial samples. Otherwise, model predictions are used as labels to avoid the \"label leaking\" effect (explained in this [paper](https://arxiv.org/abs/1611.01236)).\n",

+ "* **targeted**: (optional, default=False) bool. Is the attack targeted or untargeted? Untargeted, the default, will try to make the label incorrect. Targeted will instead try to move in the direction of being more like y.\n",

+ "* **param sanity_checks**: (optional, default=False) bool, if True, include asserts (Turn them off to use less runtime / memory or for unit tests that intentionally pass strange input)\n",

+ ">\n",

+ "\n",

+ "Find it in the library [here](https://github.com/tensorflow/cleverhans/blob/master/cleverhans/future/tf2/attacks/fast_gradient_method.py)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "LgZhvgzbAuU8",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "# Import the attack\n",

+ "from cleverhans.future.tf2.attacks import fast_gradient_method\n",

+ "\n",

+ "#The attack requires the model to ouput the logits\n",

+ "logits_model = tf.keras.Model(model.input,model.layers[-1].output)"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "q53TkEuD-gR9",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Choose a random image to attack from the test set\n",

+ "\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "colab_type": "code",

+ "id": "wA7_JKrm1wsm",

+ "colab": {}

+ },

+ "source": [

+ "random_index = np.random.randint(test_images.shape[0])\n",

+ "\n",

+ "original_image = test_images[random_index]\n",

+ "original_image = tf.convert_to_tensor(original_image.reshape((1,28,28))) #The .reshape just gives it the proper form to input into the model, a batch of 1 a.k.a a tensor\n",

+ "\n",

+ "original_label = test_labels[random_index]\n",

+ "original_label = np.reshape(original_label, (1,)).astype('int64') # Give label proper shape and type for cleverhans\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(original_image, (28,28)))\n",

+ "plt.title(\"Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "rz8QBZJ2-DO5",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Non-targeted FGSM attack\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "6LSemuYWrZWz",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "\n",

+ "adv_example_untargeted_label = fast_gradient_method(logits_model, original_image, epsilon, np.inf, targeted=False)\n",

+ "\n",

+ "adv_example_untargeted_label_pred = model.predict(adv_example_untargeted_label)\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(adv_example_untargeted_label, (28,28)))\n",

+ "plt.title(\"Model Prediction: {}\".format(np.argmax(adv_example_untargeted_label_pred)))\n",

+ "plt.xlabel(\"Original Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "-l-eIvZZvSC6",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Targeted FGSM Attack"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "metadata": {

+ "id": "O4teWeVYvUGQ",

+ "colab_type": "code",

+ "colab": {}

+ },

+ "source": [

+ "epsilon = 0.1\n",

+ "# The target value may have to be changed to work, some images are more easily missclassified as different labels\n",

+ "target = 2\n",

+ "\n",

+ "target_label = np.reshape(target, (1,)).astype('int64') # Give target label proper size and dtype to feed through\n",

+ "\n",

+ "adv_example_targeted_label = fast_gradient_method(logits_model, original_image, epsilon, np.inf, y=target_label, targeted=True)\n",

+ "\n",

+ "adv_example_targeted_label_pred = model.predict(adv_example_targeted_label)\n",

+ "\n",

+ "#Show the image\n",

+ "plt.figure()\n",

+ "plt.grid(False)\n",

+ "\n",

+ "plt.imshow(np.reshape(adv_example_targeted_label, (28,28)))\n",

+ "plt.title(\"Model Prediction: {}\".format(np.argmax(adv_example_targeted_label_pred)))\n",

+ "plt.xlabel(\"Original Label: {}\".format(original_label[0]))\n",

+ "\n",

+ "plt.show()"

+ ],

+ "execution_count": 0,

+ "outputs": []

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "DlQ833TumOUC",

+ "colab_type": "text"

+ },

+ "source": [

+ "### Other Docs\n",

+ ">\n",

+ "Find more tutorials for Cleverhans [here](https://github.com/tensorflow/cleverhans/tree/master/tutorials/future/tf2)."

+ ]

+ }

+ ]

+}