diff --git a/.gitignore b/.gitignore

index bfe56cd1d..a0f41b0cd 100644

--- a/.gitignore

+++ b/.gitignore

@@ -32,4 +32,3 @@ dbs

benchmarks

benchmark_results_files.json

uploaded_benchmarks

-**/.env

diff --git a/examples/customer_service_bot/.env.example b/examples/customer_service_bot/.env

similarity index 100%

rename from examples/customer_service_bot/.env.example

rename to examples/customer_service_bot/.env

diff --git a/examples/customer_service_bot/README.md b/examples/customer_service_bot/README.md

index c223b4bd2..ab0672554 100644

--- a/examples/customer_service_bot/README.md

+++ b/examples/customer_service_bot/README.md

@@ -38,10 +38,10 @@ docker compose up --build --abort-on-container-exit --exit-code-from intent_clie

## Run the bot

### Run with Docker & Docker-Compose environment

-To interact with external APIs, the bot requires API tokens that can be set through [.env](.env.example). You should start by creating your own `.env` file:

+To interact with external APIs, the bot requires API tokens, which are set in the [.env](.env) file. Update it, replacing the placeholder values with your actual tokens.

```

-echo TG_BOT_TOKEN=*** >> .env

-echo OPENAI_API_TOKEN=*** >> .env

+TG_BOT_TOKEN=***

+OPENAI_API_TOKEN=***

```

*The commands below need to be run from the /examples/customer_service_bot directory*

diff --git a/examples/customer_service_bot/bot/dialog_graph/script.py b/examples/customer_service_bot/bot/dialog_graph/script.py

index 5d31175ad..f641da159 100644

--- a/examples/customer_service_bot/bot/dialog_graph/script.py

+++ b/examples/customer_service_bot/bot/dialog_graph/script.py

@@ -61,7 +61,7 @@

},

"ask_item": {

RESPONSE: Message(

- text="Which books would you like to order? Please, separate the titles by commas (type 'abort' to cancel)."

+ text="Which books would you like to order? Separate the titles by commas (type 'abort' to cancel)"

),

PRE_TRANSITIONS_PROCESSING: {"1": loc_prc.extract_item()},

TRANSITIONS: {("form_flow", "ask_delivery"): loc_cnd.slots_filled(["items"]), lbl.repeat(0.8): cnd.true()},

diff --git a/examples/customer_service_bot/bot/test.py b/examples/customer_service_bot/bot/test.py

index 7940a5795..b9a19660a 100644

--- a/examples/customer_service_bot/bot/test.py

+++ b/examples/customer_service_bot/bot/test.py

@@ -32,7 +32,9 @@

(

TelegramMessage(text="card"),

Message(

- text="We registered your transaction. Requested titles are: Pale Fire, Lolita. Delivery method: deliver. Payment method: card. Type `abort` to cancel, type `ok` to continue."

+ text="We registered your transaction. Requested titles are: Pale Fire, Lolita. "

+ "Delivery method: deliver. Payment method: card. "

+ "Type `abort` to cancel, type `ok` to continue."

),

),

(TelegramMessage(text="ok"), script["chitchat_flow"]["init_chitchat"][RESPONSE]),

diff --git a/examples/customer_service_bot/intent_catcher/server.py b/examples/customer_service_bot/intent_catcher/server.py

index e4ae0007a..7d7871960 100644

--- a/examples/customer_service_bot/intent_catcher/server.py

+++ b/examples/customer_service_bot/intent_catcher/server.py

@@ -35,10 +35,10 @@

else:

no_cuda = True

model = AutoModelForSequenceClassification.from_config(DistilBertConfig(num_labels=23))

- state = torch.load(MODEL_PATH, map_location = "cpu" if no_cuda else "gpu")

+ state = torch.load(MODEL_PATH, map_location="cpu" if no_cuda else "cuda")

model.load_state_dict(state["model_state_dict"])

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

- pipe = pipeline('text-classification', model=model, tokenizer=tokenizer)

+ pipe = pipeline("text-classification", model=model, tokenizer=tokenizer)

logger.info("predictor is ready")

except Exception as e:

sentry_sdk.capture_exception(e)

@@ -71,9 +71,9 @@ def respond():

try:

results = pipe(contexts)

- indices = [int(''.join(filter(lambda x: x.isdigit(), result['label']))) for result in results]

+ indices = [int("".join(filter(lambda x: x.isdigit(), result["label"]))) for result in results]

responses = [list(label2id.keys())[idx] for idx in indices]

- confidences = [result['score'] for result in results]

+ confidences = [result["score"] for result in results]

except Exception as exc:

logger.exception(exc)

sentry_sdk.capture_exception(exc)

diff --git a/examples/frequently_asked_question_bot/telegram/.env b/examples/frequently_asked_question_bot/telegram/.env

new file mode 100644

index 000000000..6282da982

--- /dev/null

+++ b/examples/frequently_asked_question_bot/telegram/.env

@@ -0,0 +1 @@

+TG_BOT_TOKEN=tg_bot_token

\ No newline at end of file

diff --git a/examples/frequently_asked_question_bot/telegram/.env.example b/examples/frequently_asked_question_bot/telegram/.env.example

deleted file mode 100644

index 016ccd98a..000000000

--- a/examples/frequently_asked_question_bot/telegram/.env.example

+++ /dev/null

@@ -1 +0,0 @@

-TG_BOT_TOKEN=

\ No newline at end of file

diff --git a/examples/frequently_asked_question_bot/telegram/README.md b/examples/frequently_asked_question_bot/telegram/README.md

index ed0b32891..86513db11 100644

--- a/examples/frequently_asked_question_bot/telegram/README.md

+++ b/examples/frequently_asked_question_bot/telegram/README.md

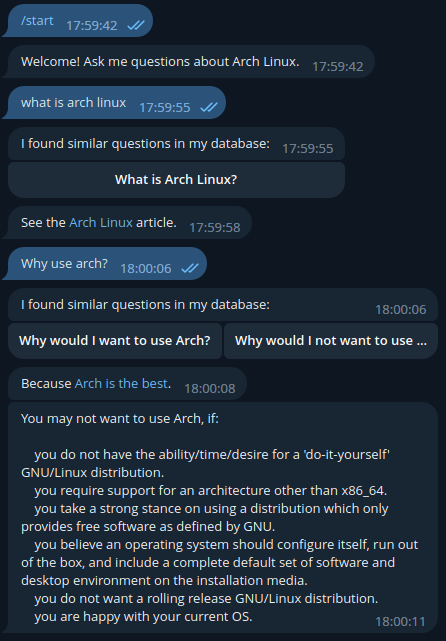

@@ -12,9 +12,11 @@ An example of bot usage:

### Run with Docker & Docker-Compose environment

-In order for the bot to work, set the bot token via [.env](.env.example). First step is creating your `.env` file:

+

+For the bot to work, update the [.env](.env) file, replacing the placeholder with your actual Telegram bot token.

+

```

-echo TG_BOT_TOKEN=******* >> .env

+TG_BOT_TOKEN=***

```

Build the bot:

diff --git a/examples/frequently_asked_question_bot/web/.env b/examples/frequently_asked_question_bot/web/.env

new file mode 100644

index 000000000..813946eb4

--- /dev/null

+++ b/examples/frequently_asked_question_bot/web/.env

@@ -0,0 +1,3 @@

+POSTGRES_USERNAME=postgres

+POSTGRES_PASSWORD=pass

+POSTGRES_DB=test

\ No newline at end of file

diff --git a/examples/frequently_asked_question_bot/web/README.md b/examples/frequently_asked_question_bot/web/README.md

index 36c42054c..5576f69d3 100644

--- a/examples/frequently_asked_question_bot/web/README.md

+++ b/examples/frequently_asked_question_bot/web/README.md

@@ -14,7 +14,12 @@ A showcase of the website:

### Run with Docker & Docker-Compose environment

-

+The PostgreSQL image is configured through variables set in the [.env](.env) file. Update the file, replacing the placeholder values with the ones you want to use.

+```

+POSTGRES_USERNAME=***

+POSTGRES_PASSWORD=***

+POSTGRES_DB=***

+```

Build the bot:

```commandline

docker-compose build

diff --git a/examples/frequently_asked_question_bot/web/web/app.py b/examples/frequently_asked_question_bot/web/web/app.py

index b96e9010f..5423fc666 100644

--- a/examples/frequently_asked_question_bot/web/web/app.py

+++ b/examples/frequently_asked_question_bot/web/web/app.py

@@ -11,7 +11,7 @@

@app.get("/")

async def index():

- return FileResponse('static/index.html', media_type='text/html')

+ return FileResponse("static/index.html", media_type="text/html")

@app.websocket("/ws/{client_id}")

@@ -20,11 +20,7 @@ async def websocket_endpoint(websocket: WebSocket, client_id: str):

# store user info in the dialogue context

await pipeline.context_storage.set_item_async(

- client_id,

- Context(

- id=client_id,

- misc={"ip": websocket.client.host, "headers": websocket.headers.raw}

- )

+ client_id, Context(id=client_id, misc={"ip": websocket.client.host, "headers": websocket.headers.raw})

)

async def respond(request: Message):

@@ -42,6 +38,7 @@ async def respond(request: Message):

except WebSocketDisconnect: # ignore disconnects

pass

+

if __name__ == "__main__":

uvicorn.run(

app,

diff --git a/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/responses.py b/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/responses.py

index fd27c3d54..696199c80 100644

--- a/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/responses.py

+++ b/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/responses.py

@@ -16,20 +16,15 @@ def get_bot_answer(question: str) -> Message:

FALLBACK_ANSWER = Message(

- text='I don\'t have an answer to that question. '

- 'You can find FAQ here.',

+ text="I don't have an answer to that question. "

+ 'You can find FAQ here.',

)

"""Fallback answer that the bot returns if user's query is not similar to any of the questions."""

-FIRST_MESSAGE = Message(

- text="Welcome! Ask me questions about Arch Linux."

-)

+FIRST_MESSAGE = Message(text="Welcome! Ask me questions about Arch Linux.")

-FALLBACK_NODE_MESSAGE = Message(

- text="Something went wrong.\n"

- "You may continue asking me questions about Arch Linux."

-)

+FALLBACK_NODE_MESSAGE = Message(text="Something went wrong.\nYou may continue asking me questions about Arch Linux.")

def answer_similar_question(ctx: Context, _: Pipeline):

diff --git a/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/script.py b/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/script.py

index 3b8ecfa47..8c83b9d0c 100644

--- a/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/script.py

+++ b/examples/frequently_asked_question_bot/web/web/bot/dialog_graph/script.py

@@ -28,12 +28,11 @@

},

"service_flow": {

"start_node": {}, # this is the start node, it simply redirects to welcome node

-

"fallback_node": { # this node will only be used if something goes wrong (e.g. an exception is raised)

RESPONSE: FALLBACK_NODE_MESSAGE,

},

},

},

"start_label": ("service_flow", "start_node"),

- "fallback_label": ("service_flow", "fallback_node")

+ "fallback_label": ("service_flow", "fallback_node"),

}

diff --git a/examples/frequently_asked_question_bot/web/web/bot/test.py b/examples/frequently_asked_question_bot/web/web/bot/test.py

index 191a1c26b..b14f647cb 100644

--- a/examples/frequently_asked_question_bot/web/web/bot/test.py

+++ b/examples/frequently_asked_question_bot/web/web/bot/test.py

@@ -35,5 +35,5 @@

async def test_happy_path(happy_path):

check_happy_path(

pipeline=Pipeline.from_script(**script.pipeline_kwargs, pre_services=pre_services.services),

- happy_path=happy_path

+ happy_path=happy_path,

)