diff --git a/.github/workflows/main.yml b/.github/workflows/main.yml

new file mode 100644

index 00000000..d8887048

--- /dev/null

+++ b/.github/workflows/main.yml

@@ -0,0 +1,18 @@

+name: Publish docs via GitHub Pages

+on:

+ push:

+ branches:

+ - master

+

+jobs:

+ build:

+ name: Deploy docs

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout master

+ uses: actions/checkout@v1

+

+ - name: Deploy docs

+ uses: mhausenblas/mkdocs-deploy-gh-pages@master

+ env:

+ PERSONAL_TOKEN: ${{ secrets.PERSONAL_TOKEN }}

diff --git a/.templates/adminer/service.yml b/.templates/adminer/service.yml

old mode 100644

new mode 100755

index 55908eac..de1e6b53

--- a/.templates/adminer/service.yml

+++ b/.templates/adminer/service.yml

@@ -4,3 +4,6 @@

restart: unless-stopped

ports:

- 9080:8080

+ environment:

+ - VIRTUAL_HOST=~^adminer\..*\.xip\.io

+ - VIRTUAL_PORT=9080

diff --git a/.templates/blynk_server/service.yml b/.templates/blynk_server/service.yml

old mode 100644

new mode 100755

index 8f0dce75..cb2d9fc4

--- a/.templates/blynk_server/service.yml

+++ b/.templates/blynk_server/service.yml

@@ -8,4 +8,7 @@

- 9443:9443

volumes:

- ./volumes/blynk_server/data:/data

+ environment:

+ - VIRTUAL_HOST=~^blynk\..*\.xip\.io

+ - VIRTUAL_PORT=8180

diff --git a/.templates/diyhue/service.yml b/.templates/diyhue/service.yml

old mode 100644

new mode 100755

index cadebf5a..304a3efa

--- a/.templates/diyhue/service.yml

+++ b/.templates/diyhue/service.yml

@@ -9,6 +9,9 @@

# - "443:443/tcp"

env_file:

- ./services/diyhue/diyhue.env

+ environment:

+ - VIRTUAL_HOST=~^diyhue\..*\.xip\.io

+ - VIRTUAL_PORT=8070

volumes:

- ./volumes/diyhue/:/opt/hue-emulator/export/

restart: unless-stopped

diff --git a/.templates/gitea/gitea.env b/.templates/gitea/gitea.env

new file mode 100644

index 00000000..27f76ca3

--- /dev/null

+++ b/.templates/gitea/gitea.env

@@ -0,0 +1 @@

+# initially empty

diff --git a/.templates/gitea/service.yml b/.templates/gitea/service.yml

new file mode 100644

index 00000000..fb7685a6

--- /dev/null

+++ b/.templates/gitea/service.yml

@@ -0,0 +1,14 @@

+ gitea:

+ container_name: gitea

+ image: kapdap/gitea-rpi

+ restart: unless-stopped

+ user: "0"

+ ports:

+ - "7920:3000/tcp"

+ - "2222:22/tcp"

+ env_file:

+ - ./services/gitea/gitea.env

+ volumes:

+ - ./volumes/gitea/data:/data

+ - /etc/timezone:/etc/timezone:ro

+ - /etc/localtime:/etc/localtime:ro

diff --git a/.templates/grafana/service.yml b/.templates/grafana/service.yml

old mode 100644

new mode 100755

index 538c9858..ab6bb78b

--- a/.templates/grafana/service.yml

+++ b/.templates/grafana/service.yml

@@ -7,6 +7,9 @@

- 3000:3000

env_file:

- ./services/grafana/grafana.env

+ environment:

+ - VIRTUAL_HOST=~^grafana\..*\.xip\.io

+ - VIRTUAL_PORT=3000

volumes:

- ./volumes/grafana/data:/var/lib/grafana

- ./volumes/grafana/log:/var/log/grafana

diff --git a/.templates/homebridge/service.yml b/.templates/homebridge/service.yml

old mode 100644

new mode 100755

index 78fb68aa..63f37007

--- a/.templates/homebridge/service.yml

+++ b/.templates/homebridge/service.yml

@@ -2,7 +2,11 @@

container_name: homebridge

image: oznu/homebridge:no-avahi-arm32v6

restart: unless-stopped

- network_mode: host

+ ports:

+ - 8380:8080

env_file: ./services/homebridge/homebridge.env

+ environment:

+ - VIRTUAL_HOST=~^homebridge\..*\.xip\.io

+ - VIRTUAL_PORT=8380

volumes:

- ./volumes/homebridge:/homebridge

diff --git a/.templates/influxdb/service.yml b/.templates/influxdb/service.yml

old mode 100644

new mode 100755

index 3886ada5..4bdec47f

--- a/.templates/influxdb/service.yml

+++ b/.templates/influxdb/service.yml

@@ -8,6 +8,9 @@

- 2003:2003

env_file:

- ./services/influxdb/influxdb.env

+ environment:

+ - VIRTUAL_HOST=~^influxdb\..*\.xip\.io

+ - VIRTUAL_PORT=8086

volumes:

- ./volumes/influxdb/data:/var/lib/influxdb

- ./backups/influxdb/db:/var/lib/influxdb/backup

diff --git a/.templates/mosquitto/filter.acl b/.templates/mosquitto/filter.acl

new file mode 100644

index 00000000..e1682311

--- /dev/null

+++ b/.templates/mosquitto/filter.acl

@@ -0,0 +1,6 @@

+user admin

+topic read #

+topic write #

+

+pattern read #

+pattern write #

diff --git a/.templates/mosquitto/mosquitto.conf b/.templates/mosquitto/mosquitto.conf

index 9f63ac19..5827737d 100644

--- a/.templates/mosquitto/mosquitto.conf

+++ b/.templates/mosquitto/mosquitto.conf

@@ -1,4 +1,10 @@

persistence true

persistence_location /mosquitto/data/

log_dest file /mosquitto/log/mosquitto.log

+

+#Uncomment to enable passwords

#password_file /mosquitto/config/pwfile

+#allow_anonymous false

+

+#Uncomment to enable filters

+#acl_file /mosquitto/config/filter.acl

diff --git a/.templates/mosquitto/service.yml b/.templates/mosquitto/service.yml

index 8a62d776..a7d80921 100644

--- a/.templates/mosquitto/service.yml

+++ b/.templates/mosquitto/service.yml

@@ -10,4 +10,5 @@

- ./volumes/mosquitto/data:/mosquitto/data

- ./volumes/mosquitto/log:/mosquitto/log

- ./services/mosquitto/mosquitto.conf:/mosquitto/config/mosquitto.conf

+ - ./services/mosquitto/filter.acl:/mosquitto/config/filter.acl

diff --git a/.templates/nextcloud/service.yml b/.templates/nextcloud/service.yml

old mode 100644

new mode 100755

index b7e97624..560cceec

--- a/.templates/nextcloud/service.yml

+++ b/.templates/nextcloud/service.yml

@@ -10,6 +10,9 @@

- nextcloud_db

links:

- nextcloud_db

+ environment:

+ - VIRTUAL_HOST=~^nextcloud\..*\.xip\.io

+ - VIRTUAL_PORT=9321

nextcloud_db:

image: linuxserver/mariadb

diff --git a/.templates/nginx-proxy/directoryfix.sh b/.templates/nginx-proxy/directoryfix.sh

new file mode 100755

index 00000000..eea0c829

--- /dev/null

+++ b/.templates/nginx-proxy/directoryfix.sh

@@ -0,0 +1,27 @@

+#!/bin/bash

+if [ ! -d ./volumes/nginx-proxy/nginx-conf ]; then

+ sudo mkdir -p ./volumes/nginx-proxy/nginx-conf

+ sudo chown -R pi:pi ./volumes/nginx-proxy/nginx-conf

+fi

+

+if [ ! -d ./volumes/nginx-proxy/nginx-vhost ]; then

+ sudo mkdir -p ./volumes/nginx-proxy/nginx-vhost

+ sudo chown -R pi:pi ./volumes/nginx-proxy/nginx-vhost

+fi

+

+if [ ! -d ./volumes/nginx-proxy/html ]; then

+ sudo mkdir -p ./volumes/nginx-proxy/html

+ sudo chown -R pi:pi ./volumes/nginx-proxy/html

+fi

+

+if [ ! -d ./volumes/nginx-proxy/certs ]; then

+ sudo mkdir -p ./volumes/nginx-proxy/certs

+ sudo chown -R pi:pi ./volumes/nginx-proxy/certs

+fi

+

+if [ ! -d ./volumes/nginx-proxy/log ]; then

+ sudo mkdir -p ./volumes/nginx-proxy/log

+ sudo chown -R pi:pi ./volumes/nginx-proxy/log

+fi

+

+cp ./services/nginx-proxy/nginx.tmpl ./volumes/nginx-proxy

diff --git a/.templates/nginx-proxy/nginx.tmpl b/.templates/nginx-proxy/nginx.tmpl

new file mode 100755

index 00000000..8b73280f

--- /dev/null

+++ b/.templates/nginx-proxy/nginx.tmpl

@@ -0,0 +1,402 @@

+{{ $CurrentContainer := where $ "ID" .Docker.CurrentContainerID | first }}

+

+{{ $external_http_port := coalesce $.Env.HTTP_PORT "80" }}

+{{ $external_https_port := coalesce $.Env.HTTPS_PORT "443" }}

+

+{{ define "upstream" }}

+ {{ if .Address }}

+ {{/* If we got the containers from swarm and this container's port is published to host, use host IP:PORT */}}

+ {{ if and .Container.Node.ID .Address.HostPort }}

+ # {{ .Container.Node.Name }}/{{ .Container.Name }}

+ server {{ .Container.Node.Address.IP }}:{{ .Address.HostPort }};

+ {{/* If there is no swarm node or the port is not published on host, use container's IP:PORT */}}

+ {{ else if .Network }}

+ # {{ .Container.Name }}

+ server {{ .Network.IP }}:{{ .Address.Port }};

+ {{ end }}

+ {{ else if .Network }}

+ # {{ .Container.Name }}

+ {{ if .Network.IP }}

+ server {{ .Network.IP }} down;

+ {{ else }}

+ server 127.0.0.1 down;

+ {{ end }}

+ {{ end }}

+

+{{ end }}

+

+{{ define "ssl_policy" }}

+ {{ if eq .ssl_policy "Mozilla-Modern" }}

+ ssl_protocols TLSv1.3;

+ {{/* nginx currently lacks ability to choose ciphers in TLS 1.3 in configuration, see https://trac.nginx.org/nginx/ticket/1529 */}}

+ {{/* a possible workaround is to modify /etc/ssl/openssl.cnf to change it globally (see https://trac.nginx.org/nginx/ticket/1529#comment:12 ) */}}

+ {{/* explicitly set nginx default value in order to allow single servers to override the global http value */}}

+ ssl_ciphers HIGH:!aNULL:!MD5;

+ ssl_prefer_server_ciphers off;

+ {{ else if eq .ssl_policy "Mozilla-Intermediate" }}

+ ssl_protocols TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384';

+ ssl_prefer_server_ciphers off;

+ {{ else if eq .ssl_policy "Mozilla-Old" }}

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:DHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA:ECDHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES256-SHA256:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:DES-CBC3-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-TLS-1-2-2017-01" }}

+ ssl_protocols TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:AES128-GCM-SHA256:AES128-SHA256:AES256-GCM-SHA384:AES256-SHA256';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-TLS-1-1-2017-01" }}

+ ssl_protocols TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-2016-08" }}

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-2015-05" }}

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DES-CBC3-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-2015-03" }}

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DHE-DSS-AES128-SHA:DES-CBC3-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ else if eq .ssl_policy "AWS-2015-02" }}

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

+ ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DHE-DSS-AES128-SHA';

+ ssl_prefer_server_ciphers on;

+ {{ end }}

+{{ end }}

+

+# If we receive X-Forwarded-Proto, pass it through; otherwise, pass along the

+# scheme used to connect to this server

+map $http_x_forwarded_proto $proxy_x_forwarded_proto {

+ default $http_x_forwarded_proto;

+ '' $scheme;

+}

+

+# If we receive X-Forwarded-Port, pass it through; otherwise, pass along the

+# server port the client connected to

+map $http_x_forwarded_port $proxy_x_forwarded_port {

+ default $http_x_forwarded_port;

+ '' $server_port;

+}

+

+# If we receive Upgrade, set Connection to "upgrade"; otherwise, delete any

+# Connection header that may have been passed to this server

+map $http_upgrade $proxy_connection {

+ default upgrade;

+ '' close;

+}

+

+# Apply fix for very long server names

+server_names_hash_bucket_size 128;

+

+# Default dhparam

+{{ if (exists "/etc/nginx/dhparam/dhparam.pem") }}

+ssl_dhparam /etc/nginx/dhparam/dhparam.pem;

+{{ end }}

+

+# Set appropriate X-Forwarded-Ssl header

+map $scheme $proxy_x_forwarded_ssl {

+ default off;

+ https on;

+}

+

+gzip_types text/plain text/css application/javascript application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript;

+

+log_format vhost '$host $remote_addr - $remote_user [$time_local] '

+ '"$request" $status $body_bytes_sent '

+ '"$http_referer" "$http_user_agent"';

+

+access_log off;

+

+{{/* Get the SSL_POLICY defined by this container, falling back to "Mozilla-Intermediate" */}}

+{{ $ssl_policy := or ($.Env.SSL_POLICY) "Mozilla-Intermediate" }}

+{{ template "ssl_policy" (dict "ssl_policy" $ssl_policy) }}

+

+{{ if $.Env.RESOLVERS }}

+resolver {{ $.Env.RESOLVERS }};

+{{ end }}

+

+{{ if (exists "/etc/nginx/proxy.conf") }}

+include /etc/nginx/proxy.conf;

+{{ else }}

+# HTTP 1.1 support

+proxy_http_version 1.1;

+proxy_buffering off;

+proxy_set_header Host $http_host;

+proxy_set_header Upgrade $http_upgrade;

+proxy_set_header Connection $proxy_connection;

+proxy_set_header X-Real-IP $remote_addr;

+proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

+proxy_set_header X-Forwarded-Proto $proxy_x_forwarded_proto;

+proxy_set_header X-Forwarded-Ssl $proxy_x_forwarded_ssl;

+proxy_set_header X-Forwarded-Port $proxy_x_forwarded_port;

+

+# Mitigate httpoxy attack (see README for details)

+proxy_set_header Proxy "";

+{{ end }}

+

+{{ $access_log := (or (and (not $.Env.DISABLE_ACCESS_LOGS) "access_log /var/log/nginx/access.log vhost;") "") }}

+

+{{ $enable_ipv6 := eq (or ($.Env.ENABLE_IPV6) "") "true" }}

+server {

+ server_name _; # This is just an invalid value which will never trigger on a real hostname.

+ listen {{ $external_http_port }};

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_http_port }};

+ {{ end }}

+ {{ $access_log }}

+ return 503;

+}

+

+{{ if (and (exists "/etc/nginx/certs/default.crt") (exists "/etc/nginx/certs/default.key")) }}

+server {

+ server_name _; # This is just an invalid value which will never trigger on a real hostname.

+ listen {{ $external_https_port }} ssl http2;

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_https_port }} ssl http2;

+ {{ end }}

+ {{ $access_log }}

+ return 503;

+

+ ssl_session_cache shared:SSL:50m;

+ ssl_session_tickets off;

+ ssl_certificate /etc/nginx/certs/default.crt;

+ ssl_certificate_key /etc/nginx/certs/default.key;

+}

+{{ end }}

+

+{{ range $host, $containers := groupByMulti $ "Env.VIRTUAL_HOST" "," }}

+

+{{ $host := trim $host }}

+{{ $is_regexp := hasPrefix "~" $host }}

+{{ $upstream_name := when $is_regexp (sha1 $host) $host }}

+

+# {{ $host }}

+upstream {{ $upstream_name }} {

+

+{{ range $container := $containers }}

+ {{ $addrLen := len $container.Addresses }}

+

+ {{ range $knownNetwork := $CurrentContainer.Networks }}

+ {{ range $containerNetwork := $container.Networks }}

+ {{ if (and (ne $containerNetwork.Name "ingress") (or (eq $knownNetwork.Name $containerNetwork.Name) (eq $knownNetwork.Name "host"))) }}

+ ## Can be connected with "{{ $containerNetwork.Name }}" network

+

+ {{/* If only 1 port exposed, use that */}}

+ {{ if eq $addrLen 1 }}

+ {{ $address := index $container.Addresses 0 }}

+ {{ template "upstream" (dict "Container" $container "Address" $address "Network" $containerNetwork) }}

+ {{/* If more than one port exposed, use the one matching VIRTUAL_PORT env var, falling back to standard web port 80 */}}

+ {{ else }}

+ {{ $port := coalesce $container.Env.VIRTUAL_PORT "80" }}

+ {{ $address := where $container.Addresses "Port" $port | first }}

+ {{ template "upstream" (dict "Container" $container "Address" $address "Network" $containerNetwork) }}

+ {{ end }}

+ {{ else }}

+ # Cannot connect to network of this container

+ server 127.0.0.1 down;

+ {{ end }}

+ {{ end }}

+ {{ end }}

+{{ end }}

+}

+

+{{ $default_host := or ($.Env.DEFAULT_HOST) "" }}

+{{ $default_server := index (dict $host "" $default_host "default_server") $host }}

+

+{{/* Get the VIRTUAL_PROTO defined by containers w/ the same vhost, falling back to "http" */}}

+{{ $proto := trim (or (first (groupByKeys $containers "Env.VIRTUAL_PROTO")) "http") }}

+

+{{/* Get the NETWORK_ACCESS defined by containers w/ the same vhost, falling back to "external" */}}

+{{ $network_tag := or (first (groupByKeys $containers "Env.NETWORK_ACCESS")) "external" }}

+

+{{/* Get the HTTPS_METHOD defined by containers w/ the same vhost, falling back to "redirect" */}}

+{{ $https_method := or (first (groupByKeys $containers "Env.HTTPS_METHOD")) "redirect" }}

+

+{{/* Get the SSL_POLICY defined by containers w/ the same vhost, falling back to empty string (use default) */}}

+{{ $ssl_policy := or (first (groupByKeys $containers "Env.SSL_POLICY")) "" }}

+

+{{/* Get the HSTS defined by containers w/ the same vhost, falling back to "max-age=31536000" */}}

+{{ $hsts := or (first (groupByKeys $containers "Env.HSTS")) "max-age=31536000" }}

+

+{{/* Get the VIRTUAL_ROOT defined by containers w/ the same vhost (used as the fastcgi root), falling back to "/var/www/public" */}}

+{{ $vhost_root := or (first (groupByKeys $containers "Env.VIRTUAL_ROOT")) "/var/www/public" }}

+

+

+{{/* Get the first cert name defined by containers w/ the same vhost */}}

+{{ $certName := (first (groupByKeys $containers "Env.CERT_NAME")) }}

+

+{{/* Get the best matching cert by name for the vhost. */}}

+{{ $vhostCert := (closest (dir "/etc/nginx/certs") (printf "%s.crt" $host))}}

+

+{{/* vhostCert is actually a filename so remove any suffixes since they are added later */}}

+{{ $vhostCert := trimSuffix ".crt" $vhostCert }}

+{{ $vhostCert := trimSuffix ".key" $vhostCert }}

+

+{{/* Use the cert specified on the container or fallback to the best vhost match */}}

+{{ $cert := (coalesce $certName $vhostCert) }}

+

+{{ $is_https := (and (ne $https_method "nohttps") (ne $cert "") (exists (printf "/etc/nginx/certs/%s.crt" $cert)) (exists (printf "/etc/nginx/certs/%s.key" $cert))) }}

+

+{{ if $is_https }}

+

+{{ if eq $https_method "redirect" }}

+server {

+ server_name {{ $host }};

+ listen {{ $external_http_port }} {{ $default_server }};

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_http_port }} {{ $default_server }};

+ {{ end }}

+ {{ $access_log }}

+

+ # Do not HTTPS redirect Let's Encrypt ACME challenge

+ location /.well-known/acme-challenge/ {

+ auth_basic off;

+ allow all;

+ root /usr/share/nginx/html;

+ try_files $uri =404;

+ break;

+ }

+

+ location / {

+ return 301 https://$host$request_uri;

+ }

+}

+{{ end }}

+

+server {

+ server_name {{ $host }};

+ listen {{ $external_https_port }} ssl http2 {{ $default_server }};

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_https_port }} ssl http2 {{ $default_server }};

+ {{ end }}

+ {{ $access_log }}

+

+ {{ if eq $network_tag "internal" }}

+ # Only allow traffic from internal clients

+ include /etc/nginx/network_internal.conf;

+ {{ end }}

+

+ {{ template "ssl_policy" (dict "ssl_policy" $ssl_policy) }}

+

+ ssl_session_timeout 5m;

+ ssl_session_cache shared:SSL:50m;

+ ssl_session_tickets off;

+

+ ssl_certificate /etc/nginx/certs/{{ (printf "%s.crt" $cert) }};

+ ssl_certificate_key /etc/nginx/certs/{{ (printf "%s.key" $cert) }};

+

+ {{ if (exists (printf "/etc/nginx/certs/%s.dhparam.pem" $cert)) }}

+ ssl_dhparam {{ printf "/etc/nginx/certs/%s.dhparam.pem" $cert }};

+ {{ end }}

+

+ {{ if (exists (printf "/etc/nginx/certs/%s.chain.pem" $cert)) }}

+ ssl_stapling on;

+ ssl_stapling_verify on;

+ ssl_trusted_certificate {{ printf "/etc/nginx/certs/%s.chain.pem" $cert }};

+ {{ end }}

+

+ {{ if (not (or (eq $https_method "noredirect") (eq $hsts "off"))) }}

+ add_header Strict-Transport-Security "{{ trim $hsts }}" always;

+ {{ end }}

+

+ {{ if (exists (printf "/etc/nginx/vhost.d/%s" $host)) }}

+ include {{ printf "/etc/nginx/vhost.d/%s" $host }};

+ {{ else if (exists "/etc/nginx/vhost.d/default") }}

+ include /etc/nginx/vhost.d/default;

+ {{ end }}

+

+ location / {

+ {{ if eq $proto "uwsgi" }}

+ include uwsgi_params;

+ uwsgi_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ else if eq $proto "fastcgi" }}

+ root {{ trim $vhost_root }};

+ include fastcgi_params;

+ fastcgi_pass {{ trim $upstream_name }};

+ {{ else if eq $proto "grpc" }}

+ grpc_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ else }}

+ proxy_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ end }}

+

+ {{ if (exists (printf "/etc/nginx/htpasswd/%s" $host)) }}

+ auth_basic "Restricted {{ $host }}";

+ auth_basic_user_file {{ (printf "/etc/nginx/htpasswd/%s" $host) }};

+ {{ end }}

+ {{ if (exists (printf "/etc/nginx/vhost.d/%s_location" $host)) }}

+ include {{ printf "/etc/nginx/vhost.d/%s_location" $host}};

+ {{ else if (exists "/etc/nginx/vhost.d/default_location") }}

+ include /etc/nginx/vhost.d/default_location;

+ {{ end }}

+ }

+}

+

+{{ end }}

+

+{{ if or (not $is_https) (eq $https_method "noredirect") }}

+

+server {

+ server_name {{ $host }};

+ listen {{ $external_http_port }} {{ $default_server }};

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_http_port }} {{ $default_server }};

+ {{ end }}

+ {{ $access_log }}

+

+ {{ if eq $network_tag "internal" }}

+ # Only allow traffic from internal clients

+ include /etc/nginx/network_internal.conf;

+ {{ end }}

+

+ {{ if (exists (printf "/etc/nginx/vhost.d/%s" $host)) }}

+ include {{ printf "/etc/nginx/vhost.d/%s" $host }};

+ {{ else if (exists "/etc/nginx/vhost.d/default") }}

+ include /etc/nginx/vhost.d/default;

+ {{ end }}

+

+ location / {

+ {{ if eq $proto "uwsgi" }}

+ include uwsgi_params;

+ uwsgi_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ else if eq $proto "fastcgi" }}

+ root {{ trim $vhost_root }};

+ include fastcgi_params;

+ fastcgi_pass {{ trim $upstream_name }};

+ {{ else if eq $proto "grpc" }}

+ grpc_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ else }}

+ proxy_pass {{ trim $proto }}://{{ trim $upstream_name }};

+ {{ end }}

+ {{ if (exists (printf "/etc/nginx/htpasswd/%s" $host)) }}

+ auth_basic "Restricted {{ $host }}";

+ auth_basic_user_file {{ (printf "/etc/nginx/htpasswd/%s" $host) }};

+ {{ end }}

+ {{ if (exists (printf "/etc/nginx/vhost.d/%s_location" $host)) }}

+ include {{ printf "/etc/nginx/vhost.d/%s_location" $host}};

+ {{ else if (exists "/etc/nginx/vhost.d/default_location") }}

+ include /etc/nginx/vhost.d/default_location;

+ {{ end }}

+ }

+}

+

+{{ if (and (not $is_https) (exists "/etc/nginx/certs/default.crt") (exists "/etc/nginx/certs/default.key")) }}

+server {

+ server_name {{ $host }};

+ listen {{ $external_https_port }} ssl http2 {{ $default_server }};

+ {{ if $enable_ipv6 }}

+ listen [::]:{{ $external_https_port }} ssl http2 {{ $default_server }};

+ {{ end }}

+ {{ $access_log }}

+ return 500;

+

+ ssl_certificate /etc/nginx/certs/default.crt;

+ ssl_certificate_key /etc/nginx/certs/default.key;

+}

+{{ end }}

+

+{{ end }}

+{{ end }}

\ No newline at end of file

diff --git a/.templates/nginx-proxy/service.yml b/.templates/nginx-proxy/service.yml

new file mode 100755

index 00000000..afc0b20e

--- /dev/null

+++ b/.templates/nginx-proxy/service.yml

@@ -0,0 +1,49 @@

+ # As described in https://github.com/nginx-proxy/nginx-proxy, we use separate containers.

+ # The HTTPS implementation is documented here: https://medium.com/@francoisromain/host-multiple-websites-with-https-inside-docker-containers-on-a-single-server-18467484ab95

+ # We use xip.io to create subdomains for an IP address, so the nodered service can be accessed at

+ # nodered.X.X.X.X.xip.io, where X.X.X.X is the IP address of IOTstack. Any other domain can be used; in that case, please

+ # replace the VIRTUAL_HOST env variable of the given instance with the corresponding value.

+

+ nginx-proxy:

+ image: nginx

+ container_name: nginx-proxy

+ network_mode: host

+ restart: unless-stopped # Restart the container unless it was explicitly stopped

+ ports:

+ - "80:80" # Port mappings in format host:container

+ - "443:443"

+ labels:

+ - "com.github.jrcs.letsencrypt_nginx_proxy_companion.nginx_proxy" # Label needed for Let's Encrypt companion container

+ volumes: # Volumes needed for the container to configure proxies and access certificates generated by the Let's Encrypt companion container

+ - "./volumes/nginx-proxy/nginx-conf:/etc/nginx/conf.d"

+ - "./volumes/nginx-proxy/nginx-vhost:/etc/nginx/vhost.d"

+ - "./volumes/nginx-proxy/html:/usr/share/nginx/html"

+ - "./volumes/nginx-proxy/certs:/etc/nginx/certs:ro"

+ - "./volumes/nginx-proxy/log:/var/log/nginx"

+

+ nginx-gen:

+ image: ajoubert/docker-gen-arm

+ command: -notify-sighup nginx-proxy -watch -wait 5s:30s /etc/docker-gen/templates/nginx.tmpl /etc/nginx/conf.d/default.conf

+ container_name: nginx-gen

+ restart: unless-stopped

+ volumes:

+ - /var/run/docker.sock:/tmp/docker.sock:ro

+ - "./volumes/nginx-proxy/nginx.tmpl:/etc/docker-gen/templates/nginx.tmpl:ro"

+ - "./volumes/nginx-proxy/nginx-conf:/etc/nginx/conf.d"

+ - "./volumes/nginx-proxy/nginx-vhost:/etc/nginx/vhost.d"

+ - "./volumes/nginx-proxy/html:/usr/share/nginx/html"

+ - "./volumes/nginx-proxy/certs:/etc/nginx/certs:ro"

+

+ nginx-letsencrypt:

+ image: budry/jrcs-letsencrypt-nginx-proxy-companion-arm

+ container_name: nginx-letsencrypt

+ restart: unless-stopped

+ volumes:

+ - /var/run/docker.sock:/var/run/docker.sock:ro

+ - "./volumes/nginx-proxy/nginx-conf:/etc/nginx/conf.d"

+ - "./volumes/nginx-proxy/nginx-vhost:/etc/nginx/vhost.d"

+ - "./volumes/nginx-proxy/html:/usr/share/nginx/html"

+ - "./volumes/nginx-proxy/certs:/etc/nginx/certs:rw"

+ environment:

+ NGINX_DOCKER_GEN_CONTAINER: "nginx-gen"

+ NGINX_PROXY_CONTAINER: "nginx-proxy"

diff --git a/.templates/nodered/service.yml b/.templates/nodered/service.yml

old mode 100644

new mode 100755

index ca5acad6..48e7618c

--- a/.templates/nodered/service.yml

+++ b/.templates/nodered/service.yml

@@ -5,6 +5,9 @@

user: "0"

privileged: true

env_file: ./services/nodered/nodered.env

+ environment:

+ - VIRTUAL_HOST=~^nodered\..*\.xip\.io

+ - VIRTUAL_PORT=1880

ports:

- 1880:1880

volumes:

diff --git a/.templates/openhab/service.yml b/.templates/openhab/service.yml

old mode 100644

new mode 100755

index 128cbc14..9aeea9db

--- a/.templates/openhab/service.yml

+++ b/.templates/openhab/service.yml

@@ -1,8 +1,8 @@

openhab:

- image: "openhab/openhab:2.4.0"

+ image: "openhab/openhab:2.5.3"

container_name: openhab

restart: unless-stopped

- network_mode: host

+# network_mode: host

# cap_add:

# - NET_ADMIN

# - NET_RAW

@@ -16,6 +16,10 @@

OPENHAB_HTTP_PORT: "8080"

OPENHAB_HTTPS_PORT: "8443"

EXTRA_JAVA_OPTS: "-Duser.timezone=Europe/Berlin"

+ environment:

+ - VIRTUAL_HOST=~^openhab\..*\.xip\.io

+ - VIRTUAL_PORT=8080

+

# # The command node is very important. It overrides

# # the "gosu openhab tini -s ./start.sh" command from Dockerfile and runs as root!

# command: "tini -s ./start.sh server"

diff --git a/.templates/pihole/service.yml b/.templates/pihole/service.yml

old mode 100644

new mode 100755

index b9c1eb3b..09acc594

--- a/.templates/pihole/service.yml

+++ b/.templates/pihole/service.yml

@@ -9,6 +9,9 @@

#- "443:443/tcp"

env_file:

- ./services/pihole/pihole.env

+ environment:

+ - VIRTUAL_HOST=~^pihole\..*\.xip\.io

+ - VIRTUAL_PORT=8089

volumes:

- ./volumes/pihole/etc-pihole/:/etc/pihole/

- ./volumes/pihole/etc-dnsmasq.d/:/etc/dnsmasq.d/

diff --git a/.templates/portainer/service.yml b/.templates/portainer/service.yml

old mode 100644

new mode 100755

index b7bd194a..68416d2e

--- a/.templates/portainer/service.yml

+++ b/.templates/portainer/service.yml

@@ -4,6 +4,9 @@

restart: unless-stopped

ports:

- 9000:9000

+ environment:

+ - VIRTUAL_HOST=~^portainer\..*\.xip\.io

+ - VIRTUAL_PORT=9000

volumes:

- /var/run/docker.sock:/var/run/docker.sock

- ./volumes/portainer/data:/data

diff --git a/.templates/tasmoadmin/service.yml b/.templates/tasmoadmin/service.yml

old mode 100644

new mode 100755

index 8d26348a..fed96ed3

--- a/.templates/tasmoadmin/service.yml

+++ b/.templates/tasmoadmin/service.yml

@@ -6,4 +6,7 @@

- "8088:80"

volumes:

- ./volumes/tasmoadmin/data:/data

+ environment:

+ - VIRTUAL_HOST=~^tasmoadmin\..*\.xip\.io

+ - VIRTUAL_PORT=8088

diff --git a/.templates/webthings_gateway/service.yml b/.templates/webthings_gateway/service.yml

old mode 100644

new mode 100755

index 9bba05b7..a0c07ad0

--- a/.templates/webthings_gateway/service.yml

+++ b/.templates/webthings_gateway/service.yml

@@ -7,6 +7,9 @@

# - 4443:4443

#devices:

# - /dev/ttyACM0:/dev/ttyACM0

+ #environment:

+ # - VIRTUAL_HOST=~^webthings\..*\.xip\.io

+ # - VIRTUAL_PORT=8080

volumes:

- ./volumes/webthings_gateway/share:/home/node/.mozilla-iot

diff --git a/README.md b/README.md

index b59ba57e..ae8fefe0 100644

--- a/README.md

+++ b/README.md

@@ -1,198 +1,35 @@

-# IOTStack

+# IOT Stack

+IOTstack is a builder for docker-compose to easily make and maintain IoT stacks on the Raspberry Pi.

-IOTstack is a builder for docker-compose to easily make and maintain IoT stacks on the Raspberry Pi

+## Documentation for the project:

-## Announcements

+https://sensorsiot.github.io/IOTstack/

-The bulk of the README has moved to the Wiki. Please check it out [here](https://github.com/gcgarner/IOTstack/wiki)

+## Video

+https://youtu.be/a6mjt8tWUws

-* 2019-12-19 Added python container, tweaked update script

-* 2019-12-12 modified zigbee2mqtt template file

-* 2019-12-12 Added Function to add custom containers to the stack

-* 2019-12-12 PR cmskedgell: Added Homebridge

-* 2019-12-12 PR 877dev: Added trimming of online backups

-* 2019-12-03 BUGFIX Mosquitto: Fixed issue where mosquitto failed to start as a result of 11-28 change

-* 2019-12-03 Added terminal for postgres, temporarily removed setfacl from menu

-* 2019-11-28 PR @stfnhmplr added diyHue

-* 2019-11-28 Fixed update notification on menu

-* 2019-11-28 Fixed mosquitto logs and database not mapping correctly to volumes. Pull new template to fix

-* 2019-11-28 added the option to disable swapfile by setting swappiness to 0

-* 2019-11-28 PR @stfnhmplr fixed incorrect shegang on MariaDB terminal.sh

-* 2019-11-28 Added native install for RPIEasy

-* 2019-11-27 Additions: NextCloud, MariaDB, MotionEye, Mozilla Webthings, blynk-server (fixed issue with selection.txt)

-* 2019-11-22 BUGFIX selection.txt failed on fresh install, added pushd IOTstack to menu to ensure correct path

-* 2019-11-22 Added notification into menu if project update is available

-* 2019-11-20 BUGFIX influxdb backup: Placing docker_backup in crontab caused influxdb backup not to execute correctly

-* 2019-11-20 BUGFIX disable swap: swapfile recreation on reboot fixed. Re-run from menu to fix.

-* Node-RED: serial port. New template adds privileged which allows acces to serial devices

-* EspurinoHub: is available for testing see wiki entry

+## Installation

+1. On the (RPi) lite image you will need to install git first:

-***

-

-## Highlighted topics

-

-* [Bluetooth and Node-RED](https://github.com/gcgarner/IOTstack/wiki/Node-RED#using-bluetooth)

-* [Saving files to disk inside containers](https://github.com/gcgarner/IOTstack/wiki/Node-RED#sharing-files-between-node-red-and-the-host)

-* [Updating the Project](https://github.com/gcgarner/IOTstack/wiki/Updating-the-Project)

-

- ***

-

-## Coming soon

-

-* reverse proxy is now next on the list, I cant keep up with the ports

-* Detection of arhcitecture for seperate stack options for amd64, armhf, i386

-* autocleanup of backups on cloud

-* Gitea (in testing branch)

-* OwnCloud

-

-***

-

-## About

-

-Docker stack for getting started on IoT on the Raspberry Pi.

-

-This Docker stack consists of:

-

-* Node-RED

-* Grafana

-* InfluxDB

-* Postgres

-* Mosquitto mqtt

-* Portainer

-* Adminer

-* openHAB

-* Home Assistant (HASSIO)

-* zigbee2mqtt

-* Pi-Hole

-* TasmoAdmin (parial wiki)

-* Plex media server

-* Telegraf (wiki coming soon)

-* RTL_433

-* EspruinoHub (testing)

-* MotionEye

-* MariaDB

-* Plex

-* Homebridge

-

-In addition, there is a write-up and some scripts to get a dynamic DNS via duckdns and VPN up and running.

-

-Firstly what is docker? The correct question is "what are containers?". Docker is just one of the utilities to run a container.

-

-A Container can be thought of as ultra-minimal virtual machines, they are a collection of binaries that run in a sandbox environment. You download a preconfigured base image and create a new container. Only the differences between the base and your "VM" are stored.

-Containers don't have [GUI](https://en.wikipedia.org/wiki/Graphical_user_interface)s so generally the way you interact with them is via web services or you can launch into a terminal.

-One of the major advantages is that the image comes mostly preconfigured.

-

-There are pro's and cons for using native installs vs containers. For me, one of the best parts of containers is that it doesn't "clutter" your device. If you don't need Postgres anymore then just stop and delete the container. It will be like the container was never there.

-

-The container will fail if you try to run the docker and native vesions as the same time. It is best to install this on a fresh system.

-

-For those looking for a script that installs native applications check out [Peter Scargill's script](https://tech.scargill.net/the-script/)

-

-## Tested platform

-

-Raspberry Pi 3B and 4B Raspbian (Buster)

-

-### Older Pi's

-

-Docker will not run on a PiZero or A model 1 because of the CPU. It has not been tested on a Model 2. You can still use Peter Scargill's [script](https://tech.scargill.net/the-script/)

-

-## Running under a virtual machine

-

-For those wanting to test out the script in a Virtual Machine before installing on their Pi there are some limitations. The script is designed to work with Debian based distributions. Not all the container have x86_64 images. For example Portainer does not and will give an error when you try and start the stack. Please see the pinned issue [#29](https://github.com/gcgarner/IOTstack/issues/29), there is more info there.

-

-## Feature Requests

-

-Please direct all feature requests to [Discord](https://discord.gg/W45tD83)

-

-## Youtube reference

-

-This repo was originally inspired by Andreas Spiess's video on using some of these tools. Some containers have been added to extend its functionality.

-

-[YouTube video](https://www.youtube.com/watch?v=JdV4x925au0): This is an alternative approach to the setup. Be sure to watch the video for the instructions. Just note that the network addresses are different, see the wiki under Docker Networks.

-

-### YouTube guide

-

-@peyanski (Kiril) made a YouTube video on getting started using the project, check it out [here](https://youtu.be/5JMNHuHv134)

-

-## Download the project

-

-1.On the lite image you will need to install git first

-

-```bash

-sudo apt-get install git

+```

+sudo apt-get install git -y

```

-2.Download the repository with:

-

-```bash

-git clone https://github.com/gcgarner/IOTstack.git ~/IOTstack

+2. Download the repository with:

+```

+git clone https://github.com/SensorsIot/IOTstack.git ~/IOTstack

```

Due to some script restraints, this project needs to be stored in ~/IOTstack

-3.To enter the directory run:

-

-```bash

-cd ~/IOTstack

+3. Enter the directory and run the menu for installation options:

+```

+cd ~/IOTstack && bash ./menu.sh

```

-## The Menu

-

-I've added a menu to make things easier. It is good to familiarise yourself with the installation process.

-The menu can be used to install docker and build the docker-compose.yml file necessary for starting the stack. It also runs a few common commands. I do recommend you start to learn the docker and docker-compose commands if you plan on using docker in the long run. I've added several helper scripts, have a look inside.

-

-Navigate to the project folder and run `./menu.sh`

-

-### Installing from the menu

-

-Select the first option and follow the prompts

-

-### Build the docker-compose file

-

-docker-compose uses the `docker-compose.yml` file to configure all the services. Run through the menu to select the options you want to install.

-

-### Docker commands

-

-This menu executes shell scripts in the root of the project. It is not necessary to run them from the menu. Open up the shell script files to see what is inside and what they do.

-

-### Miscellaneous commands

-

-Some helpful commands have been added like disabling swap.

-

-## Running Docker commands

-

-From this point on make sure you are executing the commands from inside the project folder. Docker-compose commands need to be run from the folder where the docker-compose.yml is located. If you want to move the folder make sure you move the whole project folder.

-

-## Starting and Stopping containers

-

-to start the stack navigate to the project folder containing the docker-compose.yml file

-

-To start the stack run:

-`docker-compose up -d` or `./scripts/start.sh`

-

-To stop:

-`docker-compose stop`

-

-The first time you run 'start' the stack docker will download all the images for the web. Depending on how many containers you selected and your internet speed this can take a long while.

-

-The `docker-compose down` command stops the containers then deletes them.

-

-## Persistent data

-

-Docker allows you to map folders inside your containers to folders on the disk. This is done with the "volume" key. There are two types of volumes. Modification to the container are reflected in the volume.

-

-## See Wiki for further info

-

-[Wiki](https://github.com/gcgarner/IOTstack/wiki)

-

-## Add to the project

-

-Feel free to add your comments on features or images that you think should be added.

-

-## Contributions

-

-If you use some of the tools in the project please consider donating or contributing on their projects. It doesn't have to be monetary, reporting bugs and PRs help improve the projects for everyone.

-

-### Thanks

+4. Install docker with the menu, then restart your system.

-@mrmx, @oscrx, @brianimmel, @Slyke, @AugustasV, @Paulf007, @affankingkhan, @877dev, @Paraphraser, @stfnhmplr, @peyanski, @cmskedgell

+5. Run the menu again to select your build options, then start docker-compose with:

+```

+docker-compose up -d

+```

diff --git a/docs/Accessing-your-Device-from-the-internet.md b/docs/Accessing-your-Device-from-the-internet.md

new file mode 100644

index 00000000..386b3da5

--- /dev/null

+++ b/docs/Accessing-your-Device-from-the-internet.md

@@ -0,0 +1,44 @@

+# Accessing your device from the internet

+The challenge most of us face with remotely accessing our home network is that we don't have a static IP. From time to time the IP that your ISP assigns to you changes and it's difficult to keep up. Fortunately, there is a solution: dynamic DNS. The section below shows you how to set up an easy-to-remember address that follows your public IP whenever it changes.

+

+Secondly, how do you get into your home network? Your router has a firewall that is designed to keep the rest of the internet out of your network to protect you. Here we install a VPN and configure the firewall to only allow very secure VPN traffic in.

+

+## DuckDNS

+If you want a dynamic DNS name that points to your public IP, I added a helper script.

+Register with duckdns.org and create a subdomain name. Then open the script with `nano ~/IOTstack/duck/duck.sh` and add your domain and token:

+

+```bash

+DOMAINS="YOUR_DOMAINS"

+DUCKDNS_TOKEN="YOUR_DUCKDNS_TOKEN"

+```

+

+First test the script to make sure it works: run `sudo ~/IOTstack/duck/duck.sh`, then `cat /var/log/duck.log`. If you get KO then something has gone wrong and you should check your settings in the script. If you get OK then you can do the next step.

+

+Create a cron job by running the following command: `crontab -e`

+

+You will be asked to choose an editor; option 1 for nano should be fine.

+Paste the following in the editor: `*/5 * * * * sudo ~/IOTstack/duck/duck.sh >/dev/null 2>&1`, then press ctrl+s to save and ctrl+x to exit.

+

+Your Public IP should be updated every five minutes

+

+## PiVPN

+pimylifeup.com has an excellent tutorial on how to install [PiVPN](https://pimylifeup.com/raspberry-pi-vpn-server/)

+

+In points 17 and 18 they mention using noip for their dynamic DNS. Here you can use the DuckDNS address if you created one.

+

+Don't forget you need to open port 1194 on your firewall. Most people won't be able to VPN from inside their network, so download the OpenVPN client for your mobile phone and try to connect over mobile data. ([More info.](https://en.wikipedia.org/wiki/Hairpinning))

+

+Once you activate your VPN (from your phone/laptop/work computer) you will effectively be on your home network and you can access your devices as if you were on the wifi at home.

+

+I personally use the VPN any time I'm on public wifi, so that all my traffic is secure.

+

+## Zerotier

+https://www.zerotier.com/

+

+Zerotier is an alternative to PiVPN that doesn't require port forwarding on your router. It does however require registering for their free tier service [here](https://my.zerotier.com/login).

+

+Kevin Zhang has written a how-to guide [here](https://iamkelv.in/blog/2017/06/zerotier.html). Just note that the install link is outdated and should be:

+```

+curl -s 'https://raw.githubusercontent.com/zerotier/ZeroTierOne/master/doc/contact%40zerotier.com.gpg' | gpg --import && \

+if z=$(curl -s 'https://install.zerotier.com/' | gpg); then echo "$z" | sudo bash; fi

+```

diff --git a/docs/Adminer.md b/docs/Adminer.md

new file mode 100644

index 00000000..2df6a824

--- /dev/null

+++ b/docs/Adminer.md

@@ -0,0 +1,12 @@

+# Adminer

+## References

+- [Docker](https://hub.docker.com/_/adminer)

+- [Website](https://www.adminer.org/)

+

+## About

+

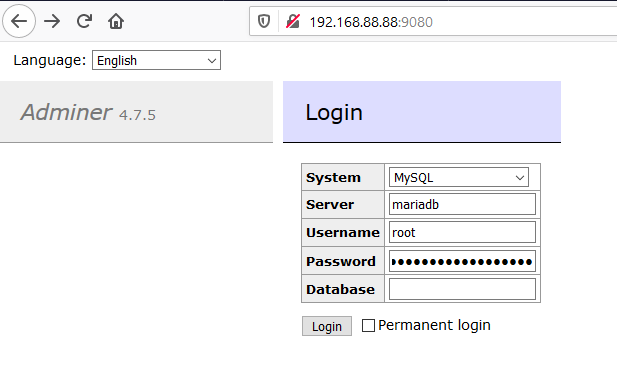

+This is a nice tool for managing databases. The web interface has moved to port 9080. There was an issue where openHAB and Adminer were using the same ports. If you have a port conflict, edit the docker-compose.yml and under the adminer service change the line to read:

+```

+ ports:

+ - 9080:8080

+```

diff --git a/docs/Backups.md b/docs/Backups.md

new file mode 100644

index 00000000..ddb657c9

--- /dev/null

+++ b/docs/Backups.md

@@ -0,0 +1,113 @@

+# Backups

+Because containers can easily be rebuilt from docker hub we only have to back up the data in the "volumes" directory.

+

+## Cloud Backups

+### Dropbox-Uploader

+This is a great utility to easily upload data from your Pi to the cloud. https://magpi.raspberrypi.org/articles/dropbox-raspberry-pi. It can be installed from the Menu under Backups.

+### rclone (Google Drive)

+This is a service to upload to Google Drive. The config is described [here](https://medium.com/@artur.klauser/mounting-google-drive-on-raspberry-pi-f5002c7095c2). Install it from the menu then follow the link for these sections:

+* Getting a Google Drive Client ID

+* Setting up the Rclone Configuration

+

+When naming the service in `rclone config` make sure to call it "gdrive".

+

+**The Auto-mounting instructions for the drive in the link don't work on Raspbian**. Auto-mounting of the drive isn't necessary for the backup script.

+

+If you want your Google Drive to mount on every boot then follow the instructions at the bottom of the wiki page

+

+

+## Influxdb

+`~/IOTstack/scripts/backup_influxdb.sh` does a database snapshot and stores it in ~/IOTstack/backups/influxdb/db . This can be restored with the help of a script (that I still need to write).

+

+## Docker backups

+The script `~/IOTstack/scripts/docker_backup.sh` performs the master backup for the stack.

+

+This script can be placed in a cron job to backup on a schedule.

+Edit the crontab with `crontab -e`

+Then add `0 23 * * * ~/IOTstack/scripts/docker_backup.sh >/dev/null 2>&1` to have a backup every night at 23:00.

+

+This script cheats by copying the volume folder live. The correct way would be to stop the stack first then copy the volumes and restart. The cheating method shouldn't be a problem unless you have fast changing data like in influxdb. This is why the script makes a database export of influxdb and ignores its volume.

+

+### Cloud integration

+The docker_backup.sh script no longer requires modification to enable cloud backups. It now tests for the presence of an enable file in the backups folder.

+#### Dropbox-Uploader

+The backup tests for a file called `~/IOTstack/backups/dropbox`, if it is present it will upload to dropbox. To disable dropbox upload delete the file. To enable run `sudo touch ~/IOTstack/backups/dropbox`

+#### rclone

+The backup tests for a file called `~/IOTstack/backups/rclone`, if it is present it will upload to google drive. To disable rclone upload delete the file. To enable run `sudo touch ~/IOTstack/backups/rclone`

+

+#### Pruning online backups

+@877dev has added functionality to prune both local and cloud backups. For dropbox make sure you don't have any files that contain spaces in your backup directory as the script cannot handle them at this time.

+

+### Restoring a backup

+The "volumes" directory contains all the persistent data necessary to recreate the container. The docker-compose.yml and the environment files are optional as they can be regenerated with the menu. Simply copy the volumes directory into the IOTstack directory, rebuild the stack and start.

+

+## Added your Dropbox token incorrectly or aborted the install at the token screen

+

+Make sure you are running the latest version of the project [link](https://github.com/gcgarner/IOTstack/wiki/Updating-the-Project).

+

+Run `~/Dropbox-Uploader/dropbox_uploader.sh unlink`. If you have added a key, it will prompt you to confirm its removal. If no key was found, it will ask you for a new key.

+

+Confirm by running `~/Dropbox-Uploader/dropbox_uploader.sh`. It should ask you for your key if you removed it, or show you the following prompt if it has the key:

+

+```

+ $ ~/Dropbox-Uploader/dropbox_uploader.sh

+Dropbox Uploader v1.0

+Andrea Fabrizi - andrea.fabrizi@gmail.com

+

+Usage: /home/pi/Dropbox-Uploader/dropbox_uploader.sh [PARAMETERS] COMMAND...

+

+Commands:

+ upload <LOCAL_FILE/DIR ...> <REMOTE_FILE/DIR>

+ download <REMOTE_FILE/DIR> [LOCAL_FILE/DIR]

+ delete <REMOTE_FILE/DIR>

+ move <REMOTE_FILE/DIR> <REMOTE_FILE/DIR>

+ copy <REMOTE_FILE/DIR> <REMOTE_FILE/DIR>

+ mkdir <REMOTE_DIR>

+....

+

+```

+

+Ensure you **are not** running as sudo, as this will store your API token in the /root directory as `/root/.dropbox_uploader`

+

+If you ran the command with sudo then remove the old token file, if it exists, with either `sudo rm /root/.dropbox_uploader` or `sudo ~/Dropbox-Uploader/dropbox_uploader.sh unlink`

+

+## Auto-mount Gdrive with rclone

+

+To enable rclone to mount on boot you will need to make a user service. Run the following commands

+

+```bash

+mkdir -p ~/.config/systemd/user

+nano ~/.config/systemd/user/gdrive.service

+```

+Copy the following code into the editor, save and exit

+

+```

+[Unit]

+Description=rclone: Remote FUSE filesystem for cloud storage

+Documentation=man:rclone(1)

+

+[Service]

+Type=notify

+ExecStartPre=/bin/mkdir -p %h/mnt/gdrive

+ExecStart= \

+ /usr/bin/rclone mount \

+ --fast-list \

+ --vfs-cache-mode writes \

+ gdrive: %h/mnt/gdrive

+

+[Install]

+WantedBy=default.target

+```

+Enable it to start on boot with (no sudo):

+```bash

+systemctl --user enable gdrive.service

+```

+Start it with:

+```bash

+systemctl --user start gdrive.service

+```

+If you no longer want it to start on boot then type:

+```bash

+systemctl --user disable gdrive.service

+```

+

diff --git a/docs/Blynk_server.md b/docs/Blynk_server.md

new file mode 100644

index 00000000..e255d518

--- /dev/null

+++ b/docs/Blynk_server.md

@@ -0,0 +1,82 @@

+# Blynk server

+This is a custom implementation of Blynk Server

+

+```yml

+ blynk_server:

+ build: ./services/blynk_server/.

+ container_name: blynk_server

+ restart: unless-stopped

+ ports:

+ - 8180:8080

+ - 8441:8441

+ - 9443:9443

+ volumes:

+ - ./volumes/blynk_server/data:/data

+```

+

+To connect to the admin interface navigate to `<your Pi's IP>:9443/admin`

+

+I don't know anything about this service so you will need to read through the setup on the [Project Homepage](https://github.com/blynkkk/blynk-server)

+

+When setting up the application on your mobile be sure to select custom setup [here](https://github.com/blynkkk/blynk-server#app-and-sketch-changes)

+

+Writeup From @877dev

+

+## Getting started

+Log into admin panel at https://youripaddress:9443/admin

+(Use your Pi's IP address, and ignore Chrome warning).

+

+Default credentials:

+user:admin@blynk.cc

+pass:admin

+

+## Change username and password

+Click on Users > "email address" and edit email, name and password.

+Save changes

+Restarting the container using Portainer may be required to take effect.

+

+## Setup gmail

+Optional step, useful for getting the auth token emailed to you.

+(To be added once confirmed working....)

+

+## iOS/Android app setup

+Log in to the app as per the photos [HERE](https://github.com/blynkkk/blynk-server#app-and-sketch-changes)

+Press "New Project"

+Give it a name, choose device "Raspberry Pi 3 B" so you have plenty of [virtual pins](http://help.blynk.cc/en/articles/512061-what-is-virtual-pins) available, and lastly select WiFi.

+Create the project and the [auth token](https://docs.blynk.cc/#getting-started-getting-started-with-the-blynk-app-4-auth-token) will be emailed to you (if email is configured). You can also find the token in the app under the phone app settings, or in the admin web interface by clicking Users > "email address" and scrolling down to the token.

+

+## Quick usage guide for app

+Press on the empty page, the widgets will appear from the right.

+Select your widget, let's say a button.

+It appears on the page, press on it to configure.

+Give it a name and colour if you want.

+Press on PIN, and select virtual. Choose any pin, e.g. V0

+Press ok.

+To start the project running, press top right Play button.

+You will get an offline message, because no devices are connected to your project via the token.

+Enter node red.....

+

+## Node red

+Install node-red-contrib-blynk-ws from the palette manager

+Drag a "write event" node into your flow, and connect to a debug node

+Configure the Blynk node for the first time:

+```URL: wss://youripaddress:9443/websockets``` more info [HERE](https://github.com/gablau/node-red-contrib-blynk-ws/blob/master/README.md#how-to-use)

+Enter your [auth token](https://docs.blynk.cc/#getting-started-getting-started-with-the-blynk-app-4-auth-token) from before and save/exit.

+When you deploy the flow, notice the app shows a connected message, as does the Blynk node.

+Press the button on the app, you will notice the payload is sent to the debug node.

+

+## What next?

+Further information and advanced setup:

+https://github.com/blynkkk/blynk-server

+

+Check the documentation:

+https://docs.blynk.cc/

+

+Visit the community forum pages:

+https://community.blynk.cc/

+

+Interesting post by Peter Knight on MQTT/Node Red flows:

+https://community.blynk.cc/t/my-home-automation-projects-built-with-mqtt-and-node-red/29045

+

+Some Blynk flow examples:

+https://github.com/877dev/Node-Red-flow-examples

\ No newline at end of file

diff --git a/docs/Custom.md b/docs/Custom.md

new file mode 100644

index 00000000..1d84b018

--- /dev/null

+++ b/docs/Custom.md

@@ -0,0 +1,14 @@

+# Custom container

+If you have a container that you want to stop and start with the stack you can now use the custom container option. You can use this for testing or in preparation for a Pull Request.

+

+You will need to create a directory for your container called `IOTstack/services/<container>`

+

+Inside that directory create a `service.yml` containing your service and configurations. Have a look at one of the other services for inspiration.

+

+Create a file called `IOTstack/services/custom.txt` and enter your container names, one per line

+

+Run the menu.sh and build the stack. After you have made the selection you will be asked if you want to add the custom containers.

+

+Now your container will be part of the docker-compose.yml file and will respond to commands such as `docker-compose up -d`.

+

+Docker creates volumes under root and your container may require different ownership on the volume directory. You can see an example of the workaround for this in the python template in `IOTstack/.templates/python/directoryfix.sh`

\ No newline at end of file

diff --git a/docs/Docker-commands.md b/docs/Docker-commands.md

new file mode 100644

index 00000000..6965c9c2

--- /dev/null

+++ b/docs/Docker-commands.md

@@ -0,0 +1,15 @@

+# Docker commands

+## Aliases

+

+I've added bash aliases for stopping and starting the stack. They can be installed in the docker commands menu. These commands no longer need to be executed from the IOTstack directory and can be executed in any directory

+

+```bash

+alias iotstack_up="docker-compose -f ~/IOTstack/docker-compose.yml up -d"

+alias iotstack_down="docker-compose -f ~/IOTstack/docker-compose.yml down"

+alias iotstack_start="docker-compose -f ~/IOTstack/docker-compose.yml start"

+alias iotstack_stop="docker-compose -f ~/IOTstack/docker-compose.yml stop"

+alias iotstack_update="docker-compose -f ~/IOTstack/docker-compose.yml pull"

+alias iotstack_build="docker-compose -f ~/IOTstack/docker-compose.yml build"

+```

+

+You can now type `iotstack_up`. The aliases even accept additional parameters, e.g. `iotstack_stop portainer`

diff --git a/docs/EspruinoHub.md b/docs/EspruinoHub.md

new file mode 100644

index 00000000..215a0de5

--- /dev/null

+++ b/docs/EspruinoHub.md

@@ -0,0 +1,12 @@

+# Espruinohub

+This is a testing container

+

+I tried it, however the container keeps restarting. From `docker logs espruinohub` I get "BLE Broken?", but it could just be that I don't have any BLE devices nearby.

+

+The web interface is on "{your_Pis_IP}:1888"

+

+See https://github.com/espruino/EspruinoHub#status--websocket-mqtt--espruino-web-ide for other details

+

+There were no recommendations for persistent data volumes, so `docker-compose down` may destroy all your configurations. Use `docker-compose stop` instead.

+

+Please let me know about your success or issues [here](https://github.com/gcgarner/IOTstack/issues/84)

\ No newline at end of file

diff --git a/docs/Getting-Started.md b/docs/Getting-Started.md

new file mode 100644

index 00000000..b04375ad

--- /dev/null

+++ b/docs/Getting-Started.md

@@ -0,0 +1,83 @@

+# Getting started

+## Download the project

+

+On the lite image you will need to install git first

+```

+sudo apt-get install git

+```

+Then download with

+```

+git clone https://github.com/gcgarner/IOTstack.git ~/IOTstack

+```

+Due to some script restraints, this project needs to be stored in ~/IOTstack

+

+To enter the directory run:

+```

+cd ~/IOTstack

+```

+## The Menu

+I've added a menu to make things easier. It is good however to familiarise yourself with how things are installed.

+The menu can be used to install docker and then build the docker-compose.yml file necessary for starting the stack, and it runs a few common commands. I do recommend you start to learn the docker and docker-compose commands if you plan on using docker in the long run. I've added several helper scripts, have a look inside.

+

+Navigate to the project folder and run `./menu.sh`

+

+### Installing from the menu

+Select the first option and follow the prompts

+

+### Build the docker-compose file

+docker-compose uses the `docker-compose.yml` file to configure all the services. Run through the menu to select the options you want to install.

+

+### Docker commands

+This menu executes shell scripts in the root of the project. It is not necessary to run them from the menu. Open up the shell script files to see what is inside and what they do

+

+### Miscellaneous commands

+Some helpful commands have been added like disabling swap

+

+## Running Docker commands

+From this point on make sure you are executing the commands from inside the project folder. Docker-compose commands need to be run from the folder where the docker-compose.yml is located. If you want to move the folder make sure you move the whole project folder.

+

+### Starting and Stopping containers

+To start the stack, navigate to the project folder containing the docker-compose.yml file

+

+To start the stack run:

+`docker-compose up -d` or `./scripts/start.sh`

+

+To stop:

+`docker-compose stop` stops without removing containers

+

+To remove the stack:

+`docker-compose down` stops containers, deletes them and removes the network

+

+The first time you start the stack, docker will download all the images from the web. Depending on how many containers you selected and your internet speed this can take a long while.

+

+### Persistent data

+Docker allows you to map folders inside your containers to folders on the disk. This is done with the "volume" key. There are two types of volumes. Any modification to the container is reflected in the volume.

+

+#### Sharing files between the Pi and containers

+Have a look at the wiki on how to share files between Node-RED and the Pi. [Wiki](https://github.com/gcgarner/IOTstack/wiki/Node-RED#sharing-files-between-node-red-and-the-host)

+

+### Updating the images

+If a new version of a container image is available on docker hub it can be updated by a pull command.

+

+Use the `docker-compose stop` command to stop the stack

+

+Pull the latest version from docker hub with one of the following commands

+

+`docker-compose pull` or the script `./scripts/update.sh`

+

+Start the new stack based on the updated images

+

+`docker-compose up -d`

+

+### Node-RED error after modifications to setup files

+The Node-RED image differs from the rest of the images in this project. It uses the "build" key. It uses a dockerfile for the setup to inject the nodes for pre-installation. If you get an error for Node-RED run `docker-compose build` then `docker-compose up -d`

+

+### Deleting containers, volumes and images

+

+`./prune-images.sh` will remove all images not associated with a container. If you run it while the stack is up it will ignore any in-use images. If you run this while your stack is down it will delete all images and you will have to redownload all images from scratch. This command can be helpful to reclaim disk space after updating your images, just make sure to run it while your stack is running so as not to delete the images in use. (Your data will still be safe in your volume mapping.)

+

+### Deleting folder volumes

+If you want to delete the influxdb data folder run the following command: `sudo rm -r volumes/influxdb/`. Only the data folder is deleted, leaving the env file intact. Review the docker-compose.yml file to see where the file volumes are stored.

+

+You can use git to delete all files and folders to return your folder to the freshly cloned state, AS IN YOU WILL LOSE ALL YOUR DATA.

+`sudo git clean -d -x -f` will return the working tree to its clean state. USE WITH CAUTION!

\ No newline at end of file

diff --git a/docs/Grafana.md b/docs/Grafana.md

new file mode 100644

index 00000000..8194f1c6

--- /dev/null

+++ b/docs/Grafana.md

@@ -0,0 +1,27 @@

+# Grafana

+## References

+- [Docker](https://hub.docker.com/r/grafana/grafana)

+- [Website](https://grafana.com/)

+

+## Security

+Grafana's default credentials are username "admin" and password "admin"; it will ask you to choose a new password on first login. Go to `<yourIP>:3000` in your web browser.

+

+## Overwriting grafana.ini settings

+

+The settings available in grafana.ini are listed [here](https://grafana.com/docs/installation/configuration/)

+

+To overwrite a setting edit the IOTstack/services/grafana/grafana.env file. The format is `GF_<SectionName>_<KeyName>`

+

+An example would be:

+```

+GF_PATHS_DATA=/var/lib/grafana

+GF_PATHS_LOGS=/var/log/grafana

+# [SERVER]

+GF_SERVER_ROOT_URL=http://localhost:3000/grafana

+GF_SERVER_SERVE_FROM_SUB_PATH=true

+# [SECURITY]

+GF_SECURITY_ADMIN_USER=admin

+GF_SECURITY_ADMIN_PASSWORD=admin

+```

+

+After the alterations run `docker-compose up -d` to pull them in

diff --git a/docs/Home-Assistant.md b/docs/Home-Assistant.md

new file mode 100644

index 00000000..40f4d679

--- /dev/null

+++ b/docs/Home-Assistant.md

@@ -0,0 +1,52 @@

+# Home assistant

+## References

+- [Docker](https://hub.docker.com/r/homeassistant/home-assistant/)

+- [Webpage](https://www.home-assistant.io/)

+

+Hass.io is a home automation platform running on Python 3. It is able to track and control all devices at home and offer a platform for automating control. Port binding is `8123`.

+Hass.io is exposed to your host's network in order to discover devices on your LAN. That means that it does not sit inside docker's network.

+

+## Menu installation

+Hass.io now has a separate installation in the menu. The old version was incorrect and should be removed. Be sure to update your project and install the correct version.

+

+You will be asked to select your device type during the installation. Hass.io is no longer dependent on the IOTstack; it has its own service for maintaining its uptime.

+

+## Installation

+The installation of Hass.io takes up to 20 minutes (depending on your internet connection). Refrain from restarting your Pi until it has come online and you are able to create a user account.

+

+## Removal

+

+To remove Hass.io you first need to stop the service that controls it. Run the following in the terminal:

+

+```bash

+sudo systemctl stop hassio-supervisor.service

+sudo systemctl disable hassio-supervisor.service

+```

+

+This should stop the main service, however there are two additional containers that still need to be addressed

+

+This will stop the service and disable it from starting on the next boot

+

+Next you need to stop the hassio_dns and hassio_supervisor containers

+

+```bash

+docker stop hassio_supervisor

+docker stop hassio_dns

+docker stop homeassistant

+```

+

+If you want to remove the containers

+

+```bash

+docker rm hassio_supervisor

+docker rm hassio_dns

+docker rm homeassistant

+```

+

+After rebooting, you should be able to reinstall.

+

+The stored files are located in `/usr/share/hassio`, which can be removed if you need to.

+

+Double check with `docker ps` to see if there are other hassio containers running. They can be stopped and removed in the same fashion as the DNS and supervisor containers.

+

+You can use Portainer to view what is running and clean up the unused images.

\ No newline at end of file

diff --git a/docs/Home.md b/docs/Home.md

new file mode 100644

index 00000000..dc8fdafc

--- /dev/null

+++ b/docs/Home.md

@@ -0,0 +1,71 @@

+# Wiki

+

+The README is moving to the Wiki. It's easier to add content and examples to the Wiki than to the README.md.

+

+* [Getting Started](https://github.com/SensorsIot/IOTstack/wiki/Getting-Started)

+* [Updating the project](https://github.com/SensorsIot/IOTstack/wiki/Updating-the-Project)

+* [How the script works](https://github.com/SensorsIot/IOTstack/wiki/How-the-script-works)

+* [Understanding Containers](https://github.com/SensorsIot/IOTstack/wiki/Understanding-Containers)

+

+***

+

+# Docker

+

+* [Commands](https://github.com/SensorsIot/IOTstack/wiki/Docker-commands)

+* [Docker Networks](https://github.com/SensorsIot/IOTstack/wiki/Networking)

+

+***

+

+# Containers

+* [Portainer](https://github.com/SensorsIot/IOTstack/wiki/Portainer)

+* [Portainer Agent](https://github.com/SensorsIot/IOTstack/wiki/Portainer-agent)

+* [Node-RED](https://github.com/SensorsIot/IOTstack/wiki/Node-RED)

+* [Grafana](https://github.com/SensorsIot/IOTstack/wiki/Grafana)

+* [Mosquitto](https://github.com/SensorsIot/IOTstack/wiki/Mosquitto)

+* [PostgreSQL](https://github.com/SensorsIot/IOTstack/wiki/PostgreSQL)

+* [Adminer](https://github.com/SensorsIot/IOTstack/wiki/Adminer)

+* [openHAB](https://github.com/SensorsIot/IOTstack/wiki/openHAB)

+* [Home Assistant](https://github.com/SensorsIot/IOTstack/wiki/Home-Assistant)

+* [Pi-Hole](https://github.com/SensorsIot/IOTstack/wiki/Pi-hole)

+* [zigbee2MQTT](https://github.com/SensorsIot/IOTstack/wiki/Zigbee2MQTT)

+* [Plex](https://github.com/SensorsIot/IOTstack/wiki/Plex)

+* [TasmoAdmin](https://github.com/SensorsIot/IOTstack/wiki/TasmoAdmin)

+* [RTL_433](https://github.com/SensorsIot/IOTstack/wiki/RTL_433-docker)

+* [EspruinoHub (testing)](https://github.com/SensorsIot/IOTstack/wiki/EspruinoHub)

+* [Next-Cloud](https://github.com/SensorsIot/IOTstack/wiki/NextCloud)

+* [MariaDB](https://github.com/SensorsIot/IOTstack/wiki/MariaDB)

+* [MotionEye](https://github.com/SensorsIot/IOTstack/wiki/MotionEye)

+* [Blynk Server](https://github.com/SensorsIot/IOTstack/wiki/Blynk_server)

+* [diyHue](https://github.com/SensorsIot/IOTstack/wiki/diyHue)

+* [Python](https://github.com/SensorsIot/IOTstack/wiki/Python)

+* [Custom containers](https://github.com/SensorsIot/IOTstack/wiki/Custom)

+

+***

+

+# Native installs

+

+* [RTL_433](https://github.com/SensorsIot/IOTstack/wiki/Native-RTL_433)

+* [RPIEasy](https://github.com/SensorsIot/IOTstack/wiki/RPIEasy_native)

+

+***

+

+# Backups

+

+* [Docker backups](https://github.com/SensorsIot/IOTstack/wiki/Backups)

+* Recovery (coming soon)

+***

+

+# Remote Access

+

+* [VPN and Dynamic DNS](https://github.com/SensorsIot/IOTstack/wiki/Accessing-your-Device-from-the-internet)

+* [x2go](https://github.com/SensorsIot/IOTstack/wiki/x2go)

+

+***

+

+# Miscellaneous

+

+* [log2ram](https://github.com/SensorsIot/IOTstack/wiki/Misc)

+* [Dropbox-Uploader](https://github.com/SensorsIot/IOTstack/wiki/Misc)

+

+***

+

diff --git a/docs/How-the-script-works.md b/docs/How-the-script-works.md

new file mode 100644

index 00000000..54dddd9b

--- /dev/null

+++ b/docs/How-the-script-works.md

@@ -0,0 +1,6 @@

+# How the script works

+The build script creates the ./services directory and populates it from the template files in .templates. The script then appends the text within each service.yml file to the docker-compose.yml. When the stack is rebuilt, the menu does not overwrite the service folder if it already exists. Make sure to sync any alterations you have made to the docker-compose.yml file with the respective service.yml so that your changes pull through on your next build.

+

+The .gitignore file is set up such that if you do a `git pull origin master` it does not overwrite the files you have already created. Because the build script does not overwrite your service directory, any changes in the .templates directory will have no effect on the services you have already made. You will need to move your service folder out to get the latest version of the template.

+

+

diff --git a/docs/InfluxDB.md b/docs/InfluxDB.md

new file mode 100644

index 00000000..4f714771

--- /dev/null

+++ b/docs/InfluxDB.md

@@ -0,0 +1,9 @@

+# InfluxDB

+## References

+- [Docker](https://hub.docker.com/_/influxdb)

+- [Website](https://www.influxdata.com/)

+

+## Security

+The credentials and default database name for influxdb are stored in the file called influxdb/influx.env. The default username and password are both set to "nodered". It is HIGHLY recommended that you change them. The environment file contains several commented-out options that let you set access details such as the default admin user credentials and the default database name. Any change to the environment file will require a restart of the service.

+

+To access the terminal for influxdb execute `./services/influxdb/terminal.sh`. Here you can set additional parameters or create other databases.

diff --git a/docs/MariaDB.md b/docs/MariaDB.md

new file mode 100644

index 00000000..0b2408ac

--- /dev/null

+++ b/docs/MariaDB.md

@@ -0,0 +1,23 @@

+## Source

+* [Docker hub](https://hub.docker.com/r/linuxserver/mariadb/)

+* [Webpage](https://mariadb.org/)

+

+## About

+

+MariaDB is a fork of MySQL. This is an unofficial image provided by linuxserver.io because there is no official image for ARM.

+

+## Connecting to the DB

+

+The port is 3306. It exists inside the docker network so you can connect via `mariadb:3306` for internal connections. For external connections use `<your Pi's IP>:3306`.

+

+

+

+## Setup

+

+Before starting the stack, edit the `./services/mariadb/mariadb.env` file and set your access details. This is optional; however, you will only have one shot at the preconfig. If you start the container without setting the passwords, you will have to either delete its volume directory or enter the terminal and change them manually.

+

+The env file has three commented fields for credentials. Either **all three** must be commented or all three un-commented. You can't have only one or two; it's all or nothing.

+

+## Terminal

+

+A terminal is provided to access mariadb from the CLI. Execute `./services/mariadb/terminal.sh`. You will need to run `mysql -uroot -p` to enter mariadb's interface.

\ No newline at end of file

diff --git a/docs/Misc.md b/docs/Misc.md

new file mode 100644

index 00000000..dca1aa99

--- /dev/null

+++ b/docs/Misc.md

@@ -0,0 +1,9 @@