9000:1 token:param ratios are all you need.

AI News for 9/24/2024-9/25/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (223 channels, and 3218 messages) for you. Estimated reading time saved (at 200wpm): 316 minutes. You can now tag @smol_ai for AINews discussions!

Big news from Mira Murati and FB Reality Labs today, but the actual technical news you can use today is Llama 3.2:

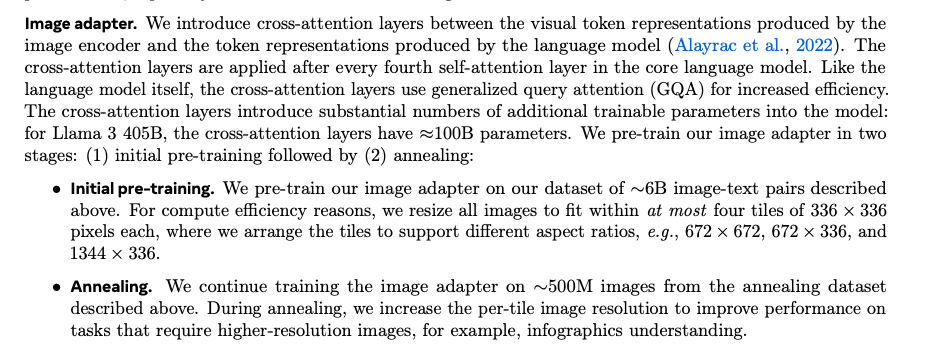

As teased by Zuck and previewed in the Llama 3 paper (our coverage here), the Multimodal versions of Llama 3.2 released as anticipated, adding a 3B and a 20B vision adapter on a frozen Llama 3.1:

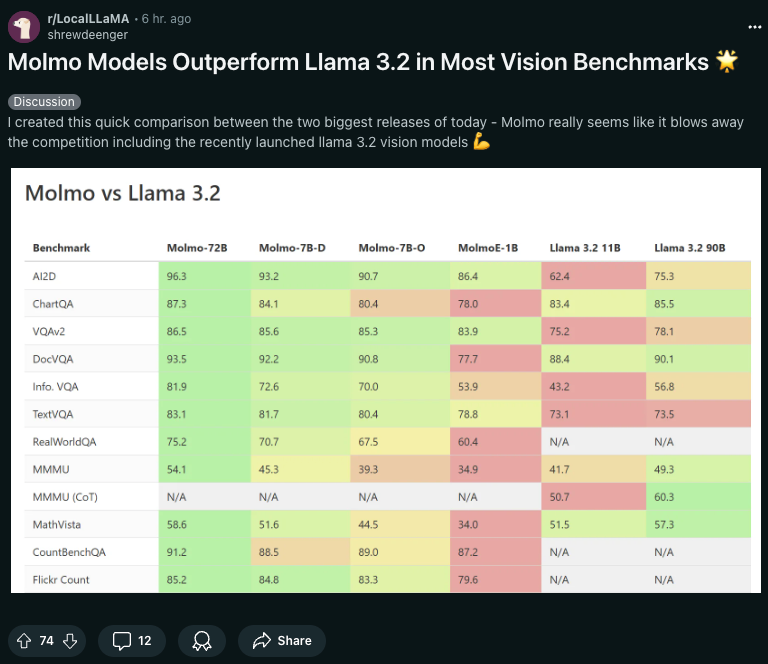

The 11B is comparable/slightly better than Claude Haiku, and the 90B is comparable/slightly better than GPT-4o-mini, though you will have to dig a lot harder to find out how far it trails behind 4o, 3.5 Sonnet, 1.5 Pro, and Qwen2-VL with a 60.3 on MMMU.

Meta is being praised for their open source here, but don't miss the multimodal Molmo 72B and 7B models from AI2 also releasing today. It has not escaped /r/localLlama's attention that Molmo is outperforming 3.2 in vision:

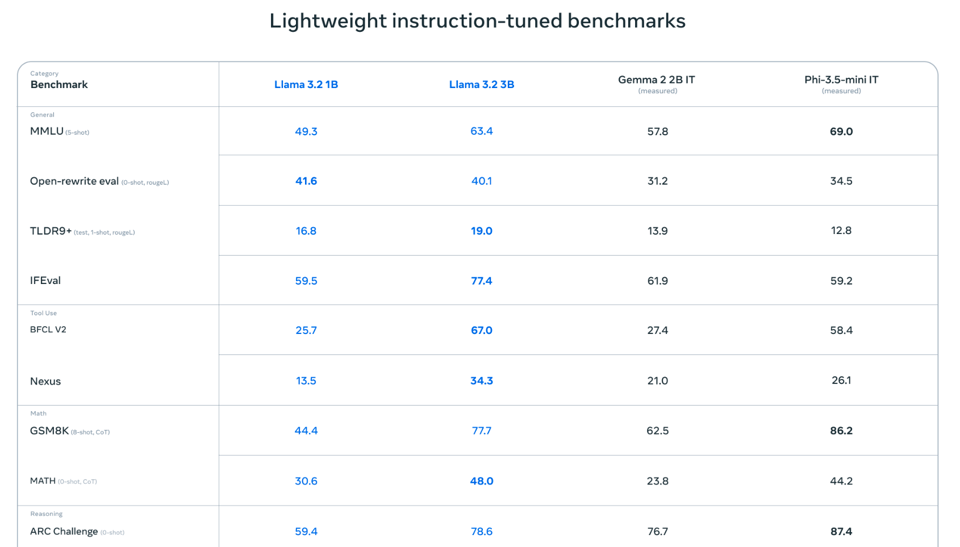

The bigger/pleasant/impressive surprise from Meta are the new 128k-context 1B and 3B models, which noew compete with Gemma 2 and Phi 3.5:

The release notes hint at some very tight on device collaborations with Qualcomm, Mediatek, and Arm:

The weights being released today are based on BFloat16 numerics. Our teams are actively exploring quantized variants that will run even faster, and we hope to share more on that soon.

Don't miss:

- launch blogpost

- Followup technical detail from @AIatMeta disclosing a 9 trillion token count for Llama 1B and 3B, and quick arch breakdown from Daniel Han

- updated HuggingFace collection including Evals

- the Llama Stack launch (see RFC here)

Partner launches:

- Ollama

- Together AI (offering FREE 11B model access rate limited to 5 rpm until end of year)

- Fireworks AI

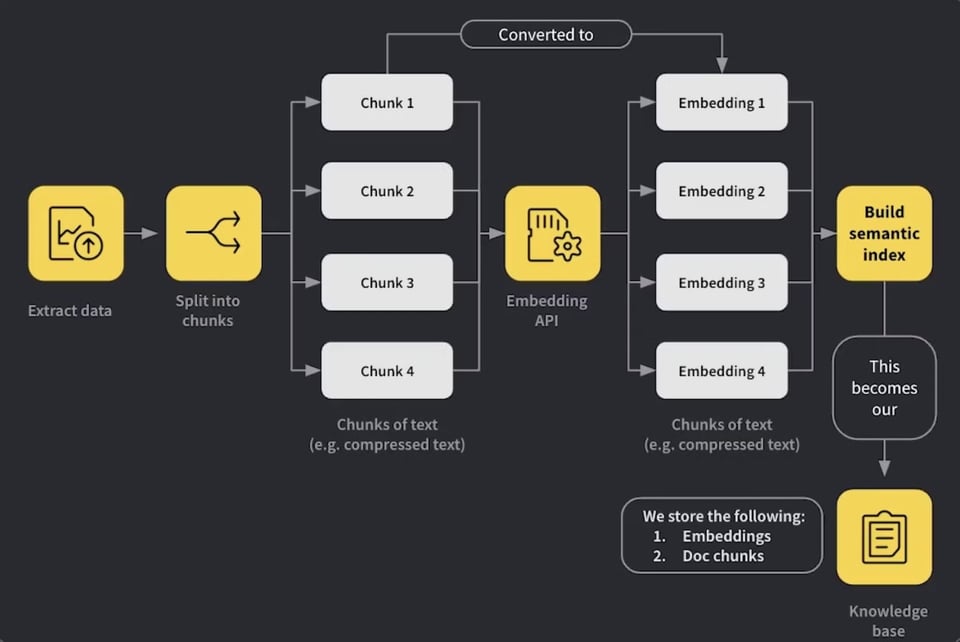

This issue sponsored by RAG++: a new course from Weights & Biases. Go beyond RAG POCs and learn how to evaluate systematically, use hybrid search correctly and give your RAG system access to tool calling. Based on 18 months of running a customer support bot in production, industry experts at Weights & Biases, Cohere, and Weaviate show how to get to a deployment-grade RAG app. Includes free credits from Cohere to get you started!

Swyx commentary: Whoa, 74 lessons in 2 hours. I've worked on this kind of very tightly edited course content before and it's amazing that this is free! Chapters 1-2 cover some necessary RAG table stakes, but then it was delightful to see Chapter 3 teach important ETL and IR concepts, and learn some new things on cross encoding, rank fusion, and query translation in 4 and 5. We shall have to cover this on livestream soon!

{% if medium == 'web' %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Advanced Voice Model Release

- OpenAI is rolling out an advanced voice model for ChatGPT Plus and Team users over the course of a week.

- @sama announced: "advanced voice mode rollout starts today! (will be completed over the course of the week)hope you think it was worth the wait 🥺🫶"

- @miramurati confirmed: "All Plus and Team users in ChatGPT"

- @gdb noted: "Advanced Voice rolling out broadly, enabling fluid voice conversation with ChatGPT. Makes you realize how unnatural typing things into a computer really is:"

The new voice model features lower latency, the ability to interrupt long responses, and support for memory to personalize responses. It also includes new voices and improved accents.

Google's Gemini 1.5 Pro and Flash Updates

Google announced significant updates to their Gemini models:

- @GoogleDeepMind tweeted: "Today, we're excited to release two new, production-ready versions of Gemini 1.5 Pro and Flash. 🚢They build on our latest experimental releases and include significant improvements in long context understanding, vision and math."

- @rohanpaul_ai summarized key improvements: "7% increase in MMLU-Pro benchmark, 20% improvement in MATH and HiddenMath, 2-7% better in vision and code tasks"

- Price reductions of over 50% for Gemini 1.5 Pro

- 2x faster output and 3x lower latency

- Increased rate limits: 2,000 RPM for Flash, 1,000 RPM for Pro

The models can now process 1000-page PDFs, 10K+ lines of code, and hour-long videos. Outputs are 5-20% shorter for efficiency, and safety filters are customizable by developers.

AI Model Performance and Benchmarks

- OpenAI's models are leading in various benchmarks:

- @alexandr_wang reported: "OpenAI's o1 is dominating SEAL rankings!🥇 o1-preview is dominating across key categories:- #1 in Agentic Tool Use (Enterprise)- #1 in Instruction Following- #1 in Spanish👑 o1-mini leads the charge in Coding"

- Comparisons between different models:

- @bindureddy noted: "Gemini's Real Superpower - It's 10x Cheaper Than o1!The new Gemini is live on ChatLLM teams if you want to play with it."

AI Development and Research

- @alexandr_wang discussed the phases of LLM development: "We are entering the 3rd phase of LLM Development.1st phase was early tinkering, Transformer to GPT-32nd phase was scaling3rd phase is an innovation phase: what breakthroughs beyond o1 get us to a new proto-AGI paradigm"

- @JayAlammar shared insights on LLM concepts: "Chapter 1 paves the way for understanding LLMs by providing a history and overview of the concepts involved. A central concept the general public should know is that language models are not merely text generators, but that they can form other systems (embedding, classification) that are useful for problem solving."

AI Tools and Applications

- @svpino discussed AI-powered code reviews: "Unpopular opinion: Code reviews are dumb, and I can't wait for AI to take over completely."

- @nerdai shared an ARC Task Solver that allows humans to collaborate with LLMs: "Using the handy-dandy @llama_index Workflows, we've built an ARC Task Solver that allows humans to collaborate with an LLM to solve these ARC Tasks."

Memes and Humor

- @AravSrinivas joked: "Should I drop a wallpaper app ?"

- @swyx humorously commented on the situation: "guys stop it, mkbhd just uploaded the wrong .IPA file to the app store. be patient, he is recompiling the code from scratch. meanwhile he privately dm'ed me a test flight for the real mkbhd app. i will investigate and get to the bottom of this as a self appointed auror for the wallpaper community"

Theme 1. High-Speed Inference Platforms: Cerebras and MLX

- Just got access to Cerebras. 2,000 token per second. (Score: 99, Comments: 39): The Cerebras platform has demonstrated impressive inference speeds, achieving 2,010 tokens per second with the Llama3.1-8B model and 560 tokens per second with the Llama3.1-70B model. The user expresses amazement at this performance, indicating they are still exploring potential applications for such high-speed inference capabilities.

- JSON outputs are supported by the Cerebras platform, as confirmed by the original poster. Access to the platform is granted through a sign-up and invite system, with users directed to inference.cerebras.ai.

- Potential applications discussed include Chain of Thought (CoT) + RAG with Voice, potentially creating a Siri/Google Voice competitor capable of providing expert-level answers in real-time. A voice demo on Cerebras is available at cerebras.vercel.app.

- The platform is compared to Groq, with Cerebras reportedly being even faster. SambaNova APIs are mentioned as an alternative, offering similar speeds (1500 tokens/second) without a waitlist, while users note the potential for real-time applications and security implications of such high-speed inference.

- MLX batch generation is pretty cool! (Score: 42, Comments: 15): The MLX paraLLM library enabled a 5.8x speed improvement for Mistral-22b generation, increasing from 17.3 tokens per second to 101.4 tps at a batch size of 31. Peak memory usage increased from 12.66GB to 17.01GB, with approximately 150MB required for each additional concurrent generation, while the author managed to run 100 concurrent batches of the 22b-4bit model on a 64GB M1 Max machine without exceeding 41GB of wired memory.

- Energy efficiency tests showed 10 tokens per watt for Mistral-7b and 3.5 tokens per watt for 22b at batch size 100 in low power mode. This efficiency is comparable to human brain performance in terms of words per watt.

- The library is Apple-only, but similar batching capabilities exist for NVIDIA/CUDA through tools like vLLM, Aphrodite, and MLC, though with potentially more complex setup processes.

- While not applicable for improving speed in normal chat scenarios, the technology is valuable for synthetic data generation and dataset distillation.

Theme 2. Qwen 2.5: Breakthrough Performance on Consumer Hardware

- Qwen2-VL-72B-Instruct-GPTQ-Int4 on 4x P100 @ 24 tok/s (Score: 37, Comments: 52): Qwen2-VL-72B-Instruct-GPTQ-Int4, a large multimodal model, is reported to run on 4x P100 GPUs at a speed of 24 tokens per second. This implementation utilizes GPTQ quantization and Int4 precision, enabling the deployment of a 72 billion parameter model on older GPU hardware with limited VRAM.

- DeltaSqueezer provided a GitHub repository and Docker command for running Qwen2-VL-72B-Instruct-GPTQ-Int4 on Pascal GPUs. The setup includes support for P40 GPUs, but may experience slow loading times due to FP16 processing.

- The model demonstrated reasonable vision and reasoning capabilities when tested with a political image. A comparison with Pixtral model's output on the same image was provided, showing similar interpretation abilities.

- Discussion on video processing revealed that the 7B VL version consumes significant VRAM. The model's performance on P100 GPUs was noted to be faster than 3x3090s, with the P100's HBM being comparable to the 3090's memory bandwidth.

- Qwen 2.5 is a game-changer. (Score: 524, Comments: 121): Qwen 2.5 72B model is running efficiently on dual RTX 3090s, with the Q4_K_S (44GB) version achieving approximately 16.7 T/s and the Q4_0 (41GB) version reaching about 18 T/s. The post includes Docker compose configurations for setting up Tailscale, Ollama, and Open WebUI, along with bash scripts for updating and downloading multiple AI models, including variants of Llama 3.1, Qwen 2.5, Gemma 2, and Mistral.

- Tailscale integration in the setup allows for remote access to OpenWebUI via mobile devices and iPads, enabling on-the-go usage of the AI models through a browser.

- Users discussed model performance, with suggestions to try AWQ (4-bit quantization) served by lmdeploy for potentially faster performance on 70B models. Comparisons between 32B and 7B models showed better performance from larger models on complex tasks.

- Interest in hardware requirements was expressed, with the original poster noting that dual RTX 3090s were chosen for running 70B models efficiently, expecting a 6-month ROI. Questions about running models on Apple M1/M3 hardware were also raised.

Theme 3. Gemini 1.5 Pro 002: Google's Latest Model Impresses

- Gemini 1.5 Pro 002 putting up some impressive benchmark numbers (Score: 102, Comments: 42): Gemini 1.5 Pro 002 is demonstrating impressive performance across various benchmarks. The model achieves 97.8% on MMLU, 90.0% on HumanEval, and 82.6% on MATH, surpassing previous state-of-the-art results and showing significant improvements over its predecessor, Gemini 1.0 Pro.

- Google's Gemini 1.5 Pro 002 shows significant improvements, including >50% reduced price, 2-3x higher rate limits, and 2-3x faster output and lower latency. The model's performance across benchmarks like MMLU (97.8%) and HumanEval (90.0%) is impressive.

- Users praised Google's recent progress, noting their publication of research papers and the AI Studio playground. Some compared Google favorably to other AI companies, with Meta being highlighted for its open-weight models and detailed papers.

- Discussion arose about the consumer version of Gemini, with some users finding it less capable than competitors. Speculation on when the updated model would be available to consumers ranged from a few days to October 8th at the latest.

- Updated gemini models are claimed to be the most intelligent per dollar* (Score: 291, Comments: 184): Google has released Gemini 1.5 Pro 002, claiming it to be the most intelligent AI model per dollar. The model demonstrates significant improvements in various benchmarks, including a 90% score on MMLU and 93.2% on HumanEval, while offering competitive pricing at $0.0025 per 1k input tokens and $0.00875 per 1k output tokens. These performance gains and cost-effective pricing position Gemini 1.5 Pro 002 as a strong contender in the AI model market.

- Mistral offers 1 billion tokens of Large v2 per month for free, with users noting its strong performance. This contrasts with Google's pricing strategy for Gemini 1.5 Pro 002.

- Users criticized Google's naming scheme for Gemini models, suggesting alternatives like date-based versioning. The announcement also revealed 2-3x higher rate limits and faster performance for API users.

- Discussions highlighted the trade-offs between cost, performance, and data privacy. Some users prefer self-hosting for data control, while others appreciate Google's free tier and AI Studio for unlimited free usage.

Theme 4. Apple Silicon vs NVIDIA GPUs for LLM Inference

- HF releases Hugging Chat Mac App - Run Qwen 2.5 72B, Command R+ and more for free! (Score: 54, Comments: 19): Hugging Face has released the Hugging Chat Mac App, allowing users to run state-of-the-art open-source language models like Qwen 2.5 72B, Command R+, Phi 3.5, and Mistral 12B locally on their Macs for free. The app includes features such as web search and code highlighting, with additional features planned, and contains hidden easter eggs like Macintosh, 404, and Pixel pals themes; users can download it from GitHub and provide feedback for future improvements.

- Low Context Speed Comparison: Macbook, Mac Studios, and RTX 4090 (Score: 33, Comments: 29): The post compares the performance of RTX 4090, M2 Max Macbook Pro, M1 Ultra Mac Studio, and M2 Ultra Mac Studio for running Llama 3.1 8b q8, Nemo 12b q8, and Mistral Small 22b q6_K models. Across all tests, the RTX 4090 consistently outperformed the Mac devices, with the M2 Ultra Mac Studio generally coming in second, followed by the M1 Ultra Mac Studio and M2 Max Macbook Pro. The author notes that these tests were run with freshly loaded models without flash attention enabled, and apologizes for not making the tests deterministic.

- Users recommend using exllamav2 for better performance on RTX 4090, with one user reporting 104.81 T/s generation speed for Llama 3.1 8b on an RTX 3090. Some noted past quality issues with exl2 compared to gguf models.

- Discussion on prompt processing speed for Apple Silicon, with users highlighting the significant difference between initial and subsequent prompts due to caching. The M2 Ultra processes 4000 tokens in 16.7 seconds compared to 5.6 seconds for the RTX 4090.

- Users explored options for improving Mac performance, including enabling flash attention and the theoretical possibility of adding a GPU for prompt processing on Macs running Linux, though driver support remains limited.

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Releases and Improvements

-

OpenAI releases advanced voice mode for ChatGPT: OpenAI has rolled out an advanced voice mode for ChatGPT that allows for more natural conversations, including the ability to interrupt and continue thoughts. Users report it as a significant improvement, though some limitations remain around letting users finish thoughts.

-

Google updates Gemini models: Google announced updated production-ready Gemini models including Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The update includes reduced pricing, increased rate limits, and performance improvements across benchmarks.

-

New Flux model released: The creator of Realistic Vision released a Flux model called RealFlux, available on Civitai. Users note it produces good results but some limitations remain around facial features.

AI Capabilities and Benchmarks

-

Gemini 1.5 002 performance: Reports indicate Gemini 1.5 002 outperforms OpenAI's o1-preview on the MATH benchmark at 1/10th the cost and with no thinking time.

-

o1 capabilities: An OpenAI employee suggests o1 is capable of performing at the level of top PhD students, outperforming humans more than 50% of the time in certain tasks. However, some users dispute this claim, noting limitations in o1's ability to learn and adapt compared to humans.

AI Development Tools and Interfaces

- Invoke 5.0 update: The Invoke AI tool received a major update introducing a new Canvas with layers, Flux support, and prompt templates. This update aims to provide a more powerful interface for combining various AI image generation techniques.

AI Impact on Society and Work

-

Job displacement predictions: Vinod Khosla predicts AI will take over 80% of work in 80% of jobs, sparking discussions about potential economic impacts and the need for universal basic income.

-

AI in law enforcement: A new AI tool for police work claims to perform "81 years of detective work in 30 hours," raising both excitement about increased efficiency and concerns about potential misuse.

Emerging AI Research and Applications

- MIT vaccine technology: Researchers at MIT have developed a new vaccine technology that could potentially eliminate HIV with just two shots, showcasing the potential for AI to accelerate medical breakthroughs.

A summary of Summaries of Summaries by O1-mini

Theme 1. New AI Model Releases and Multimodal Enhancements

- Llama 3.2 Launches with Multimodal and Edge Capabilities: Llama 3.2 introduces various model sizes including 1B, 3B, 11B, and 90B with multimodal support and a 128K context length, optimized for deployment on mobile and edge devices.

- Molmo 72B Surpasses Competitors in Benchmarks: The Molmo 72B model from Allen Institute for AI outperforms models like Llama 3.2 V 90B in benchmarks such as AI2D and ChatQA, offering state-of-the-art performance with an Apache license.

- Hermes 3 Enhances Instruction Following on HuggingChat: Hermes 3, available on HuggingChat, showcases improved instruction adherence, providing more accurate and contextually relevant responses compared to previous versions.

Theme 2. Model Performance, Quantization, and Optimization

- Innovations in Image Generation with MaskBit and MonoFormer: The MaskBit model achieves a FID of 1.52 on ImageNet 256 × 256 without embeddings, while MonoFormer unifies autoregressive text and diffusion-based image generation, matching state-of-the-art performance by leveraging similar training methodologies.

- Quantization Techniques Enhance Model Efficiency: Discussions on quantization vs distillation reveal the complementary benefits of each method, with implementations in Setfit and TorchAO addressing memory and computational optimizations for models like Llama 3.2.

- GPU Optimization Strategies for Enhanced Performance: Members explore TF32 and float8 representations to accelerate matrix operations, alongside tools like Torch Profiler and Compute Sanitizer to identify and resolve performance bottlenecks.

Theme 3. API Pricing, Integration, and Deployment Challenges

- Cohere API Pricing Clarified for Developers: Developers learn that while rate-limited Trial-Keys are free, transitioning to Production-Keys incurs costs for commercial applications, emphasizing the need to align API usage with project budgets.

- OpenAI's API and Data Access Scrutiny: OpenAI announces limited access to training data for review purposes, hosted on a secured server, raising concerns about transparency and licensing compliance among the engineering community.

- Integrating Multiple Tools and Platforms: Challenges in integrating SillyTavern, Forge, Langtrace, and Zapier with various APIs are discussed, highlighting the complexities of maintaining seamless deployment pipelines and compatibility across tools.

Theme 4. AI Safety, Censorship, and Licensing Issues

- Debates on Model Censorship and Uncensoring Techniques: Community members discuss the over-censorship of models like Phi-3.5, with efforts to uncensor models through tools and sharing of uncensored versions on platforms like Hugging Face.

- MetaAI's Licensing Restrictions in the EU: MetaAI faces licensing challenges in the EU, restricting access to multimodal models like Llama 3.2 and prompting discussions on compliance with regional laws.

- OpenAI's Corporate Shifts and Team Exodus: The resignation of Mira Murati and other key team members from OpenAI sparks speculation about organizational stability, corporate culture changes, and the potential impact on AI model development and safety protocols.

Theme 5. Hardware Infrastructure and GPU Optimization for AI

- Cost-Effective GPU Access with Lambda Labs: Members discuss utilizing Lambda Labs for GPU access at around $2/hour, highlighting its flexibility for running benchmarks and fine-tuning models without significant upfront costs.

- Troubleshooting CUDA Errors on Run Pod: Users encounter illegal CUDA memory access errors on platforms like Run Pod, with solutions including switching machines, updating drivers, and modifying CUDA code to prevent memory overflows.

- Deploying Multimodal Models on Edge Devices: Discussions on integrating Llama 3.2 models into edge platforms like GroqCloud, emphasizing the importance of optimized inference kernels and minimal latency for real-time AI applications.