Mask image remains black #6

Comments

Great, thanks. It works here from packages, so:

On Fri, Mar 1, 2013 at 6:19 AM, beetleskin wrote:

Yes, as you can see in the image, the pose is drawn correctly in most frames, and the matches look good too. Could this be related to the OpenNI drivers? I built and installed OpenNI and SensorKinect from source.

Ah, my bad, I answered by mail and did not see the image. It looks good. You never get anything at all in the mask? (Even when you're far away, close up, looking from the top, or using a different object?)

Nope, nothing ever. Just black.

OK, this might be related to your other bug. Since you built everything from source, source your setup.sh and then run either of the two scripts in `ecto_opencv/samples/rgbd/plane*` (one tracks planes, the other segments objects on top of them).

Interesting! `plane_cluster.py` crashes: Could this be related to the malfunctioning OpenNI driver? (I ran into this problem with RoboEarth.) `capture_openni_usb.py` yields the same error. `plane_sample.py`, however, seems to work fine:

The plane_cluster script is fine, just `git pull` it; I fixed it the other day.

OK, I do remember a problem I forgot to fix that seems to fit your data: no pixel of your object touches the plane; there are only NaNs around it. Let me fix that one at least.

Actually, did you try changing the `--seg_radius_crop` value? Set it to something large, like 0.5 or 1 meter.
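
To see how a crop radius or a height threshold can leave the mask completely empty, here is a minimal, hypothetical sketch of a plane-relative crop. It is not the object_recognition_capture code: it assumes the points are already expressed in the template frame (z = height above the plane, origin at the template's reprojected origin), and `object_mask`, `radius_crop` and `z_min` are illustrative names only. In this sketch, points with NaN coordinates never enter the mask, and an object lying outside the crop radius is dropped entirely.

```python
import math

# Hypothetical sketch of a plane-relative crop; NOT the object_recognition_capture code.
# Each point is (x, y, z) in the template frame: z is the height above the plane
# and (0, 0) is the template's reprojected origin.


def object_mask(points, radius_crop, z_min, z_max=float("inf")):
    """Return one bool per point: True if the point is kept as 'object'."""
    mask = []
    for x, y, z in points:
        # Pixels with no depth reading become NaN points; reject them up front.
        if math.isnan(x) or math.isnan(y) or math.isnan(z):
            mask.append(False)
            continue
        inside_radius = math.hypot(x, y) <= radius_crop  # horizontal distance to origin
        above_plane = z_min < z <= z_max                 # strictly above the plane
        mask.append(inside_radius and above_plane)
    return mask


# Two object points about 0.3 m from the origin, one NaN point, one point on the plane.
cloud = [(0.30, 0.0, 0.05), (0.31, 0.02, 0.10), (float("nan"),) * 3, (0.0, 0.0, 0.0)]
print(object_mask(cloud, radius_crop=0.2, z_min=0.0001))  # [False, False, False, False]
print(object_mask(cloud, radius_crop=0.5, z_min=0.0001))  # [True, True, False, False]
```

With the small radius every object point falls outside the crop and nothing is kept, which would look exactly like an all-black mask; the larger radius keeps them.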

OK, sorry to be picky, but can you please try with an object whose depth the Kinect can actually measure, like a cardboard orange juice box? This one seems to contain glass.
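
Transparent surfaces such as glass often return no depth at all, so the object can look like a hole to the segmentation. A quick, hypothetical way to check this is to measure how much of the object's image region has valid depth. The sketch assumes invalid readings show up as 0 (raw depth) or NaN (float depth); `valid_depth_ratio` and the ROI tuple format are made up for illustration.

```python
import math

# Hypothetical helper: what fraction of a region has usable depth?
# Assumes invalid readings are 0 (raw uint16 depth) or NaN (float depth in meters).


def valid_depth_ratio(depth, roi):
    """depth: 2D list of readings; roi: (row0, row1, col0, col1), half-open ranges."""
    r0, r1, c0, c1 = roi
    total = valid = 0
    for row in depth[r0:r1]:
        for d in row[c0:c1]:
            total += 1
            if d != 0 and not math.isnan(d):
                valid += 1
    return valid / total if total else 0.0


# A glass-like object in the top two rows: mostly missing depth.
# (0 and NaN are mixed here only to exercise both "invalid" encodings.)
depth = [
    [850, 850, 0, 0],
    [850, float("nan"), 0, 0],
    [850, 850, 850, 850],
]
print(valid_depth_ratio(depth, (0, 2, 0, 4)))  # 0.375
print(valid_depth_ratio(depth, (2, 3, 0, 4)))  # 1.0
```

A low ratio over the object, next to a high one over the surrounding table, suggests the sensor is mostly seeing through the object.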

OK, I was finally able to reproduce that bug, and it was a C++ bug :) Can you please download the latest version of capture and try it out?

OK, capturing with templates now produces bag files, thanks! However (oh no, issues incoming :) ) ...

I uploaded the bag file for you here.

The fixes are in capture and ecto_opencv. Thanks for your very detailed bug report; it helps make the code more robust. I hope it's all good for you now! Off to #7 now :)

Hi, thanks for the updates. I just updated the sources... is it correct that the capture package now depends on household_object_database? I'm now stuck installing the dependencies: apparently there is nothing in the ROS repo except household_object_database_msg.

tabletop is the only package depending on it, and it is one of the pipelines, so you can safely remove it if you don't want to use that pipeline. The package is released, but only in shadow-fixed (not the main ROS repository); you can get it from here:

OK, thanks, I just removed tabletop. I'll try the fixes soon :)

OK, I used a better template and scanned very carefully. The model looks OK, so I guess you can close this issue. Capturing with templates works very well now. Just one thing... is there a textured version of the mesh somewhere? Do you produce a UV map or something similar? How do you store the color information of a model when uploading/meshing?

As for the misalignment, we've noticed the Kinect is much worse than the ASUS when hand-held (most likely because the Kinect's RGB and depth images are not synchronized). That's why we recommend a lazy Susan (turntable).

So you don't store any color information? What about TOD? For texturing, pcl_kinfu_largeScale_texture_output comes to mind, but I don't know if it is of any help; I didn't check exactly what input it uses.

Color is not stored right now, but it obviously should be. TOD does not use the mesh (though it should if meshes and textures were of high quality): it just uses the raw 2D input for descriptors and their 3D positions.
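
Attaching a 3D position to a 2D descriptor amounts to back-projecting the keypoint through the depth image. Below is a minimal sketch using the pinhole camera model. It assumes a depth image registered to the RGB image, in meters, and uses commonly quoted default Kinect intrinsics (fx = fy = 525, cx = 319.5, cy = 239.5) instead of a real calibration; `backproject` is a hypothetical helper, not TOD's API.

```python
import math

# Assumed default Kinect intrinsics (commonly quoted values, not a calibration).
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5


def backproject(u, v, depth_m):
    """Pixel (u, v) with depth in meters -> (X, Y, Z) in the camera frame, or None."""
    if math.isnan(depth_m) or depth_m <= 0:
        return None  # no valid depth, so the keypoint gets no 3D position
    x = (u - CX) * depth_m / FX
    y = (v - CY) * depth_m / FY
    return (x, y, depth_m)


print(backproject(319.5, 239.5, 1.0))  # (0.0, 0.0, 1.0)
print(backproject(424.5, 239.5, 1.0))  # (0.2, 0.0, 1.0)
print(backproject(100.0, 100.0, float("nan")))  # None
```

Keypoints that land on pixels without depth simply get no 3D position, which is one more reason transparent objects are hard for this kind of pipeline.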

Oh, I don't mean KinFu in general. They have a tool that produces a textured mesh from colored point clouds: http://svn.pointclouds.org/pcl/trunk/gpu/kinfu_large_scale/tools/standalone_texture_mapping.cpp
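
The core step of this kind of texture mapping is projecting each mesh vertex into a color image with a known pose and using the normalized pixel position as the vertex's UV coordinate. The sketch below shows only that step, under stated assumptions: a known world-to-camera pose (R, t), pinhole intrinsics, and OpenGL-style UVs with the origin at the bottom-left. `project_to_uv` is a hypothetical name, not the linked tool's API; real texture-mapping tools typically also handle occlusion and choose among several views, none of which is shown here.

```python
# Hypothetical sketch: UV coordinate of a mesh vertex seen in one posed color image.


def project_to_uv(vertex, R, t, fx, fy, cx, cy, width, height):
    """vertex: world point; R (3x3 nested list) and t (3-list) map world -> camera."""
    # World -> camera frame: p_cam = R * p_world + t
    pc = [sum(R[i][j] * vertex[j] for j in range(3)) + t[i] for i in range(3)]
    if pc[2] <= 0:
        return None  # vertex is behind the camera
    # Pinhole projection to pixel coordinates
    u = fx * pc[0] / pc[2] + cx
    v = fy * pc[1] / pc[2] + cy
    if not (0 <= u < width and 0 <= v < height):
        return None  # vertex projects outside this image
    # Normalize to [0, 1]; flip v so the UV origin is the bottom-left corner
    return (u / width, 1.0 - v / height)


I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project_to_uv((0.0, 0.0, 1.0), I3, [0.0, 0.0, 0.0], 525.0, 525.0, 320.0, 240.0, 640, 480))
# (0.5, 0.5)
print(project_to_uv((0.0, 0.0, -1.0), I3, [0.0, 0.0, 0.0], 525.0, 525.0, 320.0, 240.0, 640, 480))
# None
```

Storing these UVs alongside the mesh, plus the color image they index into, is one common way to keep color information for a scanned model.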

Thanks for the reference, I added an issue for it: #12

beetleskin, how did you solve the problem of the mask remaining empty?

Well, I posted the issue here and Vincent Rabaud solved it ;) Try wg-perception and ecto from source.

Hi again,

I tried to run object_recognition_capture with a template, but the mask image remains black and nothing is captured:

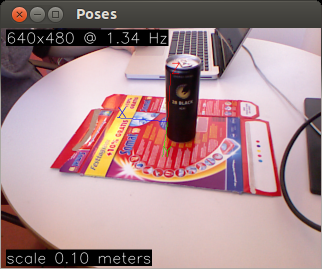

`rosrun object_recognition_capture capture -i my_textured_plane -o orc_scan_dataIntensiv.bag -n 12 --preview --seg_z_min 0.0001`

The pose estimation seems to work; the coordinate origin is reprojected correctly onto the template plane:

I tried different optional parameters, but nothing changed. I compiled ecto and wg_perception completely from source in a catkin workspace (ROS Groovy) on Ubuntu 12.04; the data comes from a Kinect.
