- NodeJs

- OCR -> tesseract.js

- Image processing -> jimp

The captcha looks like this:

(Original captcha. Frame is drawn in the original)

If you try to OCR this without editing, you will get just an empty result. OCR don't like this image at all! But with some image processig this should not be pretty hard right?! Well not quite! And here is why...

- Remove the Frame

- Remove Noise and lines by light colors

- Finally OCR

Easy, just set every border pixel to white:

(Captcha with remove border)

This captchas are always grayscale pictures, so to remove the noise, I just check every pixel (Pseudocode):

if red channel of pixel are over 50 -> set to white //255 is white, 0 black

This leaves only the black pixels:

(Captcha with remove noise)

It is time for the next round of OCR:

At this point, I thought I would be done, and we get a nice result, but not a even close one...

So I tried to "fill gaps" and make it "thin" like in my other docu, on what I got success with... But the image is a lot smaller so I scaled it up a to work with... but no luck. The OCR did not like it... Always returns the same result => "thq"

My explanation for this is: Even tough the OCR is trained to detect letters AND numbers, it is not used to a number inside a "word" -> Not common in the english language. So it assumes the "2" must be a letter as well...

I validated this by putting a single char (the "h") from the captcha in, and it worked without problems... Also the "2", "d", "q" -> all characters without problems. So I was on the right path.

My new plan: Do some image segmentation first!

The first Idea what came to my to mind: Move from left to right trough the image, and remember all lines that have not crossed any black pixel.

(Zoomed in on the captcha with some example lines that do not cross any black pixel)

With some computing, you could easily find the different connected segements. Would probably work here, but thinking ahead, we see other captchas in wich the letters are also above and below each other like here:

(Captcha from filepanzer.com)

So I wanted a more generic solution that would also work at some later point and more complex tasks.

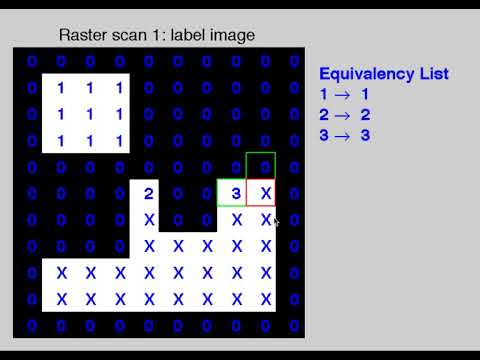

To work in mor complex environments, we need to "connect" pixels to blocks. Even if they go around each other. Here is a nice video that explains the process pretty well:

I programmed the algorithm after the example in the video, but with the addition, that even pixels that are not direct neighbors but touch each other at one point (f.e. pixel [0,0] and [1,1]) are registred as "connected".

For better testing, I took the first half of the captcha -> So I'm still able to print the representive segmentation array in the console:

(Array of connected pixels with red outline for better contrast)

The image shows a perfect segmentation of both segments ("h" and "2"), and this also works with more complex 2D images. The image above also includes the function that sorts the segments from left to right (Not top->down or random). This is required, because we need all segments in the correct order -> even if the are small, tall or slightly displaced.

So after successful segmentation, we can run the OCR on each part, wait for all parts to be finished and add the results back together. This works without any problems!

I found out that, after I do the segmentation, then upscal -> fill gaps -> thin out. The result is even better. The final algorithm works like:

- Remove border

- Remove grey (Noise)

- run segmentation

- Scale, fill gabs and thin it again (see last post for how it's done)

- run OCR on each segment

- Add all results in the right order

output:h2dq

With OCR confident log: Letter0: h => 86% Letter1: 2 => 83% Letter2: d => 84% Letter3: q => 79%

The result is perfectly fine, but I only had this one captcha image to test the algorithm with so far...