
Milestone: CMEPS 0.4

In this milestone, the Community Mediator for Earth Prediction Systems (CMEPS) is used to couple the model components in NOAA's Unified Forecast System (UFS) Subseasonal-to-Seasonal (S2S) application. The model components included are the Global Forecast System (GFS) atmosphere with the Finite Volume Cubed Sphere (FV3) dynamical core (FV3GFS), the Modular Ocean Model (MOM6) and the Los Alamos sea ice model (CICE5). The purpose of this release was to introduce usability improvements to the CMEPS 0.3 release.

CMEPS leverages the Earth System Modeling Framework (ESMF) infrastructure, which consists of tools for building and coupling component models, along with the National Unified Operational Capability (NUOPC) Layer, a set of templates and conventions that increases interoperability in ESMF-based systems. The workflow used is the Common Infrastructure for Modeling the Earth (CIME) community workflow software.

This is not an official release of the UFS S2S application, but a prototype system being used to evaluate specific aspects of CMEPS and the CIME workflow. There were two tags released with this milestone, 0.4.1 and 0.4.2. Documentation for both is presented below.

Changes in tag 0.4.2 relative to CMEPS 0.3 are:

- (Code change) The FV3GFS namelist is now properly handled by CIME, with support for changing PET layout and write component tasks. Several PET layout configurations have been verified to run on the supported platforms.

- (Diagnostics added) An initial performance timing table was created including sample timings on NCAR's Cheyenne and TACC's Stampede2 using different PET layouts.

Changes in tag 0.4.1 relative to CMEPS 0.3 are:

- (Code change) The FV3GFS namelist is now properly handled by CIME, with support for changing PET layout and write component tasks. Several PET layout configurations have been verified to run on the supported platforms.

- (Namelist change in Mediator) flux_max_iteration was changed from 2 to 5 to be consistent with NEMS-BM1 runs.

- (Namelist change in Mediator) flux_convergence was changed from 0.0 to 0.01 to be consistent with NEMS-BM1 runs.

- (Namelist change in FV3GFS) The h2o_phys namelist option was changed from .false. to .true. to be consistent with NEMS-BM1 runs (this requires an additional input file, global_h2oprdlos.f77).

- (Diagnostics added) An initial performance timing table was created including sample timings on NCAR's Cheyenne and TACC's Stampede2 using different PET layouts.

This milestone uses the same versions of model components as CMEPS 0.3, but has an updated mediator code. The specified tags for the components are as follows:

- UFSCOMP: cmeps_v0.4.2

  - CMEPS: cmeps_v0.4.2 (new version of the mediator; to obtain a numerically stable modeling system consistent with the 0.3 release, flux_convergence=0 and flux_max_iteration=2 are used)
  - CIME: cmeps_v0.4.3
  - FV3GFS CIME interface: cmeps_v0.4.3 (h2o_phys is set to .false. to be consistent with the 0.3 release)
  - FV3GFS: cmeps_v0.3
  - MOM CIME interface: cmeps_v0.4
  - MOM: cmeps_v0.3
  - CICE: cmeps_v0.3.1 (includes only the modifications needed to work with the 0.4 mediator)

- UFSCOMP: cmeps_v0.4.1

  - CMEPS: cmeps_v0.4.1
  - CIME: cmeps_v0.4.2
  - FV3GFS CIME interface: cmeps_v0.4.2
  - FV3GFS: cmeps_v0.3
  - MOM CIME interface: cmeps_v0.4
  - MOM: cmeps_v0.3
  - CICE: cmeps_v0.3.1 (includes only the modifications needed to work with the 0.4 mediator)

The FV3GFS atmosphere is discretized on a cubed sphere grid. This grid is based on a decomposition of the sphere into six identical regions, obtained by projecting the sides of a circumscribed cube onto a spherical surface. See more information about the cubed sphere grid here. The cubed sphere grid resolution for this milestone is C384.

The MOM6 ocean and CICE5 sea ice components are discretized on a tripolar grid. This type of grid avoids a singularity at the North Pole by relocating poles to the land masses of northern Canada and northern Russia, in addition to one at the South Pole. See more information about tripolar grids here. The tripolar grid resolution for this milestone is 1/4 degree.

In ESMF, component model execution is split into initialize, run, and finalize methods, and each method can have multiple phases. The run sequence specifies the order in which model component phases are called by a driver.

To complete a run, a sequential cold start run sequence generates an initial set of surface fluxes using a minimum set of files. The system is then restarted using a warm start run sequence, with CMEPS reading in the initial fluxes and the atmosphere, ocean, and ice components reading in their initial conditions.

The cold start sequence initializes all model components using only a few files and only needs to run for an hour. However, a longer initial run can also be used to restart the model.

The cold start run sequence below shows an outer (slow) loop and an inner (fast) loop, each associated with a coupling interval. Normally the inner loop has a shorter coupling interval than the outer loop, but for this milestone both loops were set to the same coupling interval, 1800 seconds. In general, the outer loop coupling interval must be a multiple of the inner loop interval.

An arrow (->) indicates that data is being transferred from one component to another during that step. CMEPS is shown as MED, FV3GFS as ATM, CICE5 as ICE, and MOM6 as OCN. Where a component name (e.g., ATM), or a component name and a specific phase (e.g., MED med_phases_prep_atm), appears in the run sequence, that is the point in the sequence at which the component method or phase is run.

runSeq::
@1800
  @1800
    MED med_phases_prep_atm
    MED -> ATM :remapMethod=redist
    ATM
    ATM -> MED :remapMethod=redist
    MED med_phases_prep_ice
    MED -> ICE :remapMethod=redist
    ICE
    ICE -> MED :remapMethod=redist
    MED med_fraction_set
    MED med_phases_prep_ocn_map
    MED med_phases_aofluxes_run
    MED med_phases_prep_ocn_merge
    MED med_phases_prep_ocn_accum_fast
    MED med_phases_history_write
    MED med_phases_profile
  @
  MED med_phases_prep_ocn_accum_avg
  MED -> OCN :remapMethod=redist
  OCN
  OCN -> MED :remapMethod=redist
  MED med_phases_restart_write
@
::

The warm start sequence, shown below, is for the main time integration loop. It is initialized by restart files generated by the cold start sequence. For this milestone the inner and outer coupling intervals are both set to 1800 seconds.

runSeq::
@1800
  MED med_phases_prep_ocn_accum_avg
  MED -> OCN :remapMethod=redist
  OCN
  @1800
    MED med_phases_prep_atm
    MED med_phases_prep_ice
    MED -> ATM :remapMethod=redist
    MED -> ICE :remapMethod=redist
    ATM
    ICE
    ATM -> MED :remapMethod=redist
    ICE -> MED :remapMethod=redist
    MED med_fraction_set
    MED med_phases_prep_ocn_map
    MED med_phases_aofluxes_run
    MED med_phases_prep_ocn_merge
    MED med_phases_prep_ocn_accum_fast
    MED med_phases_history_write
    MED med_phases_profile
  @
  OCN -> MED :remapMethod=redist
  MED med_phases_restart_write
@
::
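Both run sequences above use 1800-second outer and inner coupling intervals (the nested @1800 lines). If you experiment with other values, the requirement that the outer interval be a whole multiple of the inner one can be checked beforehand. The bash function below is a minimal sketch; `check_coupling_intervals` is a hypothetical helper, not part of CIME or CMEPS. It prints the number of inner-loop passes per outer-loop pass, or fails with an error message.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CIME/CMEPS): verify that the outer (slow)
# coupling interval is a whole multiple of the inner (fast) interval.
# Usage: check_coupling_intervals <outer_seconds> <inner_seconds>
check_coupling_intervals() {
  local outer=$1 inner=$2
  if (( outer <= 0 || inner <= 0 )); then
    echo "error: coupling intervals must be positive" >&2
    return 1
  fi
  if (( outer % inner != 0 )); then
    echo "error: outer interval ${outer}s is not a multiple of inner interval ${inner}s" >&2
    return 1
  fi
  # Number of inner-loop passes per outer-loop pass
  echo $(( outer / inner ))
}

check_coupling_intervals 1800 1800   # this milestone: prints 1
```

With the milestone settings each outer-loop pass contains exactly one inner-loop pass; an outer interval of 3600 s with a 1800 s inner interval would give two.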

- The NEMS (NOAA Environmental Modeling System) Mediator is not currently fully conservative when remapping surface fluxes between model grids: a special nearest neighbor fill is used along the coastline to ensure physically realistic values are available in cells where masking mismatches would otherwise leave unmapped values. Although CMEPS has a conservative option and support for fractional surface types, the NEMS nearest neighbor fills were implemented in CMEPS so that the two Mediators would behave similarly. In a future release, the fully conservative option will be enabled.

- The current version of the CIME case control system does not support the JULIAN calendar type, and the default calendar is set to NOLEAP for this milestone. In addition, the model components and the mediator are inconsistent in terms of calendar type, which can be observed by checking the NetCDF time attributes of the model outputs. In a future release of the CMEPS modeling system, the JULIAN calendar will be set as the default, and the calendar type will be made consistent among the model components and the mediator.

- Namelist options in ESMF config files that contain uppercase characters are not handled properly by the current version of the CIME case control system, and xmlchange cannot change the values of those options. This will be fixed in a future release of the CMEPS modeling system.

The tables below show results for tag 0.4.2 (first table) and tag 0.4.1 (second table) on three platforms (Cheyenne/NCAR, Stampede2/XSEDE and Theia/NOAA) for this milestone.

| Initial Conditions | Cheyenne | Theia | Stampede2 |
|---|---|---|---|
| Jan 2012 | Ran 35 days | Ran 29 days* | Ran 35 days |
| Apr 2012 | Ran 35 days | Ran 29 days* | Ran 35 days |
| Jul 2012 | Ran 35 days | Ran 24 days* | Ran 35 days |
| Oct 2012 | Ran 35 days | Ran 24 days* | Ran 35 days |

* timed out at 8 hours due to the job scheduler limit

A model instability was discovered in tag 0.4.1 and has been traced to specific namelist settings.

| Initial Conditions | Cheyenne | Theia | Stampede2 |
|---|---|---|---|
| Jan 2012 | Ran 35 days | Ran 35 days | Ran 35 days |
| Apr 2012 | Not Tested | Not Tested | Died after 2 hours with saturation vapor pressure table overflow |
| Jul 2012 | Not Tested | Not Tested | Died after 23 days with saturation vapor pressure table overflow |
| Oct 2012 | Not Tested | Not Tested | Ran 35 days |

- Cheyenne/NCAR

- Stampede2/XSEDE

- Theia/NOAA

Currently, the UFSCOMP, CMEPS, CIME, MOM6 and CICE repositories are public, but FV3GFS is a private GitHub repository that requires additional steps to access. First, create a GitHub account if you do not have one by going to github.com and clicking Sign Up in the upper right. Once you have a GitHub account, please send an email here to request access to the FV3GFS repository.

To download, build, and run:

Instructions are shown for the latest 0.4.2 tag. To clone the UFSCOMP umbrella repository and check out its components:

$ git clone https://github.com/ESCOMP/UFSCOMP.git

$ cd UFSCOMP

# To check out the tag for this milestone:

$ git checkout cmeps_v0.4.2

# Check out all model components and CIME

# Note that separate Externals.cfg files for Stampede2 and Theia are no longer needed

# Also note that manage_externals asks for a username and password four times for the FV3GFS repository alone, because it queries information from the private repository

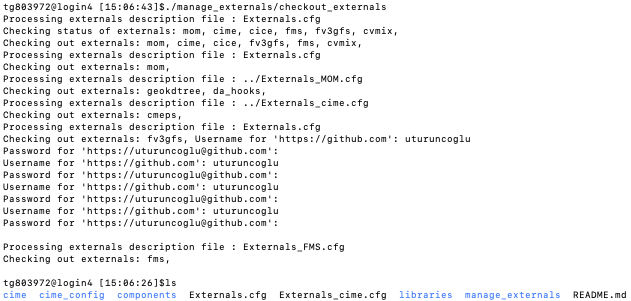

$ ./manage_externals/checkout_externals

| Screenshot for checking out code and the UFSCOMP directory structure |

|---|

|

To create a new case:

# Go to the CIME scripts directory

$ cd cime/scripts

# Set the PROJECT environment variable and replace PROJECT ID with an appropriate project number

# Bourne shell (sh, ksh, bash)

$ export PROJECT=[PROJECT ID]

# C shell (csh, tcsh)

$ setenv PROJECT [PROJECT ID]

# Create UFS S2S case using the name "ufs.s2s.c384_t025" (the user can choose any name)

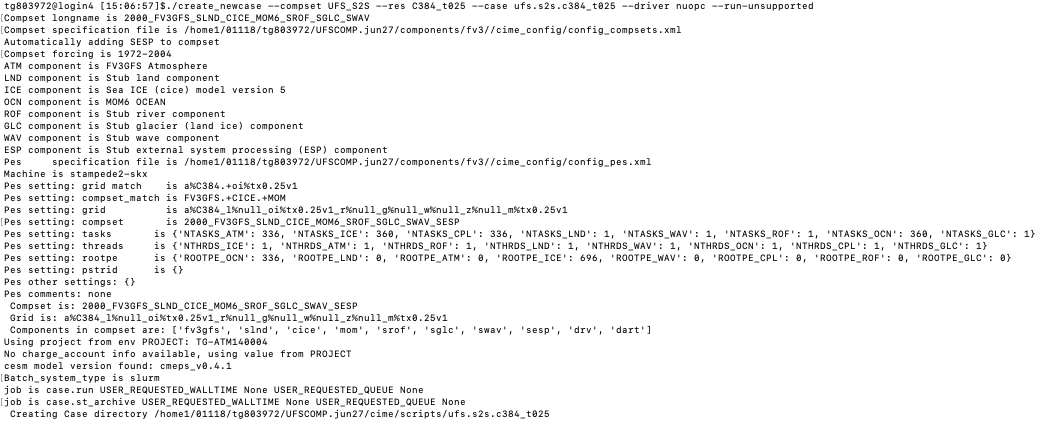

$ ./create_newcase --compset UFS_S2S --res C384_t025 --case ufs.s2s.c384_t025 --driver nuopc --run-unsupported

| Screenshot for creating new case |

|---|

|

To set up the case, change its configuration (e.g., start time, wall-clock limit for the job scheduler, simulation length), and build it:

$ cd ufs.s2s.c384_t025 # this is your "case root" directory selected above, and can be whatever name you choose

$ ./case.setup

# Set start time

$ ./xmlchange RUN_REFDATE=2012-01-01

$ ./xmlchange RUN_STARTDATE=2012-01-01

$ ./xmlchange JOB_WALLCLOCK_TIME=00:30:00 # wall-clock time limit for job scheduler (optional)

$ ./xmlchange USER_REQUESTED_WALLTIME=00:30:00 # to be consistent with JOB_WALLCLOCK_TIME

# Turn off short term archiving

$ ./xmlchange DOUT_S=FALSE

# Set up a 1-hour cold start run to generate the mediator restart file

$ ./xmlchange STOP_OPTION=nhours

$ ./xmlchange STOP_N=1

# To use the correct initial condition for CICE, edit user_nl_cice and add the following line

# ice_ic = "$ENV{UGCSINPUTPATH}/cice5_model.res_2012010100.nc"

# Build case

$ ./case.build # on Cheyenne, use: qcmd -- ./case.build

Screenshot for successful build

To submit the 1-hour cold start run:

$ ./case.submit

# Output appears in the case run directory:

# On Cheyenne:

$ cd /glade/scratch/<user>/ufs.s2s.c384_t025

# On Stampede2:

$ cd $SCRATCH/ufs.s2s.c384_t025

# On Theia:

$ cd /scratch4/NCEPDEV/nems/noscrub/<user>/cimecases/ufs.s2s.c384_t025

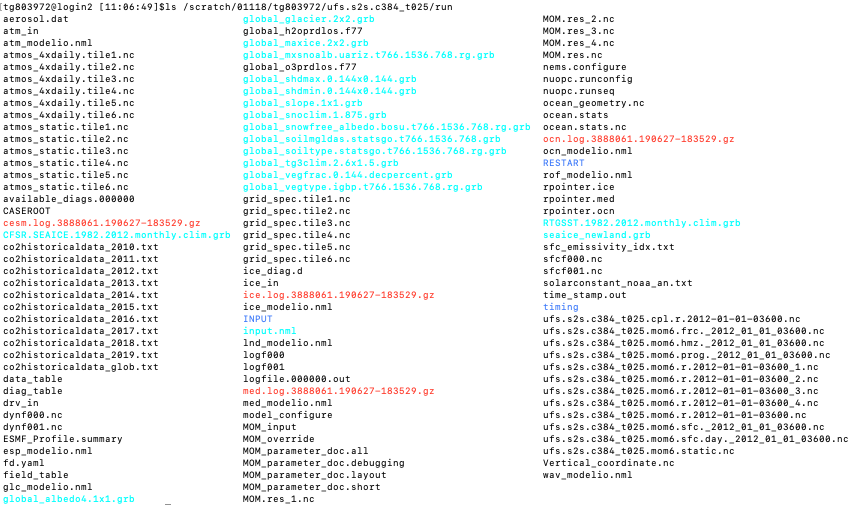

| Screenshot for directory structure of after 1-hour successful run |

|---|

|

Then, model can be restarted using restart file generated by 1-hour simulation,

$ ./xmlchange MEDIATOR_READ_RESTART=TRUE

$ ./xmlchange STOP_OPTION=ndays

$ ./xmlchange STOP_N=1

$ ./case.submit

Other resolutions are not supported in this milestone. The only supported model resolution is C384_t025 (atmosphere: C384 cubed sphere, ~25 km; ocean/ice: 1/4 degree tripolar).

The Persistent Execution Threads (PET) layout affects the overall performance of the system. The following command shows the default PET layout of the case:

$ cd /path/to/UFSCOMP/cime/scripts/ufs.s2s.c384_t025

$ ./pelayout

To change the PET layout temporarily, the xmlchange command is used. For example, the following commands are used to assign a custom PET layout for the c384_t025 resolution case:

$ cd /path/to/UFSCOMP/cime/scripts/ufs.s2s.c384_t025

$ ./xmlchange NTASKS_CPL=648

$ ./xmlchange NTASKS_ATM=648

$ ./xmlchange NTASKS_OCN=360

$ ./xmlchange NTASKS_ICE=360

$ ./xmlchange ROOTPE_CPL=0

$ ./xmlchange ROOTPE_ATM=0

$ ./xmlchange ROOTPE_OCN=648

$ ./xmlchange ROOTPE_ICE=1008

This doubles the default number of PETs used for the ATM component and assigns 648 cores to ATM and CPL (Mediator), 360 cores to OCN and 360 cores to ICE. In this setup, ATM (which shares its PETs with CPL), OCN and ICE run concurrently, with PETs distributed as 0-647 for ATM and CPL, 648-1007 for OCN and 1008-1367 for ICE. Note that the PETs assigned to the ATM component (FV3GFS) also include the IO tasks (write_tasks_per_group), whose default value is 48, so the number of PETs actually used for the ATM computation is 600 (648-48).
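The PET ranges quoted above follow directly from the ROOTPE and NTASKS values: a component occupies PETs ROOTPE through ROOTPE+NTASKS-1. The bash sketch below reproduces them for the custom layout; the `pet_range` function is hypothetical and written only for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CIME): print the PET range "first-last"
# occupied by a component with the given ROOTPE and NTASKS.
pet_range() {
  local rootpe=$1 ntasks=$2
  echo "${rootpe}-$(( rootpe + ntasks - 1 ))"
}

# Custom layout from the xmlchange commands above: name ROOTPE NTASKS
while read -r name rootpe ntasks; do
  echo "${name}: $(pet_range "$rootpe" "$ntasks")"
done <<'EOF'
CPL 0 648
ATM 0 648
OCN 648 360
ICE 1008 360
EOF

# ATM PETs also include the IO (write) tasks; 48 is the default
echo "ATM compute PETs: $(( 648 - 48 ))"
```

Running it prints `CPL: 0-647`, `ATM: 0-647`, `OCN: 648-1007` and `ICE: 1008-1367`, followed by `ATM compute PETs: 600`, matching the description above.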

To change the default PET layout permanently (i.e., for cases created afterwards):

# Go to the main configuration file used to define the PET layout

$ cd /path/to/UFSCOMP/components/fv3/cime_config

# Find the entry for the desired resolution in config_pes.xml and edit its ntasks and rootpe elements

# For example, the entry matching a%C384.+oi%tx0.25v1 defines the default PET layout for the c384_t025 case

The layout namelist option defines the processor layout on each tile, and the number of PETs assigned to the ATM component must equal layout(1)*layout(2)*ntiles + write_tasks_per_group*write_groups. Here, layout(1) is the number of sub-regions in the X direction and layout(2) the number in the Y direction, which together define the two-dimensional decomposition of each tile. For the cubed sphere, ntiles is 6, one tile for each face of the cube.
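As a quick arithmetic check of this formula, the bash sketch below computes the required ATM PET count. The function name `atm_pets` is hypothetical, and the example calls assume write_groups = 1, which this page does not state.

```bash
#!/usr/bin/env bash
# Hypothetical helper: PETs needed by the ATM component (FV3GFS) following
# layout(1)*layout(2)*ntiles + write_tasks_per_group*write_groups.
# ntiles is fixed at 6, one tile per cube face.
atm_pets() {
  local layout1=$1 layout2=$2 write_tasks_per_group=$3 write_groups=$4
  local ntiles=6
  echo $(( layout1 * layout2 * ntiles + write_tasks_per_group * write_groups ))
}

# Assumption: write_groups = 1
atm_pets 6 8 48 1     # default 6x8 layout with 48 IO tasks: prints 336
atm_pets 6 12 48 1    # layout = 6 12 with 48 IO tasks: prints 480
atm_pets 10 10 48 1   # 600 compute PETs + 48 IO tasks: prints 648
```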

To change the default layout option (6x8):

$ cd /path/to/UFSCOMP/cime/scripts/ufs.s2s.c384_t025

# Add the following line to user_nl_fv3gfs

layout = 6 12

This sets layout(1) to 6 and layout(2) to 12, so the total number of PETs used by the FV3GFS model is 6*12*6 = 432 (excluding the PETs used for IO).

Also note that if the layout namelist option is not provided by the user using user_nl_fv3gfs, then the CIME case control system will automatically calculate appropriate layout options by considering the total number of PETs assigned to ATM component and to the IO tasks (write_tasks_per_group*write_groups). In this case, the CIME case control system will define the layout option to have as close to a square two-dimensional decomposition as possible.
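This page does not describe how CIME picks the near-square layout, so the following is only a sketch of one way to do it, not CIME's actual implementation: subtract the IO tasks, split the remaining PETs evenly over the 6 tiles, and choose the divisor pair of the per-tile count whose factors are closest together. `square_layout` is a hypothetical name; the IO count passed in should be write_tasks_per_group*write_groups.

```bash
#!/usr/bin/env bash
# Hypothetical sketch (NOT the actual CIME algorithm): choose a per-tile
# layout "layout1 layout2" that is as close to square as possible.
# Usage: square_layout <NTASKS_ATM> <io_tasks>
square_layout() {
  local ntasks=$1 io=$2 ntiles=6
  local compute=$(( ntasks - io ))
  if (( compute <= 0 || compute % ntiles != 0 )); then
    echo "error: $compute compute PETs cannot be split evenly over $ntiles tiles" >&2
    return 1
  fi
  local per_tile=$(( compute / ntiles ))
  # Integer square root of per_tile
  local a=1
  while (( (a + 1) * (a + 1) <= per_tile )); do
    a=$(( a + 1 ))
  done
  # Largest divisor not exceeding the square root gives the most square pair
  while (( per_tile % a != 0 )); do
    a=$(( a - 1 ))
  done
  echo "$a $(( per_tile / a ))"
}

square_layout 648 48   # 600 compute PETs, 100 per tile: prints "10 10"
square_layout 336 48   # 288 compute PETs, 48 per tile: prints "6 8"
```

Searching downward from the integer square root means the first divisor found pairs with the smallest possible cofactor, which minimizes the difference between layout(1) and layout(2).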

In the current version of the CMEPS modeling system, FV3GFS namelist options are handled by CIME.

To change the default number of IO tasks (write_tasks_per_group, 48):

$ cd /path/to/UFSCOMP/cime/scripts/ufs.s2s.c384_t025

# Add the following line to user_nl_fv3gfs

write_tasks_per_group = 72

This will increase the IO tasks by 50% over the default (48+24=72).

CIME calculates and modifies the FV3GFS layout namelist parameter (input.nml) automatically.

For a new case, pass the --walltime parameter to create_newcase. For example, to set the default job time to 20 minutes, use this command:

$ ./create_newcase --compset UFS_S2S --res C384_t025 --case ufs.s2s.c384_t025.tw --driver nuopc --run-unsupported --walltime=00:20:00

For an existing case, the xmlchange command can be used to change the wall-clock time and the job submission queue:

# the following commands set the job time to 20 minutes

$ ./xmlchange JOB_WALLCLOCK_TIME=00:20:00

$ ./xmlchange USER_REQUESTED_WALLTIME=00:20:00

# the following command changes the queue from regular to premium on NCAR's Cheyenne system

$ ./xmlchange JOB_QUEUE=premium

The current version of the coupled system includes two restart options to support a variety of applications:

- Option 1: Restarting only Mediator component (MEDIATOR_READ_RESTART)

- Option 2: Restarting all components including Mediator (CONTINUE_RUN)

Option 1:

To run the system concurrently, an initial set of surface fluxes must be available to the Mediator. This capability was implemented to match the existing protocol used in the UFS. The basic procedure is to (1) run a cold start run sequence for one hour so the Mediator can write out a restart file, and then (2) run the system again with the Mediator set to read this restart file (containing surface fluxes for the first time step) while the other components read in their original initial conditions. The following commands restart the coupled system with only the Mediator reading in a restart file.

$ ./xmlchange MEDIATOR_READ_RESTART=TRUE

$ ./xmlchange STOP_OPTION=ndays

$ ./xmlchange STOP_N=1

$ ./case.submit

As with the CONTINUE_RUN option, the modeling system uses the warm start run sequence when MEDIATOR_READ_RESTART is set to TRUE, so that the model components run concurrently.

Option 2:

In this case, the coupled system should first be run with the cold start run sequence for a forecast period of at least 1 hour (more precisely, two slow coupling time steps) before restarting. To restart the model, resubmit the case after the previous run completes, with the CONTINUE_RUN XML option set to TRUE using xmlchange.

$ cd /path/to/UFSCOMP/cime/scripts/ufs.s2s.c384_t025 # back to your case root

$ ./xmlchange CONTINUE_RUN=TRUE

$ ./xmlchange STOP_OPTION=ndays

$ ./xmlchange STOP_N=1

$ ./case.submit

In this case, the modeling system will use the warm start run sequence which allows concurrency.

The input file directories are set in the XML file /path/to/UFSCOMP/cime/config/cesm/machines/config_machines.xml. Note that this file is divided into sections, one for each supported platform (machine). This file sets the following three environment variables:

- UGCSINPUTPATH: directory containing initial conditions

- UGCSFIXEDFILEPATH: fixed files for FV3GFS such as topography, land-sea mask and land use types for different model resolutions

- UGCSADDONPATH: fixed files for mediator such as grid spec file for desired FV3GFS model resolution

The entries for each platform are found under the machine XML element. For example, the <machine MACH="cheyenne"> element contains the entries specific to Cheyenne.

The default directories for Cheyenne, Stampede2 and Theia are as follows:

Cheyenne:

<env name="UGCSINPUTPATH">/glade/work/turuncu/FV3GFS/benchmark-inputs/2012010100/gfs/fcst</env>

<env name="UGCSFIXEDFILEPATH">/glade/work/turuncu/FV3GFS/fix_am</env>

<env name="UGCSADDONPATH">/glade/work/turuncu/FV3GFS/addon</env>

Stampede2:

<env name="UGCSINPUTPATH">/work/06242/tg855414/stampede2/FV3GFS/benchmark-inputs/2012010100/gfs/fcst</env>

<env name="UGCSFIXEDFILEPATH">/work/06242/tg855414/stampede2/FV3GFS/fix_am</env>

<env name="UGCSADDONPATH">/work/06242/tg855414/stampede2/FV3GFS/addon</env>

Theia:

<env name="UGCSINPUTPATH">/scratch4/NCEPDEV/nems/noscrub/Rocky.Dunlap/INPUTDATA/benchmark-inputs/2012010100/gfs/fcst</env>

<env name="UGCSFIXEDFILEPATH">/scratch4/NCEPDEV/nems/noscrub/Rocky.Dunlap/INPUTDATA/fix_am</env>

<env name="UGCSADDONPATH">/scratch4/NCEPDEV/nems/noscrub/Rocky.Dunlap/INPUTDATA/addon</env>
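Before submitting, it can help to confirm that these directories exist on your platform. The bash function below is a hypothetical pre-run check, not part of CIME; it assumes the three variables have been exported in your shell, for example by copying the values for your machine from config_machines.xml.

```bash
#!/usr/bin/env bash
# Hypothetical pre-run check: confirm that the three input directory
# variables are set and point to existing directories.
check_input_paths() {
  local var dir status=0
  for var in UGCSINPUTPATH UGCSFIXEDFILEPATH UGCSADDONPATH; do
    dir=${!var:-}            # bash indirect expansion: value of the variable named by $var
    if [[ -z $dir ]]; then
      echo "$var is not set" >&2
      status=1
    elif [[ ! -d $dir ]]; then
      echo "$var=$dir does not exist" >&2
      status=1
    else
      echo "$var=$dir OK"
    fi
  done
  return "$status"
}
```

For example, on Cheyenne you could export UGCSINPUTPATH=/glade/work/turuncu/FV3GFS/benchmark-inputs/2012010100/gfs/fcst (and the other two paths listed above) and then run `check_input_paths`; a nonzero return status means at least one directory is missing.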

There are four different initial conditions for the C384/0.25 degree resolution available with this release, each based on CFS analyses: 2012-01-01, 2012-04-01, 2012-07-01 and 2012-10-01.

There are several steps to modify the initial condition of the coupled system:

1. Update the UGCSINPUTPATH variable to point to the desired directory containing the initial conditions:

   - Make the change in /path/to/UFSCOMP/cime/config/cesm/machines/config_machines.xml. This sets the default path used for all new cases created after the change.

   - In an existing case, make the change in env_mach_specific.xml in the case root directory. This only affects that single case.

2. Update the ice namelist to point to the correct initial condition file:

   - The ice component must be updated manually to point to the correct initial conditions file. To do so, modify the user_nl_cice file in the case root and set ice_ic to the full path of the ice initial conditions file. For example, for initial conditions for date 2012010100, user_nl_cice would look like this:

     !----------------------------------------------------------------------------------
     ! Users should add all user specific namelist changes below in the form of
     ! namelist_var = new_namelist_value
     ! Note - that it does not matter what namelist group the namelist_var belongs to
     !----------------------------------------------------------------------------------
     ice_ic = "$ENV{UGCSINPUTPATH}/cice5_model.res_2012010100.nc"

3. Modify the start date of the simulation:

   - The start date of the simulation needs to be set to the date of the new initial condition. This is done using xmlchange in your case root directory; CIME then updates the date-related namelist options in model_configure automatically. For example:

     $ ./xmlchange RUN_REFDATE=2012-01-01

     $ ./xmlchange RUN_STARTDATE=2012-01-01
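Steps 2 and 3 both derive from the initial condition date, so the corresponding settings can be generated together. The bash function below is a hypothetical dry-run helper: it only prints the ice_ic line for user_nl_cice and the xmlchange commands, and it assumes that the CICE initial condition files for the other dates follow the same naming pattern as cice5_model.res_2012010100.nc. Step 1 (UGCSINPUTPATH) still has to be updated by hand.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run helper: print the settings needed to switch to a new
# initial condition date (YYYY-MM-DD). Assumes the CICE file naming pattern
# cice5_model.res_YYYYMMDDHH.nc with HH=00; nothing is modified.
ic_settings() {
  local date=$1
  if [[ ! $date =~ ^[0-9]{4}-[0-9]{2}-[0-9]{2}$ ]]; then
    echo "error: expected YYYY-MM-DD, got '$date'" >&2
    return 1
  fi
  local stamp="${date//-/}00"   # e.g. 2012-04-01 -> 2012040100
  echo "ice_ic = \"\$ENV{UGCSINPUTPATH}/cice5_model.res_${stamp}.nc\""
  echo "./xmlchange RUN_REFDATE=${date}"
  echo "./xmlchange RUN_STARTDATE=${date}"
}

ic_settings 2012-04-01
```

For the April initial conditions this prints `ice_ic = "$ENV{UGCSINPUTPATH}/cice5_model.res_2012040100.nc"` (to be appended to user_nl_cice) followed by the two xmlchange commands to run in the case root.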

The CIME interface of each model component allows manual modification of its namelist options through an individual file: user_nl_cice for CICE, user_nl_mom for MOM, user_nl_fv3gfs for FV3GFS and user_nl_cpl for the Mediator.

Running in debug mode will enable compiler checks and turn on additional diagnostic output including the ESMF PET logs (one for each MPI task). Running in debug mode is recommended if you run into an issue or plan to make any code changes.

In your case directory:

$ ./xmlchange DEBUG=TRUE

$ ./case.build --clean-all

$ ./case.build

The mediator fields (history) can be written to disk to check the fields exchanged among model components. Due to the large volume of data written to disk, it is better to keep the simulation relatively short (e.g., a couple of hours or days).

In your case directory:

$ ./xmlchange HIST_OPTION=nsteps

$ ./xmlchange HIST_N=1

$ ./xmlchange STOP_OPTION=nhours

$ ./xmlchange STOP_N=3

This activates writing history files to disk at every step and limits the simulation length to 3 hours.

After a run completes, the timing information is copied into the timing directory under your case root. The timing summary file has the name cesm_timing.CASE-NAME.XXX.YYY. Detailed timing of individual parts of the system is available in the cesm.ESMF_Profile.summary.XXX.YYY file.

The purpose of the validation is to show that the components used in the UFS Subseasonal-to-Seasonal system (FV3GFS, MOM6, and CICE5), coupled through the CMEPS Mediator (both 0.3 and 0.4 versions), produce physical results similar to those of the same three components coupled through the NEMS Mediator. Because the aim of this milestone is not to validate the CMEPS modeling system scientifically against the NEMS Mediator (labeled NEMS-BM1) and available observations, only the results from the January initial conditions are analyzed and presented. Results from the Theia/NOAA platform are used in this section to be consistent with the 0.3 milestone.

Note that the results in the validation section are for the UFSCOMP cmeps_v0.4.1 tag, which uses flux_convergence=0.01 and flux_max_iteration=5 for the CMEPS mediator and sets h2o_phys to .true. in the FV3GFS namelist file.

Validation results are not available. Documentation for these results will likely fall under milestone CMEPS v0.5.

Software environment used:

| | Cheyenne/NCAR | Stampede2/XSEDE | Theia/NOAA |
|---|---|---|---|
| Compiler | intel/19.0.2 | intel/18.0.2 | intel/18.0.1.163 |
| MPI | mpt/2.19 | impi/18.0.2 | impi/5.1.1.109 |
| NetCDF | netcdf-mpi/4.6.3 | parallel-netcdf/4.3.3.1 | netcdf/4.3.0 |
| PNetCDF | pnetcdf/1.11.1 | pnetcdf/1.11.0 | pnetcdf |
| ESMF | ESMF 8.0.0 bs38 | ESMF 8.0.0 bs38 | esmf/8.0.0bs38 |

The comparison of the simulated sea ice concentration (Fig. 1a-b), thickness (Fig. 2a-b) and total ice area (Fig. 3a-b) for the NEMS-BM1, CMEPS-BM1 (v0.3) and CMEPS-BM1 (v0.4) simulations is given in this section. In general, CMEPS-BM1 (v0.4) reproduces the main characteristics and spatial distribution of the sea ice and its evolution in time in both the Northern and Southern Hemispheres when compared with NEMS-BM1 and CMEPS-BM1 (v0.3). Since the mediator is the only component modified in this milestone, the minor differences in the results are attributed to the changes and improvements in the mediator itself.

Figure 1a: Comparison of spatial distribution of ice concentration (aice) in the Northern Hemisphere on the last day of the simulation (2012-01-30) for the run with January 2012 initial conditions.

Figure 1b: Comparison of spatial distribution of ice concentration (aice) in the Southern Hemisphere on the last day of the simulation (2012-01-30) for the run with January 2012 initial conditions.

Figure 2a: Comparison of spatial distribution of ice thickness (hi) in the Northern Hemisphere on the last day of the simulation (2012-01-30) for the run with January 2012 initial conditions.

Figure 2b: Comparison of spatial distribution of ice thickness (hi) in the Southern Hemisphere on the last day of the simulation (2012-01-30) for the run with January 2012 initial conditions.

The total ice area time series indicate that CMEPS-BM1 (v0.4) has temporal behavior similar to the NEMS-BM1 and CMEPS-BM1 (v0.3) runs (Fig. 3a-b). CMEPS-BM1 (v0.4) and NEMS-BM1 also agree more closely in terms of simulated total ice area in the Southern Hemisphere (Fig. 3b). On the other hand, CMEPS-BM1 (v0.4) slightly overestimates total ice area in the Northern Hemisphere (Fig. 3a) compared with NEMS-BM1 and CMEPS-BM1 (v0.3).

Figure 3a: Comparison of total ice area time series in the Northern Hemisphere for January 2012 initial conditions.

Figure 3b: Comparison of total ice area time series in the Southern Hemisphere for January 2012 initial conditions.

As part of the analysis of the CMEPS-BM1 simulations, both spatial and temporal analyses of the SST field were performed. Figures 4 and 5 show the SST anomaly maps derived from the results of the NEMS-BM1 and CMEPS-BM1 (v0.3 and v0.4) simulations for 30 January 2012. As can be seen from the figures, the NEMS-BM1 and CMEPS-BM1 (v0.4) simulations are consistent, and there is no systematic spatial bias between them. In general, the bias between the model simulations is less than 0.5 °C. The comparison of the CMEPS-BM1 v0.3 and v0.4 simulations shows a similar bias pattern, slightly stronger than in the comparison with NEMS-BM1.

Figure 4: SST map of NEMS-BM1 and CMEPS-BM1 v0.4 for 30 January 2012.

Figure 5: SST map of CMEPS-BM1 v0.4 and CMEPS-BM1 v0.3 for 30 January 2012.

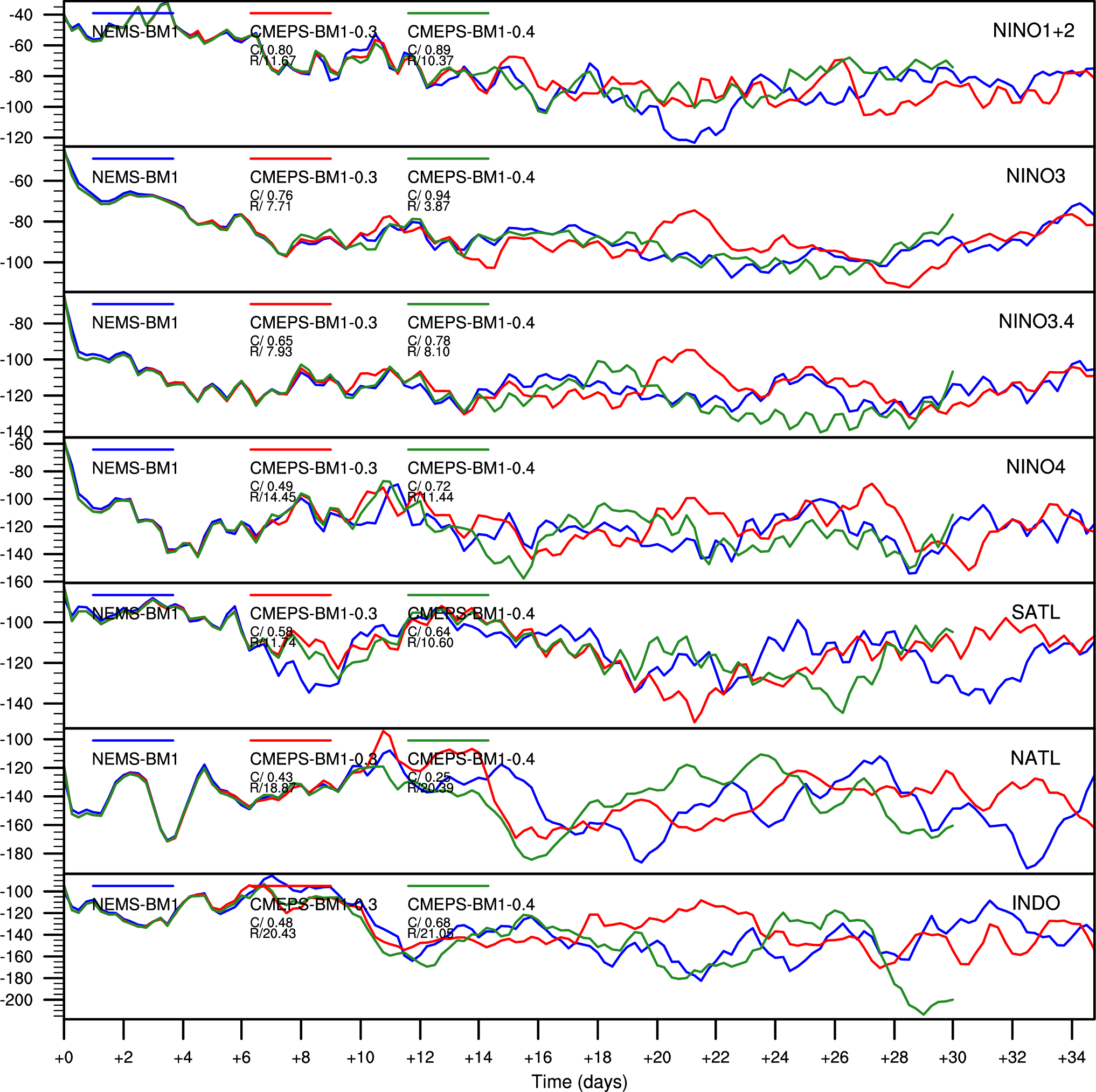

Figure 6 shows the evolution of SST for each defined sub-region (see Figure 4 on the 0.3 milestone page). The results of the CMEPS-BM1 simulations are very consistent with the results of the NEMS-BM1 simulation. In addition, the temporal correlation increases slightly in almost all sub-regions (except NINO3.4) with CMEPS-BM1 (v0.4) compared with CMEPS-BM1 (v0.3). Along with the improvement in temporal correlation, the RMSE is also reduced in the CMEPS-BM1 (v0.4) simulation.

Figure 6: SST time-series for January 2012 initial conditions.

The initial analysis of the surface heat flux components (shortwave and longwave radiation, latent and sensible heat fluxes) is summarized in Figures 7 and 8. The comparison of NEMS-BM1 and CMEPS-BM1 (v0.4) indicates that CMEPS-BM1 (v0.4) reproduces the spatial distribution of the surface flux components with slight region-dependent negative and positive biases (Fig. 7). The largest difference is seen in the latent heat flux over the ocean, which might be caused by the bias in the SST field. Moreover, the bias in shortwave radiation is very small at the beginning of the simulation (not shown here) and starts to increase with time. This might be caused by changes in atmospheric clouds, but a detailed analysis of the atmospheric model output is required to reveal the actual source of the bias; this will be done in future releases of the CMEPS modeling system. Additionally, the difference between the CMEPS-BM1 v0.3 and v0.4 runs shows a very similar spatial and temporal bias pattern (Fig. 8).

Figure 7: Map of surface heat flux components of NEMS-BM1 and CMEPS-BM1 v0.4 for 30 January 2012.

Figure 8: Map of surface heat flux components of CMEPS-BM1 v0.4 and CMEPS-BM1 v0.3 for 30 January 2012.

In addition to the spatial maps, Figures 9a-d show the temporal pattern of the individual surface heat flux components averaged over pre-defined sub-regions (see Figure 4 on the 0.3 milestone page). The comparison of NEMS-BM1, CMEPS-BM1 (v0.3) and CMEPS-BM1 (v0.4) indicates that all the simulations are consistent in the first half of the simulation (first 15 days), after which the internal variability of the modeling system starts to dominate the solution. Note also that there is no systematic difference between the CMEPS-BM1 (v0.3) and CMEPS-BM1 (v0.4) simulations, and the calculated biases and RMSE strongly depend on the region studied.

Figure 9a: Time-series of shortwave radiation for January 2012 initial conditions.

Figure 9b: Time-series of longwave radiation for January 2012 initial conditions.

Figure 9c: Time-series of latent heat for January 2012 initial conditions.

Figure 9d: Time-series of sensible heat for January 2012 initial conditions.