
Homework Dan ‐ Thursday

| Shiffman Section Info | |
|---|---|
| Meeting Time | Thurs 12:10pm - 2:40pm EST |
| Location | 409 |
| Contact | [email protected] |
| Office Hours | (check email for calendar link) |
| Additional Help | Resident office hours (schedule); The Coding Lab (schedule or drop-in help); How to ask code-related questions: examples |

Final project presentations can be demonstrations and do not require a slide deck; however, you might find slides useful for planning and structuring your demo and discussion. Plan for about 5 minutes! Here is a randomized order; please add your documentation link next to your name!

- 👋 Hi!

- Fay - Final

- Georgia - Kaleidoscope1, Kaleidoscope2

- Sao - Flock of Particle

- Sofia - You don't have to read it blog

- Shun - Hello World

- Muqing - sketch, Blog post

- Linna -

- Sangyu - Christmasy Photo Booth

- ☕️ BREAK 🍹

- Michal - Narrative principles

- Wallis - Updated blog

- Kyrie - Flora

- Leijing -

- Fiona - hugggy

- Parth - Finals

- Iris - Finals

- ❤️ Thank you! ❤️

- Complete your end of semester sketch and prepare to present in class next week! See Week 5 below for guidelines.

- Prepare (which means rehearse!) a 5-minute presentation that demonstrates what your project does and emphasizes its computational aspects. A resource that might help: How to create a first-person perspective demo of your project.

- Post documentation in the form of a blog post. It's up to you to decide how best to document your project; here are some loose guidelines if you aren't sure what to include.

  - Title

  - Sketch link

  - One-sentence description

  - Project summary (250-500 words)

  - Inspiration: How did you become interested in this idea?

  - Audience/Context: Who is this for? How will people experience it?

  - Code references: What examples, tutorials, and references did you use to create the project?

  - Next steps: If you had more time to work on this, what would you do next?

- Additional Reading and Resources

- String documentation

- Text and Drawing Worksheet

- Examples from class: text(), charAt(), substring(), split()

- 🔗 SPEECH COMPARISON by Rune Madsen

- 🔗 Book-Book by Sarah Groff-Palermo

- 🔗 Word Tree by Martin Wattenberg and Fernanda Viegas

- 🔗 Entangled Word Bank by Stephanie Posavec
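The string functions in the class examples (charAt(), substring(), split()) are built into JavaScript itself, so they behave the same inside a p5.js sketch and anywhere else. Here is a small sketch using a made-up phrase; in p5.js you would pass the results to text() to draw them instead of logging them.

```javascript
// A made-up phrase to take apart.
const phrase = "The quick brown fox";

// charAt(i) returns the single character at index i (indices start at 0).
const first = phrase.charAt(0);

// substring(start, end) returns the characters from start up to, but not including, end.
const word = phrase.substring(4, 9);

// split(" ") breaks the string into an array wherever a space appears.
const words = phrase.split(" ");

console.log(first, word);
// In a p5.js sketch you might draw each word with text(words[i], x, y) instead.
for (let i = 0; i < words.length; i++) {
  console.log(i, words[i]);
}
```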

Your "end of semester sketch" is a two-week assignment to be completed in two stages (planning and presenting). Your work should build on or be inspired by any of the concepts we've covered this semester. Feel free to think non-traditionally: projects do not need to be screen-based, and there is no requirement to use a particular aspect of JavaScript or programming.

Final projects can be collaborations with anyone in any class. Final projects can be a part of a larger project from one of your other classes (like PCOMP or Hypercinema).

You may also expand on a previous assignment (from any week this semester) or create something new, for example, a "word counting" visualization inspired by the material from this week.
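If you go the "word counting" route, the core of such a visualization is a frequency table. A minimal sketch, assuming the text is already a single string (in p5.js, loadStrings() gives you an array of lines that you could join(" ") first); the function names countWords and rankWords are hypothetical, chosen for illustration.

```javascript
// Count how often each word appears. A Map avoids surprises with words like "constructor"
// that would collide with built-in properties of a plain {} object.
function countWords(text) {
  const counts = new Map();
  // Lowercase, then split on anything that is not a letter, digit, or apostrophe.
  const tokens = text.toLowerCase().split(/[^a-z0-9']+/);
  for (const token of tokens) {
    if (token === "") continue; // skip empty pieces left by leading/trailing punctuation
    counts.set(token, (counts.get(token) || 0) + 1);
  }
  return counts;
}

// Return [word, count] pairs sorted from most to least frequent.
function rankWords(counts) {
  return [...counts.entries()].sort((a, b) => b[1] - a[1]);
}

const counts = countWords("The cat saw the other cat. The end!");
const ranked = rankWords(counts);
// The first entry is the most frequent word together with its count.
console.log(ranked[0]);
```

The regular expression only treats unaccented a-z letters as word characters, which is a deliberate simplification; text in other languages would need a different tokenizing rule. The sorted pairs map naturally onto a bar chart or onto text sizes in a sketch.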

For next class, please write a short post proposing a sketch you'd like to present in the final class. You can document your idea any way you like, but here are some prompts to help you get started.

- In one sentence, what is it you hope to create?

- What topics, assignments, or other material from this semester are you drawing from? What other inspirations, resources, references do you hope to draw from?

- What challenges do you expect to face? What are you unsure of (technically or conceptually)?

- Who is this for? (What is your audience?)

- name -- [blog title](url to blog)

- Linna -- word counting 75%, proposal: a continuation on visualizing the word count data

- Michal -- Blog

- Sofia -- nothing much, just for fun, read it or not blog

- Sangyu -- Blog

- Wallis -- blog, sketch

- Parth -- Blog

Additional Reading and Resources

- 🚂 Data and APIs Playlist

- 🚂 The Climate Spiral

- 🚂 Mapping Earthquake Data

- 🚂 Wikipedia API

- 📕 Loading external data with p5.js

Load "external" data in a p5.js sketch. You may use data in any format, whether a file uploaded to the web editor or data from an API. Use loadJSON() or loadTable() depending on the format. Some possibilities:

- Look through Corpora and download a JSON file for use in a p5.js sketch.

- Collect your own data and create a JSON or CSV file!

- Use any of the APIs from this example collection. Some require you to sign up for an API key, and some you can use without one.

- More data sources to explore, add to this!

- Try an API not listed!
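In a p5.js sketch, loadJSON() called in preload() hands you an already-parsed object, and loadTable(path, "csv", "header") hands you a p5.Table. The sketch below imitates both outside the browser so the data-handling steps are visible: the JSON and CSV text are hypothetical sample data (not real Corpora or API content), and the CSV split is deliberately naive, so it does not handle quoted fields that contain commas.

```javascript
// Hypothetical stand-in for a Corpora-style JSON file. In p5.js, loadJSON("animals.json")
// in preload() would give you an object shaped like `data`.
const jsonText = `{
  "description": "A few animals (hypothetical sample data)",
  "animals": ["aardvark", "bison", "capybara"]
}`;
const data = JSON.parse(jsonText);

// Pick a random entry; in p5.js, random(data.animals) does the same thing.
const pick = data.animals[Math.floor(Math.random() * data.animals.length)];
console.log(data.description, "->", pick);

// Hypothetical CSV with a header row. loadTable() would parse this for you;
// here each line is split on commas and turned into an object keyed by column name.
const csvText = "city,temperature\nNew York,4\nCairo,21";
const [headerLine, ...rowLines] = csvText.trim().split("\n");
const headers = headerLine.split(",");
const rows = rowLines.map((line) => {
  const values = line.split(",");
  const row = {};
  headers.forEach((h, i) => (row[h] = values[i]));
  return row;
});

// CSV values arrive as strings, so convert numbers explicitly before doing math.
console.log(rows[1].city, Number(rows[1].temperature));
```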

Write a "data biography" (thank you to Ellen Nickles for this term) for your data. Have the maintainers of this dataset or API made this information easily available? Here is a selection of questions to consider, inspired by the readings.

- What is the purpose of this dataset and who created it?

- What type of data is in the dataset and how many items does it contain?

- How was the data collected and what method was used to select the data?

- What are the known limitations, biases, or potential uses of this dataset? What is missing?

- In what types of projects or studies could this dataset be appropriately used?

- name -- [blog title](url to blog), [sketch title](url to sketch) -- any other comments

- Shun -- blog

- Michal -- Blog

- Lejing -- blog cont. previous week

- Fiona -- blog

- Georgia -- blog, Pi, sketch

- Wallis -- NYT sketch, NASA sketch

- Parth -- blog

- 📕 Nature of Code Chapter 3

- 🚂 Simple Harmonic Motion Video

- 🚂 Lissajous Curves

- 🎨 Memo Akten's Simple Harmonic Motion

- 🔊 Web Audio API

- 🎶 Tone.js

- 🎶 Andreas Refsgaard: Sounds from the Mouth, Eye Conductor

- 🎼 Creatability

- 🪩 Maya Man's PoseNet Sketchbook

- 🎙 MAXforum: Art Of Movement Mapping. Artist/Programmer Lisa Jamhoury, Artist/Technologist Mimi Yin, and Neurobiologist Dr. Ryan York discuss the creative constraints and possibilities of working with AI and movement mapping on humans (and other organisms!)

- 💫 Adam Harvey CVDazzle

- 🦎 Connected Worlds

- 🌊 Simple Harmonic Motion: Unit Circle

- 🎹 Oscillator + Synthesizer

- 🖼 createGraphics() and trails

- 👃 ml5.js "next gen" single body part

- 💻 all ml5.js "next generation" examples
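The simple harmonic motion and Lissajous resources above rest on two short formulas. This sketch computes them in plain JavaScript; in a p5.js sketch you would use the built-in sin(), TWO_PI, and frameCount inside draw() rather than Math.sin and a loop. The function names and the default phase of π/2 are illustrative choices, not taken from the linked examples.

```javascript
const TWO_PI = Math.PI * 2;

// Simple harmonic motion: position swings between -amplitude and +amplitude,
// completing one full oscillation every `period` frames.
function harmonicX(frame, amplitude, period) {
  return amplitude * Math.sin((TWO_PI * frame) / period);
}

// Lissajous curve: two perpendicular oscillations with frequencies a and b.
// Integer ratios such as 1:2 or 3:2 trace different closed figures;
// a = b with a phase of PI/2 traces a circle.
function lissajousPoint(t, a, b, radius, phase = Math.PI / 2) {
  return {
    x: radius * Math.sin(a * t + phase),
    y: radius * Math.sin(b * t),
  };
}

// Sample a 120-frame period at each quarter turn.
for (const frame of [0, 30, 60, 90]) {
  console.log(frame, harmonicX(frame, 100, 120).toFixed(1));
}

// A few points along a 3:2 figure.
for (let i = 0; i < 4; i++) {
  const p = lissajousPoint((i / 4) * TWO_PI, 3, 2, 50);
  console.log(p.x.toFixed(1), p.y.toFixed(1));
}
```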

A theremin is an electronic musical instrument controlled without physical contact. It is a quintessential example of how gesture and movement can create sound. Think about how this idea can be extended into the realm of digital interactivity and sound synthesis.

For this assignment, consider how you might create your own version of a theremin, or a similar gesture-controlled sound synthesizer, using p5.sound for sound generation and manipulation and ml5.js for gesture or pose recognition.
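One way to think about the theremin mapping is as a function from a hand position to a frequency and an amplitude. The sketch below assumes you already have an x/y keypoint from an ml5.js model (how you obtain it depends on the model); the helper names are hypothetical. In p5.js you could use the built-in map() and then call freq() and amp() on a p5.Oscillator with the results.

```javascript
// Linear re-mapping, equivalent in spirit to p5.js's map().
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

function clamp(v, lo, hi) {
  return Math.min(hi, Math.max(lo, v));
}

// Horizontal position -> pitch. Interpolating the exponent rather than the frequency
// makes equal hand movements produce equal musical intervals
// (a linear mapping crowds the low notes into a small strip of the screen).
function handToFrequency(x, width, lowHz = 110, highHz = 880) {
  const t = clamp(x / width, 0, 1);
  return lowHz * Math.pow(highHz / lowHz, t);
}

// Vertical position -> volume: top of the canvas loud, bottom silent.
function handToAmplitude(y, height) {
  return clamp(mapRange(y, 0, height, 1, 0), 0, 1);
}

// Hypothetical keypoint in the middle-left of a 640x480 canvas.
console.log(handToFrequency(320, 640).toFixed(1), handToAmplitude(120, 480));
```

The 110-880 Hz range (three octaves) is an arbitrary starting point; try narrower or wider ranges and hear how precise your gestures need to be.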

Experiment with different types of oscillators (sine, square, triangle, sawtooth) and their effects on the sound. How does changing the frequency or amplitude with your gestures alter the sound? What interactions can you create?
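To see how the four oscillator types differ before you hear them, here is a sketch of their idealized shapes over one cycle, plus how frequency and amplitude change a sample. p5.Oscillator generates these waveforms for you through the Web Audio API; this code only computes the textbook shapes (for drawing or comparison) and assumes t is non-negative.

```javascript
// Idealized waveforms at phase p, where 0 <= p < 1 is one cycle; output is in -1..1.
const waveforms = {
  sine: (p) => Math.sin(2 * Math.PI * p),
  square: (p) => (p < 0.5 ? 1 : -1),
  triangle: (p) => (p < 0.25 ? 4 * p : p < 0.75 ? 2 - 4 * p : 4 * p - 4),
  sawtooth: (p) => 2 * p - 1,
};

// Value at time t (seconds): frequency (Hz) sets how fast the phase advances,
// amplitude scales the whole wave up or down.
function sampleAt(type, freq, amp, t) {
  const phase = (freq * t) % 1;
  return amp * waveforms[type](phase);
}

// Print eight evenly spaced points from one cycle of each shape.
for (const type of Object.keys(waveforms)) {
  const points = [];
  for (let i = 0; i < 8; i++) points.push(waveforms[type](i / 8).toFixed(2));
  console.log(type.padEnd(8), points.join(" "));
}
```

The sine is smooth, the square jumps between two values, the triangle ramps linearly, and the sawtooth rises and then drops; the sharper the corners, the more harmonics you hear.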

Document your process and the challenges you faced in a blog post. Include visual and auditory documentation such as screen recordings, videos, or sound clips.

🚨 Remember, this is just one path for your assignment! Feel free to diverge from the idea of a traditional theremin and explore other possibilities around visualizing waves, generating sound, and/or interaction through gesture. 🚨

- name -- [blog title](url to blog), [sketch title](url to sketch) -- any other comments

- Iris -- sketch

- Fay -- Webcam Theremin, sinewave, isSinging, blog

- Sangyu -- sketch, blog

- Sofia -- blog

- Wallis -- sketch

- Sao -- sketch

- Lejing -- blog

- Michal -- Blog

- 📕 A People’s Guide to AI by Mimi Onuoha and Mother Cyborg (Diana Nucera)

- 📕 Chapter 10: Neural Networks from Nature of Code

- 🚂 Beginner's Guide to ml5.js Introduction

- 🚂 Teachable Machine Videos

- 📝 ml5.js "next generation" in progress documentation

- 🚧 ml5.js "next generation" in progress website

- 💻 ml5.js "next generation" repo

- 📜 Andrey Kurenkov's 'Brief' History of Neural Nets and Deep Learning

- 🚂 What is a Convolution (in the context of a "convolutional" neural network)

- 🎥 Kyle McDonald - Weird Intelligence

- 🔎 Emoji Scavenger Hunt

- 📖 ImageNet: The Data That Transformed AI Research—and Possibly the World by Dave Gershgorn (Note: Fei-Fei Li is no longer at Google; she is currently Co-Director of the Stanford Human-Centered AI Institute)

- 🖼 ImageNet Database

- 💻 Teachable Machine Template (with mirrored video)

- 💻 ml5.js "next generation" examples

- 🦥 sloth image processing

- 🎨 random walk painter
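For the "What is a Convolution" resource above, here is a minimal sketch of a 3x3 convolution on a tiny grayscale image stored as a 2D array of brightness values. Like most neural network libraries, it slides the kernel without flipping it (strictly speaking a cross-correlation). Border pixels are left at 0 to keep the code short, and the image and kernel are made-up examples.

```javascript
// Each output pixel is the weighted sum of the 3x3 neighborhood around it.
function convolve(image, kernel) {
  const h = image.length;
  const w = image[0].length;
  const out = Array.from({ length: h }, () => new Array(w).fill(0));
  for (let y = 1; y < h - 1; y++) {
    for (let x = 1; x < w - 1; x++) {
      let sum = 0;
      for (let ky = -1; ky <= 1; ky++) {
        for (let kx = -1; kx <= 1; kx++) {
          sum += image[y + ky][x + kx] * kernel[ky + 1][kx + 1];
        }
      }
      out[y][x] = sum;
    }
  }
  return out;
}

// A vertical-edge kernel: responds where brightness changes from left to right.
const verticalEdge = [
  [-1, 0, 1],
  [-1, 0, 1],
  [-1, 0, 1],
];

// A dark region on the left and a bright region on the right.
const image = [
  [0, 0, 0, 255, 255],
  [0, 0, 0, 255, 255],
  [0, 0, 0, 255, 255],
];

const edges = convolve(image, verticalEdge);
// The flat dark area gives 0; the columns next to the boundary light up.
console.log(edges[1]);
```

In a convolutional network the kernel values are not hand-picked like this; they are learned during training.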

- Read Excavating AI: The Politics of Images in Machine Learning Training Sets by Kate Crawford and Trevor Paglen. Consider the following excerpt from the conclusion:

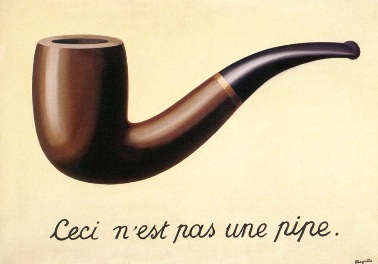

The artist René Magritte completed a painting of a pipe and coupled it with the words “Ceci n’est pas une pipe.” Magritte called the painting La trahison des images, “The Treachery of Images.”

Magritte’s assumption was almost diametrically opposed: that images in and of themselves have, at best, a very unstable relationship to the things they seem to represent, one that can be sculpted by whoever has the power to say what a particular image means. For Magritte, the meaning of images is relational, open to contestation. At first blush, Magritte’s painting might seem like a simple semiotic stunt, but the underlying dynamic Magritte underlines in the painting points to a much broader politics of representation and self-representation.

- Train your own image classifier with Teachable Machine. What works well? What fails? Experiment with different outputs and modes of interaction using the predicted labels.

- Document your work in a blog post: include visual documentation such as a screen recording, video, or GIFs of training the model and working in p5.
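When you build interactions from a classifier's predicted labels, the raw label often flickers from frame to frame. One possible approach is sketched below: ignore low-confidence guesses and take a majority vote over recent frames. It assumes each prediction arrives as an array of { label, confidence } objects sorted best-first, which is the shape ml5.js classification results have used; check the current ml5.js documentation for your model. The LabelSmoother class and its parameters are hypothetical.

```javascript
class LabelSmoother {
  constructor(historySize = 10, minConfidence = 0.8) {
    this.historySize = historySize; // how many recent labels to remember
    this.minConfidence = minConfidence; // guesses below this are ignored
    this.history = [];
  }

  // Record the top prediction from one classification result.
  add(results) {
    const top = results[0];
    if (!top || top.confidence < this.minConfidence) return;
    this.history.push(top.label);
    if (this.history.length > this.historySize) this.history.shift();
  }

  // Majority vote over the remembered labels; null until something has been recorded.
  current() {
    const counts = new Map();
    let best = null;
    let bestCount = 0;
    for (const label of this.history) {
      const c = (counts.get(label) || 0) + 1;
      counts.set(label, c);
      if (c > bestCount) {
        best = label;
        bestCount = c;
      }
    }
    return best;
  }
}

// Hypothetical labels from a hypothetical Teachable Machine model.
const smoother = new LabelSmoother(5, 0.8);
smoother.add([{ label: "thumbs up", confidence: 0.95 }]);
smoother.add([{ label: "thumbs up", confidence: 0.9 }]);
smoother.add([{ label: "wave", confidence: 0.6 }]); // ignored: below the threshold
smoother.add([{ label: "wave", confidence: 0.85 }]);
console.log(smoother.current());
```

In draw(), you could then switch on smoother.current() to trigger a sound, change a color, or move a character. A larger history gives steadier but slower reactions.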

🚨 The above is just one option for your assignment! You may also take inspiration from anything related to images, pixels, or machine learning in the above materials or discussion from class! You are not required to use Teachable Machine! For example, you may prefer to explore the "pose" models in the new ml5.js. You are also welcome to continue to explore image processing and creative visualization of pixel data from last week. 🚨

- name -- [blog title](url to blog), [sketch title](url to sketch) -- any other comments

- Sofia -- Blog

- Parth -- blog

- Shun -- sketch

- Fay -- Musical Instrument

- Lejing -- blog

- Georgia -- blog

- Fiona -- blog / still pixel

- Wallis -- blog

- Michal -- Blog, Hand-pose

Thank you, Shawn Van Every!

Create and/or manipulate an image (or images, or video) to create an alternative to the reality depicted in the source image(s). Describe how the result feels different from the source image(s). Write a blog post documenting your process and result. Work with any kind of imagery you want. Whatever you choose, you might consider these elements of a photograph in your computational approach. Here are a few ideas:

- Create a photobooth with snapshots from a camera. What happens if you slice off sections of an image?

- Create a "painting" system that colors pixels according to an image or video. (Note: the video uses an older syntax without classes for the Particle objects; the accompanying code has been updated to use classes.)

- These "coding challenge" videos all demonstrate various effects with pixels. Most are coded in Processing rather than p5.js, but p5.js ports of the code are available. (If a port is missing, please email me!)

- Pixels Videos

- Chapter 7 (images section) of the Getting Started with p5.js book | Code
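Most of the pixel ideas above come down to reading and writing a flat array of color values. In p5.js, after loadPixels() the pixels[] array stores four values (R, G, B, A) per pixel, row after row, so pixel (x, y) starts at index (x + y * width) * 4, assuming pixelDensity(1). This sketch works on a plain Uint8ClampedArray with that layout so it runs outside the browser; makeImage, toGray, and sliceShift are hypothetical helpers, with sliceShift as one possible take on "slicing off sections" of an image.

```javascript
// Start index of pixel (x, y) in a flat RGBA array.
function pixelIndex(x, y, width) {
  return (x + y * width) * 4;
}

// A blank image buffer with the same layout as p5.js pixels[] (and canvas ImageData).
function makeImage(width, height) {
  return { width, height, pixels: new Uint8ClampedArray(width * height * 4) };
}

// Grayscale: replace R, G, B with their average; alpha is left alone.
function toGray(img) {
  for (let i = 0; i < img.pixels.length; i += 4) {
    const avg = (img.pixels[i] + img.pixels[i + 1] + img.pixels[i + 2]) / 3;
    img.pixels[i] = img.pixels[i + 1] = img.pixels[i + 2] = avg;
  }
}

// Slice effect: horizontal bands of `bandHeight` rows are shifted sideways,
// alternating direction, wrapping around the image edges.
function sliceShift(img, bandHeight, shift) {
  const out = new Uint8ClampedArray(img.pixels.length);
  for (let y = 0; y < img.height; y++) {
    const offset = Math.floor(y / bandHeight) % 2 === 0 ? shift : -shift;
    for (let x = 0; x < img.width; x++) {
      const srcX = (((x + offset) % img.width) + img.width) % img.width;
      const from = pixelIndex(srcX, y, img.width);
      const to = pixelIndex(x, y, img.width);
      for (let c = 0; c < 4; c++) out[to + c] = img.pixels[from + c];
    }
  }
  img.pixels = out;
}

const img = makeImage(4, 2);
img.pixels[pixelIndex(1, 0, img.width)] = 255; // red channel of pixel (1, 0)
sliceShift(img, 1, 1);
// Row 0 moved one pixel to the left, so the red value is now at (0, 0).
console.log(img.pixels[pixelIndex(0, 0, img.width)]);
```

In a sketch you would call loadPixels() on the canvas, image, or video, apply changes like these to its pixels[], and then call updatePixels() to see the result.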

This is much too much to go through, pick and choose what interests you! I'll give an overview of everything in class.

- Teachable Machine Videos

- Beginner's Guide to Machine Learning—we'll be using an updated version of ml5.js that has different features and syntax than what is shown in the video, but the concepts remain the same.

- A People's Guide to AI

- Neural Networks from Nature of Code

- Add questions here!

- name -- [blog title](url to blog), [sketch title](url to sketch) -- any other comments

- Shun -- blog, sketch

- Parth -- blog, sketch

- Sangyu -- blog, sketch

- Lejing -- blog, sketch

- Michal -- Blog, sketch, use your lighter to draw

- Georgia -- Blog, sketch

- Wallis -- Blog, slit-scan capture, slit-scan capture RGB (fail)

- Linna -- pixel art