Releases: JohnSnowLabs/nlu

Releases · JohnSnowLabs/nlu

John Snow Labs NLU 1.0.3 trainable models, offline mode, sentence similarity

1.0.3 Release Notes

We are happy to announce NLU 1.0.3 has been released and comes with a lot new features, training classifiers, saving them and loading them offline, enabling running NLU with no internet connection, new notebooks and articles!

NLU 1.0.3 New Features

- Train a Deep Learning classifier in 1 line! The popular ClassifierDL

which can achieve state of the art results on any multi class text classification problem is now trainable!

All it takes is just nlu.load('train.classifier).fit(dataset) . Your dataset can be a Pandas/Spark/Modin/Ray/Dask dataframe and needs to have a column named 'text" for text data and a column named 'y' for labels - Saving pipelines to HDD is now possible with nlu.save(path)

- Loading pipelines from disk now possible with nlu.load(path=path).

- NLU offline mode: Loading from disk makes running NLU offline now possible, since you can load pipelines/models from your local hard drive instead of John Snow Labs AWS servers.

NLU 1.0.3 New Notebooks and Tutorials

- New colab notebook showcasing NLU training, saving and loading from disk

- Sentence Similarity with BERT, Electra and Universal Sentence Encoder colab notebook

- Sentence Detector Notebook Updated

- New Workshop video

- Sentence Similarity Tutorial on Medium with BERT, ELECTRA and Universal Sentence Encoder Embeddings

NLU 1.0.3 Bug fixes

- Sentence Detector bugfix

nlu1.0.2

We are glad to announce nlu 1.0.2 is released!

NLU 1.0.2 Enhancements

- More semantically concise output levels sentence and document enforced :

- If a pipe is set to output_level='document' :

- Every Sentence Embedding will generate 1 Embedding per Document/row in the input Dataframe, instead of 1 embedding per sentence.

- Every Classifier will classify an entire Document/row

- Each row in the output DF is a 1 to 1 mapping of the original input DF. 1 to 1 mapping from input to output.

- If a pipe is set to output_level='sentence' :

- Every Sentence Embedding will generate 1 Embedding per Sentence,

- Every Classifier will classify exactly one sentence

- Each row in the output DF can is mapped to one row in the input DF, but one row in the input DF can have multiple corresponding rows in the output DF. 1 to N mapping from input to output.

- If a pipe is set to output_level='document' :

- Improved generation of column names for classifiers. based on input nlu reference

- Improved generation of column names for embeddings, based on input nlu reference

- Improved automatic output level inference

- Various test updates

- Integration of CI pipeline with Github Actions

New Documentation is out!

Check it out here : http://nlu.johnsnowlabs.com/

nlu1.0.1

nlu1.0.0

1.0 Release Notes

We are glad to announce that NLU 1.0.0 has been released!

Changes and Updates:

- Automatic to Numpy conversion of embeddings

- Added various testing classes

- Added various new to the NLU namespace Aliases for easier access to models

- Removed irrelevant information from Component Infos

- Integration of Spark NLP 2.6.2 enhancements and bugfixes https://github.com/JohnSnowLabs/spark-nlp/releases/tag/2.6.2

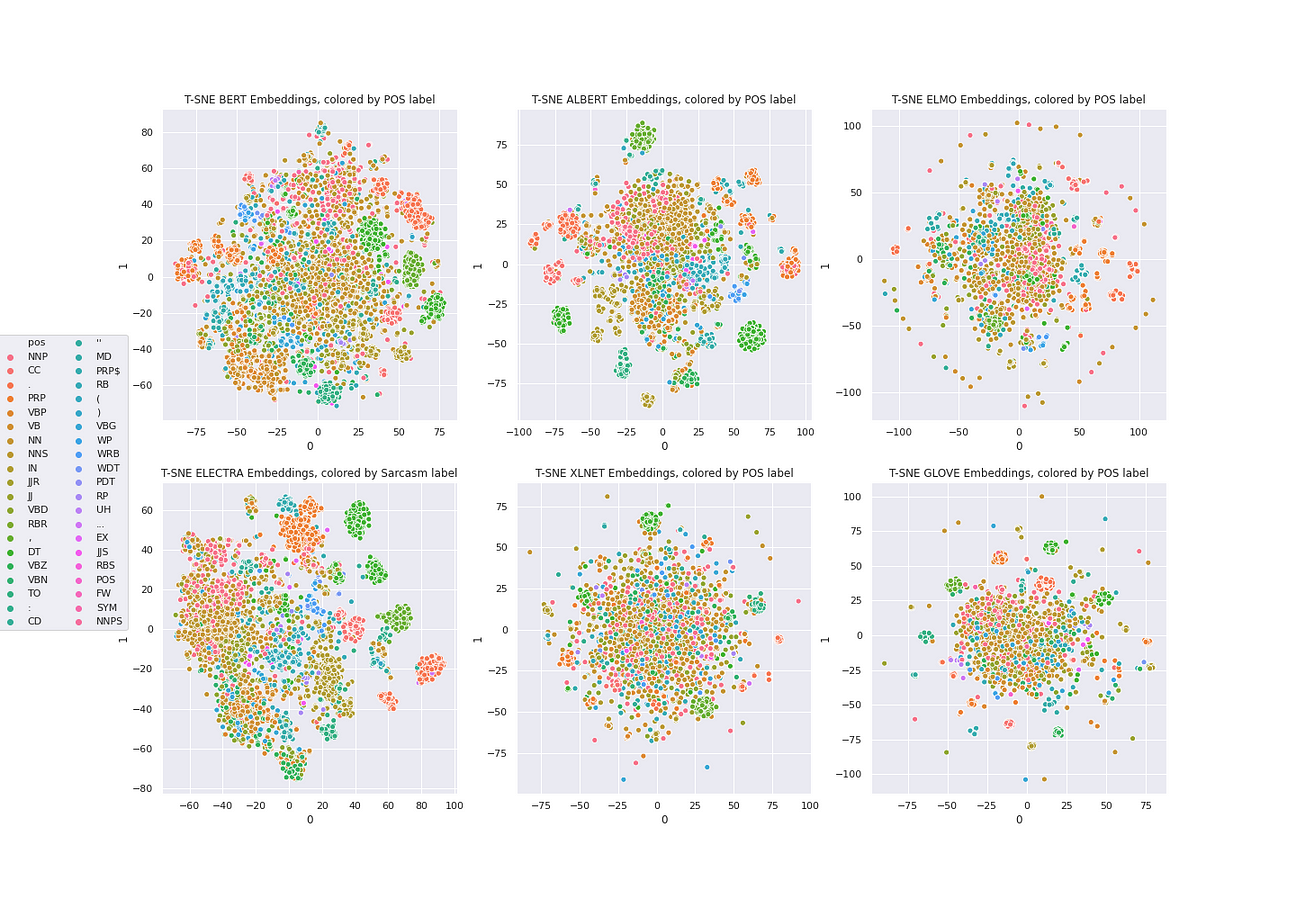

- Updated old T-SNE notebooks with the more elegant and simpler generation of t-SNE embeddings

- New 6 embeddings at once notebook with t-SNE and Medium article

350+ NLP Models in 1 line of Python code with NLU 0.2.1

We are glad to announce that NLU 0.2.1 has been released!

NLU makes the 350+ models and annotators in Spark NLPs arsenal available in just 1 line of python code and it works with Pandas dataframes!

What is included in NLU 0.2.1

- 350+ pre-trained models

- 100+ of the latest NLP word embeddings ( BERT, ELMO, ALBERT, XLNET, GLOVE, BIOBERT, ELECTRA, COVIDBERT) and different variations of them

- 50+ of the latest NLP sentence embeddings ( BERT, ELECTRA, USE) and different variations of them

- 50+ Classifiers

- 50+ Supported languages

- Named Entity Recognition (NER)

- Part of Speech(POS)

- Labeled and Unlabeled Dependency parsing

- Spell Checking

- Various Text Preprocessing and Cleaning algorithms

NLU References

- NLU Website

- NLU Github

- NLU Install

- Overview of all NLU example notebooks

- Overview of almost every 1-liner in NLU

NLU on Medium

- Introduction to NLU

- One line BERT Word Embeddings and t-SNE plotting with NLU

- BERT, ALBERT, ELECTRA, ELMO, XLNET, GLOVE Word Embeddings in one line and plotting with t-SNE

NLU component examples

The following are Collab examples that showcase each NLU component and some applications.

-

Named Entity Recognition (NER) notebooks

-

Part of speech (POS) notebooks

-

Classifiers notebooks

- Unsupervised Keyword Extraction with YAKE

- Toxic Text Classifier

- Twitter Sentiment Classifier

- Movie Review Sentiment Classifier

- Sarcasm Classifier

- 50 Class Questions Classifier

- 20 Class Languages Classifier

- Fake News Classifier

- E2E Classifier

- Cyberbullying Classifier

- Spam Classifier

- Emotion Classifier

-

Word Embeddings notebooks

- BERT Word Embeddings and T-SNE plotting

- ALBERT Word Embeddings and T-SNE plotting

- ELMO Word Embeddings and T-SNE plotting

- XLNET Word Embeddings and T-SNE plotting

- ELECTRA Word Embeddings and T-SNE plotting

- COVIDBERT Word Embeddings and T-SNE plotting

- BIOBERT Word Embeddings and T-SNE plotting

- GLOVE Word Embeddings and T-SNE plotting

-

Sentence Embeddings notebooks

-

Dependency Parsing notebooks

-

Text Pre Processing and Cleaning notebooks

-

Chunkers notebooks

-

Matchers notebooks