InterDim is a Python package for interactive exploration of latent data dimensions. It wraps existing tools for dimensionality reduction, clustering, and data visualization in a streamlined interface, allowing for quick and intuitive analysis of high-dimensional data.

- Easy-to-use pipeline for dimensionality reduction, clustering, and visualization

- Interactive 3D scatter plots for exploring reduced data

- Support for various dimensionality reduction techniques (PCA, t-SNE, UMAP, etc.)

- Multiple clustering algorithms (K-means, DBSCAN, etc.)

- Customizable point visualizations for detailed data exploration
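As a rough illustration of what a pipeline like this wraps, the sketch below chains a reducer and a clusterer directly with scikit-learn. It is not InterDim's implementation: the `REDUCERS` and `CLUSTERERS` tables and the `reduce_and_cluster` helper are hypothetical names invented for this example. UMAP is left out because it lives in the separate `umap-learn` package.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE

# Hypothetical lookup tables (not part of InterDim): name -> scikit-learn class.
REDUCERS = {"pca": PCA, "tsne": TSNE}
CLUSTERERS = {"kmeans": KMeans, "dbscan": DBSCAN}


def reduce_and_cluster(data, reducer="pca", clusterer="kmeans",
                       n_components=3, **cluster_kwargs):
    """Reduce `data` to `n_components` dimensions, then cluster the result."""
    reduced = REDUCERS[reducer](n_components=n_components).fit_transform(data)
    labels = CLUSTERERS[clusterer](**cluster_kwargs).fit_predict(reduced)
    return reduced, labels


iris = load_iris()

# PCA down to 3 dimensions, then density-based clustering with DBSCAN.
# DBSCAN labels noise points as -1, so the number of clusters is not fixed.
reduced, labels = reduce_and_cluster(
    iris.data, reducer="pca", clusterer="dbscan", eps=0.5, min_samples=5
)
print(reduced.shape, sorted(set(labels.tolist())))
```

Keeping the method names in plain dictionaries means that switching techniques is a one-argument change. This is the kind of convenience a wrapper interface aims for.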

You can install from PyPI via pip (recommended):

```bash
pip install interdim
```

Or from source:

```bash
git clone https://github.com/MShinkle/interdim.git
cd interdim
pip install .
```

Here's a basic example using the Iris dataset:

from sklearn.datasets import load_iris

from interdim import InterDimAnalysis

iris = load_iris()

analysis = InterDimAnalysis(iris.data, true_labels=iris.target)

analysis.reduce(method='tsne', n_components=3)

analysis.cluster(method='kmeans', n_clusters=3)

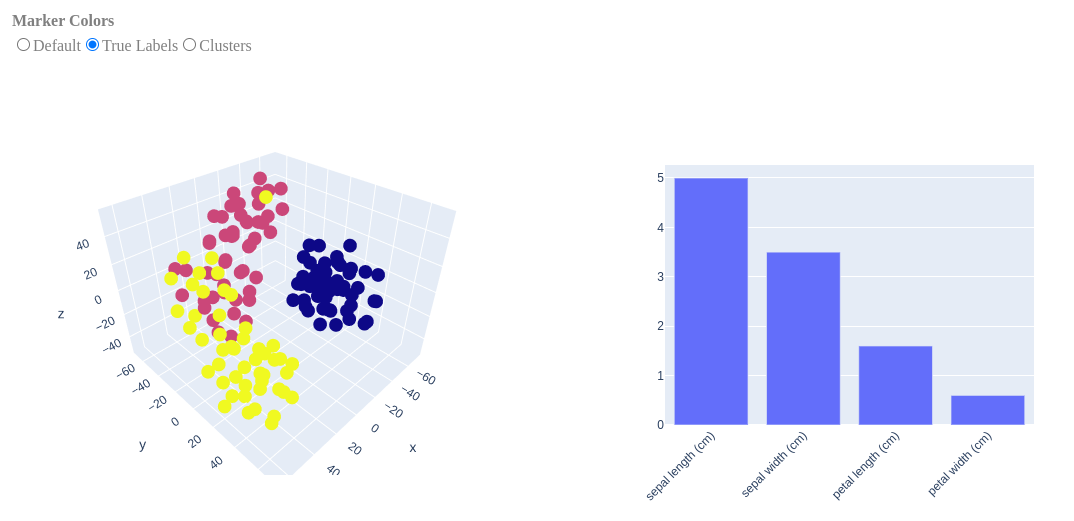

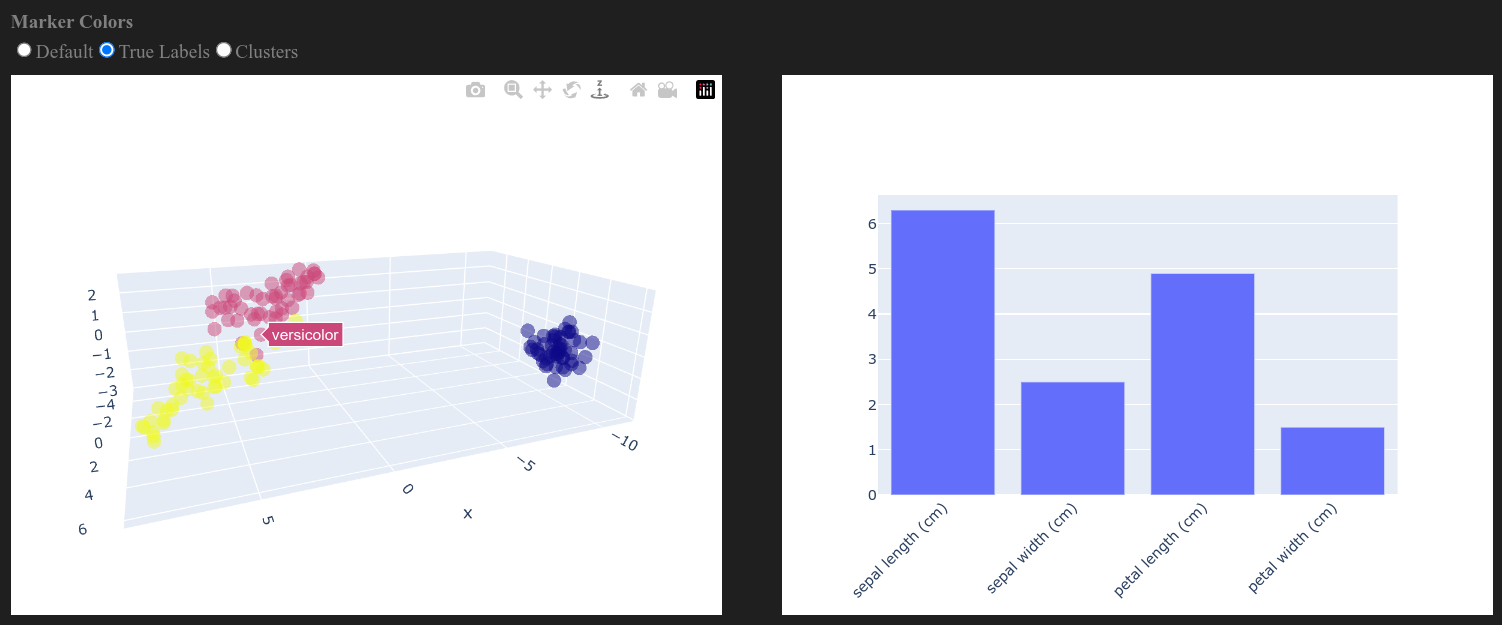

analysis.show(n_components=3, point_visualization='bar')This will reduce the Iris dataset to 3 dimensions using t-SNE, clusters the data using K-means, and displays an interactive 3D scatter plot with bar charts for each data point as you hover over them.
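To check how well the clusters line up with the true species before exploring them interactively, the reduce and cluster steps can be approximated directly with scikit-learn. This is a minimal sketch, not InterDim's own code path: the parameter values (`random_state=0`, `n_init=10`) are assumptions chosen for reproducibility, and the adjusted Rand index is just one possible agreement measure.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.manifold import TSNE
from sklearn.metrics import adjusted_rand_score

iris = load_iris()

# Reduce the 4 Iris features to 3 dimensions with t-SNE.
embedding = TSNE(n_components=3, random_state=0).fit_transform(iris.data)

# Group the embedded points into 3 clusters with K-means.
cluster_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(embedding)

# Adjusted Rand index: 1.0 means the clusters match the species exactly,
# while values near 0 mean agreement no better than chance.
ari = adjusted_rand_score(iris.target, cluster_labels)
print(f"Adjusted Rand index vs. true species: {ari:.2f}")
```

The score is invariant to how the cluster IDs are numbered, so it can be compared across runs even though K-means assigns labels arbitrarily.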

This is just a small example of what you can do with InterDim. You can use it to explore many kinds of data, including high-dimensional data such as language model embeddings.

For more in-depth examples and use cases, check out our demo notebooks:

-

Iris Species Analysis: Basic usage with the classic Iris dataset.

-

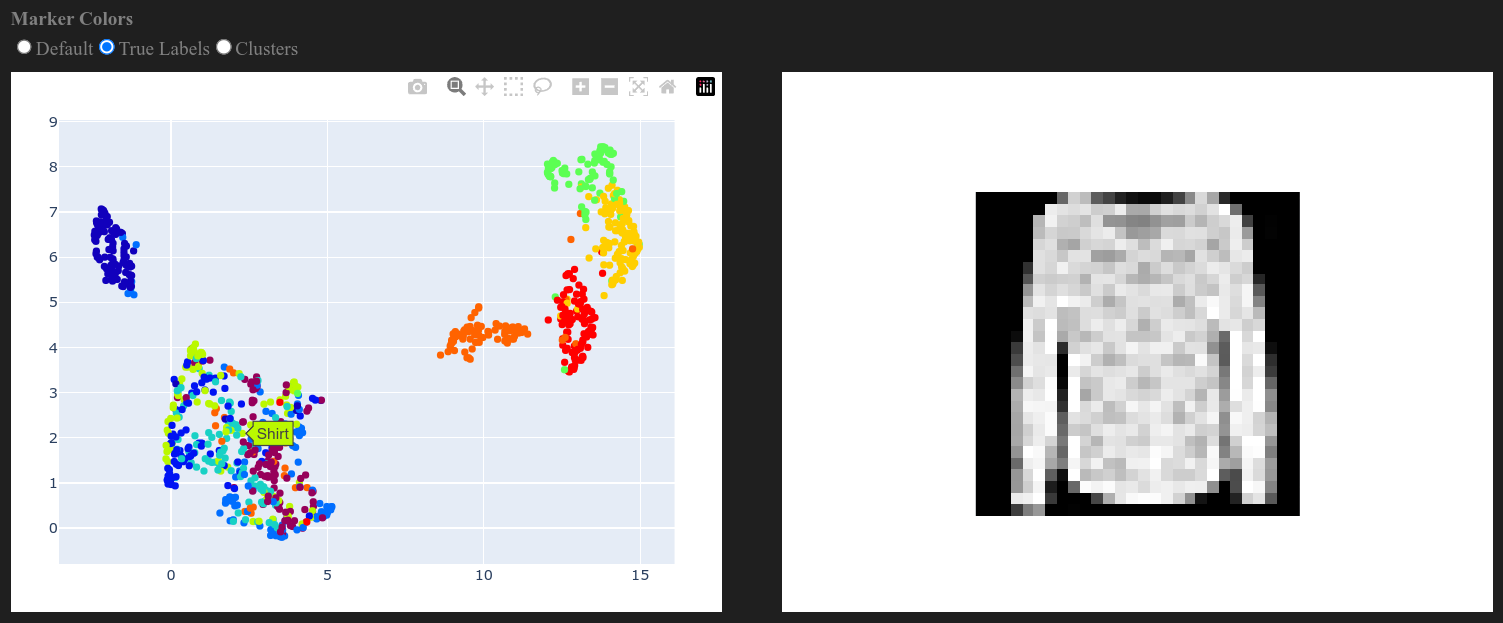

DNN Latent Space Exploration: Visualizing deep neural network activations.

-

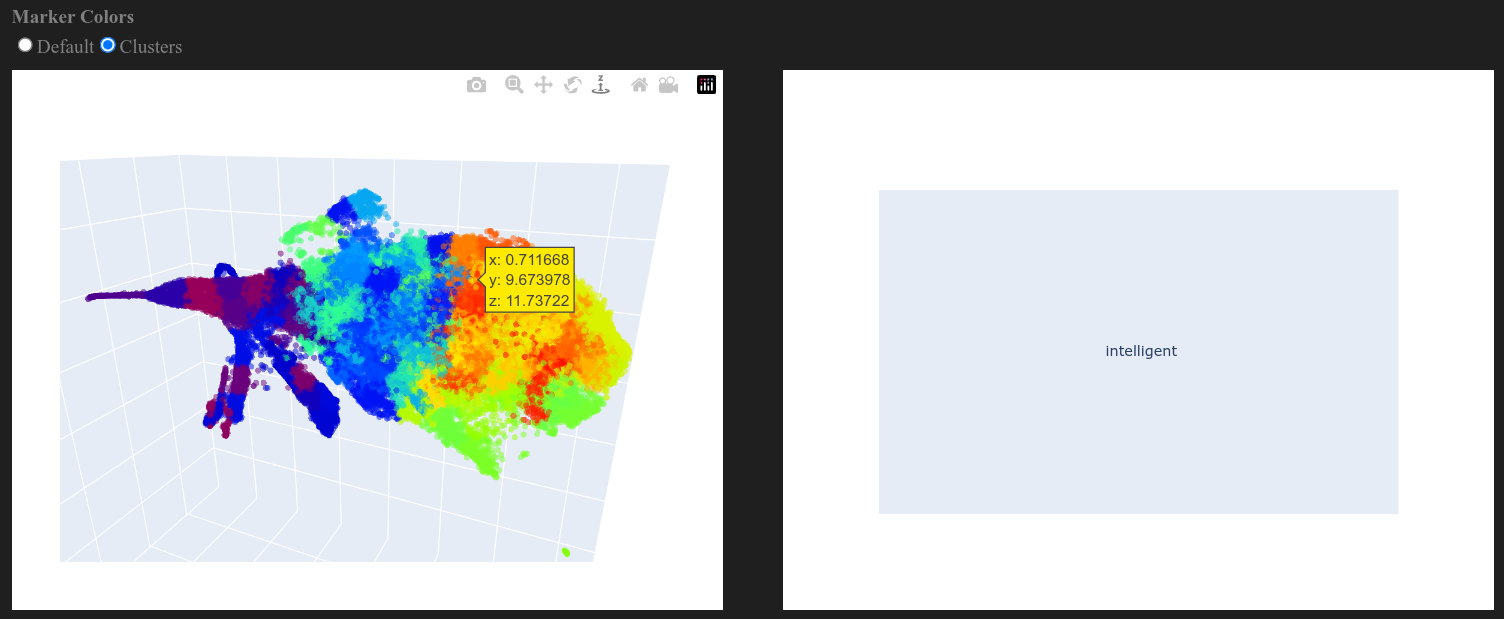

LLM Token Analysis: Exploring language model token embeddings and layer activations.

For detailed API documentation and advanced usage, visit our GitHub Pages.

We welcome discussion and contributions!

InterDim is released under the BSD 3-Clause License. See the LICENSE file for details.

For questions and feedback, please open an issue on GitHub.