The goal of this project is to build a system that uses a neural network based on Long Short-Term Memory (LSTM) and an attention mechanism to generate handwriting from input text.

This project implements the concepts from Alex Graves' 2014 paper, Generating Sequences With Recurrent Neural Networks. The model processes input text and outputs realistic handwriting by employing stacked LSTM layers coupled with an attention mechanism.

During our learning phase, we took Andrew Ng's Deep Learning Specialization on Coursera.

- We studied neural networks and optimization methods, then applied that knowledge to build a handwritten digit recognition model from scratch on the MNIST dataset, achieving high accuracy.

- We explored convolutional neural networks (CNNs) and several CNN architectures. Using ResNet18 and ResNet50, we built a facial emotion recognition model on the FER2013 dataset that detects a person's mood from images.

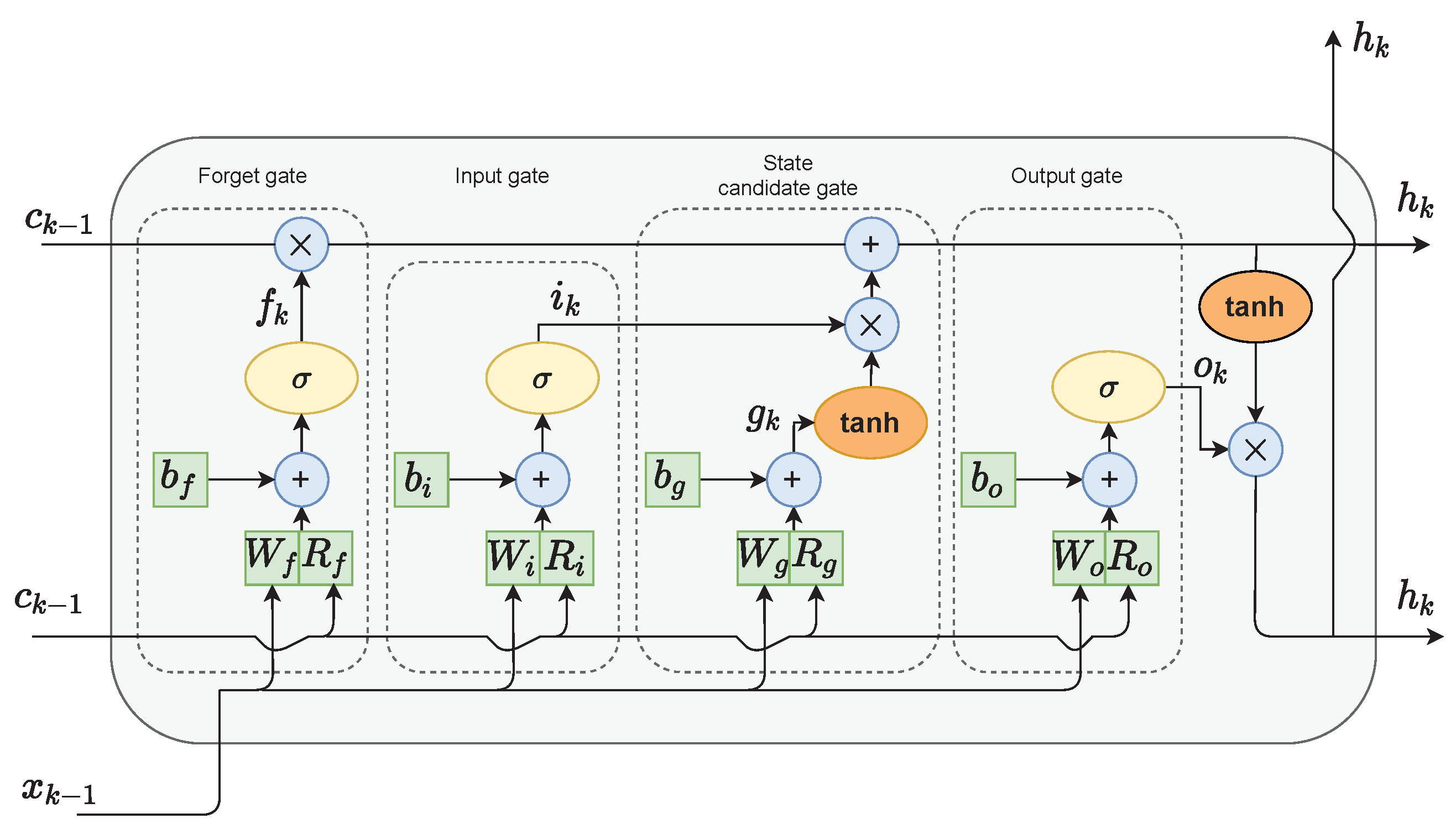

LSTM networks can generate complex sequences with long-range structure because their memory cells store information across many time steps, which makes them well suited to capturing long-range dependencies in data.
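To make the role of the memory cell concrete, here is a minimal NumPy sketch of a single LSTM step. It assumes the common gate formulation without peephole connections (Graves' paper uses a peephole variant), and the weights are random placeholders rather than trained values; `lstm_step` is a hypothetical helper, not part of this project's code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One step of a standard LSTM cell (no peephole connections).

    x: input vector, shape (n_in,)
    h_prev, c_prev: previous hidden and cell state, shape (n_hid,)
    W: stacked gate weights, shape (4 * n_hid, n_in + n_hid)
    b: stacked gate biases, shape (4 * n_hid,)
    """
    n_hid = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:n_hid])              # input gate: how much new content to write
    f = sigmoid(z[n_hid:2 * n_hid])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * n_hid:3 * n_hid])  # output gate: how much memory to expose
    g = np.tanh(z[3 * n_hid:])           # candidate cell content
    c = f * c_prev + i * g               # the cell state carries long-range information
    h = o * np.tanh(c)
    return h, c

# Run a short random sequence of (x, y, eos)-shaped inputs through the cell.
rng = np.random.default_rng(0)
n_in, n_hid = 3, 8
W = rng.normal(scale=0.1, size=(4 * n_hid, n_in + n_hid))
b = np.zeros(4 * n_hid)
h, c = np.zeros(n_hid), np.zeros(n_hid)
for x_t in rng.normal(size=(5, n_in)):
    h, c = lstm_step(x_t, h, c, W, b)
```

Because the forget gate multiplies the previous cell state instead of overwriting it, information can survive many steps, which is what lets the network track structure such as a stroke begun several points earlier.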

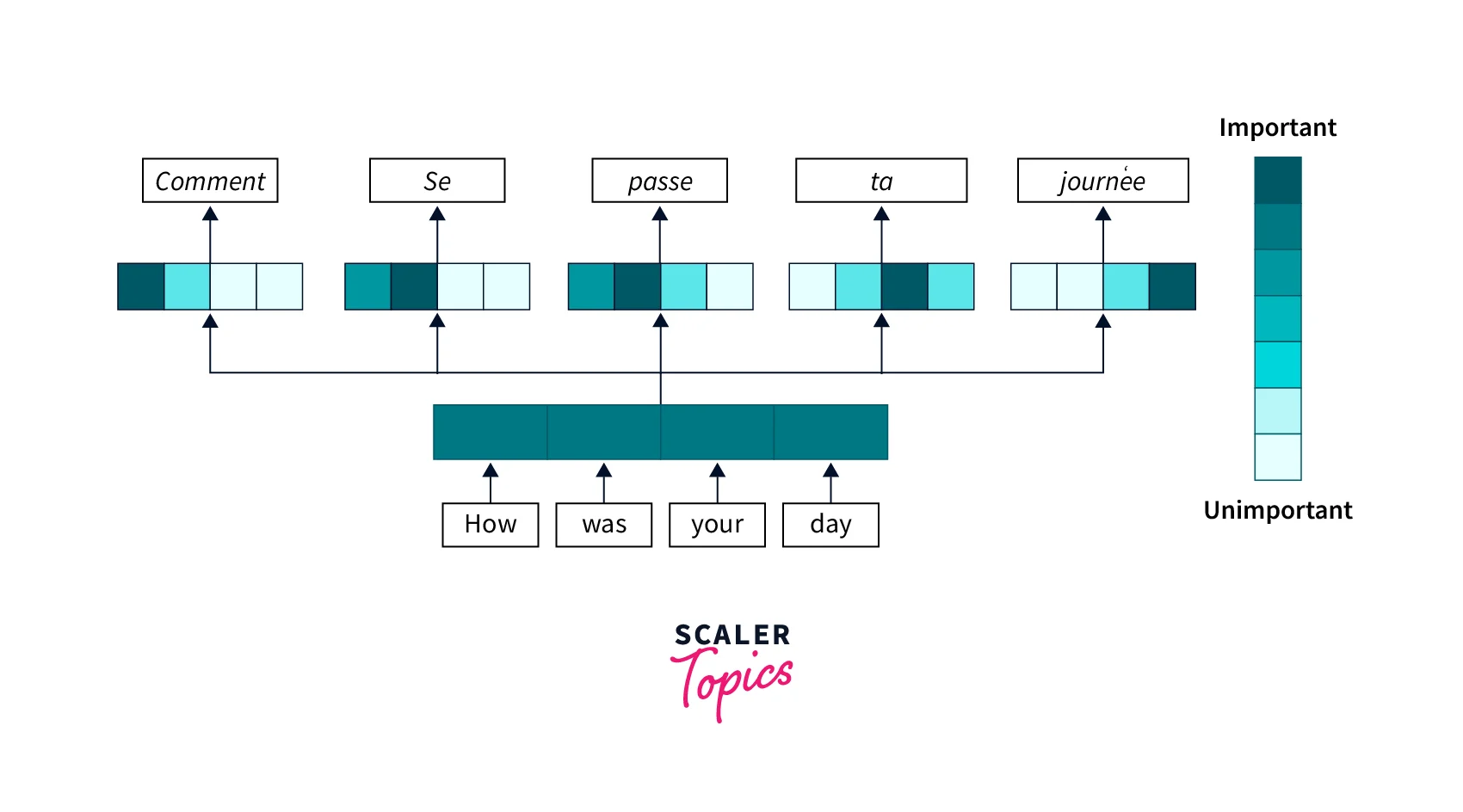

A handwriting sequence is much longer than its text, because each character is drawn with many pen points, so the model must generate several points per character. The attention mechanism handles this with a soft window that dynamically aligns the text with the pen positions, helping the model decide which character to write next.
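The soft window from Graves' paper can be sketched as follows. The network emits three sets of K raw values (α̂, β̂, κ̂); exponentiating them gives each Gaussian's importance α, its width β, and a positive step added to its position κ, so the window can only move forward through the text. The window vector is the φ-weighted sum of the one-hot character vectors. This NumPy sketch uses 0-based character indices and a hypothetical `soft_window` helper; it illustrates the idea and is not this project's PyTorch code.

```python
import numpy as np

def soft_window(params, kappa_prev, char_onehot):
    """Gaussian soft window over the character sequence.

    params: raw outputs (alpha_hat, beta_hat, kappa_hat), each shape (K,)
    kappa_prev: window positions from the previous step, shape (K,)
    char_onehot: one-hot encoded text, shape (U, n_chars)
    """
    alpha_hat, beta_hat, kappa_hat = params
    alpha = np.exp(alpha_hat)               # importance of each window Gaussian
    beta = np.exp(beta_hat)                 # sharpness (inverse width) of each Gaussian
    kappa = kappa_prev + np.exp(kappa_hat)  # positions can only move forward
    u = np.arange(char_onehot.shape[0])     # character indices 0..U-1
    # phi[u] = sum_k alpha_k * exp(-beta_k * (kappa_k - u)^2)
    phi = np.sum(
        alpha[:, None] * np.exp(-beta[:, None] * (kappa[:, None] - u[None, :]) ** 2),
        axis=0,
    )
    w = phi @ char_onehot                   # window vector: weighted sum of characters
    return w, kappa, phi

K = 10                                  # number of window Gaussians (hypothetical)
text = np.eye(4)[[0, 1, 2, 3, 1]]       # 5 characters from a 4-symbol alphabet
raw = (np.zeros(K), np.zeros(K), np.full(K, -1.0))
w, kappa, phi = soft_window(raw, np.zeros(K), text)
```

In this example every Gaussian sits at κ = e⁻¹ ≈ 0.37, so φ peaks at the first character and the window vector is dominated by it; as κ grows over later steps, the focus slides along the text.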

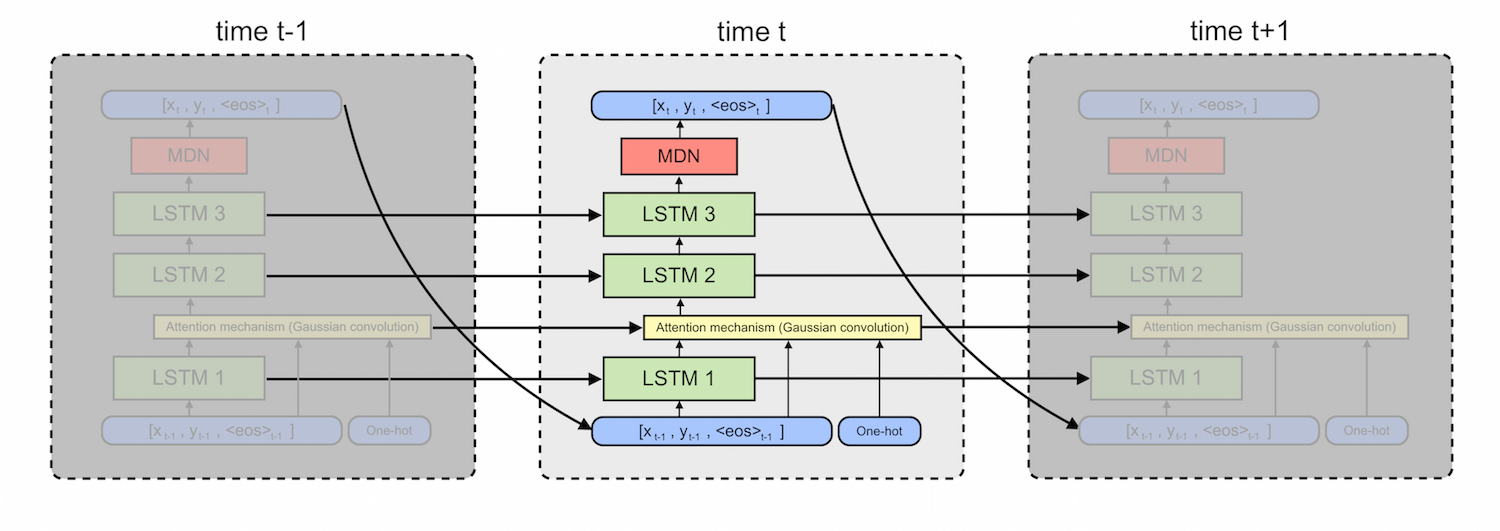

Mixture Density Networks (MDNs) model the uncertainty of their predictions, which lets them capture the natural randomness of handwriting. Instead of a single point, they output the parameters of several bivariate Gaussian components, namely the mean (μ), standard deviation (σ), and correlation (ρ) of each, along with weights (π) that set each component's contribution to the final distribution.
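A minimal sketch of how a mixture density layer's raw outputs can be turned into valid parameters and scored, following the transforms in Graves' paper: softmax for π, exponential for σ, tanh for ρ, and a sigmoid for the end-of-stroke probability (the sign convention of that sigmoid varies between implementations). The loss is the negative log-likelihood of the observed (x, y, eos) point. The raw-output layout (eos first, then six blocks of M values) and the function names are assumptions made for illustration.

```python
import numpy as np

def mdn_params(y_hat, M):
    """Split a raw output vector of length 6*M + 1 into mixture parameters."""
    assert y_hat.shape == (6 * M + 1,)
    e_hat = y_hat[0]
    pi_hat, mu1, mu2, s1_hat, s2_hat, rho_hat = np.split(y_hat[1:], 6)
    pi = np.exp(pi_hat - pi_hat.max())
    pi /= pi.sum()                                  # softmax: weights sum to 1
    sigma1, sigma2 = np.exp(s1_hat), np.exp(s2_hat)  # standard deviations > 0
    rho = np.tanh(rho_hat)                          # correlation in (-1, 1)
    e = 1.0 / (1.0 + np.exp(-e_hat))                # end-of-stroke probability
    return e, pi, mu1, mu2, sigma1, sigma2, rho

def bivariate_normal(x1, x2, mu1, mu2, s1, s2, rho):
    """Density of a correlated 2D Gaussian, evaluated per component."""
    z1 = (x1 - mu1) / s1
    z2 = (x2 - mu2) / s2
    one_minus_rho2 = 1.0 - rho ** 2
    Z = z1 ** 2 + z2 ** 2 - 2.0 * rho * z1 * z2
    norm = 2.0 * np.pi * s1 * s2 * np.sqrt(one_minus_rho2)
    return np.exp(-Z / (2.0 * one_minus_rho2)) / norm

def mdn_loss(y_hat, point, M):
    """Negative log-likelihood of one (x, y, eos) point under the mixture."""
    e, pi, mu1, mu2, s1, s2, rho = mdn_params(y_hat, M)
    x1, x2, eos = point
    density = np.sum(pi * bivariate_normal(x1, x2, mu1, mu2, s1, s2, rho))
    eos_prob = e if eos == 1 else 1.0 - e
    # Small constants guard against log(0) for very unlikely points.
    return -np.log(density + 1e-12) - np.log(eos_prob + 1e-12)
```

As a sanity check, with all raw outputs at zero every component is a standard normal centred at the origin, so the loss for the point (0, 0, eos = 0) is log(4π) ≈ 2.53.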

This model consists of three stacked LSTM layers. Their output is fed into a mixture density layer, which provides the mean, standard deviation, correlation, and weight of each of 20 mixture components. The model explores the architecture's ability to generate handwriting without any input text.
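Without input text, generation works by sampling: at each step one mixture component is drawn according to π, a pen offset is drawn from that component's bivariate Gaussian, and the end-of-stroke bit is drawn from a Bernoulli distribution; the sampled point is then fed back as the next input. The NumPy sketch below shows a single draw using made-up parameter values, and records the output-layer size implied by 20 components (six parameters per component plus one end-of-stroke value, as in Graves' paper). `sample_point` is a hypothetical helper.

```python
import numpy as np

M = 20                   # mixture components, as described above
N_OUTPUTS = 6 * M + 1    # pi, mu1, mu2, sigma1, sigma2, rho per component, plus eos

def sample_point(e, pi, mu1, mu2, s1, s2, rho, rng):
    """Draw one (dx, dy, eos) point from mixture parameters."""
    j = rng.choice(len(pi), p=pi)                    # pick a component by its weight
    cov_xy = rho[j] * s1[j] * s2[j]
    cov = [[s1[j] ** 2, cov_xy], [cov_xy, s2[j] ** 2]]
    dx, dy = rng.multivariate_normal([mu1[j], mu2[j]], cov)
    eos = 1.0 if rng.random() < e else 0.0           # Bernoulli end-of-stroke
    return np.array([dx, dy, eos])

# Hypothetical parameters standing in for one step of network output.
rng = np.random.default_rng(42)
pi = np.full(M, 1.0 / M)
mu1, mu2 = rng.normal(size=M), rng.normal(size=M)
s1, s2 = np.full(M, 0.5), np.full(M, 0.5)
rho = np.zeros(M)
point = sample_point(0.1, pi, mu1, mu2, s1, s2, rho, rng)
```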

In this model, an LSTM layer receives additional input from the character sequence through the window layer. Together they form an LSTM-Attention layer, whose output is then passed through two stacked LSTM layers before reaching the mixture density layer.
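When wiring up this model it helps to know the input width of each layer. The sketch below assumes the connectivity described in Graves' paper: the pen input and the window vector are fed to every LSTM layer (skip connections), the window vector computed at step t reaches the first layer at step t+1, and all three hidden layers feed the mixture density layer. The helper name and the example values for alphabet size, hidden width, and number of window Gaussians are hypothetical, not this project's settings.

```python
def synthesis_layer_sizes(n_chars, n_hidden, n_mixtures, n_window, n_input=3):
    """Input/output widths per layer, assuming Graves-style skip connections.

    n_input: width of a pen point (x, y, eos)
    n_chars: size of the one-hot alphabet, which is also the window vector width
    n_window: number of Gaussians in the soft window
    """
    return {
        # first LSTM: pen point + window vector from the previous step
        "lstm1_in": n_input + n_chars,
        # window layer reads the first LSTM's hidden state and emits (alpha, beta, kappa)
        "window_in": n_hidden,
        "window_out": 3 * n_window,
        # second and third LSTMs: pen point + previous hidden state + current window
        "lstm2_in": n_input + n_hidden + n_chars,
        "lstm3_in": n_input + n_hidden + n_chars,
        # mixture density layer reads all three hidden states
        "mdn_in": 3 * n_hidden,
        "mdn_out": 6 * n_mixtures + 1,
    }

sizes = synthesis_layer_sizes(n_chars=60, n_hidden=400, n_mixtures=20, n_window=10)
```

If the layers are instead stacked without skip connections, as in a plain stack, the second and third layers would take only the previous layer's output, so these widths change accordingly.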

The inputs (x, y, eos) come from the IAM On-Line Handwriting Database (IAM-OnDB), where x and y represent pen coordinates and eos marks the points where the pen is lifted off the writing surface.
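Graves' paper feeds the network pen offsets from the previous point rather than absolute coordinates, together with the end-of-stroke flag. Assuming the IAM-OnDB strokes have already been parsed into lists of absolute (x, y) points (the XML parsing step is not shown), a conversion to (dx, dy, eos) rows could look like this; `strokes_to_offsets` is a hypothetical helper.

```python
import numpy as np

def strokes_to_offsets(strokes):
    """Convert pen strokes of absolute (x, y) points into (dx, dy, eos) rows.

    strokes: list of strokes, each a sequence of (x, y) points drawn without
    lifting the pen. eos is 1.0 on the last point of each stroke, else 0.0.
    """
    points, eos = [], []
    for stroke in strokes:
        stroke = np.asarray(stroke, dtype=float)
        points.append(stroke)
        flags = np.zeros(len(stroke))
        flags[-1] = 1.0                      # pen is lifted after this point
        eos.append(flags)
    points = np.concatenate(points)
    eos = np.concatenate(eos)
    # Offset from the previous point; the first row gets (0, 0).
    offsets = np.diff(points, axis=0, prepend=points[:1])
    return np.column_stack([offsets, eos])

rows = strokes_to_offsets([[(0, 0), (1, 0), (1, 2)], [(5, 5), (6, 5)]])
```

Here `rows` holds (0, 0, 0), (1, 0, 0), (0, 2, 1), (4, 3, 0), (1, 0, 1): the jump from (1, 2) to (5, 5) becomes the offset (4, 3) at the start of the second stroke. Offsets keep the input values small and independent of where on the page the text was written.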

We implemented the model in PyTorch, following the architecture described in the paper. Despite training for several epochs, the model hit a bottleneck where the loss stopped decreasing.

In addition, the generated handwriting did not meet our expectations and needs further refinement. Here are some of the results we obtained:

- We plan to enhance the model's accuracy and output quality to generate more realistic handwriting.

- Upon achieving satisfactory results, we aim to deploy the model and create a web interface for public use.

- SRA VJTI - Eklavya 2023

- Heartfelt gratitude to our mentors Lakshaya Singhal and Advait Dhamorikar for guiding us at every step of our journey. The project would not have been possible without them.

Deep Learning courses