300 Coding Interview Questions (ANSWERED) To Land Your Next Six-Figure Job Offer from FullStack.Cafe

FullStack.Cafe is a biggest hand-picked collection of most common coding interview questions for software developers. Prepare for your next programming interview in no time and get 6-figure job offer fast!

🔴 Get All Answers + PDFs on FullStack.Cafe - Kill Your Tech & Coding Interview

👉 For 1299 ML & DataScience Interview Questions Check MLStack.Cafe - Kill Your Machine Learning, Data Science & Python Interview

- Arrays

- Backtracking

- Big-O Notation

- Binary Tree

- Bit Manipulation

- Blockchain

- Brain Teasers

- Divide & Conquer

- Dynamic Programming

- Fibonacci Series

- Graph Theory

- Greedy Algorithms

- Hash Tables

- Heaps and Maps

- Linked Lists

- Queues

- Recursion

- Searching

- Sorting

- Stacks

- Strings

- Trees

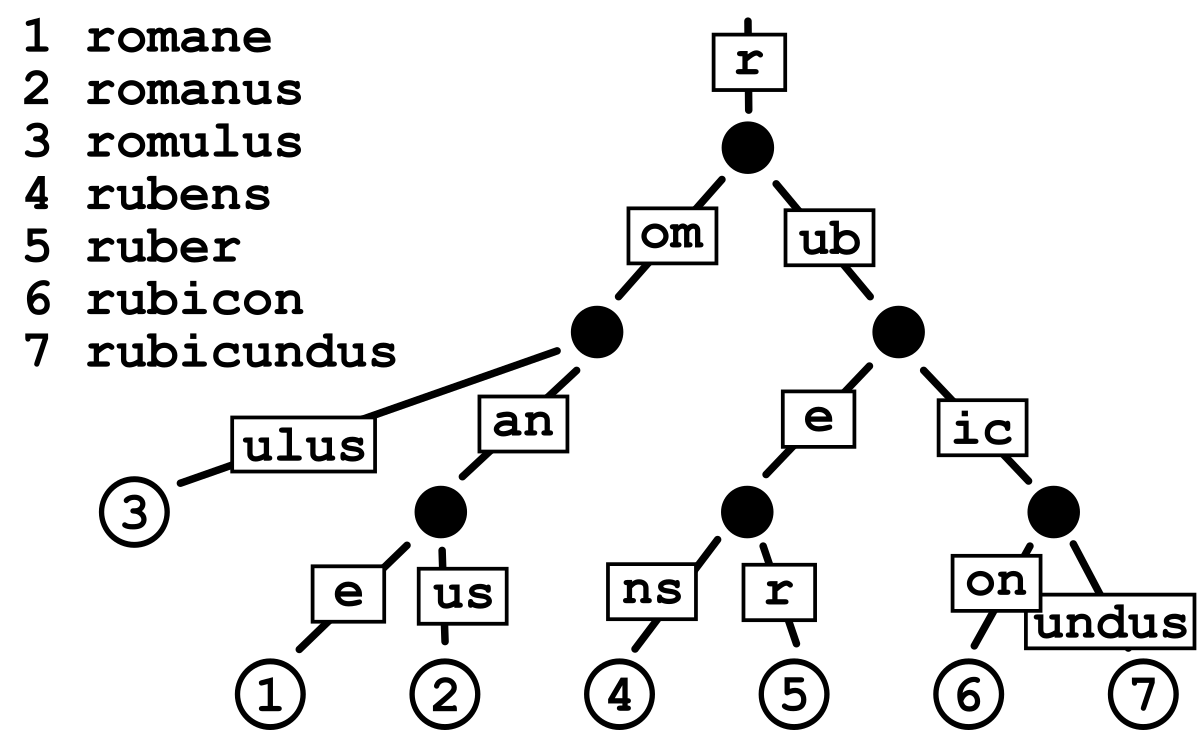

- Trie

Answer: An array is a collection of homogeneous (same type) data items stored in contiguous memory locations. For example if an array is of type “int”, it can only store integer elements and cannot allow the elements of other types such as double, float, char etc. The elements of an array are accessed by using an index.

O(1)O(log n)O(n)O(n log n)O(n2)O(2n)O(n!)

Source: beginnersbook.com

Answer: Arrays are:

- Finite (fixed-size) - An array is finite because it contains only limited number of elements.

- Order -All the elements are stored one by one , in contiguous location of computer memory in a linear order and fashion

- Homogenous - All the elements of an array are of same data types only and hence it is termed as collection of homogenous

Source: codelack.com

Answer: Pros:

- Fast lookups. Retrieving the element at a given index takes

O(1)time, regardless of the length of the array. - Fast appends. Adding a new element at the end of the array takes

O(1)time.

Cons:

- Fixed size. You need to specify how many elements you're going to store in your array ahead of time.

- Costly inserts and deletes. You have to shift the other elements to fill in or close gaps, which takes worst-case

O(n)time.

Source: www.interviewcake.com

Answer:

Array uses continuous memory locations (space complexity O(n)) to store the element so retrieving of any element will take O(1) time complexity (constant time by using index of the retrieved element). O(1) describes inserting at the end of the array. However, if you're inserting into the middle of an array, you have to shift all the elements after that element, so the complexity for insertion in that case is O(n) for arrays. End appending also discounts the case where you'd have to resize an array if it's full.

| Operation | Average Case | Worst Case |

|---|---|---|

| Read | O(1) |

O(1) |

| Append | O(1) |

O(1) |

| Insert | O(n) |

O(n) |

| Delete | O(n) |

O(n) |

| Search | O(n) |

O(n) |

Source: beginnersbook.com

Answer: Arrays and dictionaries both store collections of data, but differ by how they are accessed. Arrays provide random access of a sequential set of data. Dictionaries (or associative arrays) provide a map from a set of keys to a set of values.

- Arrays store a set of objects (that can be accessed randomly)

- Dictionaries store pairs of objects

- Items in an array are accessed by position (index) (often a number) and hence have an order.

- Items in a dictionary are accessed by key and are unordered.

This makes array/lists more suitable when you have a group of objects in a set (prime numbers, colors, students, etc.). Dictionaries are better suited for showing relationships between a pair of objects.

Source: stackoverflow.com

Answer: A dynamic array is an array with a big improvement: automatic resizing.

One limitation of arrays is that they're fixed size, meaning you need to specify the number of elements your array will hold ahead of time. A dynamic array expands as you add more elements. So you don't need to determine the size ahead of time.

Source: www.interviewcake.com

Answer: A simple dynamic array can be constructed by allocating an array of fixed-size, typically larger than the number of elements immediately required. The elements of the dynamic array are stored contiguously at the start of the underlying array, and the remaining positions towards the end of the underlying array are reserved, or unused. Elements can be added at the end of a dynamic array in constant time by using the reserved space until this space is completely consumed.

When all space is consumed, and an additional element is to be added, the underlying fixed-sized array needs to be increased in size. Typically resizing is expensive because you have to allocate a bigger array and copy over all of the elements from the array you have overgrow before we can finally append our item.

Dynamic arrays memory allocation is language specific. For example in C++ arrays are created on the stack, and have automatic storage duration -- you don't need to manually manage memory, but they get destroyed when the function they're in ends. They necessarily have a fixed size:

int foo[10];Arrays created with operator new[] have dynamic storage duration and are stored on the heap (technically the "free store"). They can have any size, but you need to allocate and free them yourself since they're not part of the stack frame:

int* foo = new int[10];

delete[] foo;Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Backtracking Interview Questions

Answer: Backtracking is a systematic way of trying out different sequences of decisions until we find one that "works." Backtracking does not generate all possible solutions first and checks later. It tries to generate a solution and as soon as even one constraint fails, the solution is rejected and the next solution is tried.

Backtracking can be understood as as searching os a tree for a particular "goal" leaf node. Backtracking in that case is a depth-first search with any bounding function. All solution using backtracking is needed to satisfy a complex set of constraints.

Source: www.javatpoint.com

Answer:

- Recursion describes the calling of the same function that you are in. The typical example of a recursive function is the factorial. You always need a condition that makes recursion stop (base case).

- Backtracking is when the algorithm makes an opportunistic decision*, which may come up to be wrong. If the decision was wrong then the backtracking algorithm restores the state before the decision. It builds candidates for the solution and abandons those which cannot fulfill the conditions. A typical example for a task to solve would be the Eight Queens Puzzle. Backtracking is also commonly used within Neuronal Networks. Many times backtracking is not implemented recursively. If backtracking uses recursion its called Recursive Backtracking

P.S. * Opportunistic decision making refers to a process where a person or group assesses alternative actions made possible by the favorable convergence of immediate circumstances recognized without reference to any general plan.

Source: www.quora.com

Answer:

- Using Backtracking you built a solution (that is a structure where every variable is assigned a value).

- It is however possible that during construction, you realize that the solution is not successful (does not satisfy certain constraints), then you backtrack: you undo certain assignments of values to variables in order to reassign them.

Like when looking for a book because at first you check drawers in the first room, but it's not found, so you backtrack out of the first room to check the drawers in the next room. It's also called Trial & Error.

Source: stackoverflow.com

Answer: Exhaustive Search is an algorithmic technique in which first all possible solutions are generated first and then we select the most feasible solution by applying some rules. Since it follows the most naive approach, it is a.k.a Brute-Force Search. This approach is one of the most expensive algorithmic techniques, mainly in terms of time complexity. It is also, therefore, one of the most naive ones.

Source: afteracademy.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Big-O Notation Interview Questions

Answer: Big-O notation (also called "asymptotic growth" notation) is a relative representation of the complexity of an algorithm. It shows how an algorithm scales based on input size. We use it to talk about how thing scale. Big O complexity can be visualized with this graph:

Source: stackoverflow.com

Answer:

Say we have an array of n elements:

int array[n];If we wanted to access the first (or any) element of the array this would be O(1) since it doesn't matter how big the array is, it always takes the same constant time to get the first item:

x = array[0];Source: stackoverflow.com

Answer: Big-O is often used to make statements about functions that measure the worst case behavior of an algorithm. Worst case analysis gives the maximum number of basic operations that have to be performed during execution of the algorithm. It assumes that the input is in the worst possible state and maximum work has to be done to put things right.

Source: stackoverflow.com

Answer:

O(log n) means for every element, you're doing something that only needs to look at log N of the elements. This is usually because you know something about the elements that let you make an efficient choice (for example to reduce a search space).

The most common attributes of logarithmic running-time function are that:

- the choice of the next element on which to perform some action is one of several possibilities, and

- only one will need to be chosen

or

- the elements on which the action is performed are digits of

n

Most efficient sorts are an example of this, such as merge sort. It is O(log n) when we do divide and conquer type of algorithms e.g binary search. Another example is quick sort where each time we divide the array into two parts and each time it takes O(N) time to find a pivot element. Hence it N O(log N)

Plotting log(n) on a plain piece of paper, will result in a graph where the rise of the curve decelerates as n increases:

Source: stackoverflow.com

Answer: The fact is it's difficult to determine the exact runtime of an algorithm. It depends on the speed of the computer processor. So instead of talking about the runtime directly, we use Big O Notation to talk about how quickly the runtime grows depending on input size.

With Big O Notation, we use the size of the input, which we call n. So we can say things like the runtime grows “on the order of the size of the input” (O(n)) or “on the order of the square of the size of the input” (O(n2)). Our algorithm may have steps that seem expensive when n is small but are eclipsed eventually by other steps as n gets larger. For Big O Notation analysis, we care more about the stuff that grows fastest as the input grows, because everything else is quickly eclipsed as n gets very large.

Source: medium.com

Answer:

O(n2) means for every element, you're doing something with every other element, such as comparing them. Bubble sort is an example of this.

Source: stackoverflow.com

Details: Let's say we wanted to find a number in the list:

for (int i = 0; i < n; i++){

if(array[i] == numToFind){ return i; }

}What will be the time complexity (Big O) of that code snippet?

Answer:

This would be O(n) since at most we would have to look through the entire list to find our number. The Big-O is still O(n) even though we might find our number the first try and run through the loop once because Big-O describes the upper bound for an algorithm.

Source: stackoverflow.com

Answer:

O(1). Note, you don't have to insert at the end of the list. If you insert at the front of a (singly-linked) list, they are both O(1).

Stack contains 1,2,3:

[1]->[2]->[3]Push 5:

[5]->[1]->[2]->[3]Pop:

[1]->[2]->[3] // returning 5Source: stackoverflow.com

Answer: Let's consider a traversal algorithm for traversing a list.

O(1)denotes constant space use: the algorithm allocates the same number of pointers irrespective to the list size. That will happen if we move (reuse) our pointer along the list.- In contrast,

O(n)denotes linear space use: the algorithm space use grows together with respect to the input sizen. That will happen if let's say for some reason the algorithm needs to allocate 'N' pointers (or other variables) when traversing a list.

Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q13: What is meant by "Constant Amortized Time" when talking about time complexity of an algorithm? ⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q21: What are some algorithms which we use daily that has O(1), O(n log n) and O(log n) complexities? ⭐⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Binary Tree Interview Questions

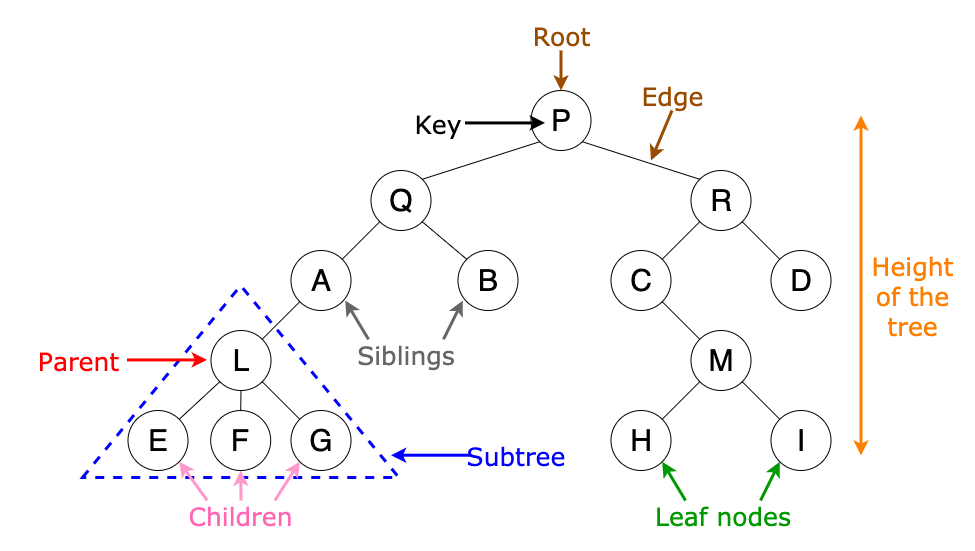

Answer: A normal tree has no restrictions on the number of children each node can have. A binary tree is made of nodes, where each node contains a "left" pointer, a "right" pointer, and a data element.

There are three different types of binary trees:

- Full binary tree: Every node other than leaf nodes has 2 child nodes.

- Complete binary tree: All levels are filled except possibly the last one, and all nodes are filled in as far left as possible.

- Perfect binary tree: All nodes have two children and all leaves are at the same level.

Source: study.com

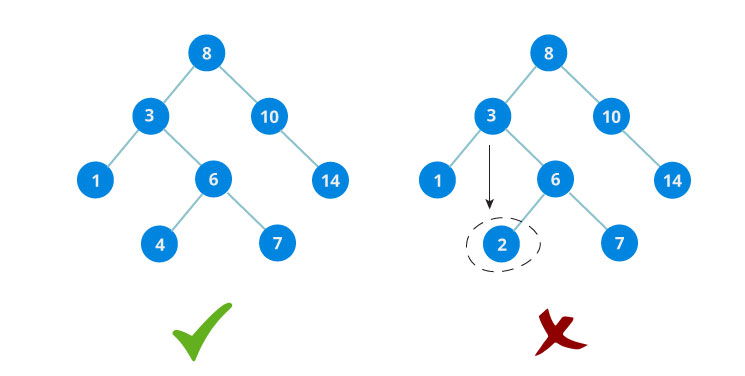

Answer: Binary search tree is a data structure that quickly allows to maintain a sorted list of numbers.

- It is called a binary tree because each tree node has maximum of two children.

- It is called a search tree because it can be used to search for the presence of a number in

O(log n)time.

The properties that separates a binary search tree from a regular binary tree are:

- All nodes of left subtree are less than root node

- All nodes of right subtree are more than root node

- Both subtrees of each node are also BSTs i.e. they have the above two properties

Source: www.programiz.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Bit Manipulation Interview Questions

Answer:

Bit stands for Binary Digit and is the smallest unit of data in a computer. Binary digits can only be 0 or 1 because they are a 2- base number. This means that if we want to represent the number 5 in binary we would have to write 0101.

An integer variable usually have a 32 bits limit, which means it consists of 32 bits with its range of 2³² (2 derived from the state of bits — 0 or 1 which is 2 possibilities).

Answer: A byte is made up of 8 bits and the highest value of a byte is 255, which would mean every bit is set.

Now:

| 1 Byte ( 8 bits ) | ||||||||||

| Place Value | 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 | ||

|

0

|

0

|

0

|

1

|

0

|

1

|

1

|

0

|

=

|

22

|

|

Lets take it right to left and add up all those values together:

128 * 0 + 64 * 0 + 32 * 0 + 16 * 1 + 8 * 0 + 4 * 1 + 2 * 1 + 1 * 0 = 22

Source: github.com

Answer: A byte is made up of 8 bits and the highest value of a byte is 255, which would mean every bit is set. We will look at why a byte's maximum value is 255 in just a second.

So if all bits are set and the value = 255 my byte would look like this:

| 1 Byte ( 8 bits ) | ||||||||||

| Place Value | 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 | ||

|

1

|

1

|

1

|

1

|

1

|

1

|

1

|

1

|

=

|

255 | |

Lets take it right to left and add up all those values together 1 + 2 + 4 + 8 + 16 + 32 + 64 + 128 = 255

Source: github.com

Answer:

- NOT ( ~ ): Bitwise NOT is an unary operator that flips the bits of the number i.e., if the ith bit is 0, it will change it to 1 and vice versa.

- AND ( & ): Bitwise AND is a binary operator that operates on two equal-length bit patterns. If both bits in the compared position of the bit patterns are 1, the bit in the resulting bit pattern is 1, otherwise 0.

- OR ( | ): Bitwise OR is also a binary operator that operates on two equal-length bit patterns, similar to bitwise AND. If both bits in the compared position of the bit patterns are 0, the bit in the resulting bit pattern is 0, otherwise 1.

- XOR ( ^ ): Bitwise XOR also takes two equal-length bit patterns. If both bits in the compared position of the bit patterns are 0 or 1, the bit in the resulting bit pattern is 0, otherwise 1.

AND|0 1 OR|0 1

---+---- ---+----

0|0 0 0|0 1

1|0 1 1|1 1

XOR|0 1 NOT|0 1

---+---- ---+---

0|0 1 |1 0

1|1 0-

Left Shift ( << ): Left shift operator is a binary operator which shift the some number of bits, in the given bit pattern, to the left and append 0 at the end.

-

Signed Right Shift ( >> ): Right shift operator is a binary operator which shift the some number of bits, in the given bit pattern, to the right, preserving the sign (which is the first bit)

-

Zero Fill Right Shift ( >>> ): Shifts right by pushing zeros in from the left, filling in the left bits with 0s

Source: www.hackerearth.com

Answer: Bitwise operators are used for manipulating a data at the bit level, also called as bit level programming. It is a fast and simple action, directly supported by the processor, and is used to manipulate values for comparisons and calculations.

On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition.

Source: en.wikipedia.org

Details: I have to flip all bits in a binary representation of an integer. Given:

10101The output should be

01010What is the bitwise operator to accomplish this when used with an integer?

Answer:

Simply use the bitwise not operator ~.

int flipBits(int n) {

return ~n;

}Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Blockchain Interview Questions

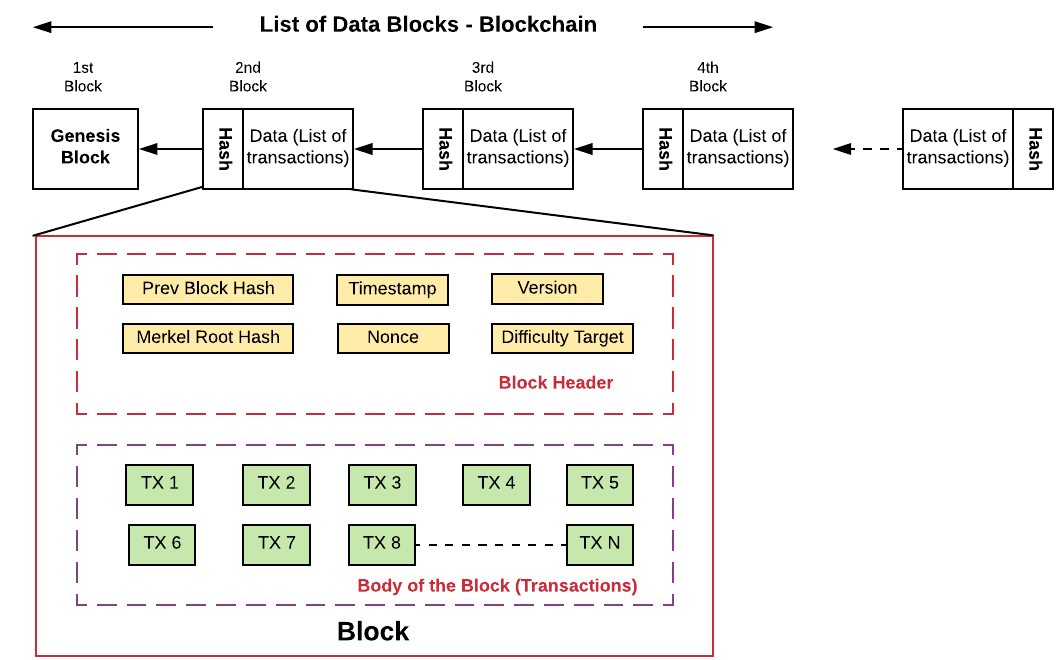

Answer: Blockchain is a secure distributed ledger (data structure or database) that maintains a continuously growing list of ordered records, called “blocks”, that are linked using cryptography. Each block contains a cryptographic hash of the previous block, a timestamp, and transaction data.

By design, a blockchain is resistant to modification of the data. It is "an open, distributed ledger that can record transactions between two parties efficiently and in a verifiable and permanent way".

Once recorded, the data in any given block cannot be altered retroactively without alteration of all subsequent blocks, which requires consensus of the network majority.

Source: en.wikipedia.org

Answer: Transactions are the things that give a blockchain purpose. They are the smallest building blocks of a blockchain system. Transactions generally consist of:

- a recipient address,

- a sender address,

- and a value.

This is not too different from a standard transaction that you would find on a credit card statement.

A transaction changes the state of the agreed-correct blockchain. A blockchain is a shared, decentralized, distributed state machine. This means that all nodes (users of the blockchain system) independently hold their own copy of the blockchain, and the current known "state" is calculated by processing each transaction in order as it appears in the blockchain.

Source: pluralsight.com

Answer: Blockchains are composed of three core parts:

- Block: A list of transactions recorded into a ledger over a given period. The size, period, and triggering event for blocks is different for every blockchain.

- Chain: A hash that links one block to another, mathematically “chaining” them together.

- Network: The network is composed of “full nodes.” Think of them as the computer running an algorithm that is securing the network. Each node contains a complete record of all the transactions that were ever recorded in that blockchain.

Source: dummies.com

Answer: Basically the blockchain data structure is explained as a back-linked record of blocks of transactions, which is ordered. It can be saved as a file or in a plain database. Each block can be recognized by a hash, created utilizing the SHA256 cryptographic hash algorithm on the header of the block. Each block mentions a former block, also identified as the parent block, in the “previous block hash” field, in the block header.

Source: cryptoticker.io

Answer: A blockchain exists out of blocks of data. These blocks of data are stored on nodes (compare it to small servers). Nodes can be any kind of device (mostly computers, laptops or even bigger servers). Nodes form the infrastructure of a blockchain.

All nodes on a blockchain are connected to each other and they constantly exchange the latest blockchain data with each other so all nodes stay up to date. They store, spread and preserve the blockchain data, so theoretically a blockchain exists on nodes.

A full node is basically a device (like a computer) that contains a full copy of the transaction history of the blockchain.

Source: lisk.io

Answer: The **first block in any blockchain **is termed the genesis block. If you start at any block and follow the chain backwards chronologically, you will arrive at the genesis block. The genesis block is statically encoded within the client software, that it cannot be changed. Every node can identify the genesis block’s hash and structure, the fixed time of creation, and the single transactions within. Thus every node has a secure “root” from which is possible to build a trusted blockchain on.

Source: linkedin.com

Answer: A proof of work is a piece of data which is difficult (costly, time-consuming) to produce but easy for others to verify and which satisfies certain requirements. Producing a proof of work can be a random process with low probability so that a lot of trial and error is required on average before a valid proof of work is generated. Difficulty is a measure of how difficult it is to find a hash below a given target.

Source: en.bitcoin.it

Answer: Tokens/Coins are used as a medium of exchange between the states. They are digital assets built in to perform a specific function within a blockchain.

When someone does a transaction, there is a change of state, and coins are moved from one address to another address. Apart from that, transactions contain some additional data; this data can be mutated through the change of state. For this reason, blockchains need coins or tokens to incentivize the participants to join their networks.

Source: mindmajix.com

Answer: If A + B = C, then no matter what the circumstances, A+B will always be equal to C. That is called deterministic behavior.

Hash functions are deterministic, meaning A’s hash will always be H(A).

Source: blockgeeks.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q27: What's the difference between distributed hashtable technology and the bitcoin blockchain? ⭐⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Brain Teasers Interview Questions

Answer: You can swap two numbers without using a temporary or third variable if you can store the sum of numbers in one number and then minus the sum with other number, something like:

a = 3;

b = 5;

a = a + b; //8

b = a — b; // 3

a = a — b; //5now you have a = 5 and b = 3, so numbers are swapped without using a third or temp variable.

Source: javarevisited.blogspot.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Divide & Conquer Interview Questions

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Dynamic Programming Interview Questions

Answer: Dynamic programming is all about ordering your computations in a way that avoids recalculating duplicate work. More specifically, Dynamic Programming is a technique used to avoid computing multiple times the same subproblem in a recursive algorithm. DP algorithms could be implemented with recursion, but they don't have to be.

With dynamic programming, you store your results in some sort of table generally. When you need the answer to a problem, you reference the table and see if you already know what it is. If not, you use the data in your table to give yourself a stepping stone towards the answer.

There are two approaches to apply Dynamic Programming:

- The top-down or memoization. When the recursion does a lot of unecessary calculation, an easy way to solve this is to cache the results and to check before executing the call if the result is already in the cache.

- The bottom-up or tabulation approach. A better way to do this is to get rid of the recursion all-together by evaluating the results in the right order and building the array as we iterate. The partial results are available when needed if the iteration is done in the right order.

TOP of the tree

fib(4)

fib(3)...................... + fib(2)

fib(2)......... + fib(1) fib(1)........... + fib(0)

fib(1) + fib(0) fib(1) fib(1) fib(0)

fib(1) fib(0)

BOTTOM of the treeSource: stackoverflow.com

Answer:

- Memoization is when you store previous results of a function call (a real function always returns the same thing, given the same inputs). It doesn't make a difference for algorithmic complexity before the results are stored.

- Recursion is the method of a function calling itself, usually with a smaller dataset. Since most recursive functions can be converted to similar iterative functions, this doesn't make a difference for algorithmic complexity either.

- Dynamic programming is the process of solving easier-to-solve sub-problems and building up the answer from that. Most DP algorithms will be in the running times between a Greedy algorithm (if one exists) and an exponential (enumerate all possibilities and find the best one) algorithm.

- DP algorithms could be implemented with recursion, but they don't have to be.

- DP algorithms can't be sped up by memoization, since each sub-problem is only ever solved (or the "solve" function called) once.

Source: stackoverflow.com

Answer: The key idea of DP is to save answers of overlapping smaller sub-problems to avoid recomputation. For that:

- An instance is solved using the solutions for smaller instances.

- The solutions for a smaller instance might be needed multiple times, so store their results in a table.

- Thus each smaller instance is solved only once.

- Additional space is used to save time.

Source: stackoverflow.com

Answer: Pros:

- Memoization is very easy to code (you can generally* write a "memoizer" annotation or wrapper function that automatically does it for you), and should be your first line of approach. It feels more natural. You can take a recursive function and memoize it by a mechanical process (first lookup answer in cache and return it if possible, otherwise compute it recursively and then before returning, you save the calculation in the cache for future use), whereas doing bottom up dynamic programming requires you to encode an order in which solutions are calculated.

- Top-down only solves sub-problems used by your solution whereas bottom-up might waste time on redundant sub-problems.

Cons:

- With memoization, if the tree is very deep (e.g.

fib(106)), you will run out of stack space, because each delayed computation must be put on the stack, and you will have106of them.

Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q7: What should you consider when choosing between Top-Down vs Bottom-Up solutions for the same problem? ⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Fibonacci Series Interview Questions

Answer: The Fibonacci Sequence is the series of numbers:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, ...Fibonacci sequence characterized by the fact that every number after the first two is the sum of the two preceding ones:

Fibonacci(0) = 0,

Fibonacci(1) = 1,

Fibonacci(n) = Fibonacci(n-1) + Fibonacci(n-2) Fibonacci sequence, appears a lot in nature. Patterns such as spirals of shells, curve of waves, seed heads, pinecones, and branches of trees can all be described using this mathematical sequence. The fact that things as large as spirals of galaxies, and as small as DNA molecules follow the Golden Ratio rule suggests that Fibonacci sequence is one of the most fundamental characteristics of the Universe.

Source: www.mathsisfun.com

Answer:

When we take any two successive (one after the other) Fibonacci Numbers, their ratio is very close to the Golden Ratio φ which is approximately 1.618034.... In fact, the bigger the pair of Fibonacci Numbers, the closer the approximation. Let us try a few:

3/2 = 1.5

5/3 = 1.666666666...

...

233/377 = 1.618055556...This also works when we pick two random whole numbers to begin the sequence, such as 192 and 16 (we get the sequence 192, 16, 208, 224, 432, 656, 1088, 1744, 2832, 4576, 7408, 11984, 19392, 31376, ...):

16/192 = 0.08333333...

208/16 = 13

...

11984/7408 = 1.61771058...

19392/11984 = 1.61815754...Source: www.mathsisfun.com

Answer: The easiest solution that comes to mind here is iteration:

function fib(n){

let arr = [0, 1];

for (let i = 2; i < n + 1; i++){

arr.push(arr[i - 2] + arr[i -1])

}

return arr[n]

}And output:

fib(4)

=> 3

Notice that two first numbers can not really be effectively generated by a for loop, because our loop will involve adding two numbers together, so instead of creating an empty array we assign our arr variable to [0, 1] that we know for a fact will always be there. After that we create a loop that starts iterating from i = 2 and adds numbers to the array until the length of the array is equal to n + 1. Finally, we return the number at n index of array.

Source: medium.com

Answer: Recursive solution looks pretty simple (see code).

Let’s look at the diagram that will help you understand what’s going on here with the rest of our code. Function fib is called with argument 5:

Basically our fib function will continue to recursively call itself creating more and more branches of the tree until it hits the base case, from which it will start summing up each branch’s return values bottom up, until it finally sums them all up and returns an integer equal to 5.

Source: medium.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Graph Theory Interview Questions

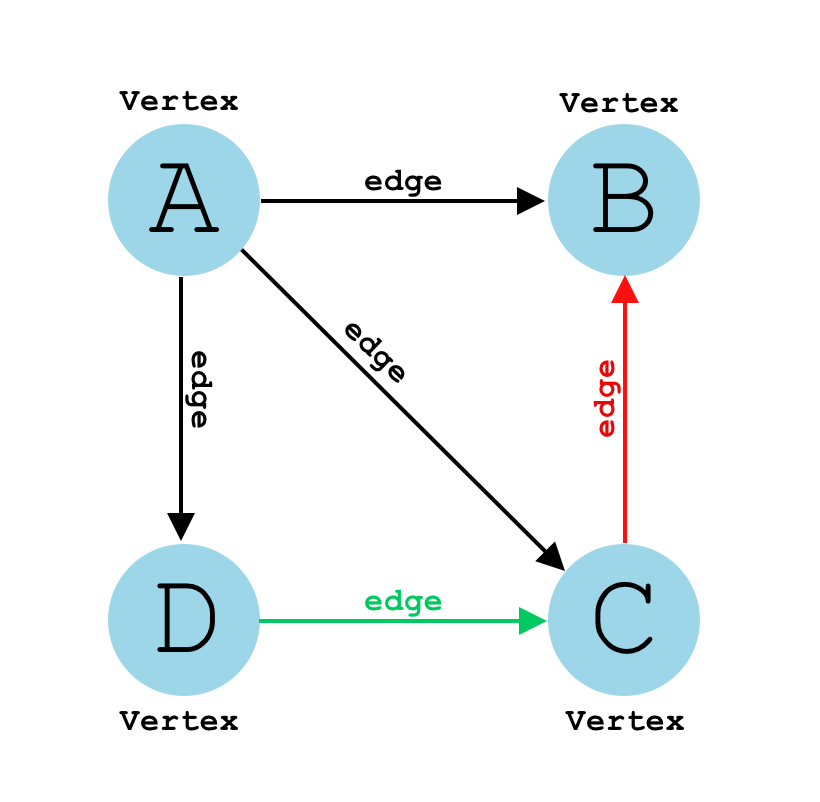

Answer:

A graph is a common data structure that consists of a finite set of nodes (or vertices) and a set of edges connecting them. A pair (x,y) is referred to as an edge, which communicates that the x vertex connects to the y vertex.

Graphs are used to solve real-life problems that involve representation of the problem space as a network. Examples of networks include telephone networks, circuit networks, social networks (like LinkedIn, Facebook etc.).

Source: www.educative.io

Answer: Graph:

- Consists of a set of vertices (or nodes) and a set of edges connecting some or all of them

- Any edge can connect any two vertices that aren't already connected by an identical edge (in the same direction, in the case of a directed graph)

- Doesn't have to be connected (the edges don't have to connect all vertices together): a single graph can consist of a few disconnected sets of vertices

- Could be directed or undirected (which would apply to all edges in the graph)

Tree:

- A type of graph (fit with in the category of Directed Acyclic Graphs (or a DAG))

- Vertices are more commonly called "nodes"

- Edges are directed and represent an "is child of" (or "is parent of") relationship

- Each node (except the root node) has exactly one parent (and zero or more children)

- Has exactly one "root" node (if the tree has at least one node), which is a node without a parent

- Has to be connected

- Is acyclic, meaning it has no cycles: "a cycle is a path [AKA sequence] of edges and vertices wherein a vertex is reachable from itself"

- Trees aren't a recursive data structure

Source: stackoverflow.com

Answer:

- Edge Lists. We have an array of two vertex numbers, or an array of objects containing the vertex numbers of the vertices that the edges are incident on (plus weight). Edge lists are simple, but if we want to find whether the graph contains a particular edge, we have to search through the edge list. If the edges appear in the edge list in no particular order, that's a linear search through

Eedges.

[ [0,1], [0,6], [0,8], [1,4], [1,6], [1,9], [2,4], [2,6], [3,4], [3,5], [3,8], [4,5], [4,9], [7,8], [7,9] ]- Adjacency Matrices. With an adjacency matrix, we can find out whether an edge is present in constant time, by just looking up the corresponding entry in the matrix - we can query whether edge

(i, j)is in the graph by looking atgraph[i][j]value. For a sparse graph, the adjacency matrix is mostly0s, and we use lots of space to represent only a few edges. For an undirected graph, the adjacency matrix is symmetric.

[ [0, 1, 0, 0, 0, 0, 1, 0, 1, 0],

[1, 0, 0, 0, 1, 0, 1, 0, 0, 1],

[0, 0, 0, 0, 1, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 1, 1, 0, 0, 1, 0],

[0, 1, 1, 1, 0, 1, 0, 0, 0, 1],

[0, 0, 0, 1, 1, 0, 0, 0, 0, 0],

[1, 1, 1, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 1, 1],

[1, 0, 0, 1, 0, 0, 0, 1, 0, 0],

[0, 1, 0, 0, 1, 0, 0, 1, 0, 0] ]- Adjacency Lists. For each vertex

i, store an array of the vertices adjacent to it (or array of tuples for weighted graph). To find out whether an edge(i,j)is present in the graph, we go toi'sadjacency list in constant time and then look forjini'sadjacency list.

[ [1, 6, 8], // 0

[0, 4, 6, 9], // 1

[4, 6], // 2

[4, 5, 8],

[1, 2, 3, 5, 9],

[3, 4],

[0, 1, 2],

[8, 9],

[0, 3, 7],

[1, 4, 7] ] // NSource: www.khanacademy.org

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q8: Provide some practical examples of using Depth-First Search (DFS) vs Breadth-First Search (BFS)? ⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Greedy Algorithms Interview Questions

Answer: We call algorithms greedy when they utilise the greedy property. The greedy property is:

At that exact moment in time, what is the optimal choice to make?

Greedy algorithms are greedy. They do not look into the future to decide the global optimal solution. They are only concerned with the optimal solution locally. This means that the overall optimal solution may differ from the solution the greedy algorithm chooses.

They never look backwards at what they've done to see if they could optimise globally. This is the main difference between Greedy Algorithms and Dynamic Programming.

Source: skerritt.blog

Answer: Greedy algorithms are quick. A lot faster than the two other alternatives (Divide & Conquer, and Dynamic Programming). They're used because they're fast. Sometimes, Greedy algorithms give the global optimal solution every time. Some of these algorithms are:

- Dijkstra's Algorithm

- Kruskal's algorithm

- Prim's algorithm

- Huffman trees

These algorithms are Greedy, and their Greedy solution gives the optimal solution.

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Hash Tables Interview Questions

Answer: A hash table (hash map) is a data structure that implements an associative array abstract data type, a structure that can map keys to values. Hash tables implement an associative array, which is indexed by arbitrary objects (keys). A hash table uses a hash function to compute an index, also called a hash value, into an array of buckets or slots, from which the desired value can be found.

Source: en.wikipedia.org

Answer: Hashing is the practice of using an algorithm (or hash function) to map data of any size to a fixed length. This is called a hash value (or sometimes hash code or hash sums or even a hash digest if you’re feeling fancy). In hashing, keys are converted into hash values or indexes by using hash functions. Hashing is a one-way function.

Source: www.thesslstore.com

Answer: A Hash Value (also called as Hashes or Checksum) is a string value (of specific length), which is the result of calculation of a Hashing Algorithm. Hash Values have different uses:

- Indexing for Hash Tables

- Determine the Integrity of any Data (which can be a file, folder, email, attachments, downloads etc).

Source: www.omnisecu.com

Answer:

The space complexity of a datastructure indicates how much space it occupies in relation to the amount of elements it holds. For example a space complexity of O(1) would mean that the datastructure alway consumes constant space no matter how many elements you put in there. O(n) would mean that the space consumption grows linearly with the amount of elements in it.

A hashtable typically has a space complexity of O(n).

Source: stackoverflow.com

Answer: A hash function is any function that can be used to map data of arbitrary size to fixed-size values. The values returned by a hash function are called hash values, hash codes, digests, or simply hashes. The values are used to index a fixed-size table called a hash table. Use of a hash function to index a hash table is called hashing or scatter storage addressing.

Mathematically speaking, a hash function is usually defined as a mapping from the universe of elements you want to store in the hash table to the range {0, 1, 2, .., numBuckets - 1}.

Some properties of Hash Functions are:

- Very fast to compute (nearly constant)

- One way; can not be reversed

- Output does not reveal information on input

- Hard to find collisions (different data with same hash)

- Implementation is based on parity-preserving bit operations (XOR and ADD), multiply, or divide.

Source: en.wikipedia.org

Answer: To detect if a list is cyclic, we can check whether a node had been visited before. A natural way is to use a hash table.

Algorithm

We go through each node one by one and record each node's reference (or memory address) in a hash table. If the current node is null, we have reached the end of the list and it must not be cyclic. If current node’s reference is in the hash table, then return true.

Source: leetcode.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Heaps and Maps Interview Questions

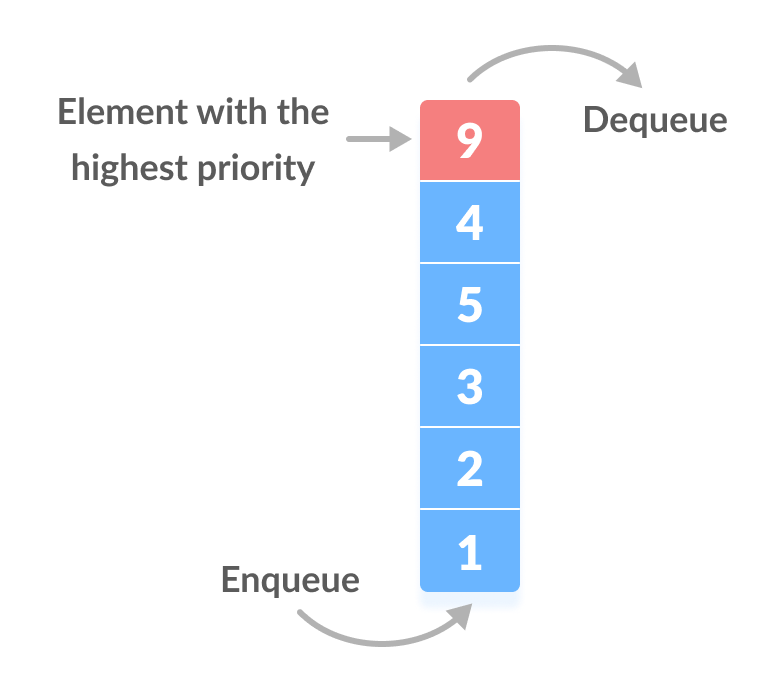

Answer: A Heap is a special Tree-based data structure which is an almost complete tree that satisfies the heap property:

- in a max heap, for any given node C, if P is a parent node of C, then the key (the value) of P is greater than or equal to the key of C.

- In a min heap, the key of P is less than or equal to the key of C. The node at the "top" of the heap (with no parents) is called the root node.

A common implementation of a heap is the binary heap, in which the tree is a binary tree.

Source: www.geeksforgeeks.org

Answer: A priority queue is a data structure that stores priorities (comparable values) and perhaps associated information. A priority queue is different from a "normal" queue, because instead of being a "first-in-first-out" data structure, values come out in order by priority. Think of a priority queue as a kind of bag that holds priorities. You can put one in, and you can take out the current highest priority.

Source: pages.cs.wisc.edu

Answer: A binary heaps are commonly implemented with an array. Any binary tree can be stored in an array, but because a binary heap is always a complete binary tree, it can be stored compactly. No space is required for pointers; instead, the parent and children of each node can be found by arithmetic on array indices:

- The root element is

0 - Left child :

(2*i)+1 - Right child :

(2*i)+2 - Parent child :

(i-1)/2

Source: www.geeksforgeeks.org

Details: Suppose I have a Heap Like the following:

77

/ \

/ \

50 60

/ \ / \

22 30 44 55

Now, I want to insert another item 55 into this Heap. How to do this?

Answer: A binary heap is defined as a binary tree with two additional constraints:

- Shape property: a binary heap is a complete binary tree; that is, all levels of the tree, except possibly the last one (deepest) are fully filled, and, if the last level of the tree is not complete, the nodes of that level are filled from left to right.

- Heap property: the key stored in each node is either greater than or equal to (≥) or less than or equal to (≤) the keys in the node's children, according to some total order.

We start adding child from the most left node and if the parent is lower than the newly added child than we replace them. And like so will go on until the child got the parent having value greater than it.

Your initial tree is:

77

/ \

/ \

50 60

/ \ / \

22 30 44 55Now adding 55 according to the rule on most left side:

77

/ \

/ \

50 60

/ \ / \

22 30 44 55

/

55

But you see 22 is lower than 55 so replaced it:

77

/ \

/ \

50 60

/ \ / \

55 30 44 55

/

22

Now 55 has become the child of 50 which is still lower than 55 so replace them too:

77

/ \

/ \

55 60

/ \ / \

50 30 44 55

/

22

Now it cant be sorted more because 77 is greater than 55.

Source: stackoverflow.com

Answer: A Binary Heap is a Binary Tree with following properties:

- It’s a complete tree (all levels are completely filled except possibly the last level and the last level has all keys as left as possible). This property of Binary Heap makes them suitable to be stored in an array.

- A Binary Heap is either Min Heap or Max Heap. In a Min Binary Heap, the key at root must be minimum among all keys present in Binary Heap. The same property must be recursively true for all nodes in Binary Tree. Max Binary Heap is similar to MinHeap.

10 10

/ \ / \

20 100 15 30

/ / \ / \

30 40 50 100 40Source: www.geeksforgeeks.org

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

[⬆] Linked Lists Interview Questions

Answer: A linked list is a linear data structure where each element is a separate object. Each element (we will call it a node) of a list is comprising of two items - the data and a reference (pointer) to the next node. The last node has a reference to null. The entry point into a linked list is called the head of the list. It should be noted that head is not a separate node, but the reference to the first node. If the list is empty then the head is a null reference.

Source: www.cs.cmu.edu

Answer: There are some:

- Linked Lists are Dynamic Data Structure - it can grow and shrink at runtime by allocating and deallocating memory. So there is no need to give initial size of linked list.

- Insertion and Deletion are simple to implement - Unlike array here we don’t have to shift elements after insertion or deletion of an element. In linked list we just have to update the address present in next pointer of a node.

- Efficient Memory Allocation/No Memory Wastage - In case of array there is lot of memory wastage, like if we declare an array of size 10 and store only 6 elements in it then space of 4 elements are wasted. There is no such problem in linked list as memory is allocated only when required.

Source: www.thecrazyprogrammer.com

Answer: A cycle/loop occurs when a node’s next points back to a previous node in the list. The linked list is no longer linear with a beginning and end—instead, it cycles through a loop of nodes.

Source: www.interviewcake.com

Answer:

- A singly linked list

- A doubly linked list is a list that has two references, one to the next node and another to previous node.

- A multiply linked list - each node contains two or more link fields, each field being used to connect the same set of data records in a different order of same set(e.g., by name, by department, by date of birth, etc.).

- A circular linked list - where last node of the list points back to the first node (or the head) of the list.

Source: www.cs.cmu.edu

Answer:

- A linked list can typically only be accessed via its head node. From there you can only traverse from node to node until you reach the node you seek. Thus access is

O(n). - Searching for a given value in a linked list similarly requires traversing all the elements until you find that value. Thus search is

O(n). - Inserting into a linked list requires re-pointing the previous node (the node before the insertion point) to the inserted node, and pointing the newly-inserted node to the next node. Thus insertion is

O(1). - Deleting from a linked list requires re-pointing the previous node (the node before the deleted node) to the next node (the node after the deleted node). Thus deletion is

O(1).

Source: github.com

Answer: Few disadvantages of linked lists are :

- They use more memory than arrays because of the storage used by their pointers.

- Difficulties arise in linked lists when it comes to reverse traversing. For instance, singly linked lists are cumbersome to navigate backwards and while doubly linked lists are somewhat easier to read, memory is wasted in allocating space for a back-pointer.

- Nodes in a linked list must be read in order from the beginning as linked lists are inherently sequential access.

- Random access has linear time.

- Nodes are stored incontiguously (no or poor cache locality), greatly increasing the time required to access individual elements within the list, especially with a CPU cache.

- If the link to list's node is accidentally destroyed then the chances of data loss after the destruction point is huge. Data recovery is not possible.

- Search is linear versus logarithmic for sorted arrays and binary search trees.

- Different amount of time is required to access each element.

- Not easy to sort the elements stored in the linear linked list.

Source: www.quora.com

Answer:

Nothing faster than O(n) can be done. You need to traverse the list and alter pointers on every node, so time will be proportional to the number of elements.

Source: stackoverflow.com

Answer:

O(1). Note, you don't have to insert at the end of the list. If you insert at the front of a (singly-linked) list, they are both O(1).

Stack contains 1,2,3:

[1]->[2]->[3]Push 5:

[5]->[1]->[2]->[3]Pop:

[1]->[2]->[3] // returning 5Source: stackoverflow.com

Answer:

The add() method below walks down the list until it finds the appropriate position. Then, it splices in the new node and updates the start, prev, and curr pointers where applicable.

Note that the reverse operation, namely removing elements, doesn't need to change, because you are simply throwing things away which would not change any order in the list.

Source: stackoverflow.com

Answer: To convert a singly linked list to circular linked list, we will set next pointer of tail node to head pointer.

- Create a copy of head pointer, let's say

temp. - Using a loop, traverse linked list till tail node (last node) using temp pointer.

- Now set the next pointer of tail node to head node.

temp\->next = head

Source: www.techcrashcourse.com

Answer: To detect if a list is cyclic, we can check whether a node had been visited before. A natural way is to use a hash table.

Algorithm

We go through each node one by one and record each node's reference (or memory address) in a hash table. If the current node is null, we have reached the end of the list and it must not be cyclic. If current node’s reference is in the hash table, then return true.

Source: leetcode.com

Answer: Linked lists are very useful when you need :

- to do a lot of insertions and removals, but not too much searching, on a list of arbitrary (unknown at compile-time) length.

- splitting and joining (bidirectionally-linked) lists is very efficient.

- You can also combine linked lists - e.g. tree structures can be implemented as "vertical" linked lists (parent/child relationships) connecting together horizontal linked lists (siblings).

Using an array based list for these purposes has severe limitations:

- Adding a new item means the array must be reallocated (or you must allocate more space than you need to allow for future growth and reduce the number of reallocations)

- Removing items leaves wasted space or requires a reallocation

- inserting items anywhere except the end involves (possibly reallocating and) copying lots of the data up one position

Source: stackoverflow.com

Answer: A doubly linked list is simply a linked list where every element has both next and prev mebers, pointing at the elements before and after it, not just the one after it in a single linked list.

so to convert your list to a doubly linked list, just change your node to be:

private class Node

{

Picture data;

Node pNext;

Node pPrev;

};and when iterating the list, on each new node add a reference to the previous node.

Source: stackoverflow.com

Answer: You can simulate a linked list by using two stacks. One stack is the "list," and the other is used for temporary storage.

- To add an item at the head, simply push the item onto the stack.

- To remove from the head, pop from the stack.

- To insert into the middle somewhere, pop items from the "list" stack and push them onto the temporary stack until you get to your insertion point. Push the new item onto the "list" stack, then pop from the temporary stack and push back onto the "list" stack. Deletion of an arbitrary node is similar.

This isn't terribly efficient, by the way, but it would in fact work.

Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Q19: Floyd's Cycle Detect Algorithm: Explain how to find a starting node of a Cycle in a Linked List? ⭐⭐⭐

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Answer: Queue, as the name suggests is used whenever we need to manage any group of objects in an order in which the first one coming in, also gets out first while the others wait for their turn, like in the following scenarios:

- Serving requests on a single shared resource, like a printer, CPU task scheduling etc.

- In real life scenario, Call Center phone systems uses Queues to hold people calling them in an order, until a service representative is free.

- Handling of interrupts in real-time systems. The interrupts are handled in the same order as they arrive i.e First come first served.

Source: www.studytonight.com

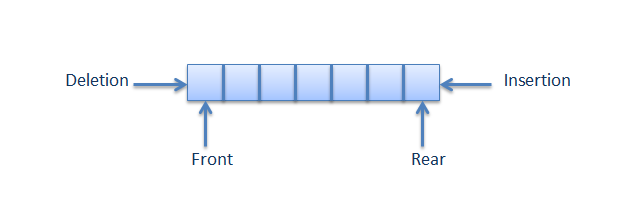

Answer: A queue is a container of objects (a linear collection) that are inserted and removed according to the first-in first-out (FIFO) principle. The process to add an element into queue is called Enqueue and the process of removal of an element from queue is called Dequeue.

Source: www.cs.cmu.edu

Answer: Because they help manage your data in more a particular way than arrays and lists. It means that when you're debugging a problem, you won't have to wonder if someone randomly inserted an element into the middle of your list, messing up some invariants.

Arrays and lists are random access. They are very flexible and also easily corruptible. If you want to manage your data as FIFO or LIFO it's best to use those, already implemented, collections.

More practically you should:

- Use a queue when you want to get things out in the order that you put them in (FIFO)

- Use a stack when you want to get things out in the reverse order than you put them in (LIFO)

- Use a list when you want to get anything out, regardless of when you put them in (and when you don't want them to automatically be removed).

Source: stackoverflow.com

Answer:

- Queues offer random access to their contents by shifting the first element off the front of the queue. You have to do this repeatedly to access an arbitrary element somewhere in the queue. Therefore, access is

O(n). - Searching for a given value in the queue requires iterating until you find it. So search is

O(n). - Inserting into a queue, by definition, can only happen at the back of the queue, similar to someone getting in line for a delicious Double-Double burger at In 'n Out. Assuming an efficient queue implementation, queue insertion is

O(1). - Deleting from a queue happens at the front of the queue. Assuming an efficient queue implementation, queue deletion is `

O(1).

Source: github.com

Answer: Queue can be classified into following types:

- Simple Queue - is a linear data structure in which removal of elements is done in the same order they were inserted i.e., the element will be removed first which is inserted first.

- Circular Queue - is a linear data structure in which the operations are performed based on FIFO (First In First Out) principle and the last position is connected back to the first position to make a circle. It is also called Ring Buffer. Circular queue avoids the wastage of space in a regular queue implementation using arrays.

- Priority Queue - is a type of queue where each element has a priority value and the deletion of the elements is depended upon the priority value

- In case of max-priority queue, the element will be deleted first which has the largest priority value

- In case of min-priority queue the element will be deleted first which has the minimum priority value.

- De-queue (Double ended queue) - allows insertion and deletion from both the ends i.e. elements can be added or removed from rear as well as front end.

- Input restricted deque - In input restricted double ended queue, the insertion operation is performed at only one end and deletion operation is performed at both the ends.

- Output restricted deque - In output restricted double ended queue, the deletion operation is performed at only one end and insertion operation is performed at both the ends.

Source: www.ques10.com

Details:

Given two queues with their standard operations (enqueue, dequeue, isempty, size), implement a stack with its standard operations (pop, push, isempty, size). The stack should be efficient when pushing an item.

Answer:

Given we have queue1 and queue2:

push - O(1):

- enqueue in

queue1

pop - O(n):

- while size of

queue1is bigger than 1, pipe (dequeue + enqueue) dequeued items fromqueue1intoqueue2 - dequeue and return the last item of

queue1, then switch the names ofqueue1andqueue2

Source: stackoverflow.com

Details: Suppose we have two stacks and no other temporary variable. Is to possible to "construct" a queue data structure using only the two stacks?

Answer:

Keep two stacks, let's call them inbox and outbox.

Enqueue:

- Push the new element onto

inbox

Dequeue:

- If

outboxis empty, refill it by popping each element frominboxand pushing it ontooutbox - Pop and return the top element from

outbox

Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Answer: There are some:

- The binary tree search

- Check for a palyndrome

- Finding factorial

- Traversing the folder hierarchy of a directory tree as part of a file system

- Towers of Hanoi

- Merge sort

- Catalan numbers

Source: stackoverflow.com

Answer:

- Memoization is when you store previous results of a function call (a real function always returns the same thing, given the same inputs). It doesn't make a difference for algorithmic complexity before the results are stored.

- Recursion is the method of a function calling itself, usually with a smaller dataset. Since most recursive functions can be converted to similar iterative functions, this doesn't make a difference for algorithmic complexity either.

- Dynamic programming is the process of solving easier-to-solve sub-problems and building up the answer from that. Most DP algorithms will be in the running times between a Greedy algorithm (if one exists) and an exponential (enumerate all possibilities and find the best one) algorithm.

- DP algorithms could be implemented with recursion, but they don't have to be.

- DP algorithms can't be sped up by memoization, since each sub-problem is only ever solved (or the "solve" function called) once.

Source: stackoverflow.com

Answer:

- Recursion describes the calling of the same function that you are in. The typical example of a recursive function is the factorial. You always need a condition that makes recursion stop (base case).

- Backtracking is when the algorithm makes an opportunistic decision*, which may come up to be wrong. If the decision was wrong then the backtracking algorithm restores the state before the decision. It builds candidates for the solution and abandons those which cannot fulfill the conditions. A typical example for a task to solve would be the Eight Queens Puzzle. Backtracking is also commonly used within Neuronal Networks. Many times backtracking is not implemented recursively. If backtracking uses recursion its called Recursive Backtracking

P.S. * Opportunistic decision making refers to a process where a person or group assesses alternative actions made possible by the favorable convergence of immediate circumstances recognized without reference to any general plan.

Source: www.quora.com

Details: Can you convert this recursion function into a loop?

A(x) {

if x<0 return 0;

return something(x) + A(x-1)

}Answer: Any recursive function can be made to iterate (into a loop) but you need to use a stack yourself to keep the state.

A(x) {

temp = 0;

for i in 0..x {

temp = temp + something(i);

}

return temp;

}Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

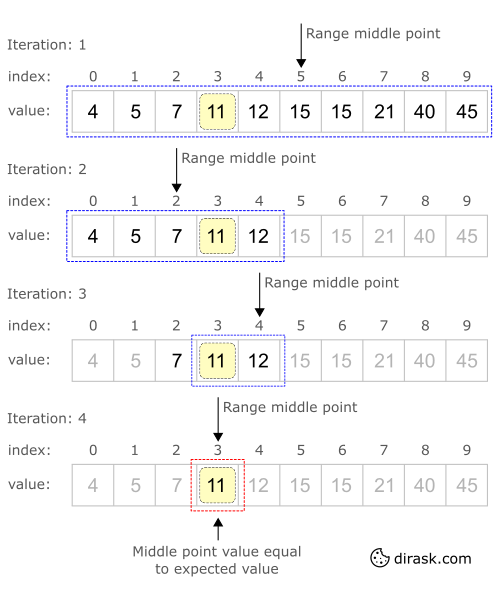

Answer: When the list is sorted we can use the binary search (also known as half-interval search, logarithmic search, or binary chop) technique to find items on the list. Here's a step-by-step description of using binary search:

- Let

min = 1andmax = n. - Guess the average of

maxandminrounded down so that it is an integer. - If you guessed the number, stop. You found it!

- If the guess was too low, set min to be one larger than the guess.

- If the guess was too high, set max to be one smaller than the guess.

- Go back to step two.

In this example we looking for array item with value 4:

When you do one operation in binary search we reduce the size of the problem by half (look at the picture below how do we reduce the size of the problem area) hence the complexity of binary search is O(log n). The binary search algorithm can be written either recursively or iteratively.

Source: www.tutorialspoint.com

Answer:

Linear (sequential) search goes through all possible elements in some array and compare each one with the desired element. It may take up to O(n) operations, where N is the size of an array and is widely considered to be horribly slow. In linear search when you perform one operation you reduce the size of the problem by one (when you do one operation in binary search you reduce the size of the problem by half). Despite it, it can still be used when:

- You need to perform this search only once,

- You are forbidden to rearrange the elements and you do not have any extra memory,

- The array is tiny, such as ten elements or less, or the performance is not an issue at all,

- Even though in theory other search algorithms may be faster than linear search (for instance binary search), in practice even on medium-sized arrays (around 100 items or less) it might be infeasible to use anything else. On larger arrays, it only makes sense to use other, faster search methods if the data is large enough, because the initial time to prepare (sort) the data is comparable to many linear searches,

- When the list items are arranged in order of decreasing probability, and these probabilities are geometrically distributed, the cost of linear search is only

O(1) - You have no idea what you are searching.

When you ask MySQL something like SELECT x FROM y WHERE z = t, and z is a column without an index, linear search is performed with all the consequences of it. This is why adding an index to searchable columns is important.

Source: bytescout.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Answer: Bubble Sort is based on the idea of repeatedly comparing pairs of adjacent elements and then swapping their positions if they are in the wrong order. Bubble sort is a stable, in-place sort algorithm.

How it works:

- In an unsorted array of

nelements, start with the first two elements and sort them in ascending order. (Compare the element to check which one is greater). - Compare the second and third element to check which one is greater, and sort them in ascending order.

- Compare the third and fourth element to check which one is greater, and sort them in ascending order.

- ...

- Repeat steps 1–

nuntil no more swaps are required.

Visualisation:

Source: github.com

Answer: Efficient sorting is important for optimizing the efficiency of other algorithms (such as search and merge algorithms) that require input data to be in sorted lists. Sorting is also often useful for canonicalizing data and for producing human-readable output. Sorting have direct applications in database algorithms, divide and conquer methods, data structure algorithms, and many more.

Source: en.wikipedia.org

Answer: The idea of an in-place algorithm isn't unique to sorting, but sorting is probably the most important case, or at least the most well-known. The idea is about space efficiency - using the minimum amount of RAM, hard disk or other storage that you can get away with.

The idea is to produce an output in the same memory space that contains the input by successively transforming that data until the output is produced. This avoids the need to use twice the storage - one area for the input and an equal-sized area for the output.

Quicksort is one example of In-Place Sorting.

Source: stackoverflow.com

Answer: The ideal sorting algorithm would have the following properties:

- Stable: Equal keys aren’t reordered.

- Operates in place: requiring O(1) extra space.

- Worst-case

O(n log n)key comparisons. - Worst-case

O(n)swaps. - Adaptive: Speeds up to O(n) when data is nearly sorted or when there are few unique keys.

There is no algorithm that has all of these properties, and so the choice of sorting algorithm depends on the application.

Source: www.toptal.com

Answer: Sorting algorithms can be categorised based on the following parameters:

- Based on Number of Swaps or Inversion. This is the number of times the algorithm swaps elements to sort the input.

Selection Sortrequires the minimum number of swaps. - Based on Number of Comparisons. This is the number of times the algorithm compares elements to sort the input. Using Big-O notation, the sorting algorithm examples listed above require at least

O(n log n)comparisons in the best case andO(n2)comparisons in the worst case for most of the outputs. - Based on Recursion or Non-Recursion. Some sorting algorithms, such as

Quick Sort, use recursive techniques to sort the input. Other sorting algorithms, such asSelection SortorInsertion Sort, use non-recursive techniques. Finally, some sorting algorithm, such asMerge Sort, make use of both recursive as well as non-recursive techniques to sort the input. - Based on Stability. Sorting algorithms are said to be

stableif the algorithm maintains the relative order of elements with equal keys. In other words, two equivalent elements remain in the same order in the sorted output as they were in the input.Insertion sort,Merge Sort, andBubble Sortare stableHeap SortandQuick Sortare not stable

- Based on Extra Space Requirement. Sorting algorithms are said to be in place if they require a constant

O(1)extra space for sorting.Insertion sortandQuick-sortarein placesort as we move the elements about the pivot and do not actually use a separate array which is NOT the case in merge sort where the size of the input must be allocated beforehand to store the output during the sort.Merge Sortis an example ofout placesort as it require extra memory space for it’s operations.

Source: www.freecodecamp.org

Answer: Insertion Sort is an in-place, stable, comparison-based sorting algorithm. The idea is to maintain a sub-list which is always sorted. An element which is to be 'insert'ed in this sorted sub-list, has to find its appropriate place and then it has to be inserted there. Hence the name, insertion sort.

Steps on how it works:

- If it is the first element, it is already sorted.

- Pick the next element.

- Compare with all the elements in sorted sub-list.

- Shift all the the elements in sorted sub-list that is greater than the value to be sorted.

- Insert the value.

- Repeat until list is sorted.

Visualisation:

Source: medium.com

Answer: Advantages:

- Simple to understand

- Ability to detect that the list is sorted efficiently is built into the algorithm. When the list is already sorted (best-case), the complexity of bubble sort is only

O(n).

Disadvantages:

- It is very slow and runs in

O(n^2)time in worst as well as average case. Because of that Bubble sort does not deal well with a large set of data. For example Bubble sort is three times slower than Quicksort even for n = 100

Source: en.wikipedia.org

Answer:

In Bubble sort, you know that after k passes, the largest k elements are sorted at the k last entries of the array, so the conventional Bubble sort uses:

public static void bubblesort(int[] a) {

for (int i = 1; i < a.length; i++) {

boolean is_sorted = true;

for (int j = 0; j < a.length - i; j++) { // skip the already sorted largest elements, compare to a.length - 1

if (a[j] > a[j+1]) {

int temp = a[j];

a[j] = a[j+1];

a[j+1] = temp;

is_sorted = false;

}

}

if(is_sorted) return;

}

}Now, that would still do a lot of unnecessary iterations when the array has a long sorted tail of largest elements. If you remember where you made your last swap, you know that after that index, there are the largest elements in order, so:

public static void bubblesort(int[] a) {

int lastSwap = a.length - 1;

for (int i = 1; i< a.length; i++) {

boolean is_sorted = true;

int currentSwap = -1;

for (int j = 0; j < lastSwap; j++) { // compare to a.length - i

if (a[j] > a[j+1]) {

int temp = a[j];

a[j] = a[j+1];

a[j+1] = temp;

is_sorted = false;

currentSwap = j;

}

}

if (is_sorted) return;

lastSwap = currentSwap;

}

}This allows to skip over many elements, resulting in about a worst case 50% improvement in comparison count (though no improvement in swap counts), and adds very little complexity.

Source: stackoverflow.com

Answer:

The add() method below walks down the list until it finds the appropriate position. Then, it splices in the new node and updates the start, prev, and curr pointers where applicable.

Note that the reverse operation, namely removing elements, doesn't need to change, because you are simply throwing things away which would not change any order in the list.

Source: stackoverflow.com

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Read answer on 👉 FullStack.Cafe

Answer: A Stack is a container of objects that are inserted and removed according to the last-in first-out (LIFO) principle. In the pushdown stacks only two operations are allowed: push the item into the stack, and pop the item out of the stack.

There are basically three operations that can be performed on stacks. They are:

- inserting an item into a stack (push).

- deleting an item from the stack (pop).

- displaying the contents of the stack (peek or top).

A stack is a limited access data structure - elements can be added and removed from the stack only at the top. push adds an item to the top of the stack, pop removes the item from the top. A helpful analogy is to think of a stack of books; you can remove only the top book, also you can add a new book on the top.

Source: www.cs.cmu.edu

Answer: A stack is a recursive data structure, so it's:

- a stack is either empty or

- it consists of a top and the rest which is a stack by itself;

Source: www.cs.cmu.edu

Answer: Because they help manage your data in more a particular way than arrays and lists. It means that when you're debugging a problem, you won't have to wonder if someone randomly inserted an element into the middle of your list, messing up some invariants.

Arrays and lists are random access. They are very flexible and also easily corruptible. If you want to manage your data as FIFO or LIFO it's best to use those, already implemented, collections.

More practically you should:

- Use a queue when you want to get things out in the order that you put them in (FIFO)

- Use a stack when you want to get things out in the reverse order than you put them in (LIFO)

- Use a list when you want to get anything out, regardless of when you put them in (and when you don't want them to automatically be removed).

Source: stackoverflow.com

Details:

Given two queues with their standard operations (enqueue, dequeue, isempty, size), implement a stack with its standard operations (pop, push, isempty, size). The stack should be efficient when pushing an item.

Answer:

Given we have queue1 and queue2:

push - O(1):

- enqueue in

queue1

pop - O(n):

- while size of