
Plotting package metrics with Rperform

Note: The visualization/plotting functionalities of Rperform will be improved and expanded upon over the next few weeks. Keep checking back to stay updated.

Rperform currently has two classes of functions for plotting package metrics. They respectively deal with metrics across a single branch and with comparisons across two branches.

The plotting functions, like most of the other Rperform functions, are designed to work in harmony with Hadley's testthat package. However, they also work fine with packages that do not use testthat. Read on for further explanation.

Go straight to the examples if you want to skip the theoretical details. However, going through the details below will help you grasp the plotting functions completely.

Before diving into the specific functions, one bit of information might be helpful. The key parameter for using the functions effectively is metric. Any plotting function of Rperform accepts three options for the metric parameter:

- time - Deals with and plots the runtime metric for all the tests contained in testfile as well as for the file itself. All the tests contained within a single testfile (as in the case of testthat blocks) are plotted as a single plot.

- memory - Deals with and plots the memory metrics (maximum memory utilized and total memory leaked during execution) for all the tests contained in testfile as well as the file itself. All the tests contained within a single testfile (as in the case of testthat blocks) are plotted as a single plot.

- testMetrics - This option plots both metrics (time and memory) on a single plot for each test contained in a single testfile. Each testfile as a whole also gets a single plot. For example, assume that the testfile in question contains two testthat blocks. Then three plots will be generated for that testfile, each containing information about three metrics (two memory metrics and one runtime metric).
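The plot counts described in the list above can be written down as a small sketch. The helper `expected_plots()` is hypothetical and not part of Rperform; it only restates the rules from the list and uses base R's `match.arg()` to reject anything other than the three documented options.

```r
# Hypothetical helper (not part of Rperform): validate a metric name and
# return how many plots the list above says to expect for one test file.
expected_plots <- function(metric = c("time", "memory", "testMetrics"),
                           n_test_blocks) {
  metric <- match.arg(metric)  # errors on anything outside the three options
  if (metric == "testMetrics") {
    n_test_blocks + 1          # one plot per test block, plus one for the file
  } else {
    1                          # all tests in the file share a single plot
  }
}

# A test file with two testthat blocks, as in the example above.
expected_plots("testMetrics", n_test_blocks = 2)
expected_plots("time", n_test_blocks = 2)
```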

Further, depending on the kind of analysis required (across a single branch or comparison across two branches), different types of plots will be returned. Go through the below examples for a clearer understanding.

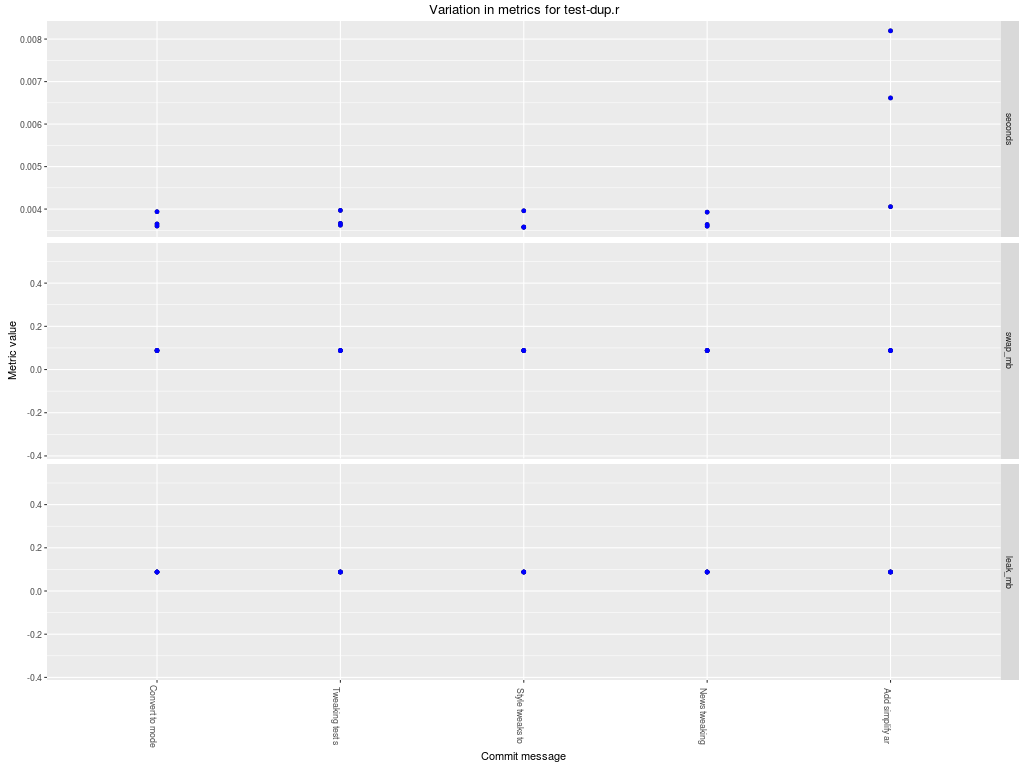

Note: For each individual commit, three values of a particular metric are measured and plotted. This is why multiple values appear for a single commit on the plots. Often the values simply overlap.
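To picture what "three values per commit" means, here is a toy data frame built with base R. The column names and the numbers are placeholders made up for illustration; they are not Rperform's actual output.

```r
# Illustrative layout only: column names and values are placeholders,
# not the data frame Rperform really produces.
commits <- c("commit A", "commit B", "commit C")

measurements <- data.frame(
  commit  = rep(commits, each = 3),             # three measurements per commit
  run     = rep(1:3, times = length(commits)),  # which of the three runs
  seconds = c(0.10, 0.10, 0.11,                 # near-identical values would
              0.12, 0.12, 0.12,                 # overlap on a plot
              0.09, 0.10, 0.09)
)

# Each commit contributes three rows, hence three points on the plot.
table(measurements$commit)
```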

-> The most basic function of this class is the plot_metrics() function. We will use the package stringr for demonstration purposes.

## Warning: Always set the current directory to be the root directory of the package you intend to test.

setwd("path/to/stringr")

library(Rperform)

plot_metrics(test_path = "tests/testthat/test-extract.r", metric = "time", num_commits = 10, save_data = FALSE, save_plots = FALSE)
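Since the functions must be run from the package root, a quick sanity check before plotting can save a confusing error. The sketch below is a hypothetical helper, not part of Rperform, and it assumes that a package root is a directory containing both a DESCRIPTION file and a .git entry.

```r
# Hypothetical helper (not part of Rperform): does `path` look like the root
# of an R package kept under git? Assumes DESCRIPTION and .git mark the root.
is_package_root <- function(path = getwd()) {
  file.exists(file.path(path, "DESCRIPTION")) &&
    file.exists(file.path(path, ".git"))
}

if (!is_package_root()) {
  message("Working directory does not look like a package root: ", getwd())
}
```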

Note: Setting save_plots to TRUE stores the generated plots in the 'Rperform_Data' directory in the root of the repo instead of printing them on the screen. Similarly, setting save_data to TRUE stores the data frame containing the metrics information in the 'Rperform_Data' directory in the root of the repo.
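After a run with save_plots or save_data set to TRUE, the saved output can be inspected with base R. This sketch only assumes the 'Rperform_Data' directory name given above; the names of the files inside it are not documented here, so it simply lists whatever is present.

```r
# Look for the output directory in the current working directory, which
# should be the package root.
out_dir <- file.path(getwd(), "Rperform_Data")

if (dir.exists(out_dir)) {
  print(list.files(out_dir))  # whatever plots or data files were saved
} else {
  message("No Rperform_Data directory found in ", getwd())
}
```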

Rperform::plot_metrics(test_path = "tests/testthat/test-dup.r", metric = "memory", num_commits = 5, save_data = FALSE, save_plots = FALSE)

Rperform::plot_metrics(test_path = "tests/testthat/test-dup.r", metric = "testMetrics", num_commits = 5, save_data = FALSE, save_plots = TRUE)

-> The next two functions in this category provide the same functionality as plot_metrics() but for the entire directory of test files rather than just a single one. They are: plot_directory() and plot_webpage().

plot_directory(): Given a directory path, plot the memory and time usage statistics of all files in the directory against the commit message summaries of the specified number of commits in the current git repository.

Usage

plot_directory(test_directory, metric = "testMetrics", num_commits = 5, save_data = FALSE, save_plots = TRUE)
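Conceptually, working on a whole directory amounts to running the single-file function once per test file. The sketch below illustrates that idea only; it is not how plot_directory() is implemented. The helper `plot_each_file()` is hypothetical, and the `.r`/`.R` file pattern is an assumption.

```r
# Hypothetical helper (not part of Rperform): call a single-file plotting
# function once for every R file found in a test directory.
plot_each_file <- function(test_directory, plot_fun, ...) {
  test_files <- list.files(test_directory, pattern = "\\.[rR]$",
                           full.names = TRUE)
  # Extra arguments (metric, num_commits, ...) are passed through unchanged.
  invisible(lapply(test_files, function(f) plot_fun(test_path = f, ...)))
}

# Possible usage from the package root, assuming Rperform is installed:
# plot_each_file("tests/testthat", Rperform::plot_metrics,
#                metric = "time", num_commits = 5,
#                save_data = FALSE, save_plots = TRUE)
```

Passing the plotting function as an argument keeps the sketch independent of Rperform, so it can be tried out with any stand-in function first.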

plot_webpage(): Plots specified metrics for all the tests present in the specified directory of the current git repository on a webpage.

Usage

plot_webpage(test_directory = "tests/testthat", metric = "testMetrics", output_name = "index")

Note 1: For each individual commit, three values of a particular metric are measured and plotted. This is why multiple values appear for a single commit on the plots. Often the values simply overlap.

Note 2: In the below plots, the commit on the left-hand side (LHS) of the vertical line is the latest commit from the branch passed as the parameter 'branch2'. The right-hand side (RHS) contains the commits from the branch passed as the parameter 'branch1'. The commits on the RHS run from branch1's latest commit back to the first commit it has in common with 'branch2'. This is helpful when a developer wants to merge one branch (branch1) into another (branch2), which is why the default value of branch2 is 'master'.
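The selection rule in Note 2 can be sketched with plain character vectors standing in for commit logs (newest commit first). The helper `commits_until_common()` is hypothetical and not part of Rperform, and the sketch assumes that the common commit itself is included in the result.

```r
# Hypothetical helper (not part of Rperform): given two commit logs ordered
# newest first, return branch1's commits from its latest commit down to the
# first commit that also appears on branch2 (assumed to be included).
commits_until_common <- function(branch1_log, branch2_log) {
  common <- which(branch1_log %in% branch2_log)
  if (length(common) == 0) {
    return(branch1_log)              # no shared history: keep everything
  }
  branch1_log[seq_len(common[1])]
}

# Placeholder commit labels, newest first.
branch1_log <- c("d", "c", "b", "a")   # e.g. a feature branch
branch2_log <- c("e", "b", "a")        # e.g. master
commits_until_common(branch1_log, branch2_log)
```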

-> The plot_branchmetrics() function can be used to compare performance across two branches (keeping in mind the details from Note 2).

## Warning: Always set the current directory to be the root directory of the package you intend to test.

setwd("path/to/stringr")

library(Rperform)

plot_branchmetrics(test_path = "tests/testthat/test-join.r", metric = "time", num_commits = 10, save_data = FALSE, save_plots = FALSE)

Rperform::plot_branchmetrics(test_path = "tests/testthat/test-interp.r", metric = "memory", branch1 = "rperform_test", save_data = FALSE, save_plots = FALSE)

Setting metric = "testMetrics" works the same way as explained in the examples for the single-branch functions in the previous section.