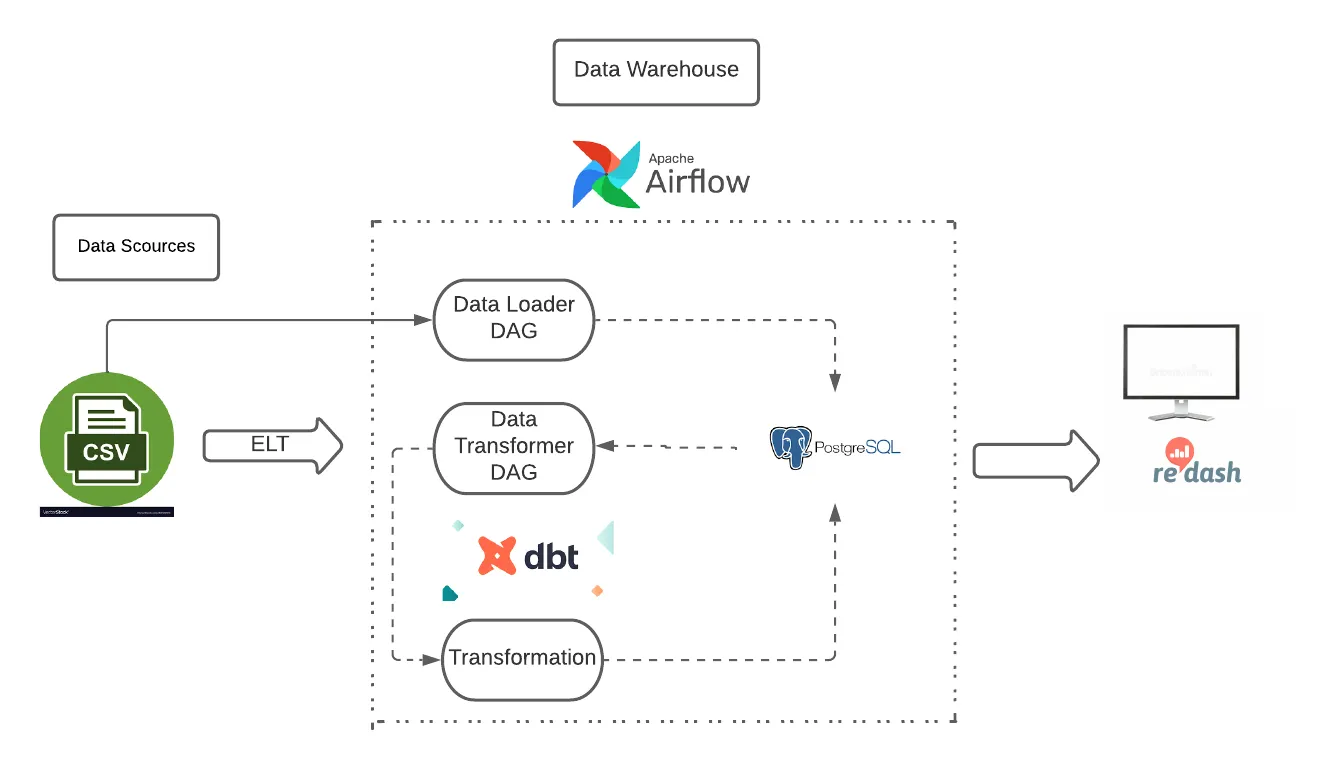

This project builds a scalable data warehouse for a city traffic department that uses swarm UAVs (drones) to collect traffic data. The data is intended for improving traffic flow and for other, undisclosed projects. The tech stack comprises MySQL, dbt, and Airflow, and follows the Extract, Load, Transform (ELT) framework.

The project structure includes:

- data: CSV files for the raw and cleaned datasets.

- dags: Airflow DAGs for task orchestration.

- notebooks: Jupyter notebook for Exploratory Data Analysis (EDA).

- screenshots: Visual representations of the project, including tech stack flow, path for track ID, and speed comparisons.

- scripts: Python utility scripts.

- traffic_dbt: dbt (Data Build Tool) files and configurations.

- docker-compose.yaml: Docker Compose file that sets up the Airflow services in Docker.

This repository contains the necessary files to set up a Dockerized Airflow environment for data loading into PostgreSQL.

- Clone the Repository:

  `git clone https://github.com/birehan/Traffic-Analytics-Data-Warehouse-Airflow-dbt-Redash`

  `cd Traffic-Analytics-Data-Warehouse-Airflow-dbt-Redash`

- Configure Environment Variables (Optional):

  If needed, you can set environment variables by creating a `.env` file in the project root. Adjust variables as necessary.

  Example `.env` file:

  `AIRFLOW_UID=1001`

  `AIRFLOW_IMAGE_NAME=apache/airflow:2.8.0`

  `_PIP_ADDITIONAL_REQUIREMENTS=your_additional_requirements.txt`
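On Linux hosts, `AIRFLOW_UID` is usually set to the current user's ID so that files written by the containers stay owned by that user. The sketch below generates the first two lines of the example file that way. The helper names `render_env` and `write_env` are hypothetical, and the fallback of 50000 assumes the default used by the official Airflow Compose file; check both against this repository's `docker-compose.yaml`.

```python
import os
from pathlib import Path

# Image tag taken from the example .env file above.
DEFAULT_IMAGE = "apache/airflow:2.8.0"


def render_env(uid: int, image: str = DEFAULT_IMAGE) -> str:
    """Return the text of a minimal .env file for the Compose setup."""
    return f"AIRFLOW_UID={uid}\nAIRFLOW_IMAGE_NAME={image}\n"


def write_env(path: str = ".env") -> Path:
    """Write a .env file using the current user's ID where available."""
    # os.getuid does not exist on Windows; fall back to 50000 there
    # (assumed default, see the note above).
    uid = os.getuid() if hasattr(os, "getuid") else 50000
    target = Path(path)
    target.write_text(render_env(uid))
    return target


if __name__ == "__main__":
    # Print rather than write, so running the sketch has no side effects.
    print(render_env(1001), end="")
```

Calling `write_env()` from the project root creates the file; `_PIP_ADDITIONAL_REQUIREMENTS` is left out here and can be appended by hand if needed.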

- Build and Run Airflow Services:

  `docker-compose up --build`

- Access Airflow Web Interface:

  Once the services are running, access the Airflow web interface at http://localhost:8080.
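Before opening the browser, it can help to confirm that the services have finished starting. The Airflow webserver exposes a `/health` endpoint that reports a status per component; the sketch below reads it and lists anything not reporting `healthy`. The function names are hypothetical, and the base URL assumes the default port shown above.

```python
import json
from urllib.request import urlopen


def unhealthy_components(health: dict) -> list:
    """Return the names of components whose status is set but not 'healthy'.

    Components that report no status (None) are skipped, since some
    optional components are not running in every setup.
    """
    flagged = []
    for name, info in health.items():
        if not isinstance(info, dict):
            continue
        status = info.get("status")
        if status is not None and status != "healthy":
            flagged.append(name)
    return sorted(flagged)


def fetch_health(base_url: str = "http://localhost:8080", timeout: float = 5.0) -> dict:
    """Fetch and decode the JSON body of the webserver's /health endpoint."""
    with urlopen(f"{base_url}/health", timeout=timeout) as response:
        return json.load(response)
```

Once the containers are up, `unhealthy_components(fetch_health())` should return an empty list when the metadata database and scheduler are both ready.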

- Stop Airflow Services:

  When you're done, stop the Airflow services:

  `docker-compose down`

- The Airflow DAG `create_vehicle_tables` creates a PostgreSQL database and its tables, then loads data from a CSV file.
- Customize the DAG or SQL scripts in the `dags` and `dags/sql` directories as needed.
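As a rough illustration of the create-then-load pattern described above, the sketch below creates a table and bulk-inserts rows parsed from a CSV file. It is not the repository's DAG code: the table name `vehicles` and the columns `track_id`, `vehicle_type`, and `avg_speed` are hypothetical, and `sqlite3` stands in for PostgreSQL so the example runs without a database server.

```python
import csv
import io
import sqlite3

# Hypothetical schema; the real tables are defined in dags/sql.
CREATE_SQL = """
CREATE TABLE IF NOT EXISTS vehicles (
    track_id INTEGER PRIMARY KEY,
    vehicle_type TEXT,
    avg_speed REAL
)
"""

# sqlite3 uses "?" placeholders; a PostgreSQL driver such as psycopg2 uses "%s".
INSERT_SQL = "INSERT INTO vehicles (track_id, vehicle_type, avg_speed) VALUES (?, ?, ?)"


def read_rows(csv_file):
    """Parse CSV text into typed tuples matching the table's column order."""
    reader = csv.DictReader(csv_file)
    return [
        (int(row["track_id"]), row["vehicle_type"].strip(), float(row["avg_speed"]))
        for row in reader
    ]


def load_vehicles(conn, csv_file) -> int:
    """Create the table if needed, insert every CSV row, and return the row count."""
    rows = read_rows(csv_file)
    cursor = conn.cursor()
    cursor.execute(CREATE_SQL)
    cursor.executemany(INSERT_SQL, rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    sample = io.StringIO("track_id,vehicle_type,avg_speed\n1,Car,34.5\n2,Taxi,28.1\n")
    connection = sqlite3.connect(":memory:")
    print(load_vehicles(connection, sample))
```

In an Airflow task, the same two steps would typically run against a PostgreSQL connection configured in Airflow, with the SQL kept in `dags/sql` as this repository does.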