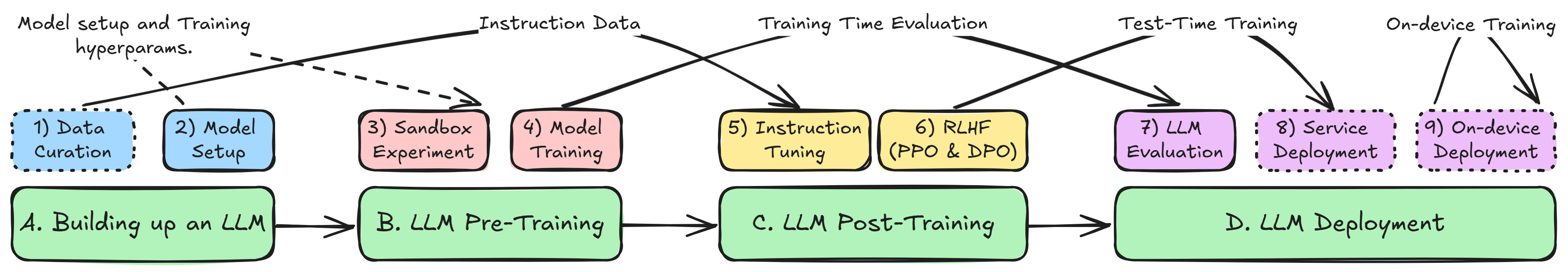

A full-stack practice for training a large language model (LLM), prepared for RLChina 2024. An overview follows.

This is technical material for LLM training engineers and for researchers interested in LLMs. It contains scripts and Jupyter notebooks (`.ipynb` files) that let you quickly train and use an LLM.

Note: The list of topics will be improved over time. The first version is used for the courses at RLChina 2024, Guangzhou.

| No. | Section | Description | Code | Last Update Date |
|---|---|---|---|---|
| 1 | Data Curation | This section covers the process of collecting, cleaning, and preparing datasets for LLM training. It ensures that the data is suitable and ready for model training. | data_curation | 2024-10-04 |
| 2 | LLM Model Setup | Here we explain how to configure and initialize the LLM architecture. This includes defining model parameters and preparing the environment for training. | llm_model_setup | 2024-10-04 |
| 3 | LLM Pre-Training | This section guides you through pre-training the LLM on large-scale datasets. It focuses on the initial phase where the model learns general language patterns. | llm_pretraining | 2024-10-04 |
| 4 | LLM Post-Training | Post-training involves fine-tuning the model for specific tasks or domains. This section walks through adjusting the pretrained model for enhanced performance. | llm_posttraining | 2024-10-04 |
| 5 | LLM Deployment | Learn how to deploy the trained model into production environments. This includes integrating the model with applications and optimizing performance. | llm_deployment | 2024-10-04 |
| 6 | Resources and References | This section provides additional resources, including papers, tutorials, and tools for LLM training and deployment. It's a helpful reference for further learning and exploration. | resource_and_references | 2024-10-04 |

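As a rough illustration of the cleaning and preparation step described in the Data Curation row, here is a minimal Python sketch that normalizes whitespace, drops very short documents, and removes exact duplicates. The names `clean_text`, `curate`, and `min_chars` are hypothetical and chosen for this example only; they are not taken from the `data_curation` code in this repo, which may work quite differently.

```python
import re


def clean_text(text: str) -> str:
    """Collapse runs of whitespace into single spaces and trim both ends."""
    return re.sub(r"\s+", " ", text).strip()


def curate(docs, min_chars=20):
    """Clean each document, drop short ones, and remove exact duplicates.

    `min_chars` is an illustrative threshold, not a recommended value.
    """
    seen = set()
    kept = []
    for doc in docs:
        cleaned = clean_text(doc)
        if len(cleaned) < min_chars:
            continue  # too short to be useful training text
        if cleaned in seen:
            continue  # exact duplicate after normalization
        seen.add(cleaned)
        kept.append(cleaned)
    return kept


raw_docs = [
    "Large   language models learn\nfrom text.",
    "Large language models learn from text.",
    "too short",
]
curated = curate(raw_docs)
print(curated)
```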
Unless specified otherwise, the code in this repo is licensed under the Apache License, Version 2.0.