week9.md

An operating system (OS) is system software that manages computer hardware and software resources and provides common services for computer programs.

Time-sharing operating systems schedule tasks for efficient use of the system and may also include accounting software for cost allocation of processor time, mass storage, printing, and other resources.

For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer – from cellular phones and video game consoles to web servers and supercomputers.

- Scheduling one-time tasks with at: From time to time, an administrator or end user will want to run a command or series of commands at a set point in the future. Such scheduled commands are often called tasks or jobs.

For users of a Red Hat Enterprise Linux (RHEL) system, at is a solution for scheduling future tasks. This is not a standalone tool, but rather a system daemon (atd), with a set of command-line tools to interact with the daemon (at, atq and more). In a default RHEL installation, the atd daemon is installed and enabled automatically. The atd daemon can be found in the at package.

Users (including root) can queue up jobs for the atd daemon using the at command-line tool. The atd daemon provides 26 queues, a to z; jobs in alphabetically later queues get less system priority.

A new job can be scheduled by using the command at TIMESPEC. at will then read the commands to execute from stdin. For larger commands, and those that are case-sensitive, it is often easier to use input redirection from a script file, e.g., at now +5min < myscript, rather than typing all the commands manually in a terminal window. When entering commands manually, you can finish your input by pressing CTRL+D.

The TIMESPEC allows for many powerful combinations, giving users an (almost) free-form way of describing exactly when a job should be run. Typically, it starts with a time, e.g., 02:00pm, 15:59, or even teatime, followed by an optional date or a number of days in the future.

Some examples of combinations that can be used are listed in the following text. For a complete list, see the timespec definition in the Reference section (see note below).

Note: Reference section for crond includes three man pages – crond(8), crontab(1), crontab(5), which can be opened by the man command in the Red Hat Enterprise Linux 7 section.

now +5min

teatime tomorrow (teatime is 16:00)

noon +4 days

5pm august 3 2016

- Inspecting jobs: Running the atq command lists the jobs scheduled to run in the future, showing each job's number, the date and time it is scheduled for, the queue it is in, and its owner.

Note: Normal, unprivileged users can only view and control their own jobs. The root user can view and manage all jobs.

To inspect the actual commands that will run when a job is executed, use the command at -c followed by the job number. The output will first show the environment for the job being set up to reflect the environment of the user who created the job (at the time the job was created), followed by the commands to be run.

- Removing jobs: The command atrm followed by the job number will remove a scheduled job. This is useful when a job is no longer needed, for example, when a remote firewall configuration has succeeded and does not need to be reset.

- Scheduling recurring jobs with Cron: Using at, one could, in theory, schedule a recurring job by having the job resubmit a new job at the end of its execution. In practice, this turns out to be a bad idea. RHEL systems ship with the crond daemon enabled and started by default, specifically for recurring jobs. crond is controlled by multiple configuration files: one per user (edited with the crontab(1) command) and a set of system-wide files. These configuration files give users and administrators fine-grained control over exactly when their recurring jobs should be executed. The crond daemon is installed as part of the cronie package.

If the commands that run from a cron job produce any output to either stdout or stderr that is not redirected, the crond daemon will attempt to email that output to the user owning that job (unless overridden) using the mail server configured on the system. Depending on the environment, this may need additional configuration.

- Scheduling jobs: Normal users can use the crontab command to manage their jobs. This command can be called in four different ways:

crontab -l (List the jobs for the current user).

crontab -r (Remove all jobs for the current user).

crontab -e (Edit jobs for the current user).

crontab <filename> (Remove all jobs, and replace them with the jobs read from <filename>).

If no file is specified, stdin will be used. Note: root can use the -u option to manage the jobs for another user. It is not recommended to use the crontab command to manage system jobs; instead, the methods described in the next section should be used.

When editing jobs with crontab -e, an editor will be started (vi by default, unless the EDITOR environment variable has been set to something different). The file being edited will have one job per line. Empty lines are allowed, and comments start their line with a hash symbol (#). Environment variables can also be declared, using the format NAME=value, and will affect all lines below the line in which they are declared. Common environment variables in a crontab include SHELL and MAILTO. Setting the SHELL variable will change which shell is used to execute the commands on the lines below it, while setting the MAILTO variable will change which email address output will be mailed to.

Important: Sending emails may require additional configuration of the local mail server or an SMTP relay on a system.

Individual jobs consist of six fields, which detail when and what should be executed. When all five of the first fields match the current date and time, the command in the last field will be executed. The fields are in the following order:

Minutes

Hours

Day-of-month

Month

Day-of-week

Command

Important: When the ‘Day-of-month’ and ‘Day-of-week’ fields are both other than *, the command will be executed when either of these fields match. This can be used, for example, to run a command on the fifteenth of every month, and on every Friday.

The first five of these fields all use the same syntax rules:

- * for "Don't care"/always.

- A number to specify a number of minutes or hours, a date, or a weekday. (For weekdays, 0 equals Sunday, 1 equals Monday, 2 equals Tuesday, etc. 7 also equals Sunday.)

- x-y for a range, inclusive of x and y.

- x,y for lists. Lists can include ranges as well, e.g., 5,10-13,17 in the 'Minutes' column, to indicate that a job should run at 5 minutes past the hour, 10 minutes past, 11 minutes past, 12 minutes past, 13 minutes past, and 17 minutes past.

- */x for an interval of x, e.g., */7 in the 'Minutes' column will run a job every seven minutes, at minutes 0, 7, 14, and so on up to 56 of each hour.

Additionally, three-letter English abbreviations can be used for both months and weekdays, e.g., Jan, Feb and Tue, Wed.

The last field contains the command to be executed. This command will be executed by /bin/sh unless a SHELL environment variable has been declared. If the command contains an unescaped percentage sign (%), that sign will be treated as a new line, and everything after the percentage sign will be fed to the command on stdin.

Examples of cron jobs:

Start the crontab editor:

$ crontab -e

Insert the following line:

* * * * * date >> /home/student/my_first_cron_job

Save your changes and quit the editor.

Inspect all of your scheduled cron jobs:

$ crontab -l

Wait for your job to run, then inspect the contents of /home/student/my_first_cron_job:

$ cat ~/my_first_cron_job

Remove all of the cron jobs for student:

$ crontab -r

System cron jobs: Apart from user cron jobs, there are also system cron jobs. System cron jobs are not defined using the crontab command, but are instead configured in a set of configuration files. The main difference in these configuration files is an extra field, located between the Day-of-week field and the Command field, specifying under which user a job should be run.

System cron jobs are defined in two locations: /etc/crontab and /etc/cron.d/*. Packages that install cron jobs should do so by placing a file in /etc/cron.d/, but administrators can also use this location to more easily group related jobs into a single file, or to push jobs using a configuration management system.

There are also predefined jobs that run every hour, day, week and month. These jobs will execute all scripts placed in /etc/cron.hourly/, /etc/cron.daily/, /etc/cron.weekly/, and /etc/cron.monthly/, respectively. Do note that these directories contain executable scripts, and not cron configuration files.

Important: Make sure that any scripts you place in these directories are made executable (e.g., with chmod +x). If they are not, the task will not run as per the schedule.

The /etc/cron.hourly/* scripts are executed using the run-parts command, from a job defined in /etc/cron.d/0hourly. The daily, weekly and monthly jobs are also executed using the run-parts command, but from a different configuration file: /etc/anacrontab.

In the past, /etc/anacrontab was handled by a separate daemon (anacron), but in Red Hat Enterprise Linux 7, the file is parsed by the regular crond daemon. The purpose of this file is to make sure that important jobs will always be run, and not skipped accidentally because the system was turned off or hibernating when the job ought to have been executed.

The syntax of /etc/anacrontab is different from the other cron configuration files. It contains exactly four fields per line:

Period in days: Once per how many days this job should be run.

Delay in minutes: The amount of time the cron daemon should wait before starting this job.

Job identifier: This is the name of the file in /var/spool/anacron/ that will be used to check if this job has run. When cron starts a job from /etc/anacrontab, it will update the timestamp on this file. The same timestamp is used to check when a job was last run.

Command: This is the command to be executed.

/etc/anacrontab also contains environment variable declarations using the syntax NAME=value. Of special interest is START_HOURS_RANGE. Jobs will not be started outside of this range.

A modern system requires a large number of temporary files and directories. Not just the highly visible ones such as /tmp that get used and abused by regular users but also more task-specific ones such as daemon- and user-specific volatile directories under /run. In this context, ‘volatile’ means that the file system storing these files only exists in memory. When the system reboots or loses power, all the contents of volatile storage will be gone.

To keep a system running cleanly, it is necessary for these directories and files to be created when they do not exist (since daemons and scripts might rely on them being there), and for old files to be purged so that they do not fill up disk space or provide faulty information.

In RHEL 7, systemd provides a more structured and configurable method to manage temporary directories and files: systemd-tmpfiles.

When the system starts, one of the first service units launched is systemd-tmpfiles-setup. This service runs the command systemd-tmpfiles --create --remove. This command reads configuration files from /usr/lib/tmpfiles.d/*.conf, /run/tmpfiles.d/*.conf, and /etc/tmpfiles.d/*.conf. Any files and directories marked for deletion in those configuration files will be removed, and any files and directories marked for creation (or permission fixes) will be created with the correct permissions, if necessary.

To make sure that long-running systems do not fill up their disks with stale data, there is also a systemd timer unit that calls systemd-tmpfiles --clean at a regular interval.

systemd timer units are a special type of systemd unit that have a [Timer] block indicating how often the service with the same name should be started.

On a RHEL 7 system, the configuration for the systemd-tmpfiles-clean.timer unit looks like what is shown below:

[Timer]

OnBootSec=15min

OnUnitActiveSec=1d

This indicates that the service with the same name (systemd-tmpfiles-clean.service) will be started 15 minutes after systemd has started and then once every 24 hours, afterwards.

The command systemd-tmpfiles --clean parses the same configuration files as systemd-tmpfiles --create, but instead of creating files and directories, it purges all files that have not been accessed, changed, or modified more recently than the maximum age defined in the configuration file.

### Linux Memory Management

The Linux memory management subsystem is responsible for managing the memory in the system. It includes the implementation of demand paging and virtual memory.

It also handles memory allocation for user-space programs and for kernel internal structures, the mapping of files into the address space of processes, and several other things.

The Linux memory management subsystem is complex, with many configurable settings. Almost every setting is available through the /proc filesystem and can be queried and adjusted with sysctl. These interfaces are described in man 5 proc and in the kernel documentation for /proc/sys/vm/.

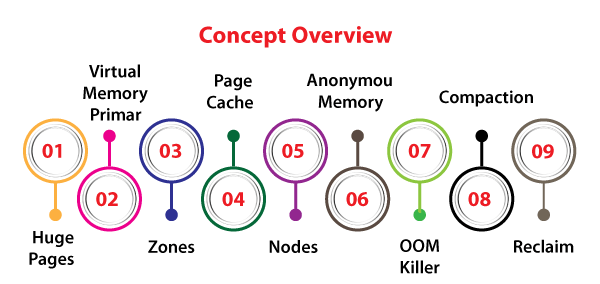

Linux memory management has its own jargon. The main concepts and mechanisms are described below.

- Huge Pages: Address translation requires several memory accesses, and memory accesses are slow relative to the speed of the CPU. To avoid spending precious processor cycles on address translation, CPUs maintain a cache of such translations called the Translation Lookaside Buffer (TLB). Huge pages let a single TLB entry cover a larger region of memory, which reduces TLB misses for applications with a large working set.

- Virtual Memory Primer: In a computer system, physical memory is a limited resource. Physical memory is not necessarily contiguous; it may be accessible as a set of distinct address ranges. In addition, different CPU architectures, and even different implementations of the same architecture, have different views of how these ranges are defined. This makes dealing with physical memory directly quite complex, and virtual memory was developed to avoid this complexity. Virtual memory hides the details of physical memory from application software. It allows only the needed information to be kept in physical memory, and it provides a mechanism for protection and controlled sharing of data between processes.

- Zones: Linux groups memory pages into zones according to their possible usage. For example, ZONE_DMA contains memory that can be used by devices for DMA, ZONE_HIGHMEM contains memory that is not permanently mapped into the kernel's address space, and ZONE_NORMAL contains normally addressable pages.

- Page Cache: Because physical memory is volatile, the common way to get data into memory is to read it from files. Whenever a file is read, the data is put into the page cache to avoid expensive disk access on subsequent reads. Similarly, whenever a file is written, the data is placed in the page cache and eventually makes its way to the backing storage device.

- Nodes: Many multi-processor machines are NUMA (Non-Uniform Memory Access) systems. In such systems, memory is arranged into banks that have different access latency depending on their "distance" from the processor. Each bank is called a node, and for each node Linux constructs an independent memory management subsystem. A node has its own set of zones, lists of free and used pages, and various statistics counters.

- Anonymous Memory: Anonymous memory, or an anonymous mapping, is memory that is not backed by a filesystem. Such mappings are created implicitly for a program's stack and heap, or explicitly by calls to the mmap(2) system call. An anonymous mapping usually only defines the areas of virtual memory that a program is allowed to access.

- OOM killer: On a loaded machine, memory may be exhausted and the kernel may be unable to reclaim enough memory to continue operating. In that case the kernel invokes the OOM (out-of-memory) killer, which terminates a process to free memory.

- Compaction: As the system runs, tasks allocate and free memory, and it becomes fragmented. Although virtual memory makes it possible to present scattered physical pages as a virtually contiguous range, some allocations need large physically contiguous areas. Memory compaction addresses this fragmentation problem.

- Reclaim: Linux memory management treats pages differently depending on their usage. Pages that can be freed, either because they cache data that is available elsewhere (for instance, on a hard disk) or because they can be swapped out to the hard disk, are called reclaimable.

All programs need to interact with the external world which makes I/O important. Programs store data in files which provide large persistent storage. In this post we will look at the system calls and functions for file I/O and the issues that govern the program and I/O device interaction.

Reading and writing to the hard disk takes a lot of time as compared with read and write from the main memory. There is also the observation of localized data access by programs in subsequent I/O calls. For example, take the case of sequential file access by a program. The program reads data at a particular location, the file access pointer moves by the amount of data read and the program reads from that location the next time. Data is read or written to a hard disk in units of blocks, where block size is determined by the filesystem on the hard disk. The block size is mostly 4K bytes, which implies buffering of data. A block of data is read and smaller chunks of data are given from it to the program in subsequent read calls. There are two levels of buffering, the kernel and user levels. The kernel keeps a copy of recently accessed disk blocks in the main memory. When a process wants some data from a file via a read call, the kernel first checks its page cache, and if the data is available, it is given to the process. If data is not available in the cache, the concerned disk block is read into the cache and data is given to the process. Similarly for a write call, availability of the concerned block is first checked in the page cache. If the concerned block is available, it is modified and marked for write to the disk. If data is not in the page cache, the concerned block is read, updated and marked for write to the disk. Since processes ask for data located in close proximity in successive read and write calls, page cache helps in minimizing the device access for I/O.

Synchronized I/O means that when we make a write-like call, the data is physically written on the hard disk and all the control metadata is updated and, only then, the call returns. Synchronized I/O is not to be confused with synchronous I/O. Linux calls like open, read, write and close are all synchronous; they block by default and return only when the required functionality is done. It is a different matter that the functionality required of write is only that of writing to the page cache. However, if we say synchronized I/O, the write must actually write all the way down to the hard disk and update all the concerned control metadata.

The primary system calls for I/O are open, creat, read, write, lseek, close and unlink.

- open

#include <sys/types.h>

#include <sys/stat.h>

#include <fcntl.h>

int open (const char *pathname, int flags);

int open (const char *pathname, int flags, mode_t mode);

- creat

#include <sys/types.h>

#include <sys/stat.h>

#include <fcntl.h>

int creat (const char *pathname, mode_t mode);

- read

#include <unistd.h>

ssize_t read (int fd, void *buf, size_t count);

- write

#include <unistd.h>

ssize_t write (int fd, const void *buf, size_t count);

- lseek

#include <sys/types.h>

#include <unistd.h>

off_t lseek (int fd, off_t offset, int whence);

- close

#include <unistd.h>

int close (int fd);

- unlink

#include <unistd.h>

int unlink (const char *pathname);

### Synchronized I/O, revisited

These system calls help in ensuring that the data written to a file is actually written to the underlying filesystem.

* sync

#include <unistd.h>

void sync (void);

* syncfs

#include <unistd.h>

int syncfs (int fd);

* fsync

#include <unistd.h>

int fsync (int fd);

* fdatasync

#include <unistd.h>

int fdatasync (int fd);

### truncate and ftruncate

* truncate

#include <unistd.h>

#include <sys/types.h>

int truncate (const char *path, off_t length);

* ftruncate

#include <unistd.h>

#include <sys/types.h>

int ftruncate (int fd, off_t length);

There are two basic concepts in Linux - processes and files. The processes do things and files keep all the important data. An efficient filesystem is important for an operating system. When Unix was conceived around 1969-70, several design decisions were taken to simplify the filesystem. It was thought that if something was simple it would be efficient and also it would provide a strong foundation for software development and operations.

Another important decision taken in design of Unix was the generalization that anything with which I/O was done was a file. So, regular files, directories, devices, interprocess communication mechanisms like pipes, fifos, sockets all are files. All files can be accessed with a file descriptor in a program. It also led to simplification of commands.

The hard disk provides the medium on which data in files can be stored. Data in files persists even when the system is switched off. There may be multiple disks and similar secondary storage media. Each disk may have one or more partitions. Each partition can have a filesystem. There is a root filesystem, which is the filesystem for the system. When the system is powered on, it is mounted and is available. The root filesystem has the root directory which is the starting point for traversing the tree structured filesystem. More filesystems can be mounted at directory nodes in the root filesystem. Once mounted, the files in the mounted filesystem appear to be part of the filesystem tree. The filesystem tree looks like this.

Although a filesystem appears to be a tree with files at its nodes, where some nodes may be directories that are in turn sub-trees, the data structure implemented in a filesystem is not a tree and is quite involved. The most important thing is that a file does not have a name. It is identified by an i-number, which is the index into a table of inodes at the beginning of a filesystem. An inode for a file contains all the control information for the file. It contains the file type, permissions, the owner and group ids for the file, the file size, the number of links to the file, the creation, last update and access timestamps, inline file data, and direct and indirect links to the blocks of data contained in the file.

There are many kinds of files under Linux. The major file types are regular files, directories, symbolic links, special files, named pipes and Unix domain sockets.

- Regular Files: A file is a sequence of bytes. The operating system does not put any special bytes inside a file. At the time of introduction of Unix, it was customary for computers to have records inside a file, with control data for each record. In Unix, data inside a file is only put in by the concerned programs. The operating system does not write any control data inside a file. As another example, some systems put carriage return (CR) and line feed (LF) control characters between lines so that the file can be displayed or printed on devices. Unix just puts an LF character when the user presses ENTER to indicate a new line. When the file is being displayed, the device driver puts in CR before every LF so that the file is displayed or printed correctly. Once again, this conforms to the basic philosophy that file contents should be exactly what the user (or program) put in. If something more is required for display on a device, it should be done by the device driver at the time of output to the device.

- Directories: Because of directories, it is not necessary to remember inode numbers to access files. A directory is a special file. It is conceptually a two-column table, mapping a file name to an inode number. The combination "filename - inode number" is called a link. These are "hard" links and can only be made to files on the same filesystem. The number of such links is kept in the inode data structure. When the number of links becomes zero and the file is not held open by any process, it is discarded.

A file appears in at least one directory. Each row in a directory can be for a file or another directory. This leads to a tree-like impression of the filesystem, with the root directory (/) at the top. Also, it means that a single inode can appear with different file names in different directories.

- Symbolic Links: Each filesystem has its own inodes. So a "hard" link to an inode (and the file) can only be made in the filesystem in which that inode is present. To make it possible to link to a file present in another filesystem, symbolic links were introduced. A symbolic link contains the path of the target file as its data.

- Special Files: Special files are devices like the hard disk or cdrom. These are mostly present in the /dev directory. There are two types of special files: block devices and character devices. On block devices, data can only be written or read in blocks. There is no such restriction on character devices, and even small amounts of data can be read or written. Data is cached in buffers in the kernel for block devices. Also, block devices need to be random access. Only filesystems on block devices can be mounted.

- Named Pipes: FIFOs, or named pipes, are used for interprocess communication. FIFOs behave just like pipes, except that they appear in the file system and can be opened, read and written by a process having the permissions to do so. The standard open, read and write calls for files work on FIFOs.

- Unix Domain Sockets: Unix domain sockets are also used for interprocess communication. The calls used are the same as those for networking sockets. Domain sockets are fast, and read and write calls can be used for sending and receiving data.

- pwd: The pwd command prints the current working directory.

$ pwd

/home/user1/src

- ls: The ls command lists files.

$ ls -ls

total 60

4 drwxr-xr-x 2 user1 user1 4096 Mar 21 20:04 dbus

28 -rw-rw-r-- 1 user1 user1 25642 Apr 15 21:00 shell.c

4 drwxr-xr-x 5 user1 user1 4096 Feb 29 18:53 socket

4 drwxr-xr-x 2 user1 user1 4096 Jan 12 12:17 threads

4 drwxr-xr-x 2 user1 user1 4096 Apr 19 02:17 time

12 -rwxr-xr-x 1 user1 user1 8296 Apr 8 09:05 try

4 -rw-r--r-- 1 user1 user1 186 Apr 8 09:05 try.c

The -i option prints the inode number for the file.

$ ls -lsi

total 60

4063680 4 drwxr-xr-x 2 user1 user1 4096 Mar 21 20:04 dbus

52167051 28 -rw-rw-r-- 1 user1 user1 25642 Apr 15 21:00 shell.c

4063368 4 drwxr-xr-x 5 user1 user1 4096 Feb 29 18:53 socket

52824390 4 drwxr-xr-x 2 user1 user1 4096 Jan 12 12:17 threads

4195245 4 drwxr-xr-x 2 user1 user1 4096 Apr 19 02:17 time

52824007 12 -rwxr-xr-x 1 user1 user1 8296 Apr 8 09:05 try

52824001 4 -rw-r--r-- 1 user1 user1 186 Apr 8 09:05 try.c

- file: The file command prints the type of a file.

$ file *

acpid.pid: ASCII text

acpid.socket: socket

alsa: directory

avahi-daemon: directory

boltd: directory

crond.reboot: empty

initctl: symbolic link to /run/systemd/initctl/fifo

initramfs: directory

lock: sticky, directory

log: directory

ntpd.pid: ASCII text, with no line terminators

sendsigs.omit.d: directory

snapd-snap.socket: socket

snapd.socket: socket

spice-vdagentd: directory

- du: The du command estimates the disk usage of files and, recursively, of directories.

$ du -h

56K ./socket/tcp

52K ./socket/udp

68K ./socket/select

180K ./socket

72K ./time

24K ./threads

20K ./dbus

344K .

- df: The df command reports the free space available on mounted filesystems.

$ df -h

Filesystem Size Used Avail Use% Mounted on

udev 3.9G 0 3.9G 0% /dev

tmpfs 784M 2.0M 782M 1% /run

/dev/sda3 92G 16G 71G 19% /

tmpfs 3.9G 80M 3.8G 3% /dev/shm

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/loop3 15M 15M 0 100% /snap/gnome-characters/399

... ... ... ... ... ....

/dev/sda4 801G 81G 679G 11% /home

tmpfs 784M 20K 784M 1% /run/user/121

tmpfs 784M 40K 784M 1% /run/user/1000

/dev/loop22 291M 291M 0 100% /snap/vlc/1620

- cat: The cat command prints the file passed as its argument on the terminal.

$ cat hello.c

#include <stdio.h>

#include <string.h>

int main (int argc, char *argv[])

{

printf ("Hello, World!\n");

}

- hexdump: cat is fine for text files, but if you want to inspect the contents of a binary file, you can use hexdump.

$ hexdump -cx hello

0000000 177 E L F 002 001 001 \0 \0 \0 \0 \0 \0 \0 \0 \0

0000000 457f 464c 0102 0001 0000 0000 0000 0000

0000010 003 \0 > \0 001 \0 \0 \0 0 005 \0 \0 \0 \0 \0 \0

0000010 0003 003e 0001 0000 0530 0000 0000 0000

0000020 @ \0 \0 \0 \0 \0 \0 \0 0 031 \0 \0 \0 \0 \0 \0

0000020 0040 0000 0000 0000 1930 0000 0000 0000

0000030 \0 \0 \0 \0 @ \0 8 \0 \t \0 @ \0 035 \0 034 \0

0000030 0000 0000 0040 0038 0009 0040 001d 001c

0000040 006 \0 \0 \0 004 \0 \0 \0 @ \0 \0 \0 \0 \0 \0 \0

0000040 0006 0000 0004 0000 0040 0000 0000 0000

0000050 @ \0 \0 \0 \0 \0 \0 \0 @ \0 \0 \0 \0 \0 \0 \0

...

For each 16-byte row, the first line shows the contents as characters (-c) and the next line shows the same bytes as two-byte hexadecimal values (-x). The leftmost column is the offset in the file.

- Text Editor: Files are created using a text editor. There are many text editors under Linux, and the one people use is a matter of taste or preference. The popular text editors are Emacs, vi, ed, nano, gedit, etc.

References:

- https://en.wikipedia.org/wiki/Operating_system

- https://www.opensourceforu.com/2018/06/how-to-schedule-tasks-in-linux-systems/

- https://www.javatpoint.com/linux-memory-management

- https://www.softprayog.in/programming/file-io-in-linux

- https://www.softprayog.in/tutorials/files-in-linux