This repo is an official pytorch implementation of the paper "MVOC: a training-free multiple video object composition method with diffusion models"

MVOC is a training-free multiple video object composition framework aimed at achieving visually harmonious and temporally consistent results.

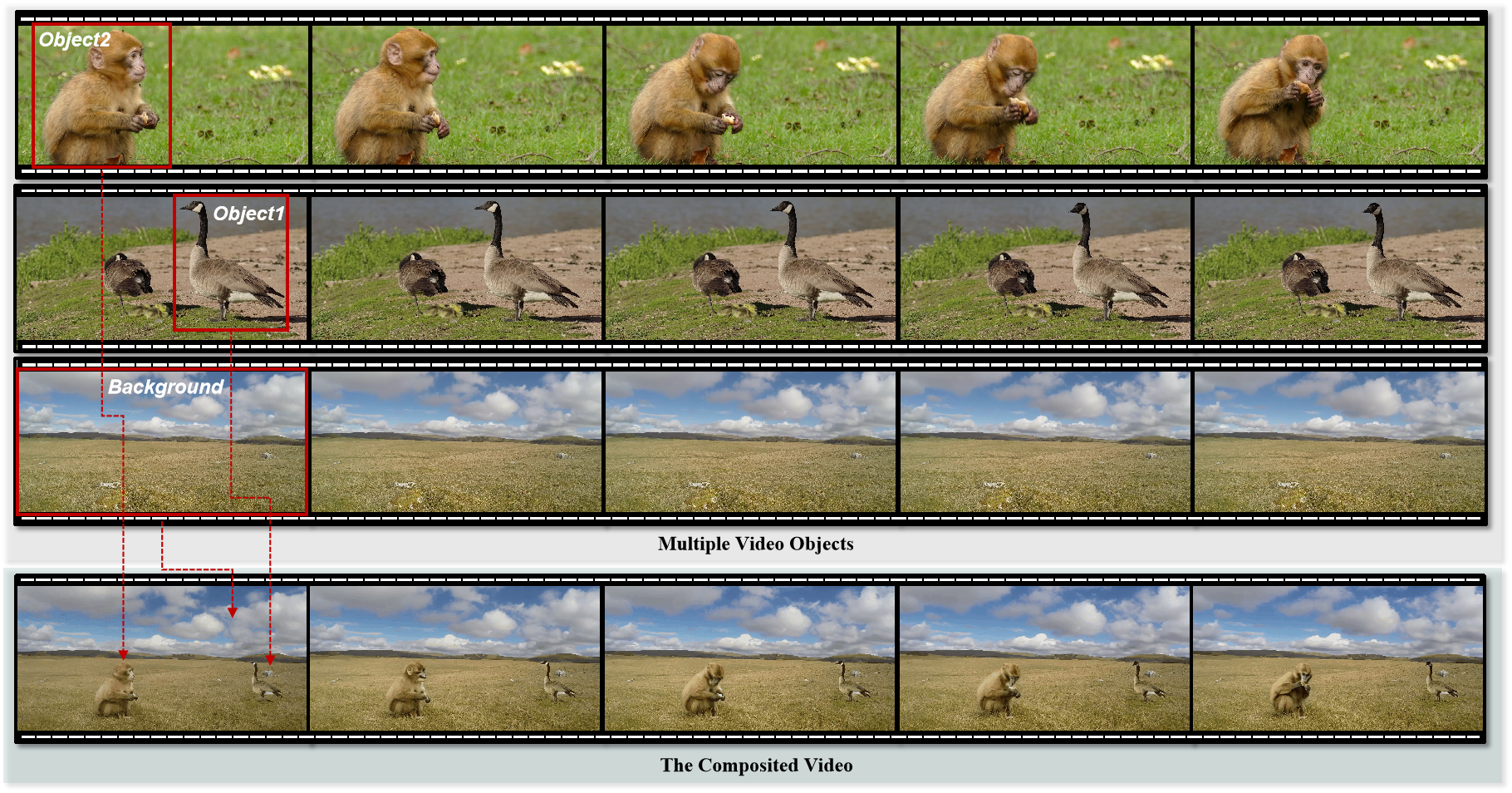

Given multiple video objects (e.g. Background, Object1, Object2), our method enables presenting the interaction effects between multiple video objects and maintaining the motion and identity consistency of each object in the composited video.

Clone this repo and prepare Conda environment using the following commands:

git clone https://github.com/SobeyMIL/MVOC

cd MVOC

conda env create -f environment.ymlWe use i2vgen-xl to inverse the videos and compose them in a training-free manner. Download it from huggingface and put it at i2vgen-xl/checkpoints.

We offer the videos we use in the paper, you can find it at demo

First, you need to get the latent representation of the source videos, we offer the inversion config file at i2vgen-xl/configs/group_inversion/group_config.json.

Then you can run the following command:

cd i2vgen-xl/scripts

bash run_group_ddim_inversion.sh

bash run_group_composition.shWe provide some composition results in this repo as below.

| Demo | Collage | Our result |

|---|---|---|

| boat_surf |  |

|

| crane_seal |  |

|

| duck_crane |  |

|

| monkey_swan |  |

|

| rider_deer |  |

|

| robot_cat |  |

|

| seal_bird |  |

|

Please kindly cite our paper if you use our code, data, models or results:

@inproceedings{wang2024mvoc,

title = {MVOC: a training-free multiple video object composition method with diffusion models},

author = {Wei Wang and Yaosen Chen and Yuegen Liu and Qi Yuan and Shubin Yang and Yanru Zhang},

year = {2024},

booktitle = {arxiv}

}This project is released under the MIT License.

The code is built upon the below repositories, we thank all the contributors for open-sourcing.