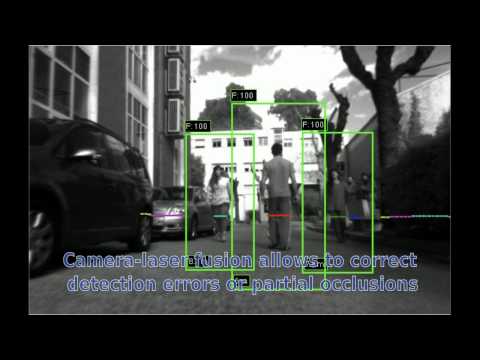

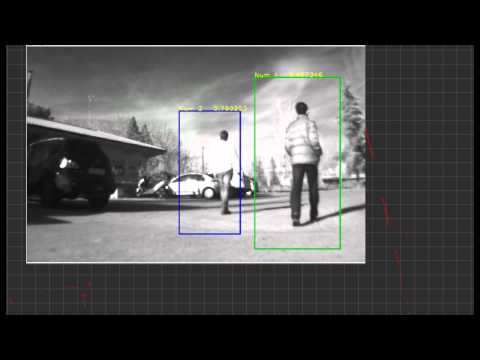

This package detects pedestrians by combining a laser range finder with a computer vision module. The vision module uses OpenCV's detector, which is based on Histograms of Oriented Gradients (HOG) and Support Vector Machines (SVM).

To pair observations and track pedestrians, it uses a combination of Kalman filters and the Mahalanobis distance.
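As an illustration of how a Mahalanobis distance can be used to pair observations with predicted track positions, here is a minimal Python sketch. It is not taken from the package: the function names, the greedy nearest-neighbour strategy, the shared covariance `S` and the `gate` threshold are all assumptions made for the example.

```python
import math


def mahalanobis_2d(z, x, S):
    """Mahalanobis distance between observation z and prediction x.

    S is a 2x2 covariance given as ((a, b), (c, d)).
    """
    dx, dy = z[0] - x[0], z[1] - x[1]
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    # d^T * S^-1 * d, using the closed-form inverse of a 2x2 matrix.
    q = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return math.sqrt(q)


def pair_observations(tracks, observations, S, gate=3.0):
    """Greedy pairing of predicted track positions with observations.

    tracks: dict of track id -> predicted (x, y).
    gate: hypothetical threshold; observations farther than this
    (in Mahalanobis units) are never paired with a track.
    """
    pairs = {}
    used = set()
    for track_id, pred in tracks.items():
        best, best_dist = None, gate
        for idx, z in enumerate(observations):
            if idx in used:
                continue
            dist = mahalanobis_2d(z, pred, S)
            if dist < best_dist:
                best, best_dist = idx, dist
        if best is not None:
            pairs[track_id] = best
            used.add(best)
    return pairs
```

In a real tracker the covariance would normally come from each track's Kalman filter rather than being shared, and unpaired observations would typically start new tracks.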

The code was written for the ROTOS project in the RobCib research group of the Polytechnic University of Madrid.

If you use this code, please cite the following works:

BibTeX entry:

@article{doi:10.1108/IR-02-2015-0037,

author = { Mario Andrei Garzon Oviedo and Antonio Barrientos and Jaime Del Cerro and Andrés Alacid and Efstathios Fotiadis and Gonzalo R. Rodríguez-Canosa and Bang-Chen Wang },

title = {Tracking and following pedestrian trajectories, an approach for autonomous surveillance of critical infrastructures},

journal = {Industrial Robot: An International Journal},

volume = {42},

number = {5},

pages = {429-440},

year = {2015},

doi = {10.1108/IR-02-2015-0037},

URL = {http://dx.doi.org/10.1108/IR-02-2015-0037}

}

BibTeX entry:

@Article{s130911603,

AUTHOR = {Fotiadis, Efstathios P. and Garzón, Mario and Barrientos, Antonio},

TITLE = {Human Detection from a Mobile Robot Using Fusion of Laser and Vision Information},

JOURNAL = {Sensors},

VOLUME = {13},

YEAR = {2013},

NUMBER = {9},

PAGES = {11603--11635},

URL = {http://www.mdpi.com/1424-8220/13/9/11603},

PubMedID = {24008280},

ISSN = {1424-8220},

DOI = {10.3390/s130911603}

}

Human Detection with fusion of laser and camera

Autonomous detection tracking and following

- ROS (Indigo)

- libgsl

  - install it with: $ sudo apt-get install libgsl-ruby

- NEWMAT

  - install it with: $ sudo apt-get install libnewmat10-dev

- all the ROS components listed in the package.xml dependencies

The code compiles only using catkin.

- cd to catkin workspace

- catkin_make hdetect

- Download this rosbag

- Change the recognizeBag.launch launchfile to point towards the rosbag

- Run roslaunch hdetect recognizeBag.launch

- Rviz is going to launch. Enable the image (camera)

- Wait until everything has launched, then press space to start playback

- headlessRT - human detection without visualization and tracking

- visualizeRT - human detection with visualization and without tracking

- recognizeRT - human detection with tracking and without visualization

- showRT - human detection with visualization and tracking

- annotateData - annotate the humans for training and save the result to a csv file

- trainLaser - train on the annotations from the given csv file and save the result to boost.xml

It is recommended to run the launch files rather than the bin files directly.

- headlessRT.launch - headlessRT on robot

- headlessBag.launch - headlessRT with rosbag. The bag name can be changed inside the launch

- visualizeRT.launch - visualizeRT on robot.

- visualizeBag.launch - visualizeRT with rosbag. The bag name can be changed inside the launch

- recognizeRT.launch - recognizeRT on robot.

- recognizeBag.launch - recognizeRT with rosbag. The bag name can be changed inside the launch

- showRT.launch - showRT on robot.

- showBag.launch - showRT with rosbag. The bag name can be changed inside the launch

- annotateBAG.launch - annotateData with rosbag. The bag name can be changed inside the launch.

- trainLaser.launch - trainLaser with csv file. The file name can be changed inside the launch.

#### lengine

Segments the laser points into clusters and calls the function that computes the 17 features of the laser points.
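The README does not say how the clusters are formed. One common approach for ordered 2D laser scans is jump-distance segmentation, sketched below in Python for illustration only. The function name and the `jump` and `min_points` parameters are hypothetical and are not taken from lengine.

```python
import math


def segment_scan(points, jump=0.3, min_points=3):
    """Split an ordered list of (x, y) laser points into clusters.

    A new cluster starts whenever the gap between two consecutive
    points exceeds `jump` (assumed to be in metres). Clusters with
    fewer than `min_points` points are discarded.
    """
    clusters, current = [], []
    for p in points:
        if current:
            last = current[-1]
            gap = math.hypot(p[0] - last[0], p[1] - last[1])
            if gap > jump:
                if len(current) >= min_points:
                    clusters.append(current)
                current = []
        current.append(p)
    # Flush the last open cluster.
    if len(current) >= min_points:
        clusters.append(current)
    return clusters
```

Each resulting cluster could then be passed to a feature computation step such as the one lfeature provides.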

#### lfeature

Computes the 17 features of the laser points.

#### lgeometry

Computes the geometry used in the computation of the features.

#### laserLib

Loads the raw laser points and calls the functions that compute the clusters and the features.

#### projectTools

Standard functions for projection, used everywhere.
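To give an idea of what a projection helper does when fusing laser and camera data, the following Python sketch projects a 3D point onto the image plane with a pinhole camera model. It is an assumption-laden illustration, not the projectTools code: the function name and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical, and the point is assumed to be already expressed in the camera frame (the laser-to-camera transform is omitted).

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point (x, y, z) in the camera frame to pixel (u, v).

    fx, fy are focal lengths in pixels and cx, cy is the principal
    point. Returns None for points at or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)
```

For example, a point on the optical axis maps to the principal point, so `project_point((0, 0, 2), 500, 500, 320, 240)` gives `(320.0, 240.0)`. Lens distortion is ignored in this sketch.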

#### Header

Contains the enumeration of HUMAN, the static topic name, curTimeStamp and preTimeStamp.

#### Human

Structure for storing the values of the human detection and tracking.

#### Observation

Structure for storing the values cast from a detection.

#### ObjectTracking

Uses a Kalman filter to track the object, including the predict and update steps.
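The predict and update steps of a Kalman filter can be sketched as follows. This is a deliberately small 1D constant-velocity example in Python and not the ObjectTracking implementation: the class name, the state layout `[position, velocity]` and the noise values `q` and `r` are assumptions chosen for illustration.

```python
class KalmanCV1D:
    """1D constant-velocity Kalman filter with position measurements."""

    def __init__(self, p0, v0=0.0, var_p=1.0, var_v=1.0, q=0.01, r=0.1):
        self.x = [p0, v0]                        # state: position, velocity
        self.P = [[var_p, 0.0], [0.0, var_v]]    # state covariance
        self.q = q                               # process noise (hypothetical)
        self.r = r                               # measurement noise (hypothetical)

    def predict(self, dt):
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                 # innovation covariance, H = [1, 0]
        k0, k1 = a / s, c / s          # Kalman gain
        y = z - self.x[0]              # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
```

A typical cycle calls `predict(dt)` once per time step and `update(z)` whenever an observation has been paired with the track. A pedestrian tracker would use a 2D state, but the structure of the two steps is the same.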

#### Detector

Callback function of headlessRT and main function for detection. Runs the laser detection and the image detection, then merges the results.

#### Visualizer

Callback function of visualizeRT. Runs the detector first, then plots the results in the window.

#### Recognizer

Callback function of recognizeRT. Runs the detector first, then tracks the humans and sends the result to rviz.

#### Annotator

Callback function of annotateData and main function of the annotation.

#### bagReader

Reads the bag for the annotation.

The laser processing module uses code written by L. Spinello. The tracking module is based on the work of Gonzalo Rodriguez-Canosa.