-

Notifications

You must be signed in to change notification settings - Fork 220

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Added Vectorized Gym Env Action Repeat Wrapper #832

base: main

Are you sure you want to change the base?

Conversation

949681f to

027aa96

Compare

027aa96 to

a0a8390

Compare

|

Actually I just had a thought. One problem with action repeats at this layer of a wrapper is generates observation and reward data more than once, which is unnecessarily slow. I'm open to the idea of instead calling the internal _step_action function twice, which would make things faster and if you are worried about recorded videos being jumpy the record episode wrapper has a record_substeps argument that lets you record the sim steps in between |

|

@StoneT2000 The idea of using |

4a27dfa to

372c65a

Compare

372c65a to

48b19fe

Compare

a6cf2c8 to

26ddb8c

Compare

262b8f2 to

480fc39

Compare

480fc39 to

587fa92

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

see comments for discussion. Moreover can you share the image you shared before? It is not showing up.

| from mani_skill.vector.wrappers.gymnasium import ManiSkillVectorEnv | ||

|

|

||

|

|

||

| class ActionRepeatWrapper(VectorEnvWrapper): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

thanks for the contribution! I personally would prefer if we could somehow move the ActionRepeatWrapper to be a gym.Wrapper such that it is applied before we apply ManiSkillVectorEnv. But that could be a separate implementation (but maybe its too strange to do it that way anyway). I will document in the future that all wrappers in the vector.wrappers module are VectorEnvWrappers or wrappers that map gym.Env to VectorEnv interfaces.

For "single" env wrappers inheriting gym.Wrapper will then all be placed in utils.wrapper module.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Interesting suggestion, I guess a single env is just the same as parallel envs with n=1 so this makes sense.

Is there anything I should watch for in regards to how Maniskill is different before ManiSkillVectorEnv is applied? What changes?

| self._auto_reset = ( | ||

| env.auto_reset | ||

| ) # The wrapper will handle auto resets based on if the base env had auto_reset enabled | ||

| self.env.auto_reset = False # Disable auto_reset in the base env since we will handle it in this wrapper |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

just saw this actually. Would it be somehow easier if instead we actually move action repeat wrapper to before applying ManiSkillVectorEnv?

I also prefer to avoid reading given environment properties like env.auto_reset because some users may apply other wrappers before applying the ActionRepeatWrapper

In general gym wrappers are meant to be designed such that they can be chained in any order. As such it is possible for user error here if we assume this auto_reset variable exists.

A quick fix as opposed to doing what I suggest is to search the wrapped environments for the env that is an instance of ManiSkillVectorEnv and then modify.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I agree that this section probably does not follow the gym wrappers philosophy, let's improve this.

I will try to see if I can move the wrapper before applying ManiSkillVectorEnv as suggested.

| dones = torch.logical_or(final_terminations, final_truncations) | ||

| not_dones = ~dones | ||

|

|

||

| if not dones.all(): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

small optimization, can we do not_dones.any(). I think torch may run .any faster than .all

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Although in the worst case and average case these are both the same, you are right that in the best case it is faster to do not_dones.any(). I will change that thanks!

I re-uploaded the image, the PR description should be good now. |

|

Neat diagram! We should probably include that in our documentation somewhere to explain how action repeat works in batched sims. another question I just thought of is when one env finishes earlier during the action repeat sequence, it is not auto reset, and instead you just take another step right, but we do not use any of the ifnromation generated by the extra steps, and then your repeat wrapper forcefully does a reset? |

Good point, where do you think this should be added ? Which doc section ?

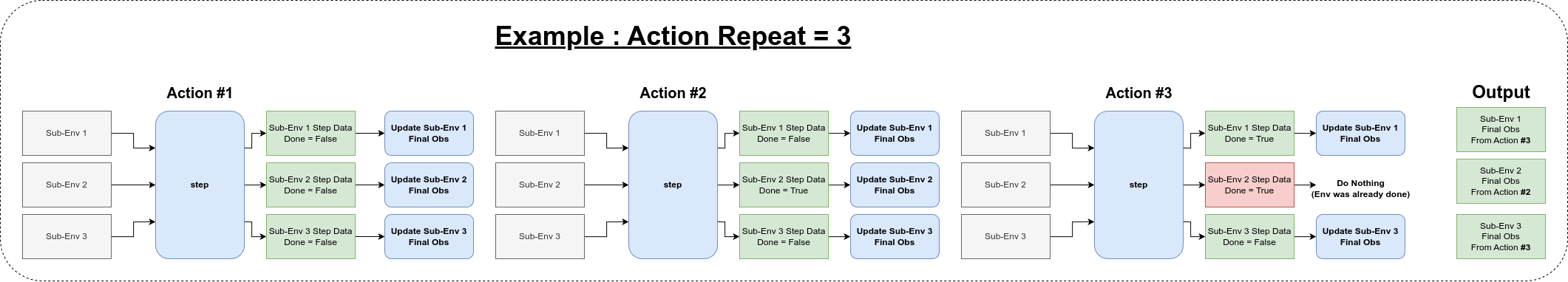

Your understanding is correct, we basically keep track of the observation and reward returned and when one env finishes earlier we stop updating our memory of its last observation and just take another step. The step will be performed in the environment but because we do not update our memory of its last observation and reward, then only the true last observation is kept so you are correct, the information of the extra steps are not used. |

|

Re the ordering of wrappers. Strongly recommend again to move wrapper to before VectorEnv or CPUGymWrapper (the expectaiton of most wrappers in maniskill is they can work in CPU or GPU sim to make creating cpu or gpu envs easier). I think it may be easier this way anyway because the auto-reset functionality is provided by ManiSkillVectorEnv class (and for gymnasium their AsyncVectorEnv class) which is almost always the final class applied to the environment. Any other ones are vector env wrappers but our codebase never uses those and I think gymnasium avoids those as well. For an action repeat wrapper that occurs before the ManiSkillVectorEnv, you can basically keep all the same code but don't do any resets actually. ManiSkillVectorEnv auto-resets if the underlying env (which is your action repeat wrapped env) returns truncation = True for some env |

Description

Adds an env wrapper that can be used with

ManiSkillVectorEnvto add action repeat functionality.action_repeat=2means the same action is repeat for 2 time steps.This wrapper handles the fact that not all parallel envs might be done at the same time.

This wrapper supports both

auto_reset=Trueandauto_reset=Falseenvs.This wrapper guarantees that the observation and data returned will be from at most

repeatactions.In other words, if an environment is not done after the action repeat steps then the final observation is the last observation obtained. But if a sub-env is already done before the action repeat steps are executed, then for this sub-env we only return the true last observation that was obtained when the sub-env became done (the extra observations are discarded).

This allows an action repeat that is maximal under parallel envs as environments that are not done do not impact environments that are done and vice-versa.

Here is a graph showing what is done by this wrapper :

Usage

Note on Alternative Implementations

Common implementations break the action repeat loop as soon as 1 (sub)-env is done, this would work but would be highly sub-optimal and even more so as the number of parallel envs grows (eg: if we have 10 000 envs, then it's very possible that we never do action_repeat = 2 steps because there might always be 1 env that is done after 1 step).

This is why I decided to not follow this common implementation which do not account for parallel envs.

Important

Must merge #828 first as this branch relies on it.