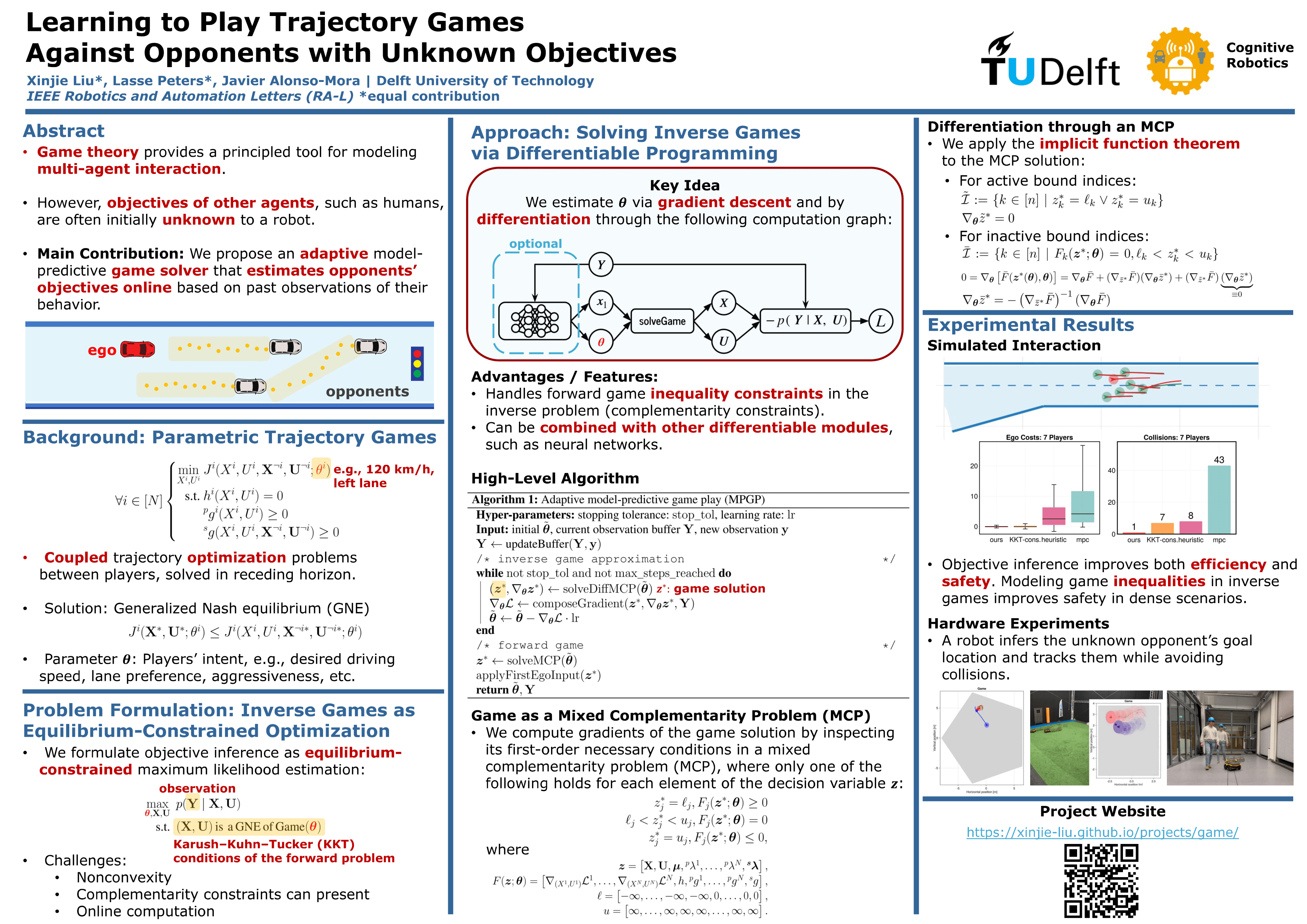

An adaptive game-theoretic planner that jointly infers players' objectives and solves for generalized Nash equilibrium trajectories, enabled by differentiating through a trajectory game solver. The solver is end-to-end differentiable and can be combined directly with other learning-based components. This software package accompanies our research paper *Learning to Play Trajectory Games Against Opponents with Unknown Objectives*. Please consult our project website for more information.

```bibtex
@article{liu2023learning,
  author={Liu, Xinjie and Peters, Lasse and Alonso-Mora, Javier},
  journal={IEEE Robotics and Automation Letters},
  title={Learning to Play Trajectory Games Against Opponents With Unknown Objectives},
  year={2023},
  volume={8},
  number={7},
  pages={4139-4146},
  doi={10.1109/LRA.2023.3280809}}
```

This package contains a nonlinear trajectory game solver (`/src/`) based on a mixed complementarity problem (MCP) formulation. The solver is made differentiable by applying the implicit function theorem (IFT). Experiment code that uses this differentiability for adaptive model-predictive game-play is provided in `/experiment/`: the folder `/experiment/DrivingExample/` contains the code for the ramp-merging experiment in our paper, and the hardware experiment code for the Jackal UGV robots is in `/experiment/Jackal/`.
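As a rough illustration of how the IFT yields gradients, here is the generic argument. This is a sketch, not necessarily the exact formulation implemented in `/src/`, and the symbols $z$, $\theta$, $F$, and $\ell$ are introduced here only for illustration. Suppose that, locally around a solution, the MCP solution $z^\star$ for parameters $\theta$ (e.g., the opponents' objective parameters) is characterized by a square system $F(z^\star(\theta), \theta) = 0$. An example is the KKT conditions restricted to the active constraints under strict complementarity. If $\partial F / \partial z$ is nonsingular at that point, then

$$
\frac{\partial z^\star}{\partial \theta}
= -\left(\frac{\partial F}{\partial z}\bigg|_{(z^\star,\theta)}\right)^{-1}
\frac{\partial F}{\partial \theta}\bigg|_{(z^\star,\theta)},
\qquad
\frac{d\ell}{d\theta} = \frac{\partial \ell}{\partial z}\bigg|_{z^\star}\,\frac{\partial z^\star}{\partial \theta}.
$$

The second identity shows how a downstream loss $\ell(z^\star)$ (for instance, a mismatch between predicted and observed opponent trajectories) can be differentiated with respect to $\theta$. This is what allows the solver to be placed inside a gradient-based learning pipeline.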

Acknowledgments: Most of the infrastructure used in the Jackal experiment comes from a past project by @schmidma, who provided a package for controlling the Jackal robot in ROS from Julia code.

Note: This repository contains the original solver implementation used in our research, "Learning to Play Trajectory Games Against Opponents with Unknown Objectives." In addition, we have published a more optimized implementation of the differentiable game solver (link). We kindly ask you to cite our paper if you use either implementation in your research. Thanks!

1. Open a REPL (Julia 1.9) in the root directory: `julia`
2. Activate the package environment by hitting `]` to enter the package mode, and then type: `activate .`
3. If you haven't done so before, instantiate the environment in the package mode by typing: `instantiate`
4. Exit the package mode by hitting the backspace key, then precompile the package: `using MCPGameSolver`
5. Run the example: `MCPGameSolver.main()`
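If you prefer a script or a non-interactive session to the package-mode REPL, the same setup can be expressed through Julia's standard `Pkg` API. The sketch below assumes it is run from the repository root. For the experiments, the same pattern should apply when run from the respective experiment directory, with `DrivingExample` or `Jackal` in place of `MCPGameSolver`.

```julia
# Script equivalent of `] activate .` followed by `] instantiate`
# (assumes the current working directory is the repository root).
using Pkg
Pkg.activate(".")     # activate the package environment in the current directory
Pkg.instantiate()     # install the dependencies recorded for this environment; safe to rerun

using MCPGameSolver   # precompile and load the package
MCPGameSolver.main()  # run the example
```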

To run the ramp-merging experiment:

1. Open a REPL (Julia 1.9) in the directory `/experiment/DrivingExample`: `julia`
2. Activate the package environment by hitting `]` to enter the package mode, and then type: `activate .`
3. If you haven't done so before, instantiate the environment in the package mode by typing: `instantiate`
4. Exit the package mode by hitting the backspace key, then precompile the package: `using DrivingExample`
5. Run the ramp-merging example: `DrivingExample.ramp_merging_inference()`

To run the Jackal hardware experiment:

1. Open a REPL (Julia 1.9) in the directory `/experiment/Jackal`: `julia`
2. Activate the package environment by hitting `]` to enter the package mode, and then type: `activate .`
3. If you haven't done so before, instantiate the environment in the package mode by typing: `instantiate`
4. Exit the package mode by hitting the backspace key, then precompile the package: `using Jackal`
5. Run the tracking example: `Jackal.launch()`

This package uses the PATH solver (via PATHSolver.jl) under the hood. Larger problems require a license key. Courtesy of Steven Dirkse, Michael Ferris, and Todd Munson, temporary license keys are available free of charge. For more details about the license key, please consult the License section of PATHSolver.jl. Note that when no license is loaded, PATH may not report an informative error and may instead return a wrong result. Therefore, please make sure that the license is loaded correctly before using the solver.
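One way to load the license is through the `PATH_LICENSE_STRING` environment variable described in PATHSolver.jl's License section. The snippet below is a minimal sketch that assumes this package picks up the license through that PATHSolver.jl mechanism. The license string is a placeholder; substitute the key you obtained. Alternatively, you can export the variable in your shell before starting Julia.

```julia
# Assumption: the license is read from PATHSolver.jl's PATH_LICENSE_STRING variable.
# Set it before loading the package and solving any (large) problem.
ENV["PATH_LICENSE_STRING"] = "<your-license-string>"  # placeholder, not a real key

using MCPGameSolver
MCPGameSolver.main()
```

PATHSolver.jl also documents calling `PATHSolver.c_api_License_SetString("<license string>")` right after importing `PATHSolver`, if you prefer setting the license programmatically.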