Editor (Project)

All objects present in the scene are also available in the corresponding project. They can serve as spatial anchors for action points, or they can provide actions.

Each action object can have 0..n parameters, such as the address of a service, a virtual or physical port, or the type of workpiece to be manipulated. Such a parameter is usually set in the scene, but it can be overridden in the project to make the system more versatile. The gear button in the utility menu opens the action object settings menu (see image). This menu lists all available parameters with their current values, which can be altered there. When a parameter is overridden, the trash button removes the override and restores the default value.

Any action object or collision object can be added to the block list, so that it is not rendered in the scene and cannot be interacted with using the sight. This is done with the block list switch in the same menu where parameter overrides are defined (see above). It is especially useful for objects such as safety fences around the workplace, which must be defined so that the robot avoids them, but which the user usually does not want to interact with. Any object placed on the block list can still be selected using the block list button in the selector menu.

Action points serve as spatial anchors in the scene. They represent important points in the environment, such as the position of an object to be picked or the position of a hole where a peg should be inserted. The action point itself represents only a position, not a rotation. Each action point is in fact a container that can hold several orientations or robot joint configurations. This means that one action point can represent several poses and/or robot arm configurations.

An action point can be global (i.e. it has no parent) or part of a hierarchy (an action object or another action point serves as its parent), in which case any spatial change to the parent is applied to all of its descendants.
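
The parent-child behaviour described above can be illustrated with a minimal sketch. The `Node` class and its fields are hypothetical and are not taken from the editor's code. The sketch also assumes that only translations are propagated, whereas a real implementation would most likely apply the full transform of the parent, including its rotation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical scene node: an action object or an action point."""

    name: str
    local_position: Vec3  # offset from the parent, or from the world origin if global
    parent: Optional[Node] = None

    def world_position(self) -> Vec3:
        """Accumulate offsets up the parent chain (translation only, by assumption)."""
        x, y, z = self.local_position
        if self.parent is None:
            return (x, y, z)
        px, py, pz = self.parent.world_position()
        return (px + x, py + y, pz + z)


table = Node("table", (1.0, 0.0, 0.0))                            # global, no parent
pick_spot = Node("pick_spot", (0.25, 0.5, 0.0), parent=table)
above_pick = Node("above_pick", (0.0, 0.0, 0.25), parent=pick_spot)

# Moving the parent moves every descendant with it.
table.local_position = (2.0, 0.0, 0.0)
print(above_pick.world_position())
```

Storing positions relative to the parent is one possible design; it makes the "move the parent, move the children" behaviour fall out of the lookup without updating each descendant explicitly.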

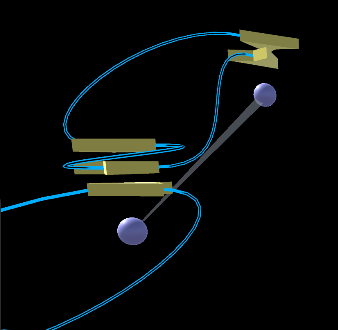

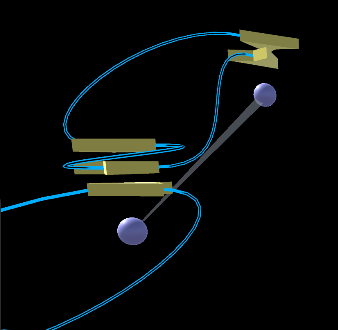

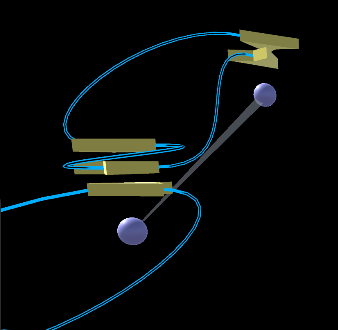

The action points are visualized as spheres at their defined positions. The size of the spheres can be changed in the main settings menu (see editor settings). The hierarchy of action points is visualized by an arrow from each action point to its parent.

An action point is added to the scene using the add category of the left menu. If the user has selected an object that can serve as a parent of an action point (i.e. any action object or another action point), the new action point is added with the selected object as its parent. If no object is selected, the new action point is added with no parent (i.e. it is global).

The action point button in the add category of the left menu adds the action point to the scene manually: it is placed 0.5 meters from the tablet in the direction of sight.
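
As a rough illustration of this placement rule, the sketch below computes a point at a fixed distance along the view direction. The function name and the tuple-based vectors are assumptions made for the example; the editor itself presumably relies on its rendering engine's vector types.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def point_in_front(camera_position: Vec3, view_direction: Vec3, distance: float = 0.5) -> Vec3:
    """Return the point `distance` meters from the camera along its view direction."""
    length = math.sqrt(sum(c * c for c in view_direction))
    if length == 0.0:
        raise ValueError("view direction must be a non-zero vector")
    # Normalize the direction, scale it by the distance, and offset the camera position.
    x, y, z = (p + distance * d / length for p, d in zip(camera_position, view_direction))
    return (x, y, z)


# Tablet held 1.5 m above the origin, looking along the z axis.
print(point_in_front((0.0, 1.5, 0.0), (0.0, 0.0, 2.0)))
```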

The action point with robot button in the add category of the left menu adds the action point at the position of the currently selected end-effector. A default orientation representing the current pose of the end-effector is added to the newly created action point, together with the joint configuration of the selected robot's arm.

Orientations can optionally be stored in any action point. Together with the action point's position, they represent poses in the environment. In the scene, each orientation is represented by a small arrow around the action point, showing the stored orientation (for example, the orientation of the end-effector in that pose).

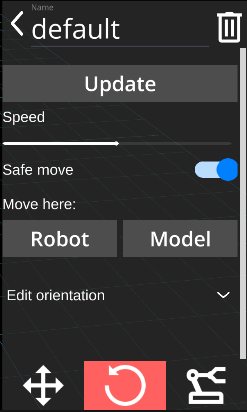

Orientations are managed in the action point aiming menu (see image). The user can create a new orientation, delete an existing one, or update the name or position of existing ones. An orientation can be updated manually, as a quaternion or as Euler angles, or by using a selected robot's end-effector.
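
Because an orientation can be entered either as Euler angles or as a quaternion, a conversion between the two is involved somewhere. The sketch below shows the commonly used roll-pitch-yaw (rotation about x, then y, then z) conversion. The source does not state which Euler convention or angle unit the editor uses, so both the convention and the use of radians are assumptions here.

```python
import math
from typing import Tuple

Quaternion = Tuple[float, float, float, float]  # (x, y, z, w)


def euler_to_quaternion(roll: float, pitch: float, yaw: float) -> Quaternion:
    """Convert roll/pitch/yaw angles in radians to a unit quaternion (x, y, z, w)."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    w = cr * cp * cy + sr * sp * sy
    return (x, y, z, w)


# No rotation maps to the identity quaternion.
print(euler_to_quaternion(0.0, 0.0, 0.0))
# A quarter turn about the z axis.
print(euler_to_quaternion(0.0, 0.0, math.pi / 2))
```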

When the action point is created using the robot (see above), the current orientation of its end-effector is added to the action point under the name Default.

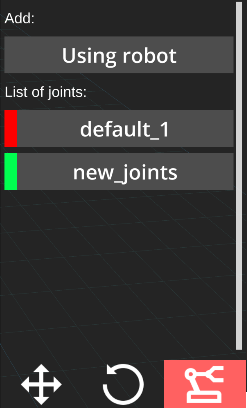

Robot joints represent an arbitrary configuration of a robot's arm (i.e. the posture of the arm). They are managed similarly to orientations, using the action point aiming menu (see above). The only difference is that robot joints can be either valid or invalid. The state of the joints is indicated by a line next to the joints' name, which is red (invalid) or green (valid). Joints are valid when setting the robot to that joint configuration places its end-effector at the position of the action point. The joints are invalidated when the action point's position is changed after the joint configuration has been created. To make them valid again, they need to be updated using the robot.

Unlike orientations, robot joints are valid only for the robot they were created with.
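
The validity rules for robot joints can be summarized in a small sketch. The class and method names below are hypothetical. The sketch also assumes that updating the joints "using the robot" means the end-effector is at the action point at that moment, which is what makes the joints valid again.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class RobotJoints:
    name: str
    robot_id: str          # joints are only meaningful for the robot they were recorded with
    values: List[float]
    is_valid: bool = True


@dataclass
class ActionPoint:
    name: str
    position: Vec3
    joints: Dict[str, RobotJoints] = field(default_factory=dict)

    def set_position(self, new_position: Vec3) -> None:
        """Moving the action point invalidates every stored joint configuration."""
        if new_position != self.position:
            self.position = new_position
            for stored in self.joints.values():
                stored.is_valid = False

    def update_joints_from_robot(self, name: str, robot_id: str, values: Sequence[float]) -> None:
        """Re-record the joints from the robot, which makes them valid again."""
        stored = self.joints[name]
        if stored.robot_id != robot_id:
            raise ValueError("joints can only be updated with the robot they were created with")
        stored.values = list(values)
        stored.is_valid = True


ap = ActionPoint("pick_spot", (0.5, 0.0, 0.25))
ap.joints["home"] = RobotJoints("home", "robot_a", [0.0, 1.0])
ap.set_position((0.5, 0.0, 0.5))
print(ap.joints["home"].is_valid)   # the joints are now marked invalid
ap.update_joints_from_robot("home", "robot_a", [0.1, 1.1])
print(ap.joints["home"].is_valid)   # valid again after the update
```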

The position of the action point is determined when it is created, and it can be changed later in various ways.

The most precise method of defining the position of the action point in "free space" is by using a robot. The action point's position can be aligned with the currently selected robot either by using the object manipulation menu or by using the action point aiming menu.

The action points can also be manipulated using the object manipulation menu. In the action point aiming menu, the position of the action point can be set directly using its 3D coordinates (only when expert mode is enabled).

An action represents one step in the robot's program. This step can be composed of several atomic robot instructions (for example, a pick action can be composed of several move instructions and gripper position instructions). Each action belongs to exactly one action point. This relationship exists purely for visualization and has no implicit semantic meaning, although an action is typically attached to the action point located where the action actually takes place.

Actions are represented by small 3D arrows above the action points. When an action is connected to a following action, its arrow points in the direction of that following action.

Actions are added using the Add action button in the add category of the left menu. To add an action, an action point needs to be selected first. After the button is pressed, a menu with all available actions opens. The actions are categorized by the action objects present in the scene, which serve as action providers (i.e. any action object can provide an action). Once the desired action is selected, it is added to the selected action point with default parameters. Right after that, the action parameters menu opens and the user can alter the parameter values.

Each action can contain 0..n parameters of the following types:

-

string

- Arbitrary text

-

double

- Decimal, a minimal and/or maximal value for the parameter could be set in object type definition

-

integer

- A minimal and/or maximal value for the parameter could be set in object type definition

-

bool

- Truth or false

-

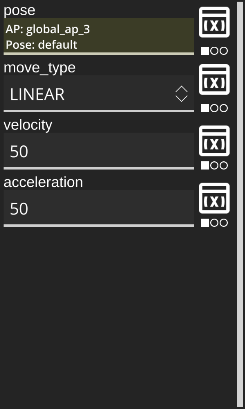

pose

- Combination of action point and its orientation

- Could be selected using the sight button next to the parameter

-

joints

- Combination of action point and its robot joints

- Could be selected using the dropdown

-

string_enum

- Enumeration of strings

- Selected using the dropdown with all available values

-

int_enum.

- Enumeration of integers

- Selected using the dropdown with all available values
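
To make the type rules above concrete, here is a minimal validation sketch. The function `validate_parameter` and its arguments are hypothetical and do not mirror the editor's API. The `pose` and `joints` types are left out because they refer to other project entities rather than to plain values.

```python
from typing import Any, Optional, Sequence


def validate_parameter(
    type_name: str,
    value: Any,
    minimum: Optional[float] = None,
    maximum: Optional[float] = None,
    allowed: Sequence[Any] = (),
) -> None:
    """Raise ValueError if `value` does not fit the declared parameter type."""
    # In Python, bool is a subclass of int, so it has to be excluded explicitly.
    is_int = isinstance(value, int) and not isinstance(value, bool)
    checks = {
        "string": isinstance(value, str),
        "double": is_int or isinstance(value, float),
        "integer": is_int,
        "bool": isinstance(value, bool),
        "string_enum": isinstance(value, str) and value in allowed,
        "int_enum": is_int and value in allowed,
    }
    if type_name not in checks:
        raise ValueError(f"unsupported parameter type: {type_name}")
    if not checks[type_name]:
        raise ValueError(f"{value!r} is not a valid {type_name}")
    # Optional limits apply to the numeric types only.
    if type_name in ("double", "integer"):
        if minimum is not None and value < minimum:
            raise ValueError(f"{value!r} is below the minimum {minimum}")
        if maximum is not None and value > maximum:
            raise ValueError(f"{value!r} is above the maximum {maximum}")


validate_parameter("double", 0.5, minimum=0.0, maximum=1.0)           # accepted
validate_parameter("string_enum", "fast", allowed=("slow", "fast"))   # accepted
```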

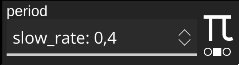

Parameters of some types (string, double, integer and bool) can be set explicitly by value, bound to a project parameter, or tied to the return value of another action (see images below).

| | | |
|---|---|---|
| An explicit value of parameter | Value derived from project parameter | Value obtained from other action |
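
The three ways of supplying a parameter value could be modelled as follows. This is only a sketch: `ParameterBinding`, the source names, and the dictionaries holding project parameters and action results are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class ParameterBinding:
    source: str  # "explicit", "project_parameter" or "action_result" (hypothetical names)
    value: Any   # the literal value, a project parameter name, or the name of another action


def resolve(
    binding: ParameterBinding,
    project_parameters: Mapping[str, Any],
    action_results: Mapping[str, Any],
) -> Any:
    """Return the concrete value a parameter takes at execution time."""
    if binding.source == "explicit":
        return binding.value
    if binding.source == "project_parameter":
        return project_parameters[binding.value]
    if binding.source == "action_result":
        return action_results[binding.value]
    raise ValueError(f"unknown parameter source: {binding.source}")


project_parameters = {"speed": 0.5}
action_results = {"count_parts": 4}

print(resolve(ParameterBinding("explicit", 10), project_parameters, action_results))
print(resolve(ParameterBinding("project_parameter", "speed"), project_parameters, action_results))
print(resolve(ParameterBinding("action_result", "count_parts"), project_parameters, action_results))
```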

Individual actions can be executed using the execute button in the home category of the left menu. When it is pressed, the currently selected action is executed. During the execution, no other action can be executed.

The program flow is defined by the sequence of connected actions. The connections visualize the state transitions from one action to another. Depending on the type of the value returned by an action, there can be one or more connections coming out of it:

- bool
  - one connection for "any" returned value
  - or two connections, one for when the result is True, the other one for when the result is False
- string_enum and int_enum
  - one connection for "any" returned value
  - or N connections, one for each value, where N equals the size of the enumeration

There can be multiple "incoming" connections to any action, regardless of its return value type.
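
The branching rules above can be sketched as a lookup of the next action based on the returned value. The `Connection` class and the `next_action` function are hypothetical, and `None` is used here as a stand-in for the "any" condition.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass(frozen=True)
class Connection:
    start: str                      # action where the transition starts
    end: str                        # action where the transition ends
    condition: Optional[Any] = None  # None stands for "any" returned value


def next_action(connections: List[Connection], current: str, returned_value: Any) -> Optional[str]:
    """Pick the outgoing connection matching the returned value, else the 'any' one."""
    outgoing = [c for c in connections if c.start == current]
    for c in outgoing:
        # Compare types as well, so that True and 1 are not confused.
        if c.condition is not None and type(c.condition) is type(returned_value) \
                and c.condition == returned_value:
            return c.end
    for c in outgoing:
        if c.condition is None:
            return c.end
    return None


connections = [
    Connection("START", "check_part"),
    Connection("check_part", "pick", condition=True),
    Connection("check_part", "report_missing", condition=False),
    Connection("pick", "END"),
    Connection("report_missing", "END"),  # several connections may end in the same action
]

print(next_action(connections, "check_part", False))
```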

A connection is visualized as a blue line between two actions. The direction of the program flow is indicated by the orientation of the arrows representing the actions.

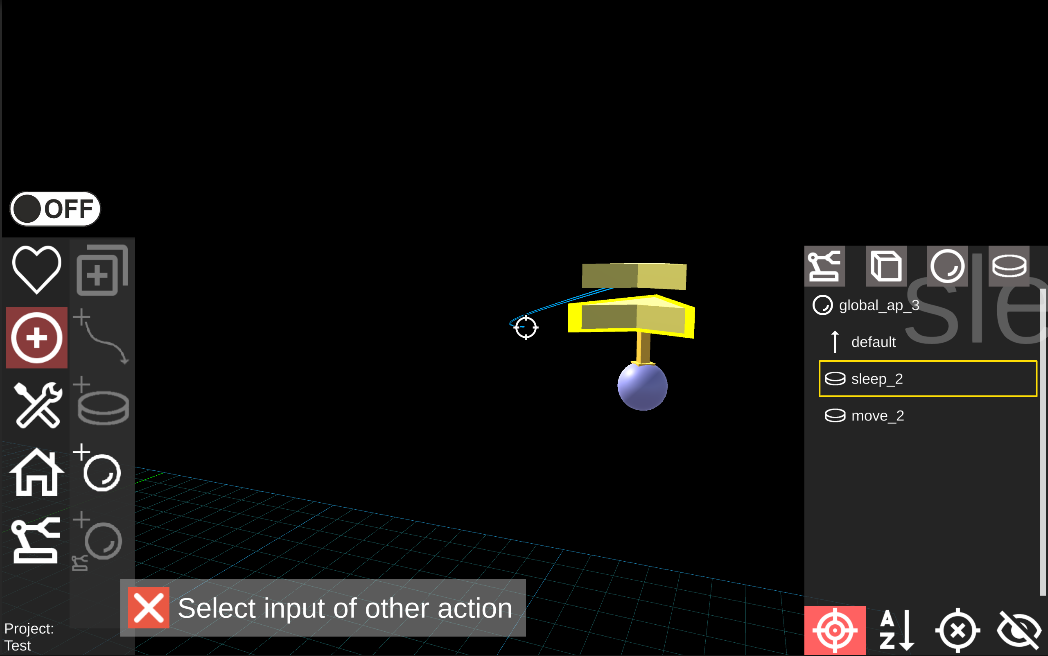

A connection is added to the scene as follows:

- Select the first action, i.e. the action where the new transition will start

- Press the Add connection button in the add category of the left menu

- If the selected action has a supported return value type (see above), a dialog appears, allowing the user to select the desired output value (i.e. whether the newly created connection should be followed only when a specific value is returned from the action)

- Select the second action, i.e. the action where the new transition will end

Each connection can be edited or removed as follows:

- Select the action where the connection you want to edit or remove ends

- Press the Add connection button in the add category of the left menu

- The connection will "stick" to the sight

- Edit: select a new action where the connection will end

- Remove: cancel the selection process by pressing the "X" button in the lower part of the screen

The program itself is composed of individual actions, executed in the order given by the connections. The program can be executed directly from the Project editor, or packed into a self-contained package and executed later.

A package is created using the create package button in the home category of the left menu. In the dialog, the user can define the name of the package. Once the package is created, it appears in the packages list on the main screen.

During the development phase, the program can be run within the Project editor, using the execute button when the START action is selected. Internally, a temporary package is created and executed, and after the execution (finished either by a project exception or by pressing the stop execution button) the Project editor is opened again.

From within the Project editor, the program can also be run in debug mode, meaning that the execution is automatically paused whenever an action attached to an action point marked as a breakpoint is about to be executed. While the execution is paused, the user can step over the next actions or resume the execution. Moreover, when the debug run is selected, a dialog appears that allows the user to pause the execution on the first action.

The debug run is started using the debug button when the START action is selected.

Pressing the debug button while an action point is selected marks that action point as a breakpoint. In the scene, a breakpoint is visualized by the red color of the action point.
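
The pausing rule of the debug run can be sketched as follows. The function and its arguments are hypothetical, and the sketch only decides where the execution would pause; it does not model stepping or resuming.

```python
from typing import Dict, Iterator, List, Set, Tuple


def debug_run(
    order: List[str],
    action_point_of: Dict[str, str],
    breakpoints: Set[str],
    pause_on_first: bool = False,
) -> Iterator[Tuple[str, bool]]:
    """Yield (action, paused) for each action in its execution order.

    An action pauses the run when its action point is marked as a breakpoint,
    or when it is the first action and the user asked to pause on it.
    """
    for index, action in enumerate(order):
        paused = action_point_of[action] in breakpoints or (pause_on_first and index == 0)
        yield action, paused


order = ["pick", "place", "release"]
action_point_of = {"pick": "ap_box", "place": "ap_table", "release": "ap_table"}

for action, paused in debug_run(order, action_point_of, breakpoints={"ap_table"}):
    print(action, "paused" if paused else "running")
```

Since breakpoints are attached to action points rather than to actions, every action belonging to a marked action point pauses the run in this sketch.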